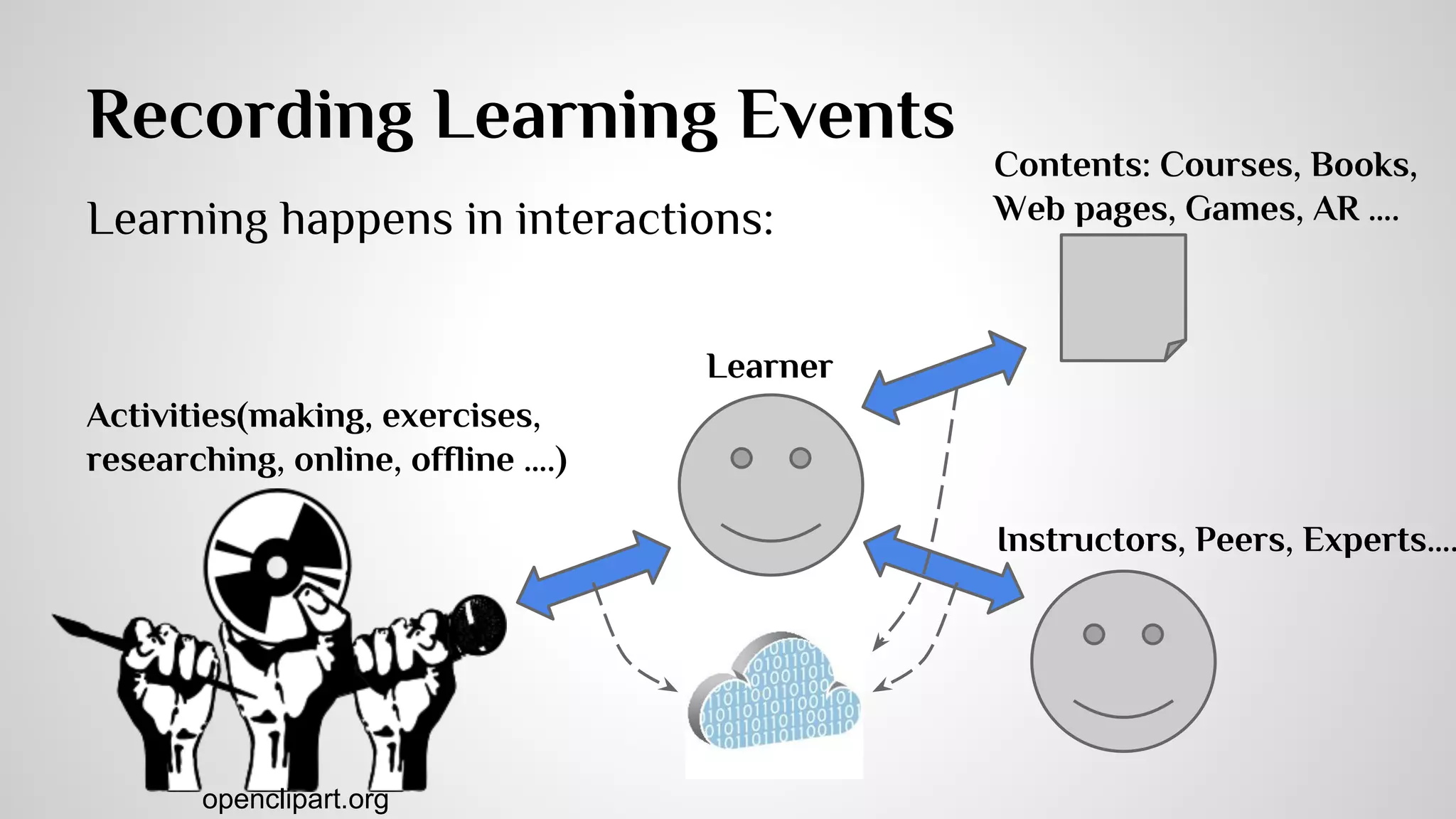

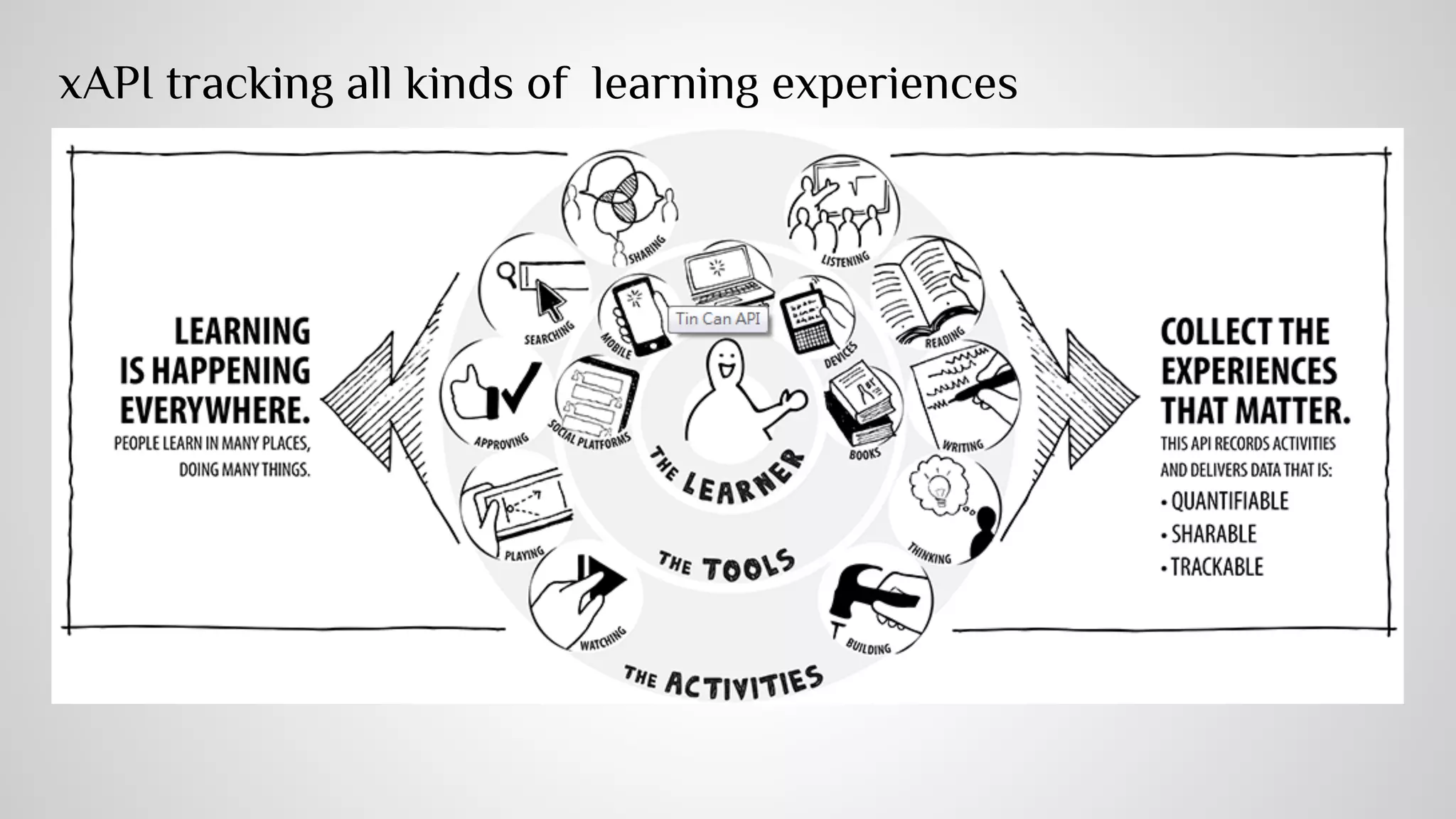

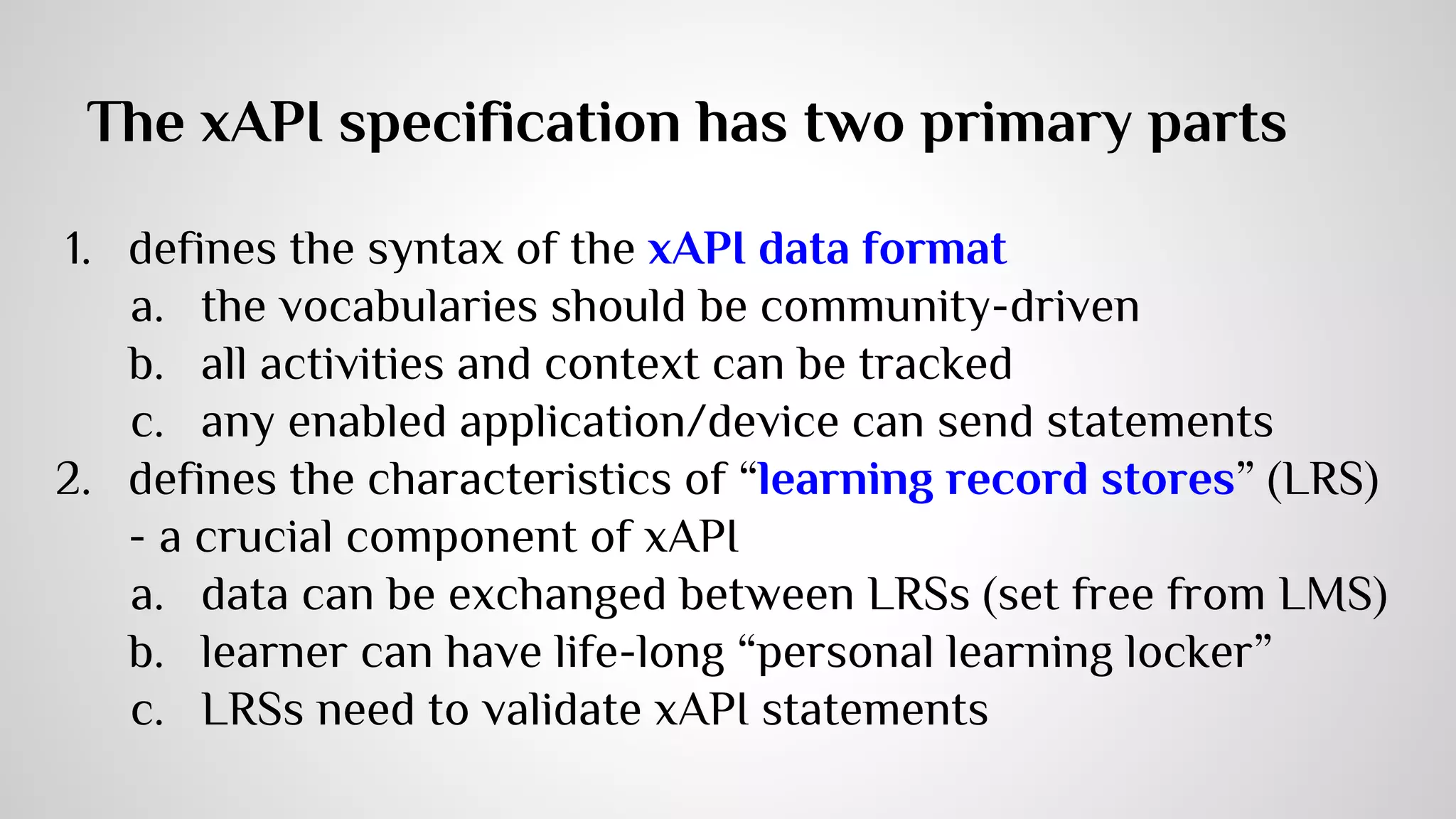

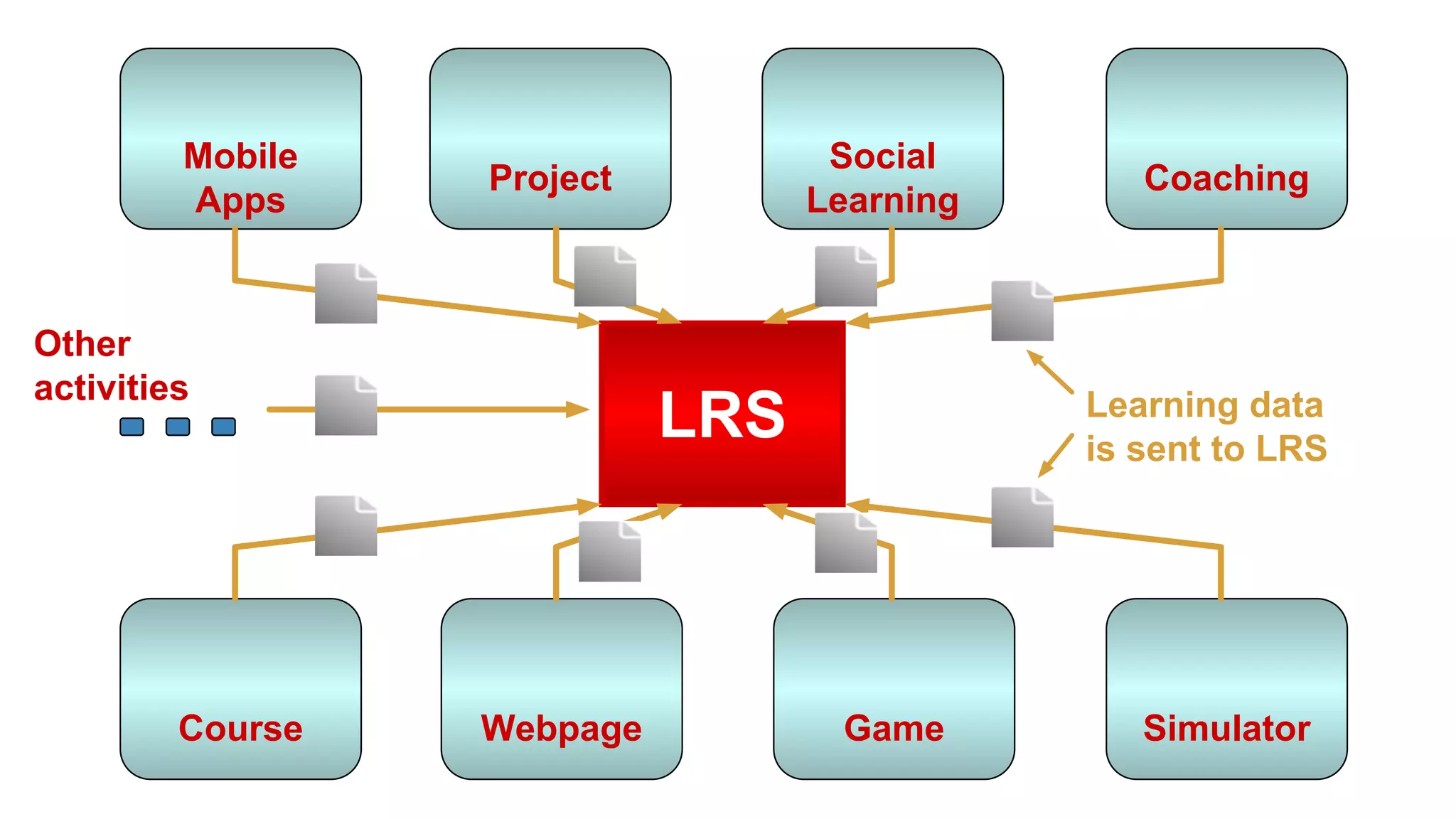

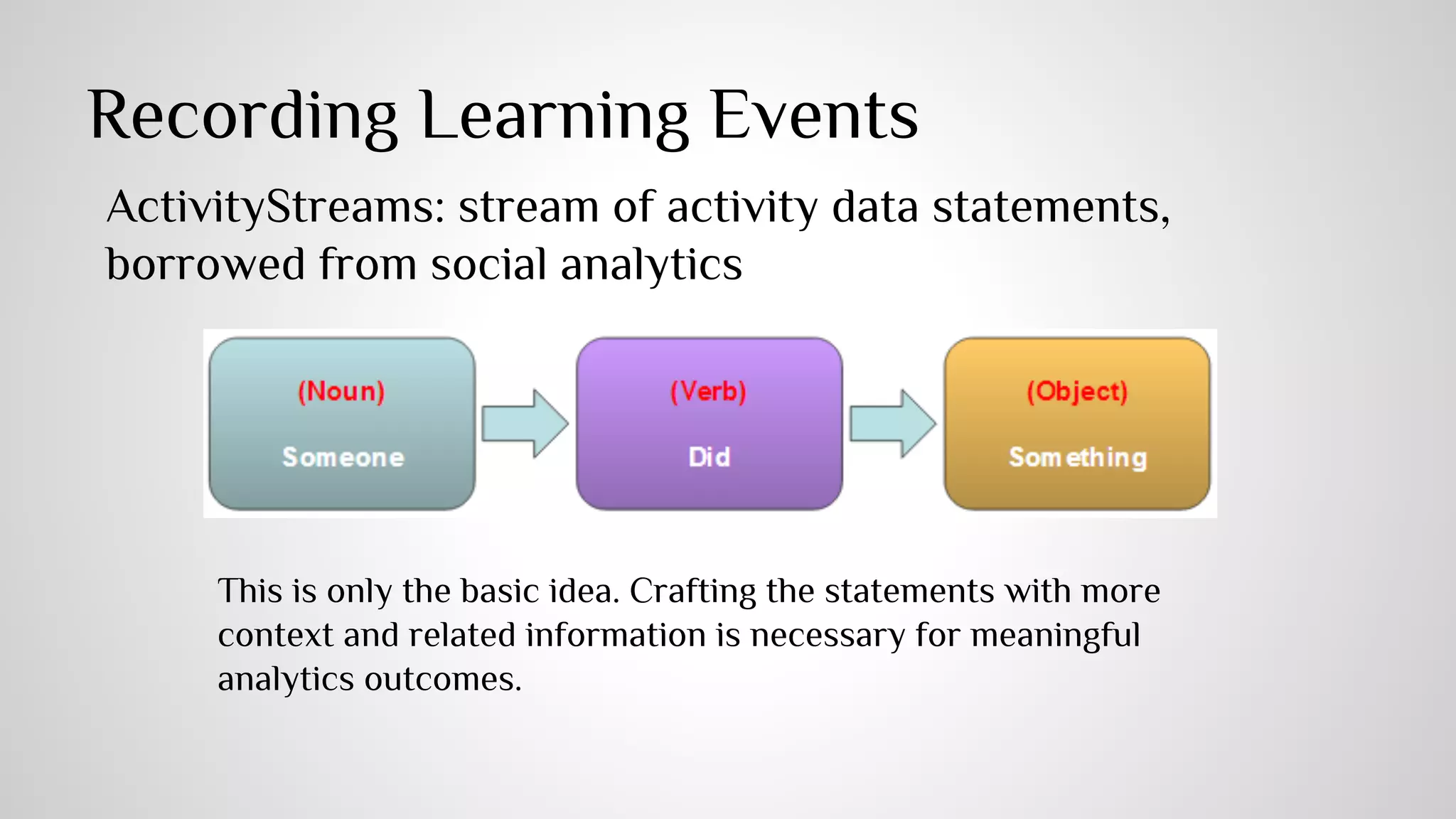

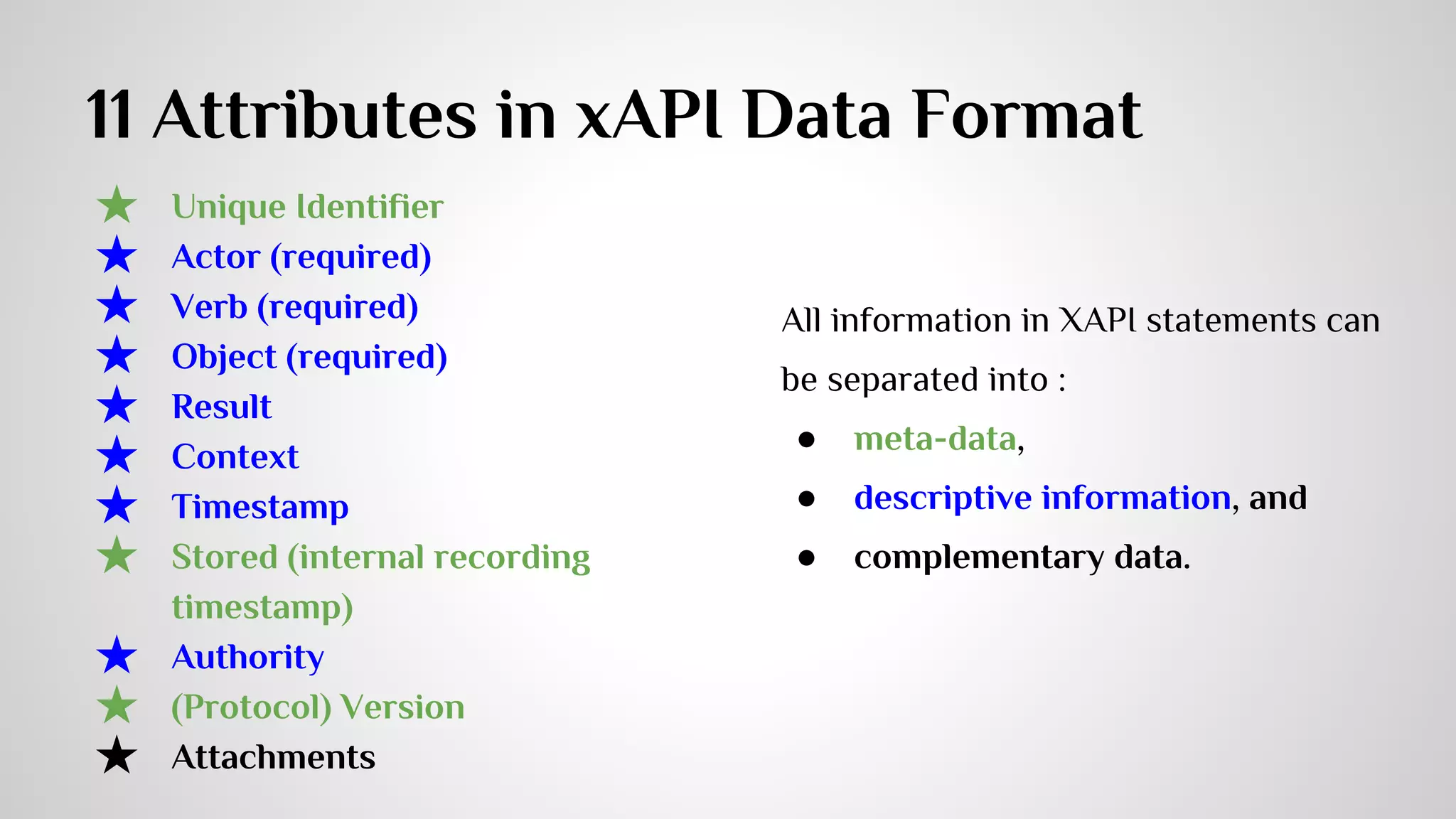

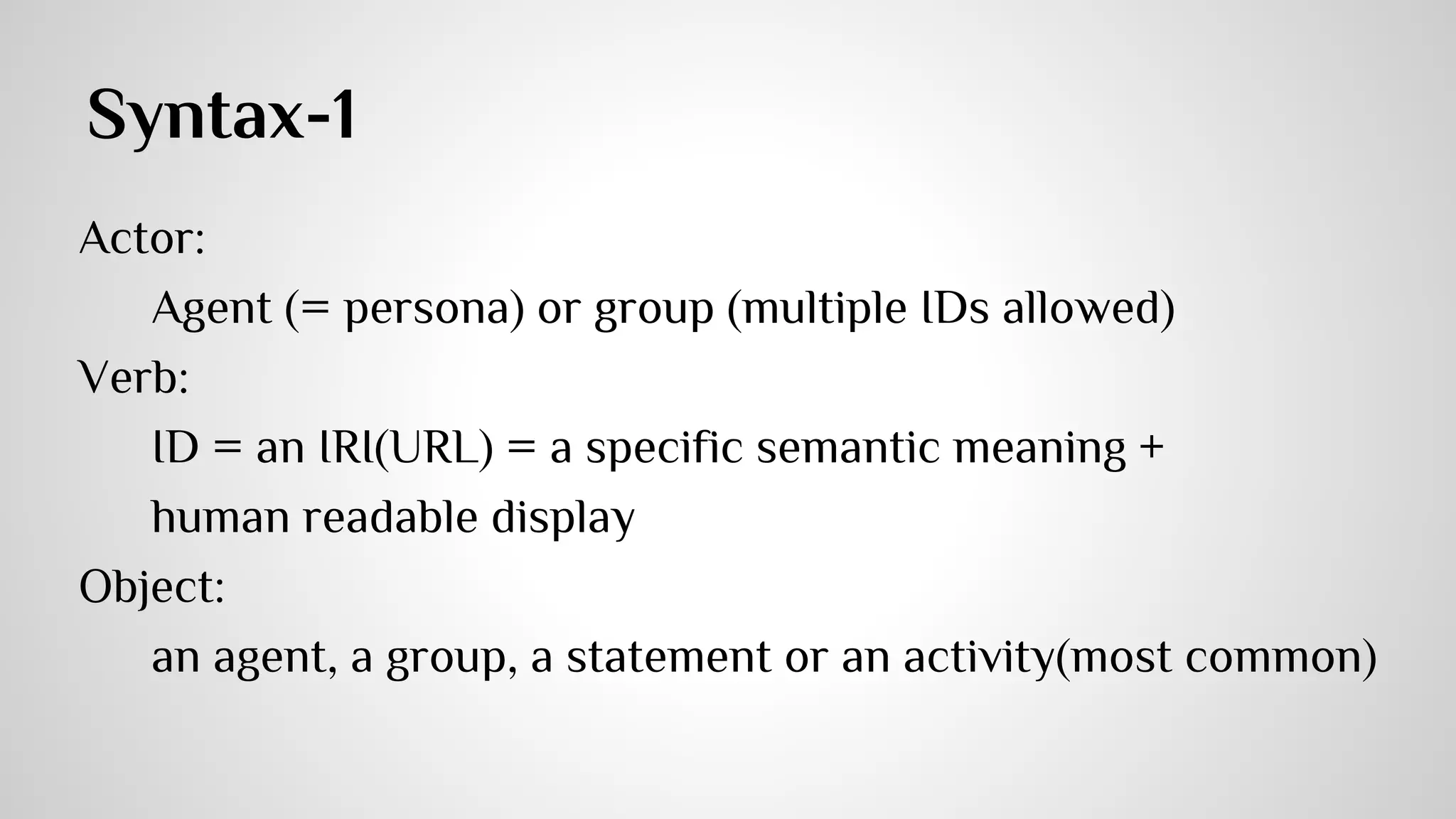

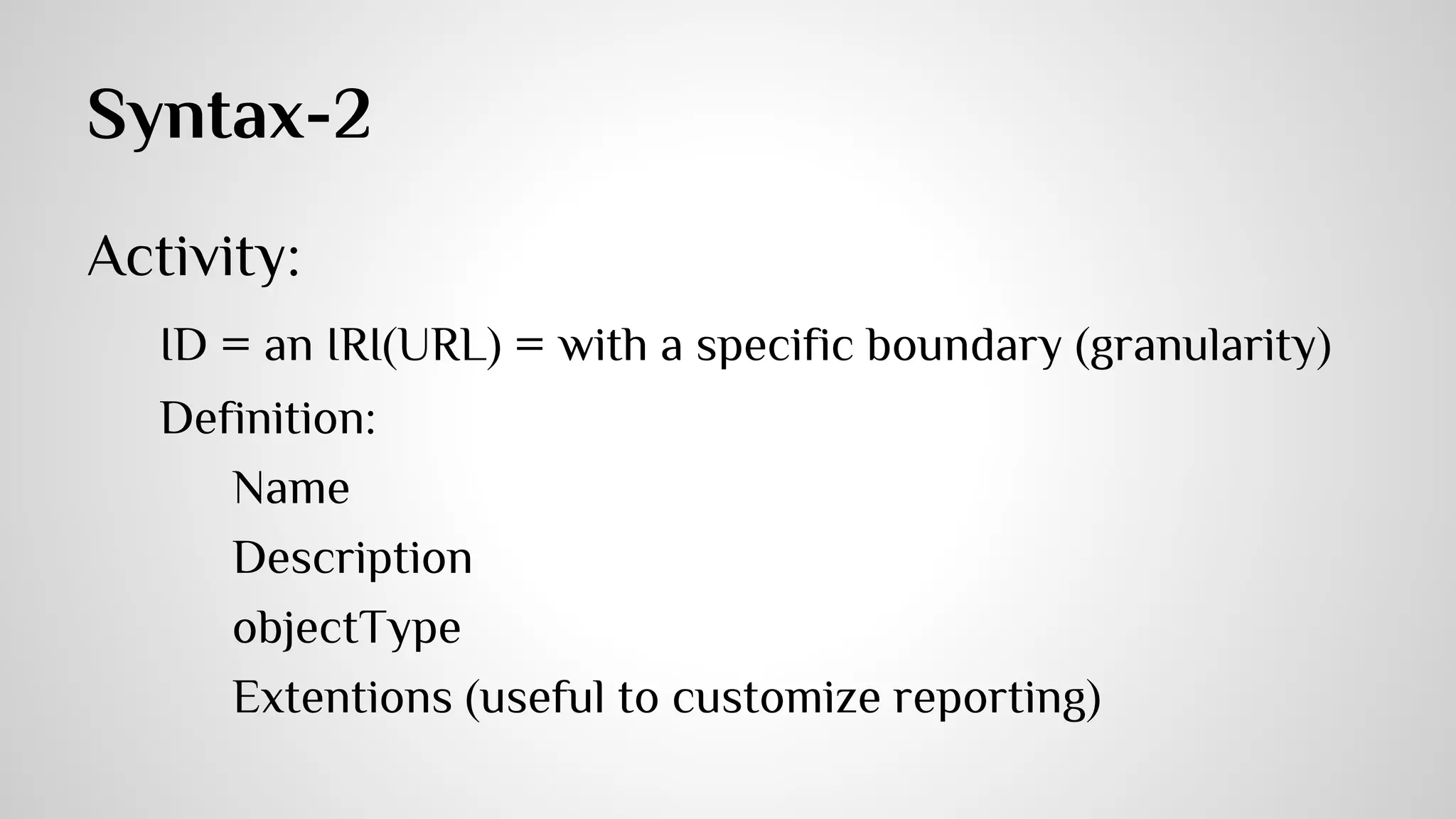

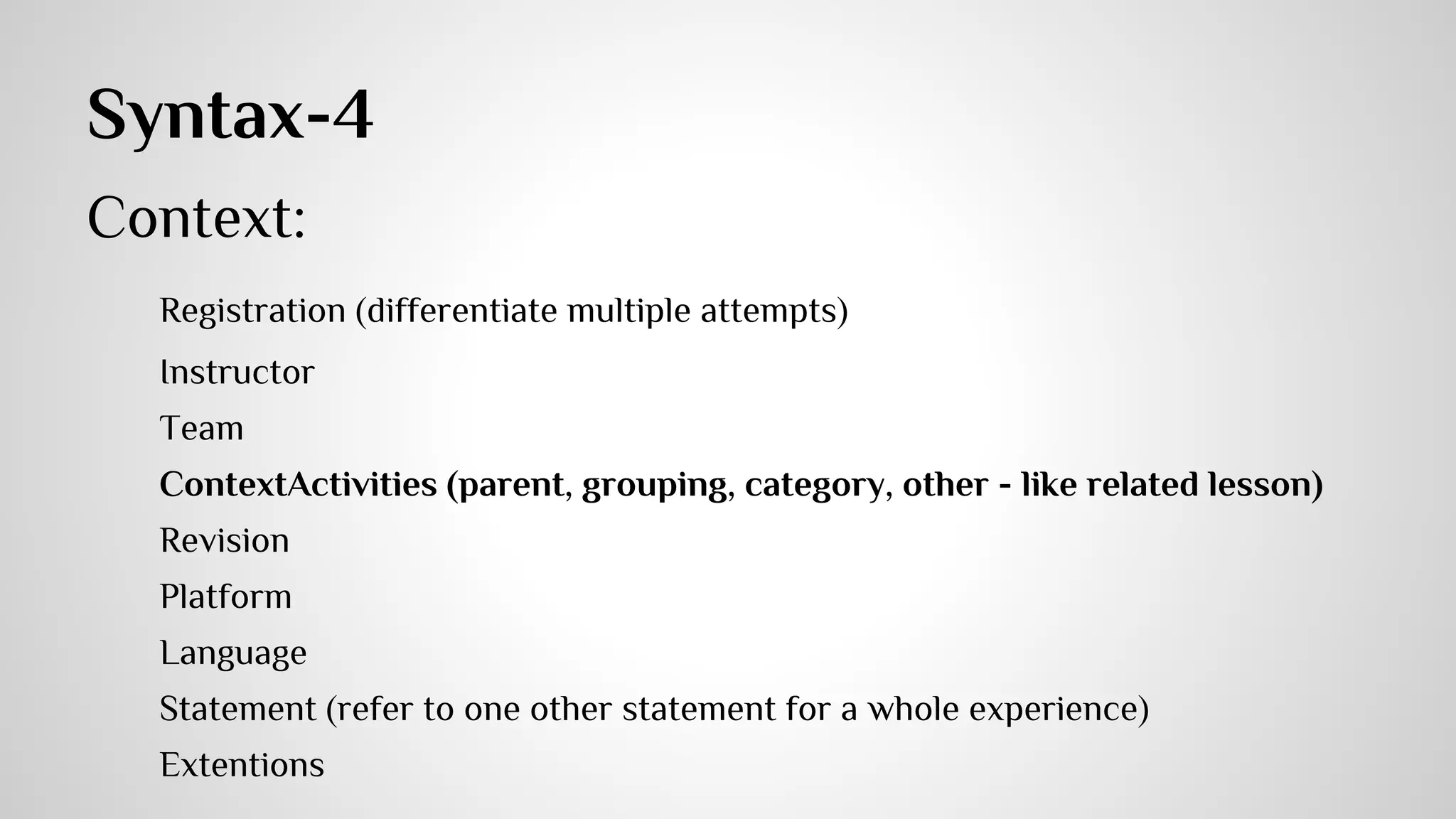

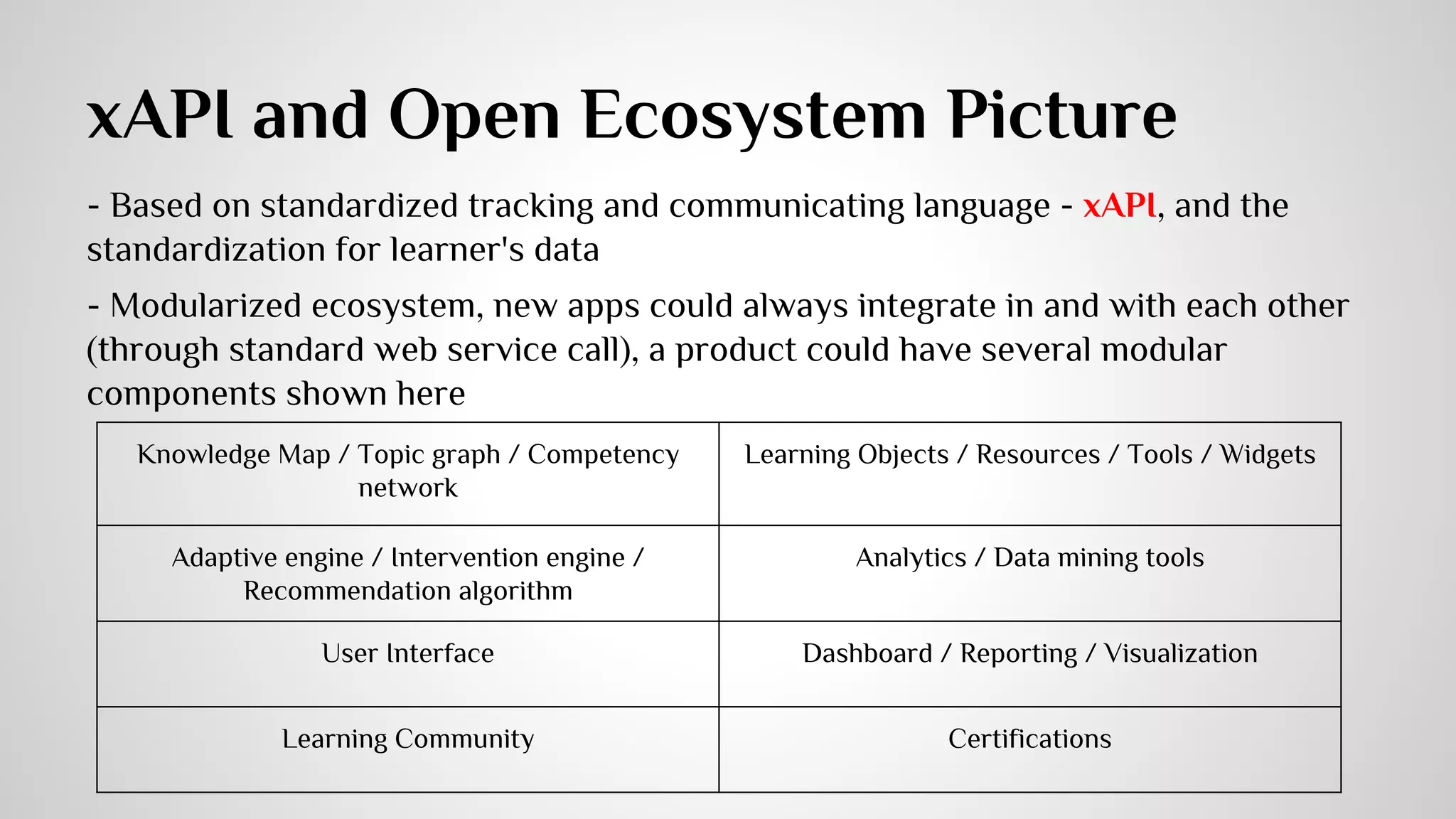

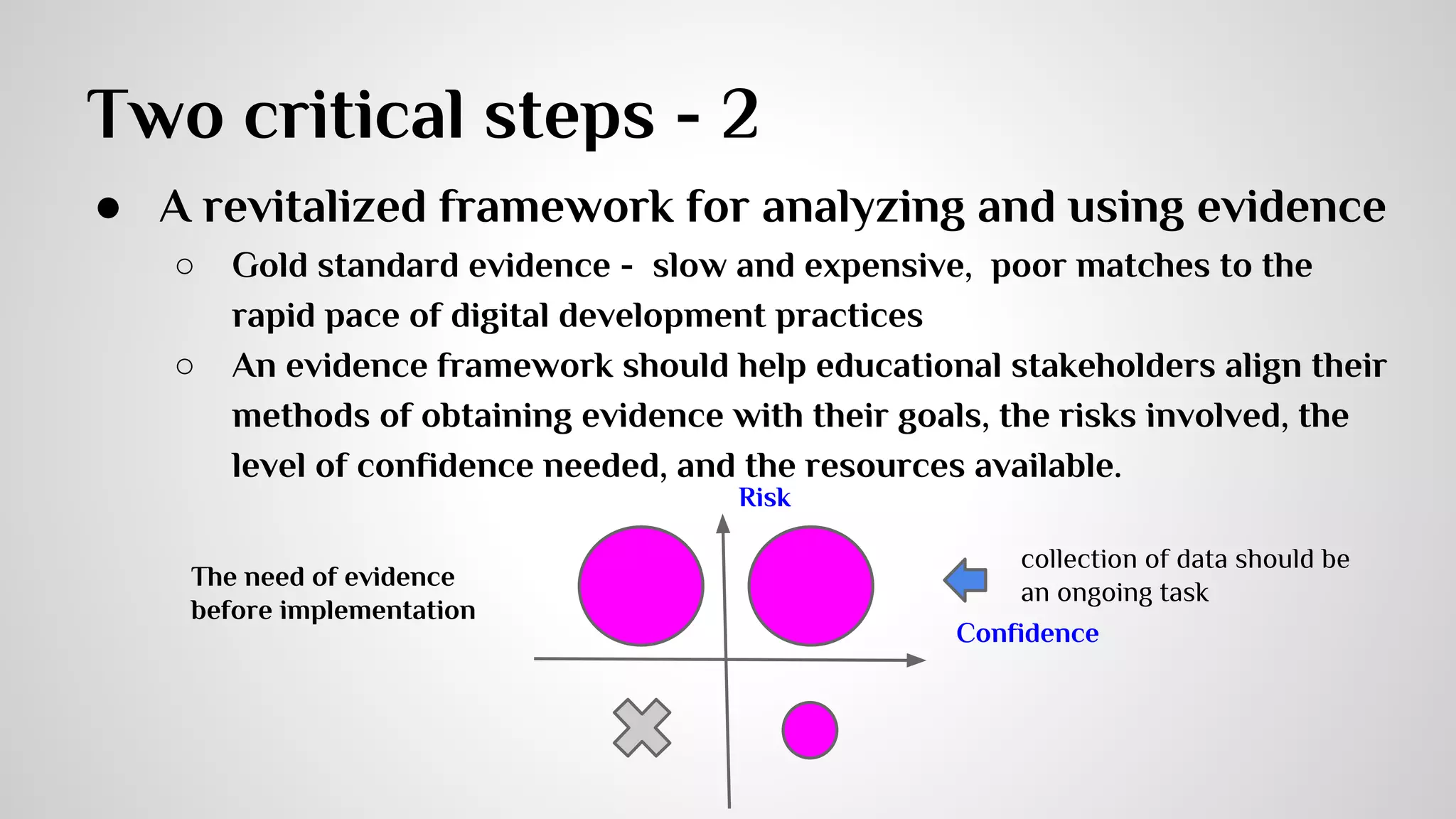

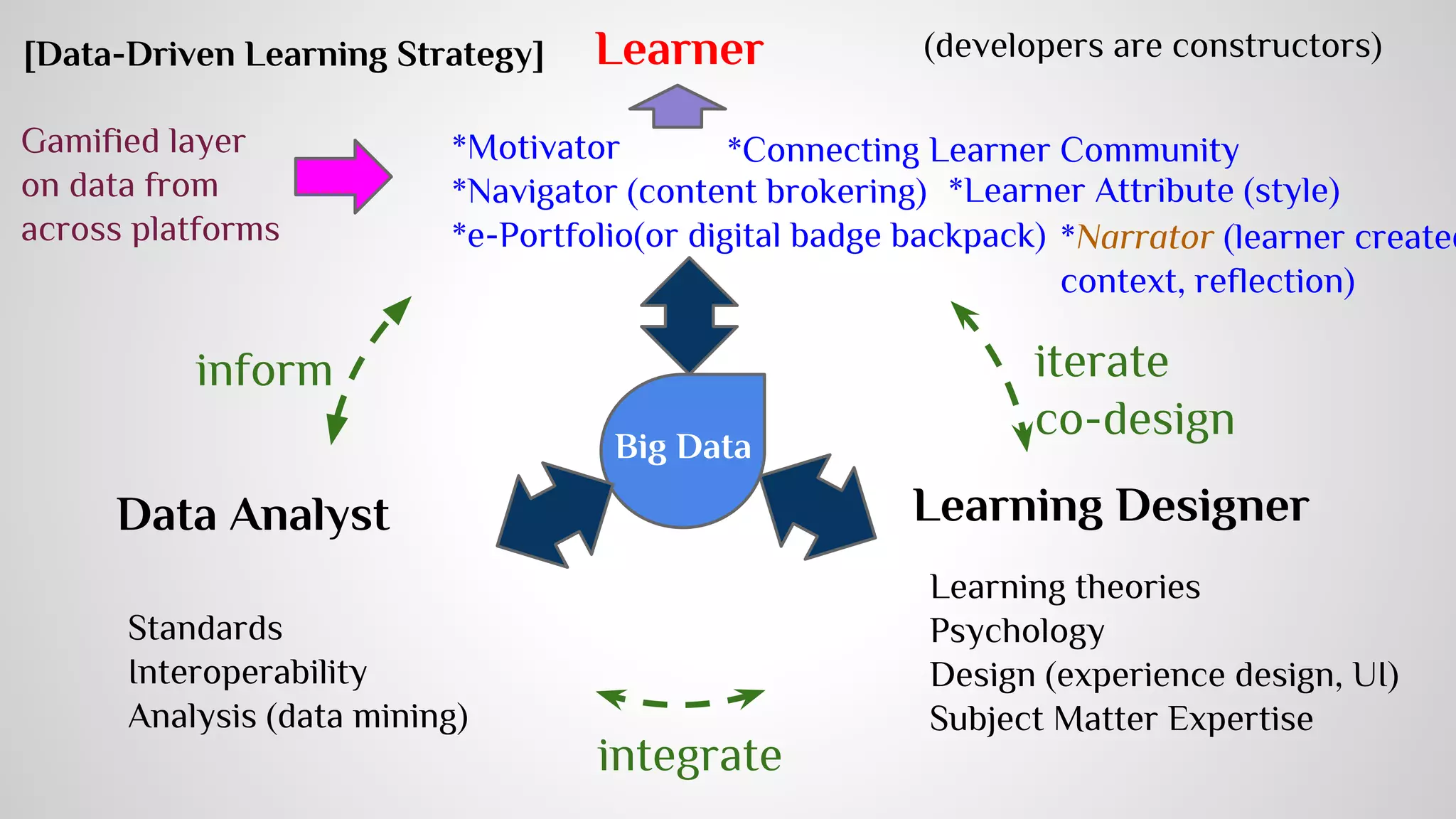

The document outlines a data-driven learning strategy that emphasizes the role of big data in improving educational practice and personalizing learning experiences. It highlights the need for better evidence frameworks to analyze the diverse, fine-grained data generated by digital learning systems, and for collaboration between researchers and educational institutions. It also discusses how technologies such as the Experience API (xAPI) track learning activities, and why community-driven standards matter for data interoperability across educational settings.
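xAPI records each learning activity as a statement built around an actor, a verb, and an object. The following is a minimal sketch, assuming the ADL `completed` verb IRI. The learner, the `example.com` activity IRI, and the timestamp are hypothetical. The code only builds the statement as JSON and does not send it to a Learning Record Store.

```python
import json
from datetime import datetime, timezone

# Verb IRI from the ADL verb vocabulary.
COMPLETED = "http://adlnet.gov/expapi/verbs/completed"


def make_statement(name, email, verb_iri, verb_label,
                   activity_iri, activity_name, timestamp=None):
    """Build a minimal xAPI statement (actor / verb / object) as a dict."""
    if timestamp is None:
        # Default to the current UTC time in ISO 8601 form.
        timestamp = datetime.now(timezone.utc).isoformat()
    return {
        "actor": {"objectType": "Agent", "name": name, "mbox": f"mailto:{email}"},
        "verb": {"id": verb_iri, "display": {"en-US": verb_label}},
        "object": {
            "objectType": "Activity",
            "id": activity_iri,
            "definition": {"name": {"en-US": activity_name}},
        },
        "timestamp": timestamp,
    }


# Hypothetical learner and activity, for illustration only.
stmt = make_statement(
    "Ada Learner", "ada@example.com", COMPLETED, "completed",
    "http://example.com/activities/intro-statistics", "Intro to Statistics",
    timestamp="2014-08-14T14:40:42Z",
)
print(json.dumps(stmt, indent=2))
```

Because every tool emits the same actor-verb-object shape, statements from different systems can be pooled and analyzed together. That shared shape is the interoperability point the summary makes about community-driven standards.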

![Learner

*Motivator

*Navigator (content brokering)

*e-Portfolio(or digital badge backpack)

inform iterate

co-design

Data Analyst Learning Designer

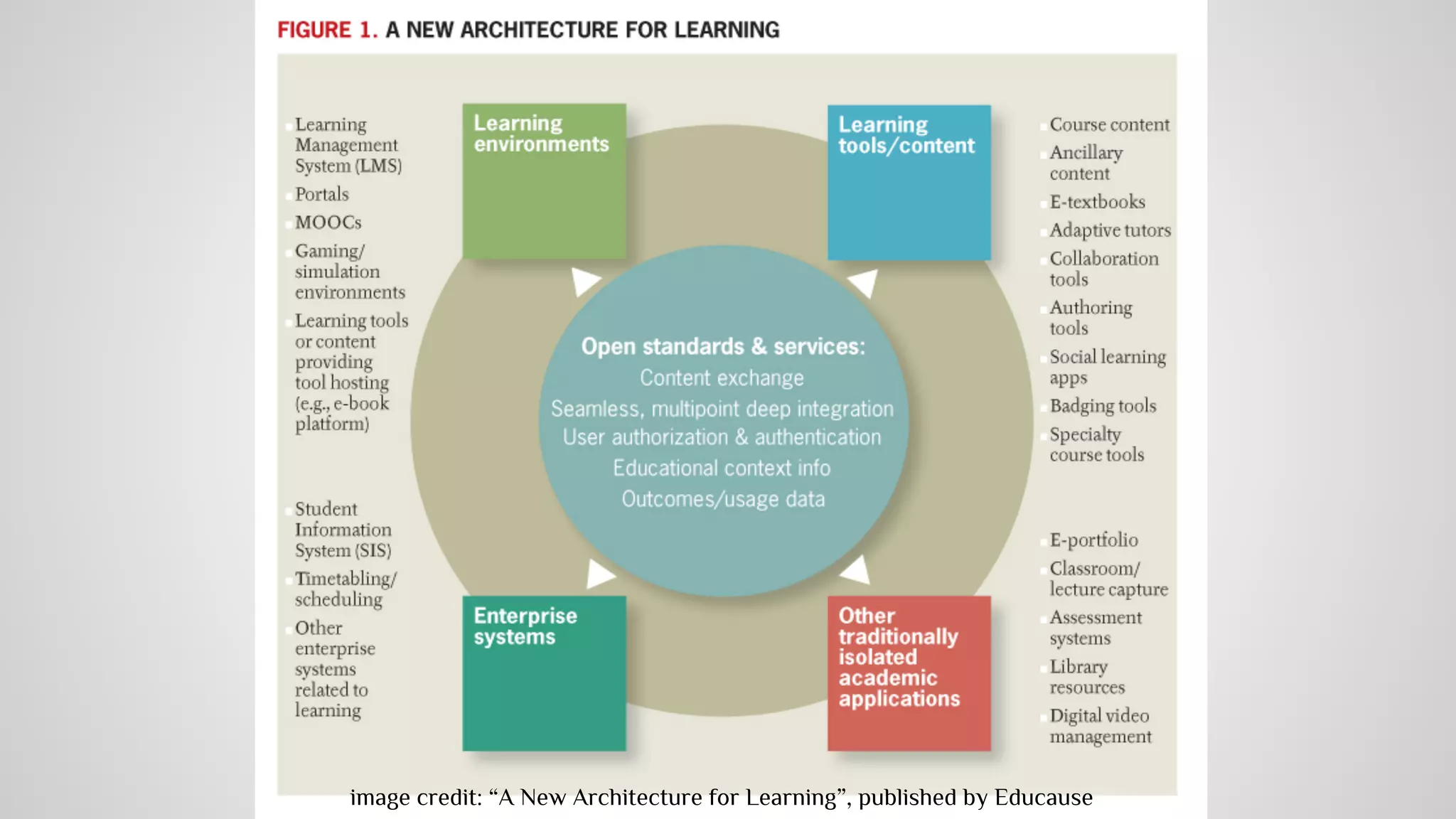

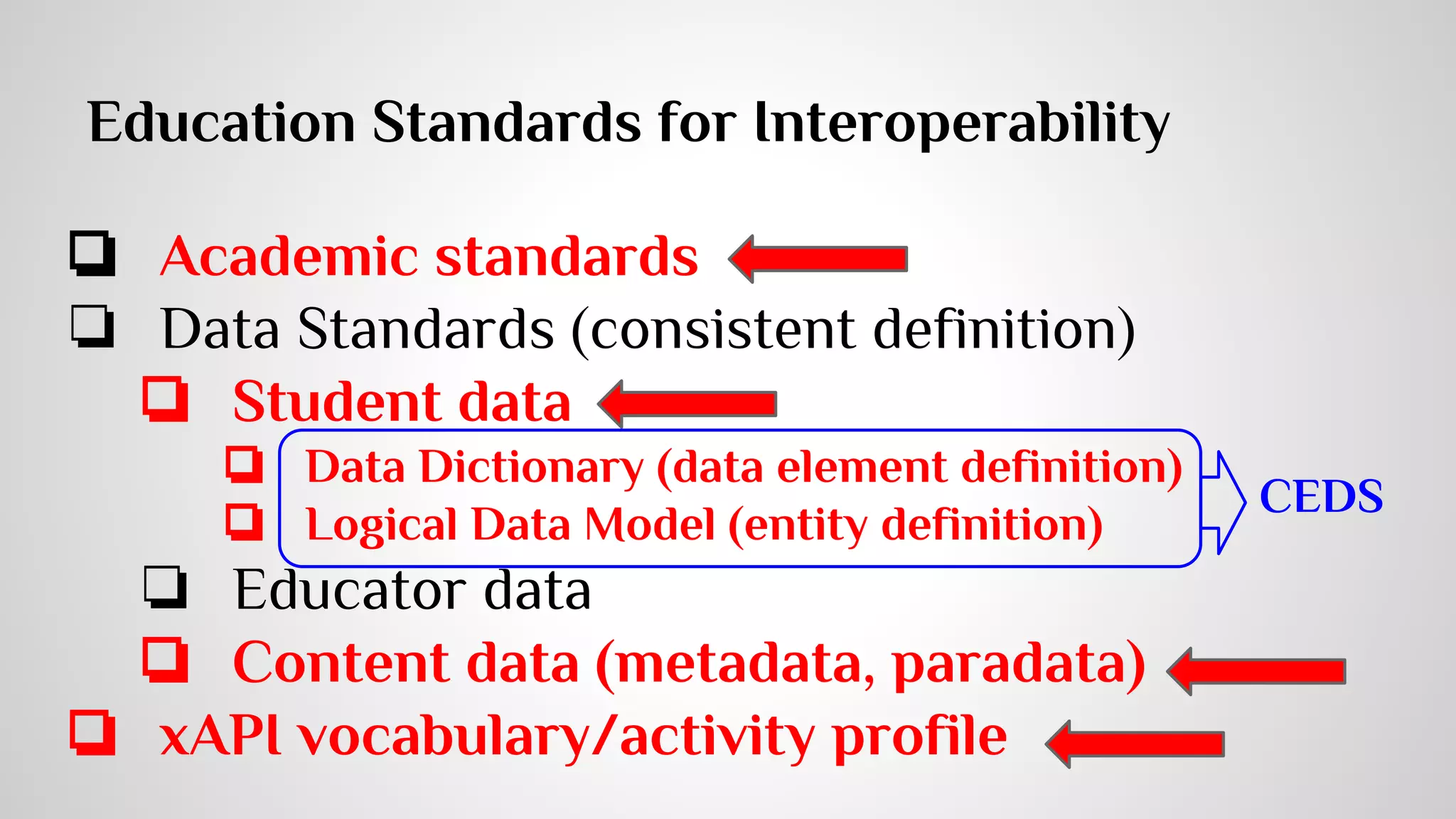

Standards

Interoperability

Analysis (data mining)

Learning theories

Psychology

Design (experience design, UI)

Subject Matter Expertise

integrate

(developers are constructors)

*Connecting Learner Community

*Learner Attribute (style)

*Narrator (learner created

context, reflection)

Gamified layer

on data from

across platforms

Big Data

[Data-Driven Learning Strategy]](https://image.slidesharecdn.com/data-drivenlearningstrategyen1-140814144042-phpapp02/75/Data-Driven-Learning-Strategy-15-2048.jpg)
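The slide's "gamified layer on data from across platforms" can be illustrated with xAPI-shaped statements. Each statement is tagged with the platform that produced it, using the xAPI `context.platform` field. The sketch below rests on assumptions:

- The badge rule (`min_completions` completed activities spread over at least `min_platforms` distinct platforms) is hypothetical.
- The learners and activity IRIs are made up.
- The platform names are made up.

The statements use the ADL `completed` and `experienced` verb IRIs.

```python
from collections import defaultdict

COMPLETED = "http://adlnet.gov/expapi/verbs/completed"
EXPERIENCED = "http://adlnet.gov/expapi/verbs/experienced"


def award_badges(statements, min_completions=3, min_platforms=2):
    """Hypothetical gamification rule: return the learners (by mbox) with at
    least `min_completions` completions spread over `min_platforms` platforms."""
    completions = defaultdict(int)
    platforms = defaultdict(set)
    for s in statements:
        if s["verb"]["id"] != COMPLETED:
            continue  # only completions count toward this badge
        learner = s["actor"]["mbox"]
        completions[learner] += 1
        platforms[learner].add(s.get("context", {}).get("platform", "unknown"))
    return {
        learner
        for learner, n in completions.items()
        if n >= min_completions and len(platforms[learner]) >= min_platforms
    }


def stmt(mbox, verb, activity, platform):
    """Build a stripped-down xAPI-style statement tagged with its source platform."""
    return {
        "actor": {"objectType": "Agent", "mbox": mbox},
        "verb": {"id": verb},
        "object": {"objectType": "Activity", "id": activity},
        "context": {"platform": platform},
    }


# Hypothetical feed pooled from several learning platforms.
feed = [
    stmt("mailto:ada@example.com", COMPLETED, "http://example.com/act/1", "LMS"),
    stmt("mailto:ada@example.com", COMPLETED, "http://example.com/act/2", "Mobile app"),
    stmt("mailto:ada@example.com", COMPLETED, "http://example.com/act/3", "LMS"),
    stmt("mailto:bo@example.com", COMPLETED, "http://example.com/act/1", "LMS"),
    stmt("mailto:bo@example.com", COMPLETED, "http://example.com/act/2", "LMS"),
    stmt("mailto:bo@example.com", COMPLETED, "http://example.com/act/3", "LMS"),
    stmt("mailto:bo@example.com", EXPERIENCED, "http://example.com/act/4", "Simulator"),
]
print(sorted(award_badges(feed)))  # ['mailto:ada@example.com']
```

Both learners complete three activities. Only Ada's completions span two platforms, so only she earns the badge under this rule. The rule can only be evaluated because the data from every platform shares one statement format.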