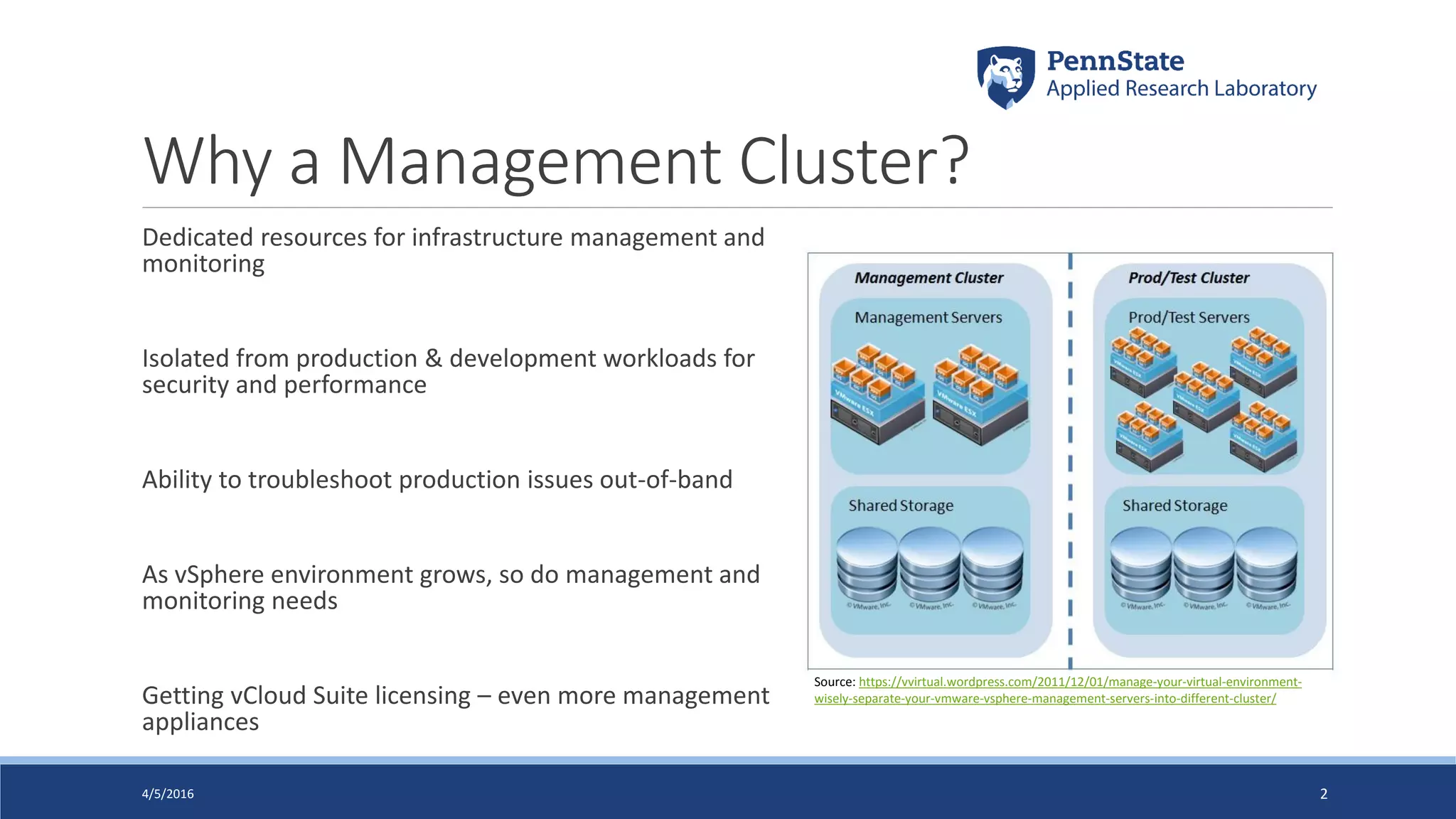

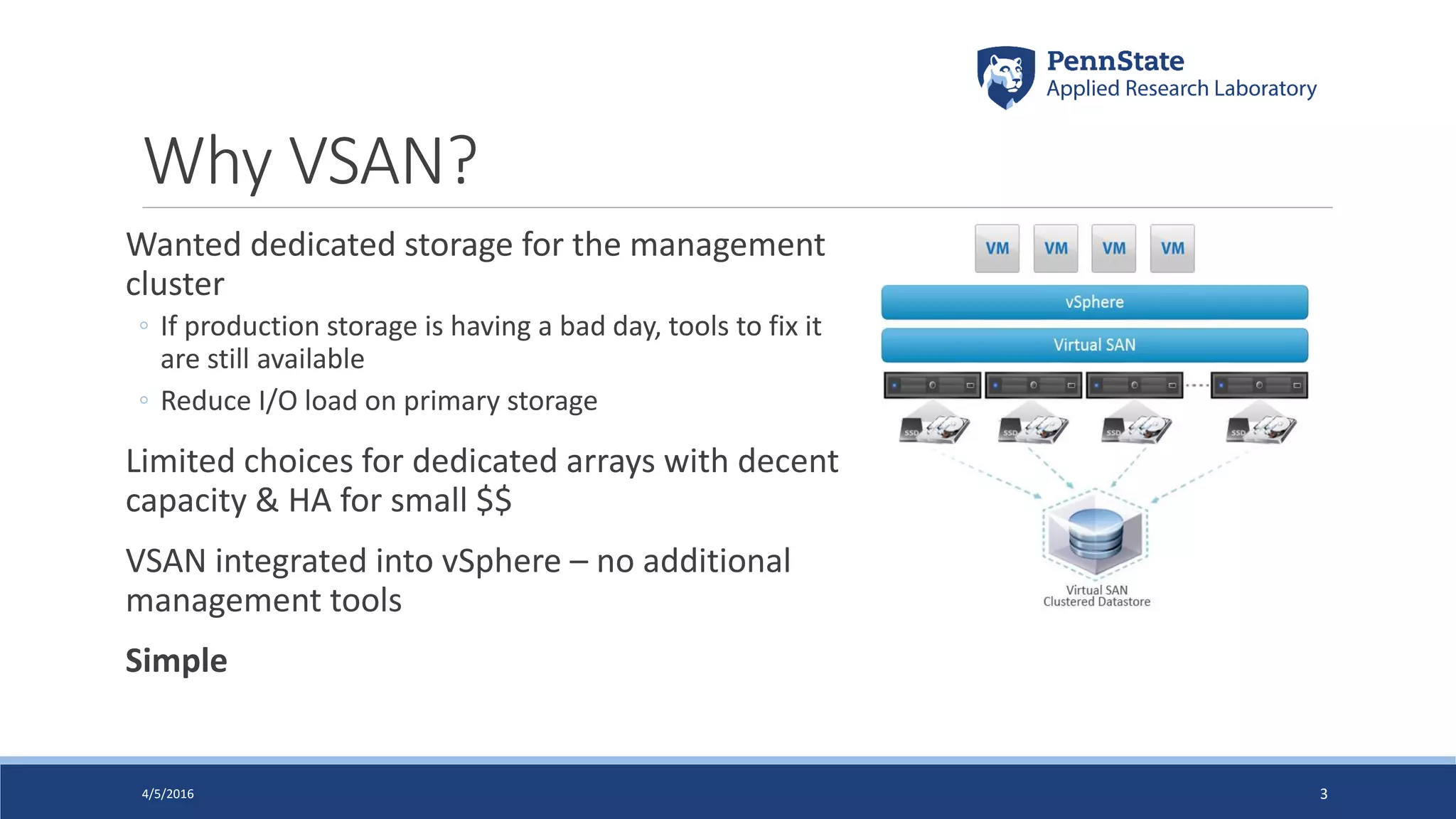

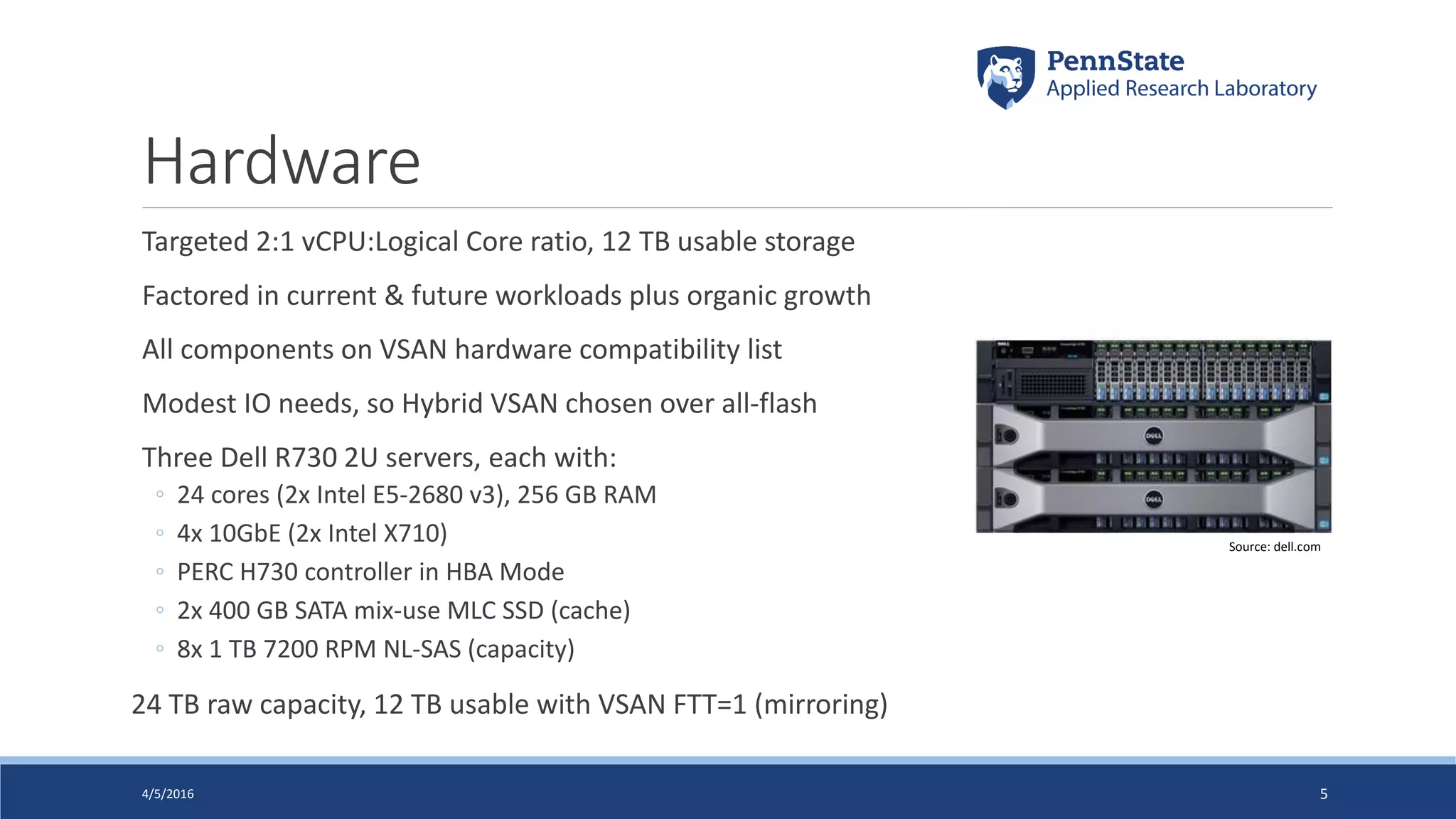

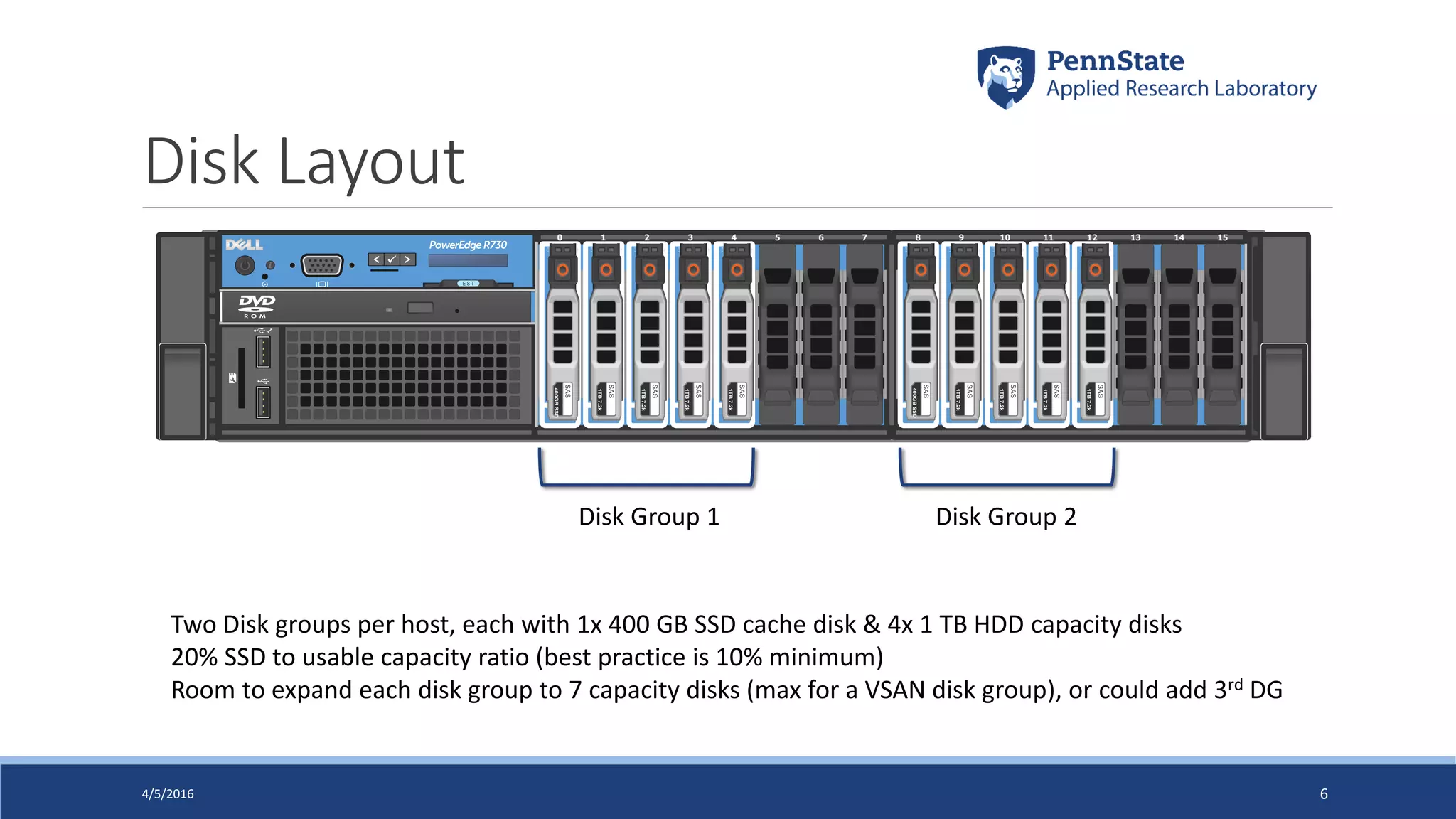

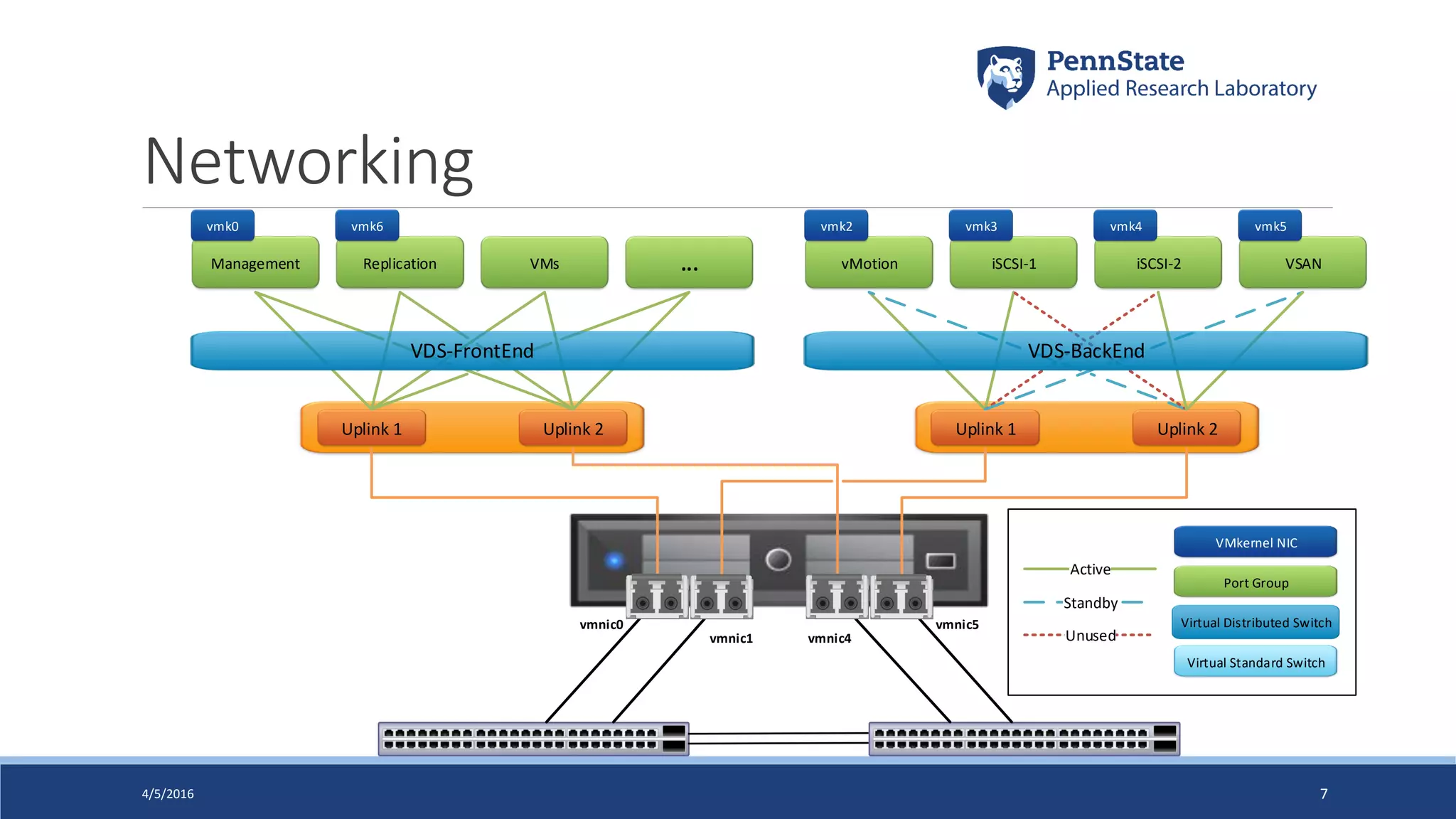

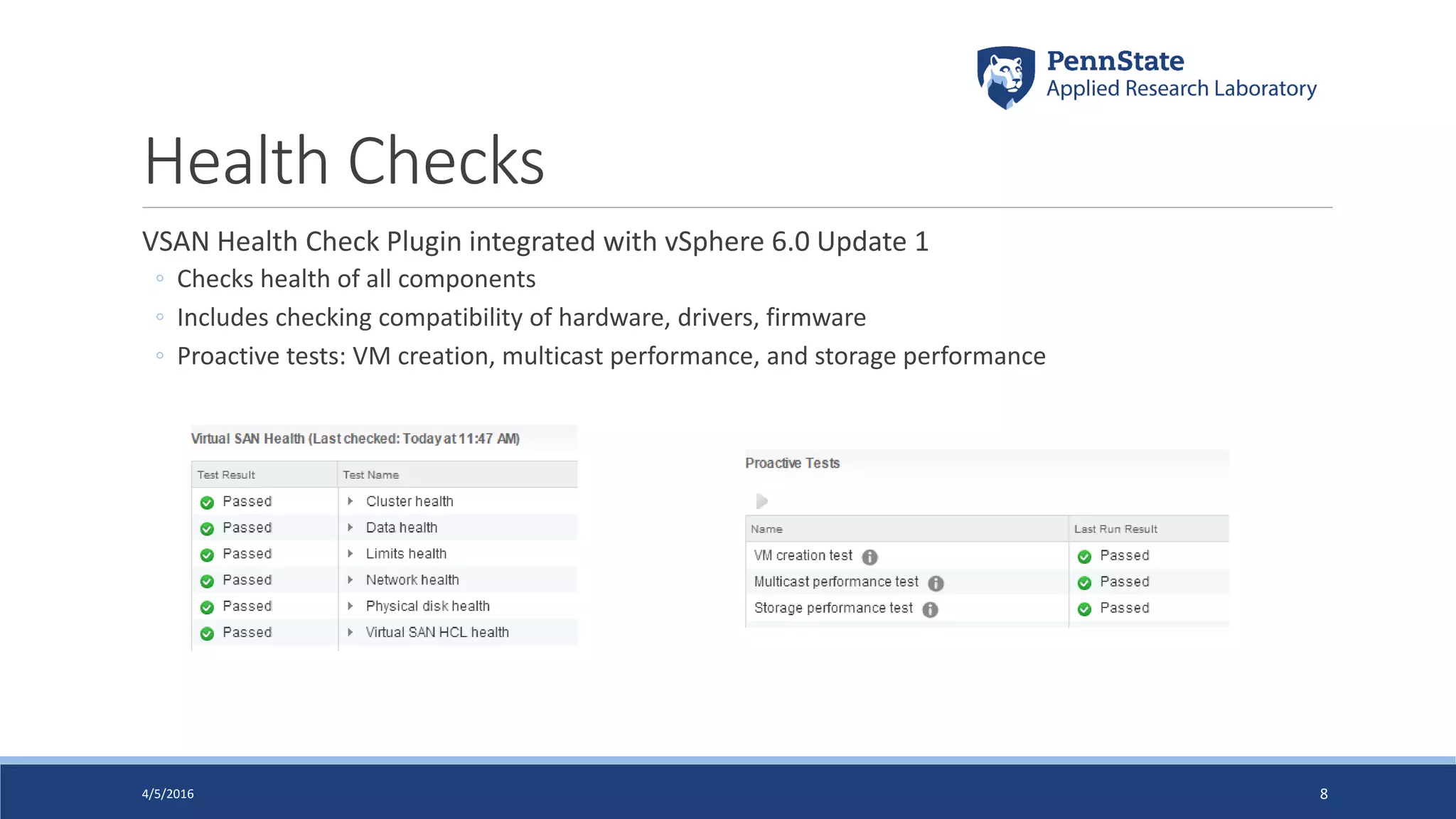

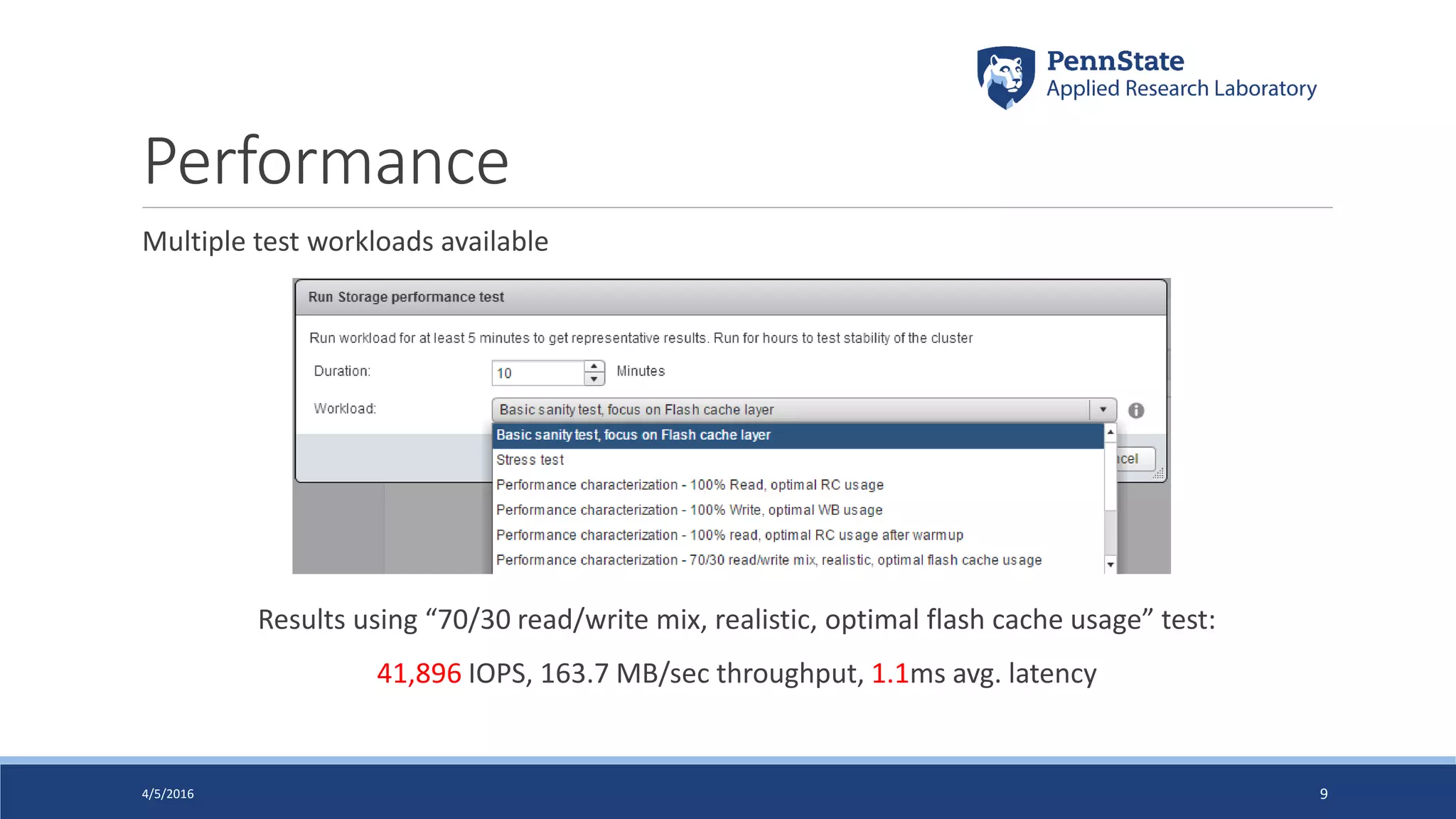

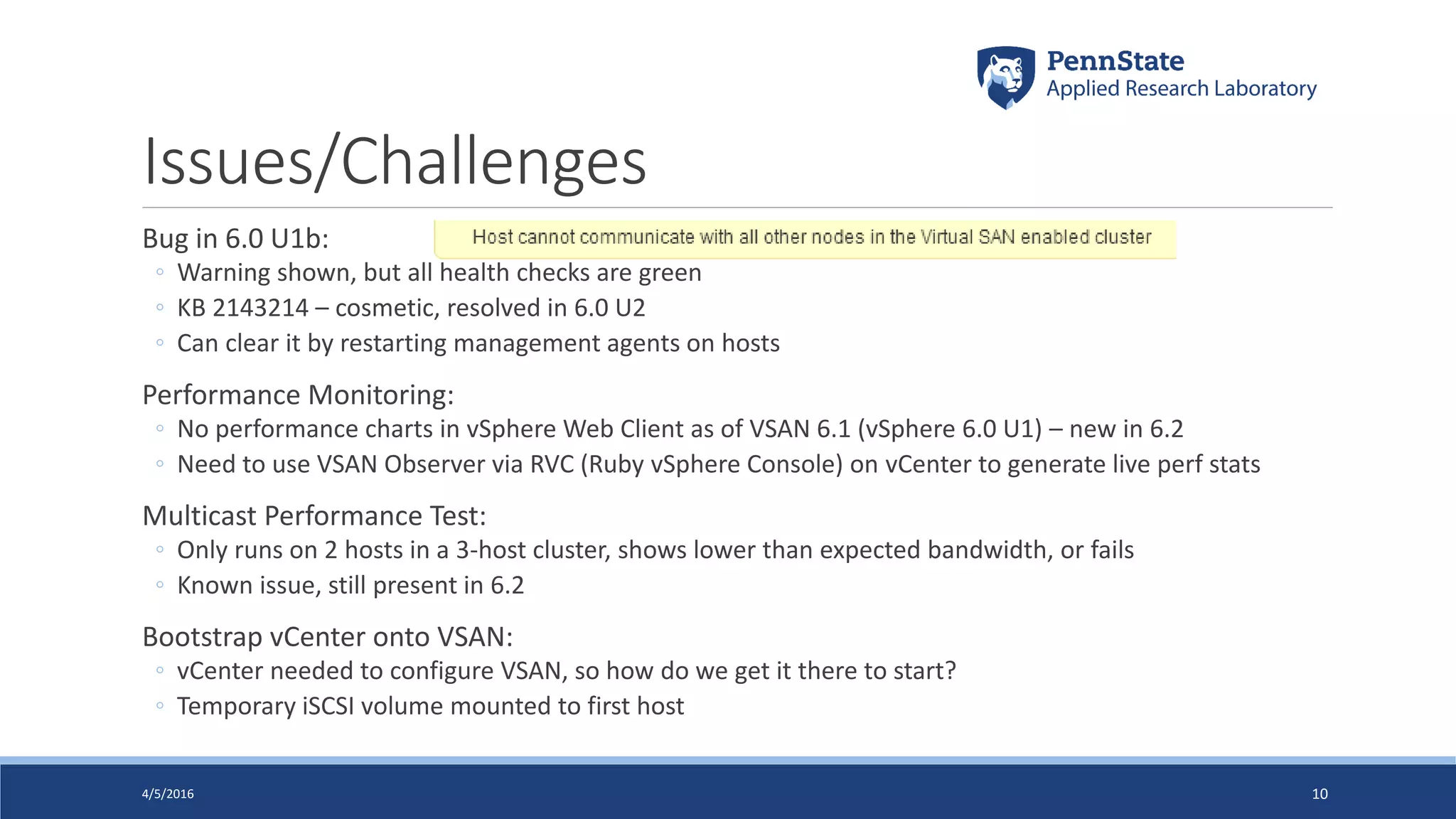

This document describes building a dedicated management cluster on VMware VSAN so that management of a vSphere environment is isolated from production. A management cluster gives infrastructure management and monitoring tools their own resources, separate from production workloads. The author built the cluster from three Dell servers, each with SSDs for caching and HDDs for capacity. VSAN was chosen for storage because it provides high availability and simplifies management without requiring an additional storage array. Various management and support VMs were configured to run on the cluster. Performance testing showed over 40,000 IOPS and 163 MB/s of throughput. Some issues encountered initially were resolved with VMware patches. The document also offers tips on sizing, documenting, and monitoring the management cluster.
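As a rough sanity check on the performance figures, throughput and IOPS are linked by the average I/O size (throughput = IOPS × I/O size). The sketch below works backwards from the two reported numbers. It assumes both come from the same test run and that "MB" means decimal megabytes; the summary states neither, and the function name is only illustrative.

```python
def implied_io_size_kib(throughput_mb_s: float, iops: float) -> float:
    """Average I/O size in KiB implied by a throughput and an IOPS figure.

    Assumes throughput is given in decimal megabytes per second (10**6 bytes).
    """
    bytes_per_second = throughput_mb_s * 1_000_000
    bytes_per_io = bytes_per_second / iops
    return bytes_per_io / 1024


# Figures taken from the summary: 163 MB/s at roughly 40,000 IOPS.
size = implied_io_size_kib(163, 40_000)
print(f"{size:.2f} KiB per I/O")
```

Under those assumptions the result is about 4 KiB per I/O, which would be consistent with a small-block test, though the summary does not describe the test profile.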