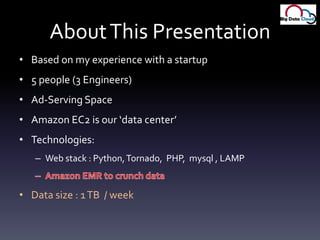

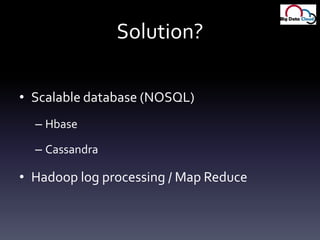

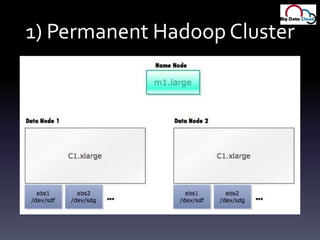

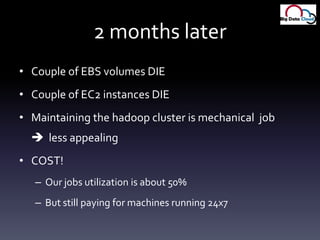

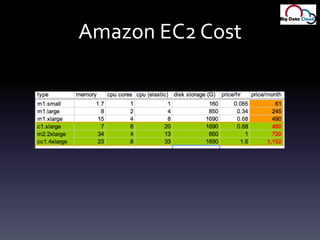

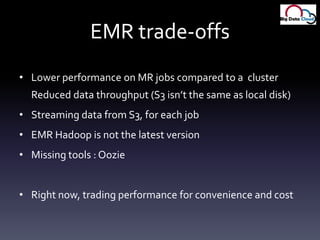

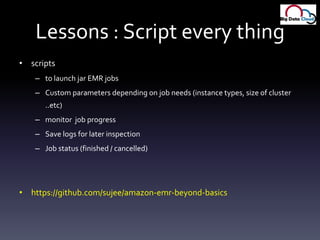

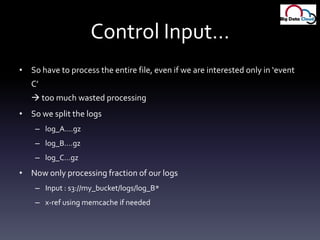

The document discusses the use of Amazon Elastic MapReduce (EMR) for cost-effective big data processing, detailing the author's experiences with setting up and managing Hadoop clusters on AWS. It highlights the growth of both primary and secondary data, the challenges startups face with big data, and the advantages of leveraging EMR for scalable and on-demand processing. Key lessons learned include managing costs, optimizing database usage, and improving job processing efficiency through various techniques and tools.

![Map reduce tips: Logfile format. CSV, JSON. Started with CSV. CSV: "2","26","3","07807606-7637-41c0-9bc0-8d392ac73b42","MTY4Mjk2NDk0eDAuNDk4IDEyODQwMTkyMDB4LTM0MTk3OTg2Ng","2010-09-09 03:59:56:000 EDT","70.68.3.116","908105","http://housemdvideos.com/seasons/video.php?s=01&e=07","908105","160x600","performance","25","ca","housemdvideos.com","1","1.2840192E9","0","221","0.60000","NULL","NULL". 20-40 fields… fragile, position dependent, hard to code: url = csv[18]… (counting position numbers gets old after the 100th time around). if (csv.length == 29) url = csv[28] else url = csv[26]](https://image.slidesharecdn.com/amazon-emr2bcopy-110728170634-phpapp02/85/Cost-effective-BigData-Processing-on-Amazon-EC2-57-320.jpg)
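
The slide's complaint about position-dependent CSV can be illustrated with a minimal Python sketch. Everything here is an assumption made for illustration: the record is a shortened, hypothetical version of the log line (the real ones have 20-40 fields), the index `4` only fits this sample layout, and the JSON key names (`timestamp`, `ip`, `url`, `size`) are invented rather than taken from the slides.

```python
import csv
import io
import json

URL = "http://housemdvideos.com/seasons/video.php?s=01&e=07"

# Hypothetical, shortened log record (assumption: 6 fields instead of 20-40).
CSV_LINE = (
    '"2","26","2010-09-09 03:59:56:000 EDT","70.68.3.116",'
    '"' + URL + '","160x600"'
)

# The same record with one extra field prepended, as happens when a log
# format grows over time.
SHIFTED_CSV_LINE = '"new-field",' + CSV_LINE


def url_from_csv(line):
    """Position-dependent lookup: the index is only valid for one layout."""
    row = next(csv.reader(io.StringIO(line)))
    return row[4]  # assumption: the URL is the fifth field in this sample


# The same record as JSON. Key names are illustrative, not from the slides.
JSON_LINE = json.dumps({
    "timestamp": "2010-09-09 03:59:56:000 EDT",
    "ip": "70.68.3.116",
    "url": URL,
    "size": "160x600",
})

# Adding a field to a JSON record does not disturb existing lookups.
EXTENDED_JSON_LINE = json.dumps({"new_field": "x", **json.loads(JSON_LINE)})


def url_from_json(line):
    """Name-based lookup: independent of field order and count."""
    return json.loads(line).get("url")


if __name__ == "__main__":
    print(url_from_csv(CSV_LINE))             # the URL
    print(url_from_csv(SHIFTED_CSV_LINE))     # silently returns the IP instead
    print(url_from_json(EXTENDED_JSON_LINE))  # still the URL
```

The CSV lookup does not fail when the layout changes; it quietly returns the wrong field, which is why length checks like the one on the slide accumulate. The name-based lookup trades larger log lines for code that survives added fields.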