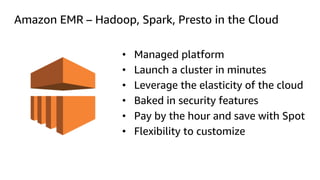

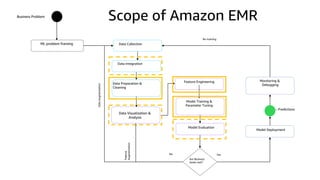

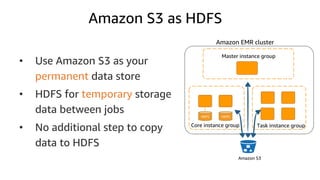

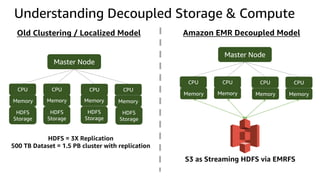

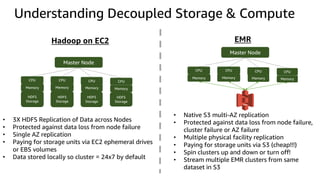

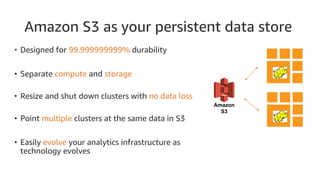

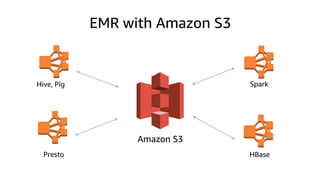

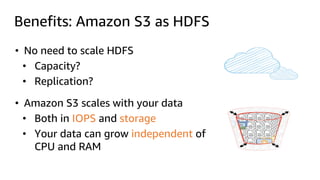

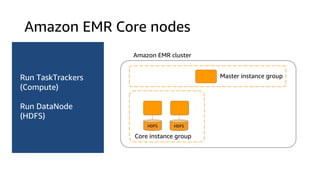

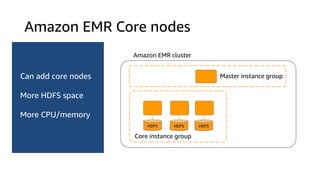

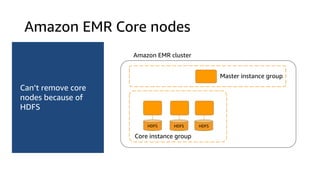

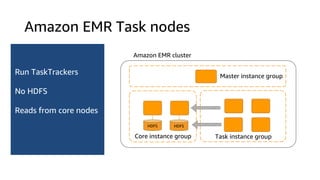

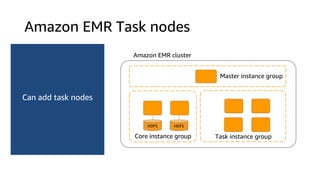

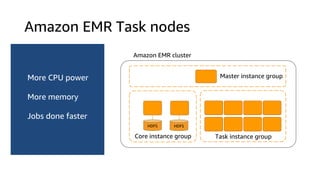

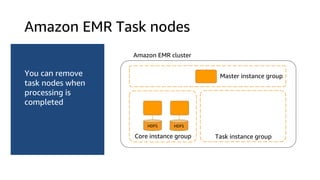

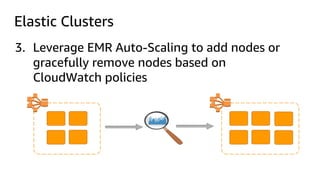

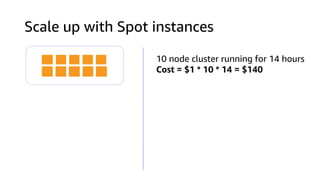

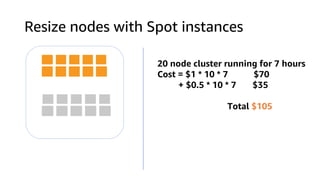

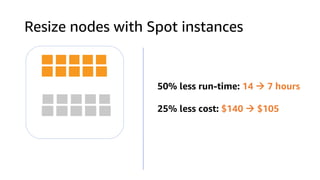

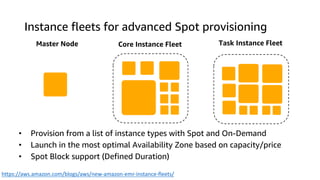

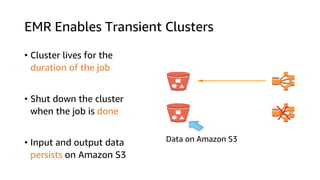

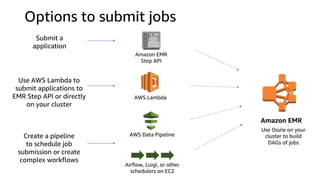

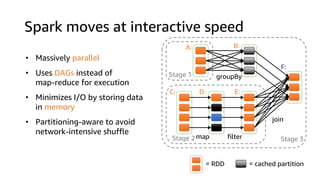

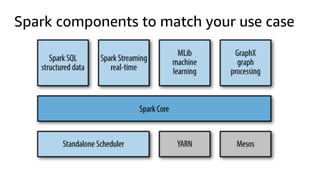

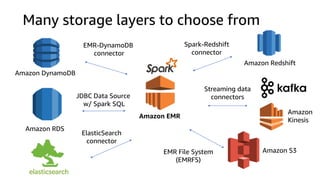

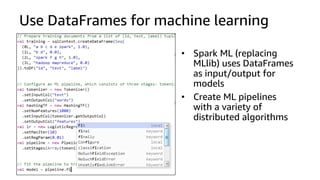

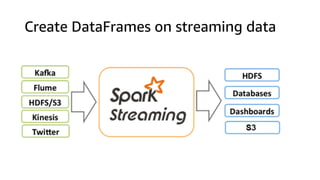

The document provides an overview of Amazon Elastic MapReduce (Amazon EMR), highlighting features such as the use of Amazon S3 as a permanent data store, the advantages of decoupling storage from compute, and the ability to launch clusters quickly and at scale. It explains the roles of core and task nodes, describes elastic clusters, and covers integration with tools like Spark for advanced analytics. It also emphasizes the benefits of Amazon S3 for data durability and efficient scaling compared with traditional HDFS.
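
As a rough illustration of the cluster layout summarized above, the sketch below builds the request for a cluster with one primary node, a group of core nodes, and an optional group of task nodes, with Spark installed and logs written to S3. It is a minimal sketch and not taken from the slides: the helper name `build_emr_request`, the bucket name, the instance type, the release label, and the choice of Spot pricing for task nodes are all assumptions for illustration. The dictionary keys follow the parameters of boto3's EMR `run_job_flow` call.

```python
def build_emr_request(name, log_bucket, core_count=2, task_count=0):
    """Build keyword arguments for boto3's EMR run_job_flow call.

    Hypothetical helper: names, instance types, and the release label
    below are illustrative assumptions, not values from the slides.
    """
    instance_type = "m5.xlarge"  # assumed instance type

    groups = [
        # One primary node coordinates the cluster.
        {"Name": "Primary", "InstanceRole": "MASTER", "Market": "ON_DEMAND",
         "InstanceType": instance_type, "InstanceCount": 1},
        # Core nodes run tasks and also host HDFS.
        {"Name": "Core", "InstanceRole": "CORE", "Market": "ON_DEMAND",
         "InstanceType": instance_type, "InstanceCount": core_count},
    ]
    if task_count > 0:
        # Task nodes only add compute, so they can be added or removed
        # without touching HDFS; Spot pricing here is an assumption.
        groups.append(
            {"Name": "Task", "InstanceRole": "TASK", "Market": "SPOT",
             "InstanceType": instance_type, "InstanceCount": task_count}
        )

    return {
        "Name": name,
        "ReleaseLabel": "emr-6.15.0",  # assumed release label
        "Applications": [{"Name": "Spark"}],
        # Logs go to S3, so they outlive the cluster.
        "LogUri": f"s3://{log_bucket}/emr-logs/",
        "Instances": {
            "InstanceGroups": groups,
            # Transient cluster: shut down once all steps finish.
            "KeepJobFlowAliveWhenNoSteps": False,
        },
        # Default role names created by the EMR console or CLI.
        "JobFlowRole": "EMR_EC2_DefaultRole",
        "ServiceRole": "EMR_DefaultRole",
    }


if __name__ == "__main__":
    request = build_emr_request("demo-cluster", "example-bucket", task_count=4)
    for group in request["Instances"]["InstanceGroups"]:
        print(group["InstanceRole"], group["InstanceCount"], group["Market"])
```

With AWS credentials and the default EMR roles in place, the result could be passed to `boto3.client("emr").run_job_flow(**request)`. Because the input and output data would live in S3 rather than HDFS, a cluster built this way can be terminated after its steps complete without losing data.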