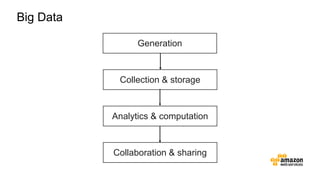

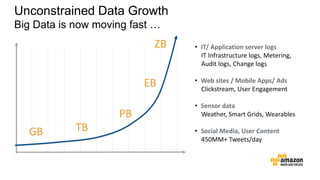

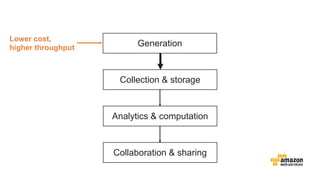

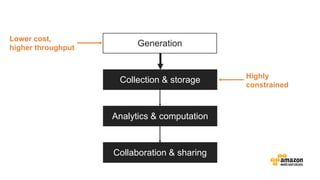

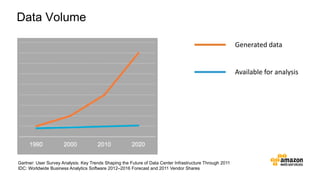

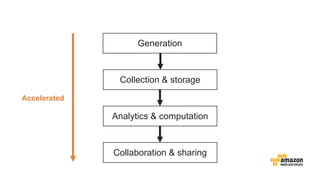

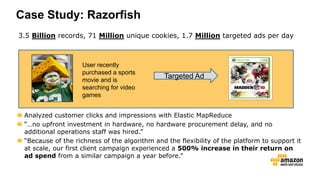

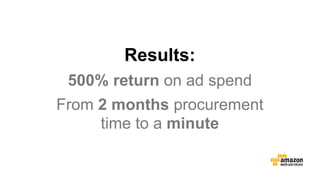

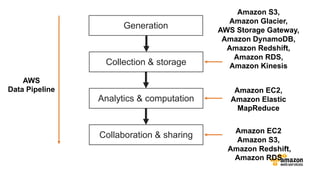

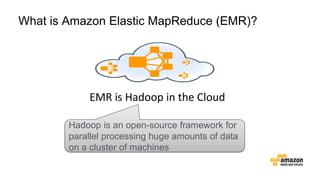

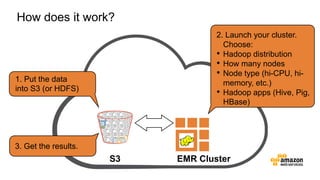

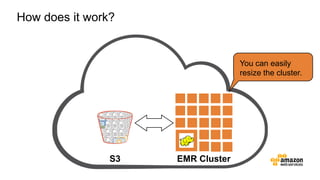

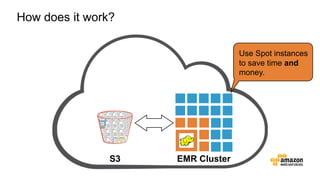

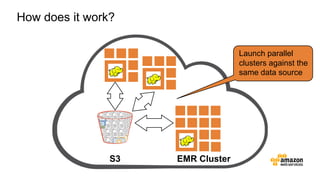

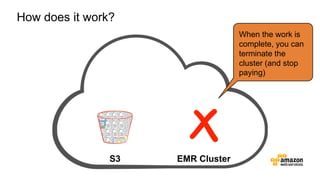

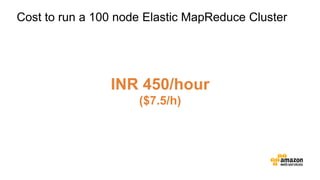

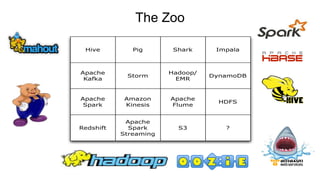

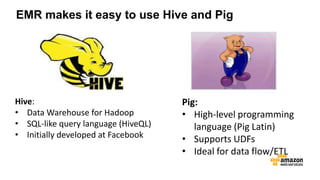

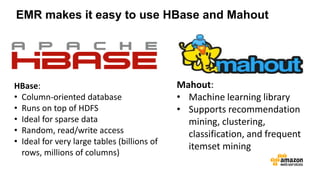

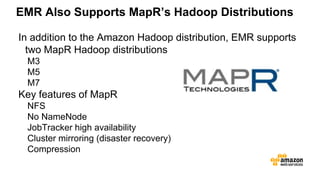

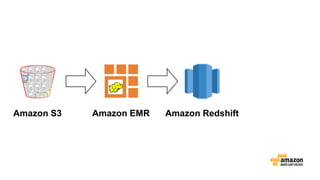

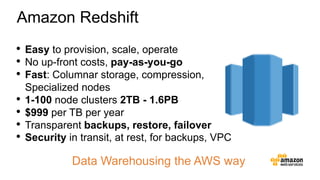

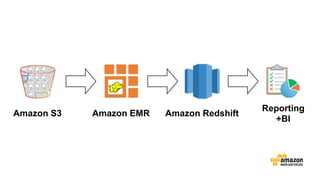

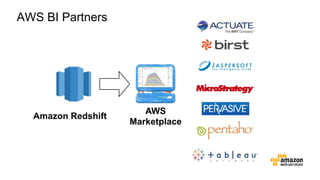

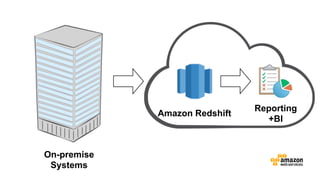

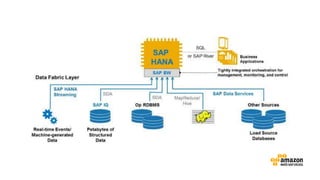

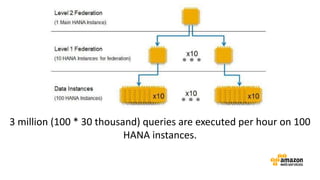

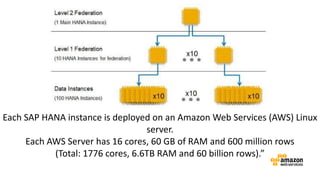

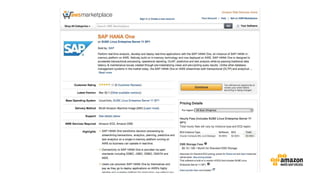

The document introduces big data and its implications for data collection, storage, and analysis, emphasizing the challenges posed by its volume, velocity, and variety. It highlights how cloud computing, specifically AWS, helps manage big data efficiently, with services such as Amazon EMR and Amazon Redshift supporting scalable data processing and analytics. It also presents case studies in which targeted advertising campaigns built on big data technologies achieved significant returns on investment.