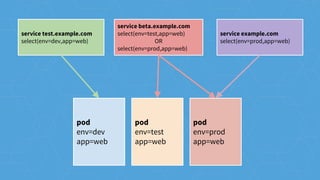

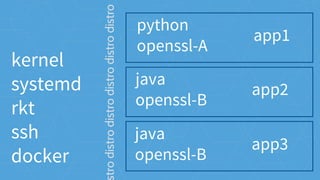

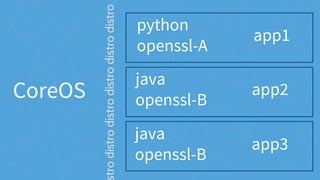

CoreOS is a container-optimized Linux operating system designed to simplify the management of container infrastructure. It emphasizes automatic updates, a minimal base system, and secure operation for applications deployed in clustered environments with components such as Kubernetes and Docker. This document gives an overview of its architecture, container management, and operational practices, with example commands and configurations for managing containers.

![[Service] ExecStart=/usr/bin/kubelet --api_servers=https://172.17.4.101 --register-node=true --hostname-override=172.17.4.201 --cluster_dns=10.3.0.10 --cluster_domain=cluster.local --tls-cert-file=worker.pem --tls-private-key-file=worker-key.pem](https://image.slidesharecdn.com/coreosinanutshell-160224222427/85/CoreOS-in-a-Nutshell-44-320.jpg)
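
The kubelet service definition on this slide is easier to read as a systemd unit fragment with one flag per line. The sketch below only reflows the slide text: every flag and value is copied as shown, and nothing is added. Two caveats are assumptions rather than statements from the slide: the certificate paths appear as relative file names, so a real unit would likely need absolute paths, and the flag spellings reflect the kubelet release in use at the time, so they may differ in other versions.

```ini
# [Service] section as shown on the slide, reflowed with line continuations.
# All flags and values are taken verbatim from the slide.
[Service]
ExecStart=/usr/bin/kubelet \
  --api_servers=https://172.17.4.101 \
  --register-node=true \
  --hostname-override=172.17.4.201 \
  --cluster_dns=10.3.0.10 \
  --cluster_domain=cluster.local \
  --tls-cert-file=worker.pem \
  --tls-private-key-file=worker-key.pem
```

Read this way, the worker at 172.17.4.201 registers itself with the API server at 172.17.4.101, uses 10.3.0.10 for cluster DNS under the `cluster.local` domain, and presents `worker.pem` and `worker-key.pem` as its TLS certificate and key.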
