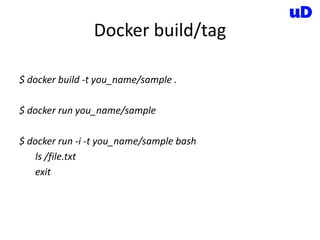

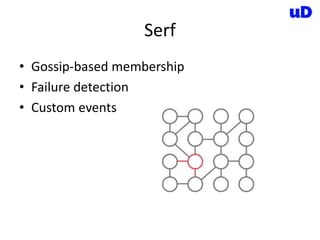

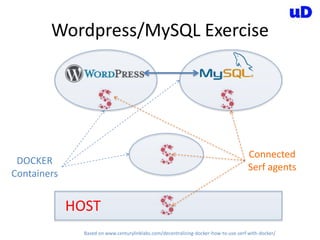

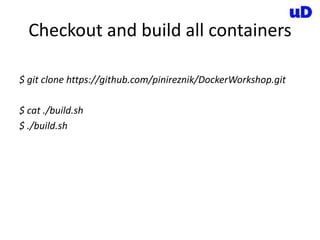

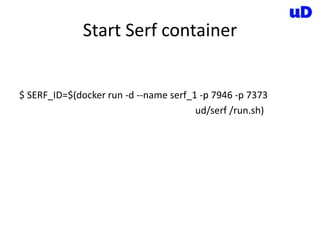

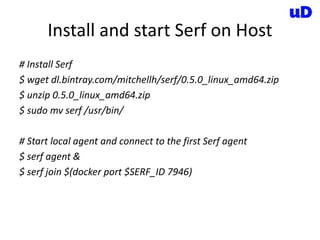

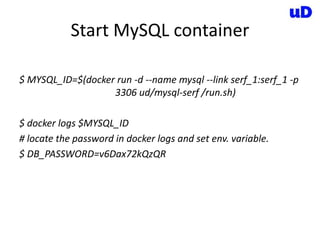

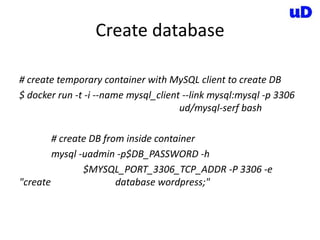

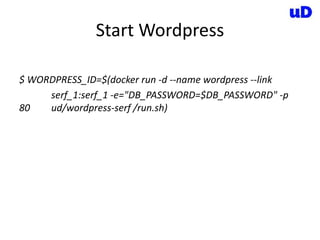

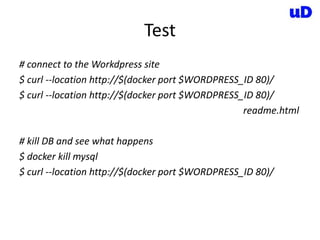

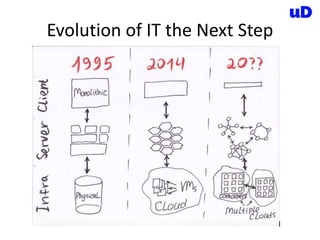

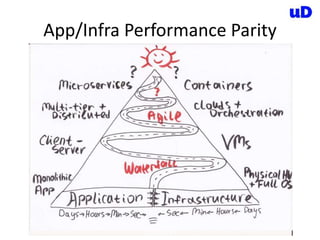

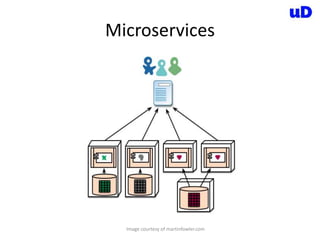

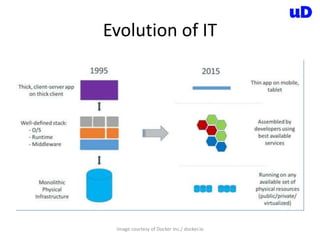

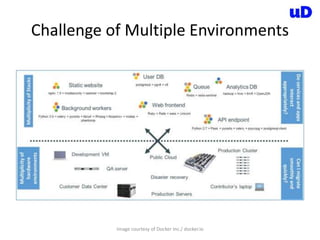

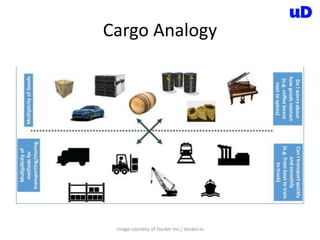

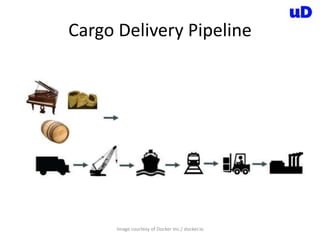

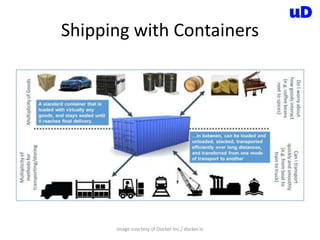

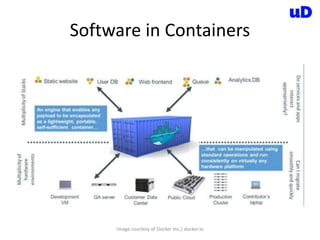

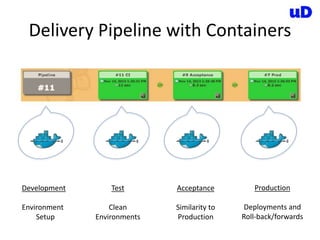

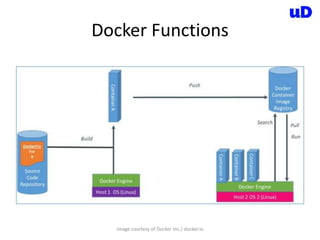

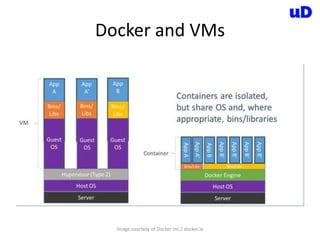

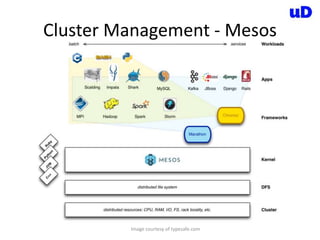

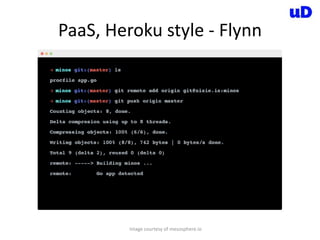

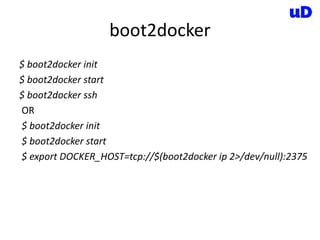

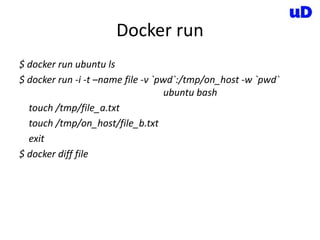

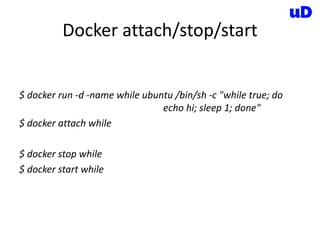

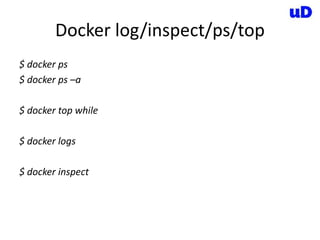

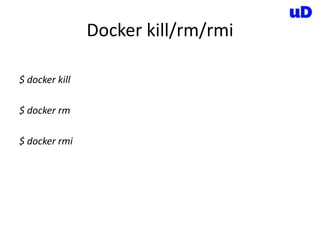

The slides outline a Docker workshop: installation instructions for different operating systems, an introduction to containers, and how Docker works and scales. They include practical exercises with Docker commands, combine services such as MySQL and WordPress, and point to future infrastructure trends. The workshop also mentions Docker community events and resources for further engagement.

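![Dockerfile](https://image.slidesharecdn.com/dockerworkshop-140627030558-phpapp01/85/Docker-workshop-DevOpsDays-Amsterdam-2014-32-320.jpg)

    # Base image
    FROM ubuntu
    MAINTAINER UglyDuckling "info@uglyduckling.nl"
    # Add the "universe" package source, then refresh the package index
    RUN echo "deb http://archive.ubuntu.com/ubuntu precise universe" >> /etc/apt/sources.list
    RUN apt-get update
    # Install vim quietly (-q) and without prompting (-y)
    RUN apt-get install -q -y vim
    # Set an environment variable inside the image
    ENV ENV_VAR some_stuff
    # Copy file.txt from the build context into the image
    ADD file.txt /file.txt
    # Document the port the container listens on
    EXPOSE 8080
    # Default command: list the root directory
    # ("ls /" is passed as one string so that bash -c receives the path)
    CMD ["bash", "-c", "ls /"]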
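The Dockerfile above can be built and run roughly as follows. This is a minimal sketch, not the workshop's own exercise: it assumes the Dockerfile is saved as `Dockerfile` in the current directory, the image tag `workshop-demo` is a hypothetical name, and the contents of `file.txt` are made up for illustration. The 2014-era `precise` package source may no longer resolve, so the build step may need an updated base image or package source.

```shell
# Hypothetical image tag (not from the slides); any name works.
IMAGE_TAG="workshop-demo"

# The ADD instruction fails unless file.txt exists in the build context,
# so create a placeholder file (its contents are an assumption).
echo "hello from the build context" > file.txt

if command -v docker >/dev/null 2>&1 && [ -f Dockerfile ]; then
    # Build an image from the Dockerfile in the current directory.
    docker build -t "$IMAGE_TAG" .
    # Run the image's default CMD and remove the container afterwards.
    docker run --rm "$IMAGE_TAG"
    # Override CMD to check that the ENV instruction took effect.
    docker run --rm "$IMAGE_TAG" bash -c 'echo "$ENV_VAR"'
else
    echo "docker or Dockerfile not found; skipping build"
fi
```

Passing a command after the image name replaces the default `CMD`, which is a quick way to inspect what the `ENV` and `ADD` instructions left in the image.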