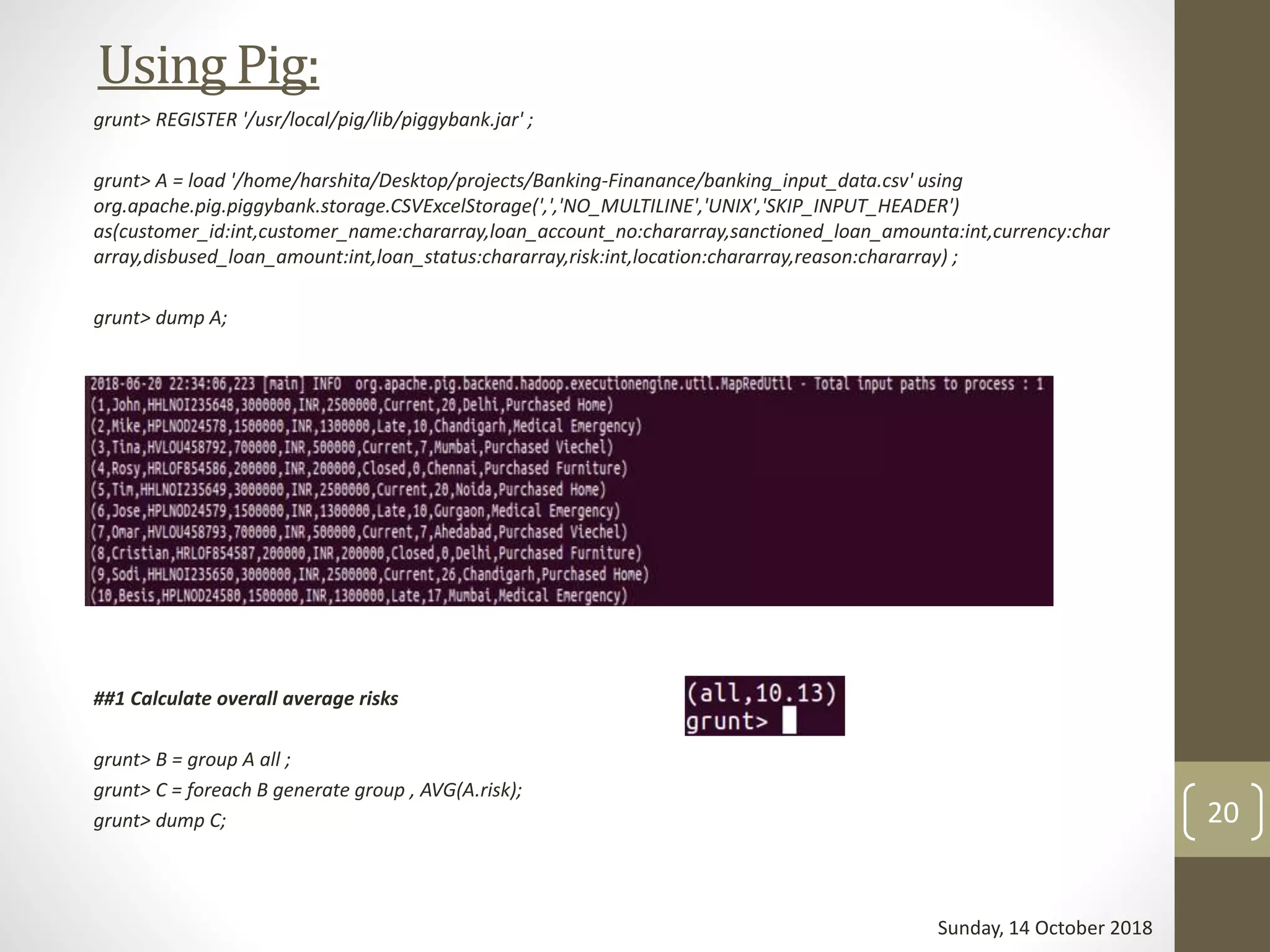

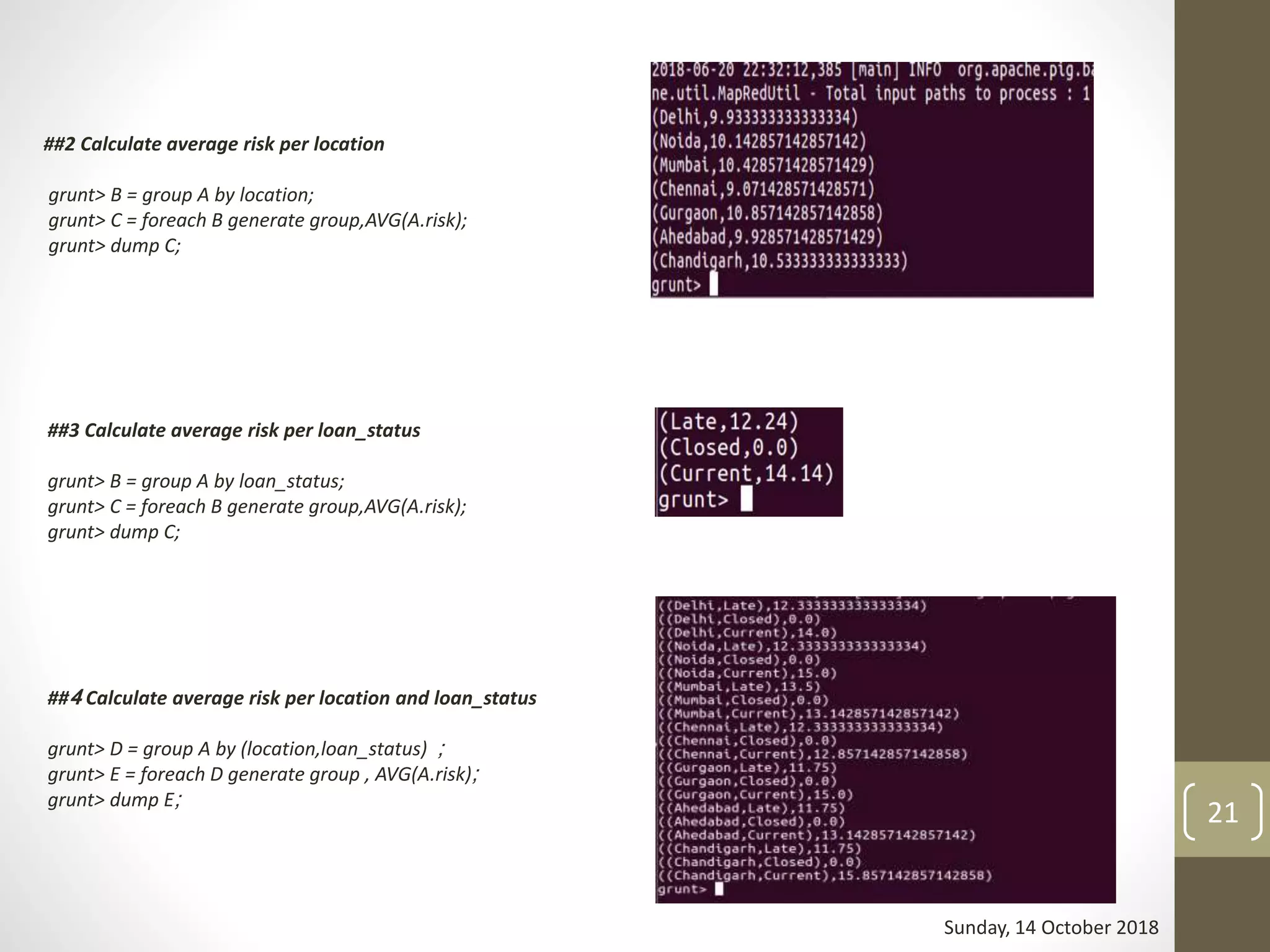

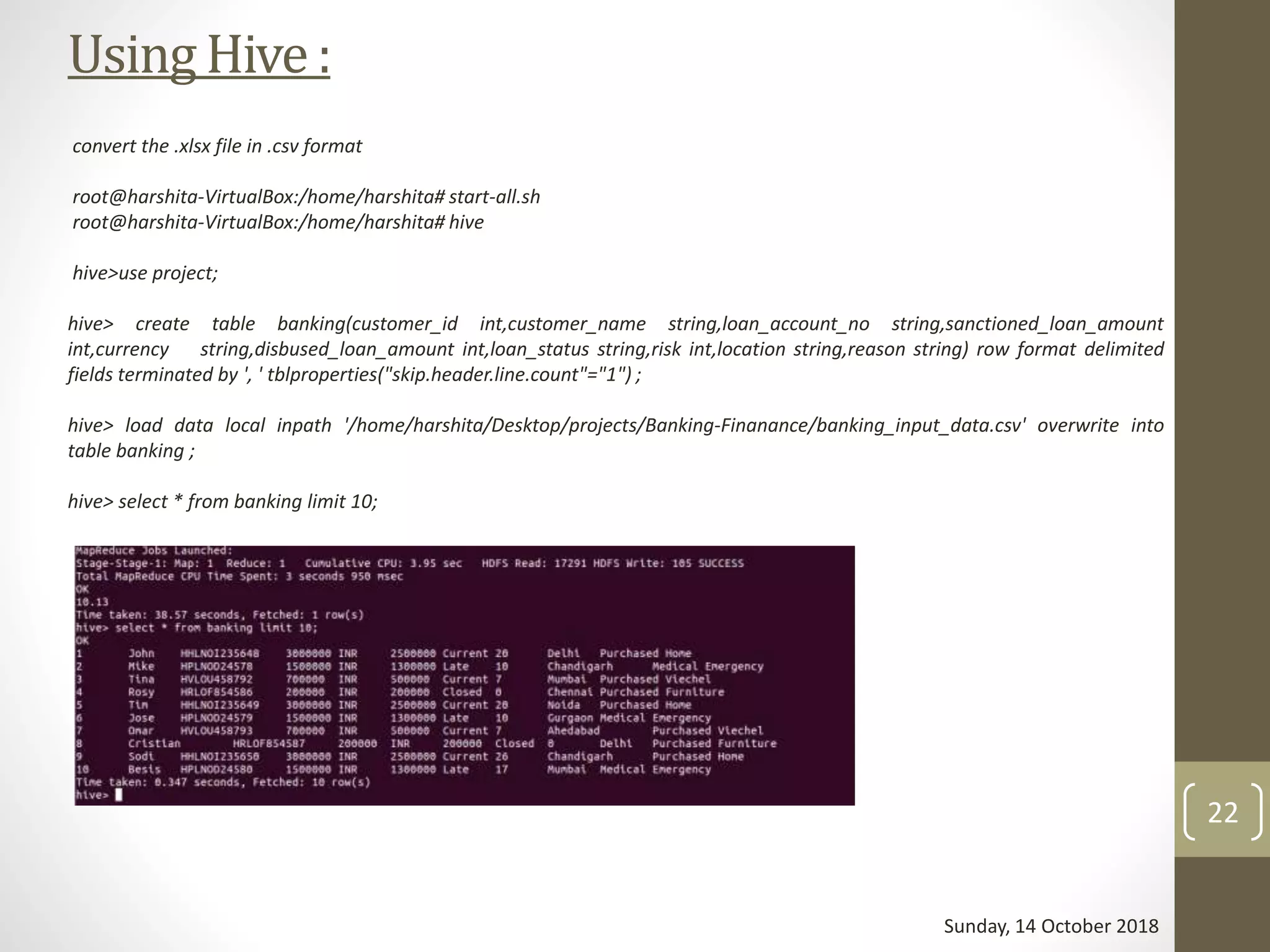

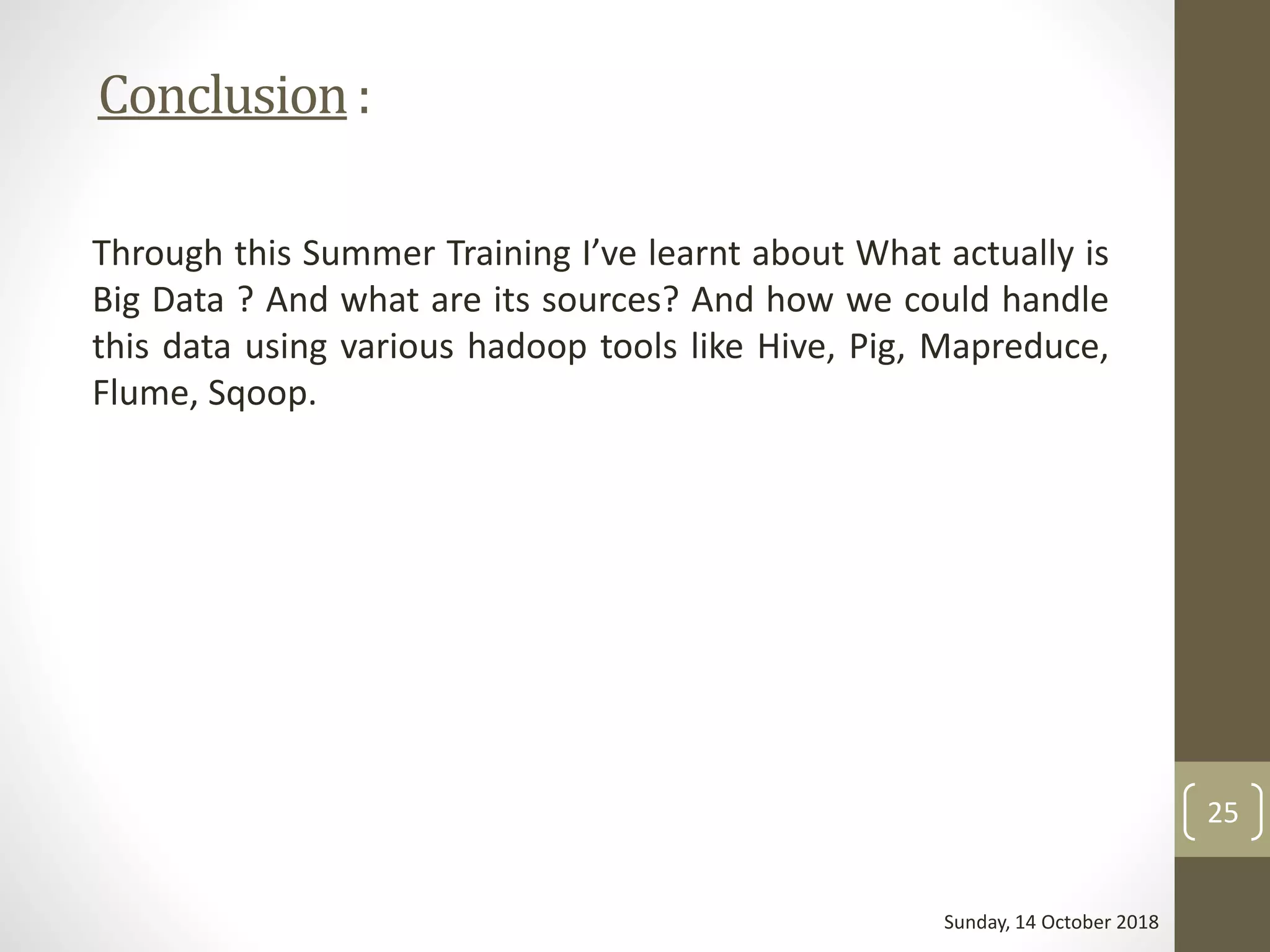

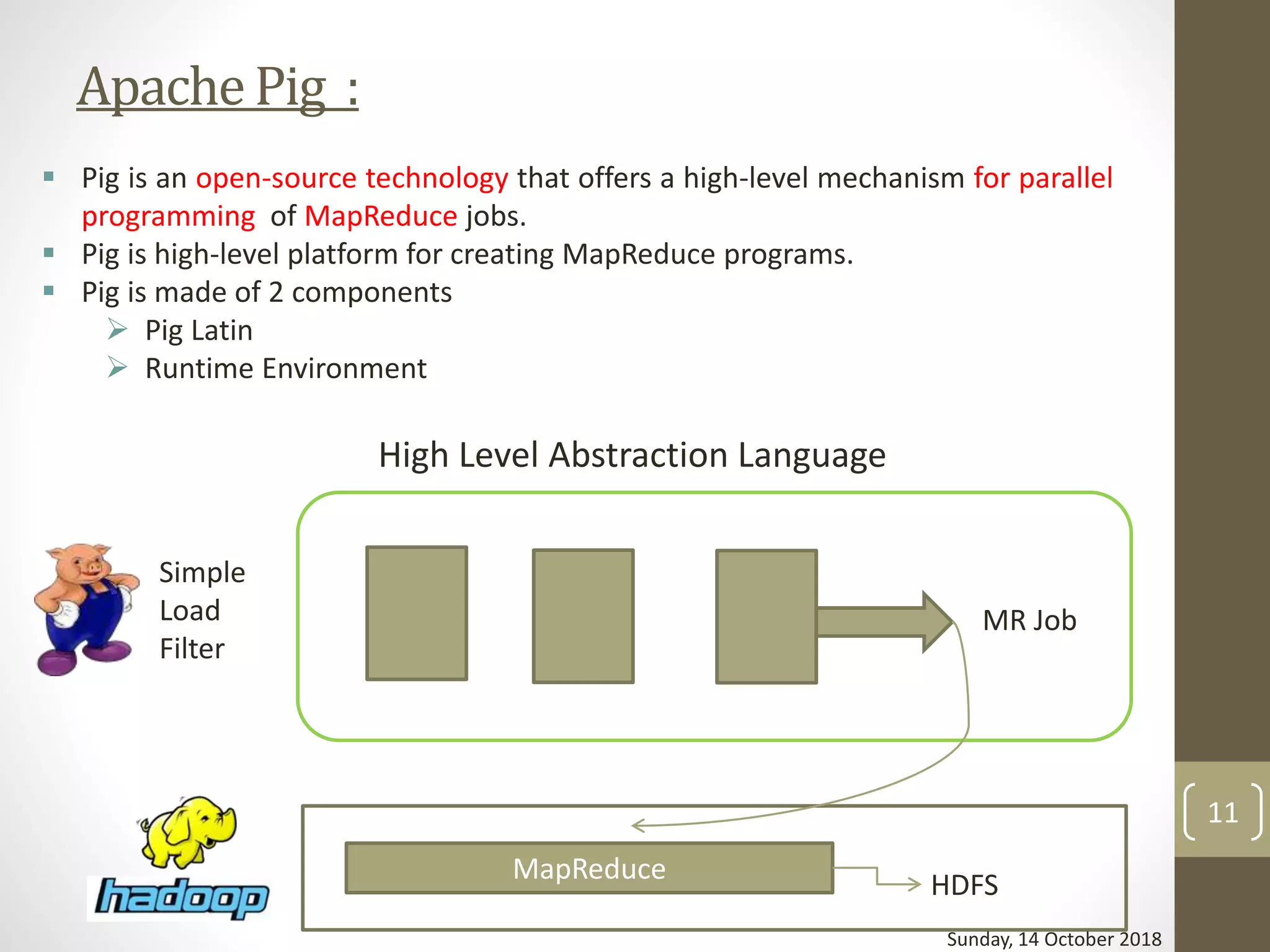

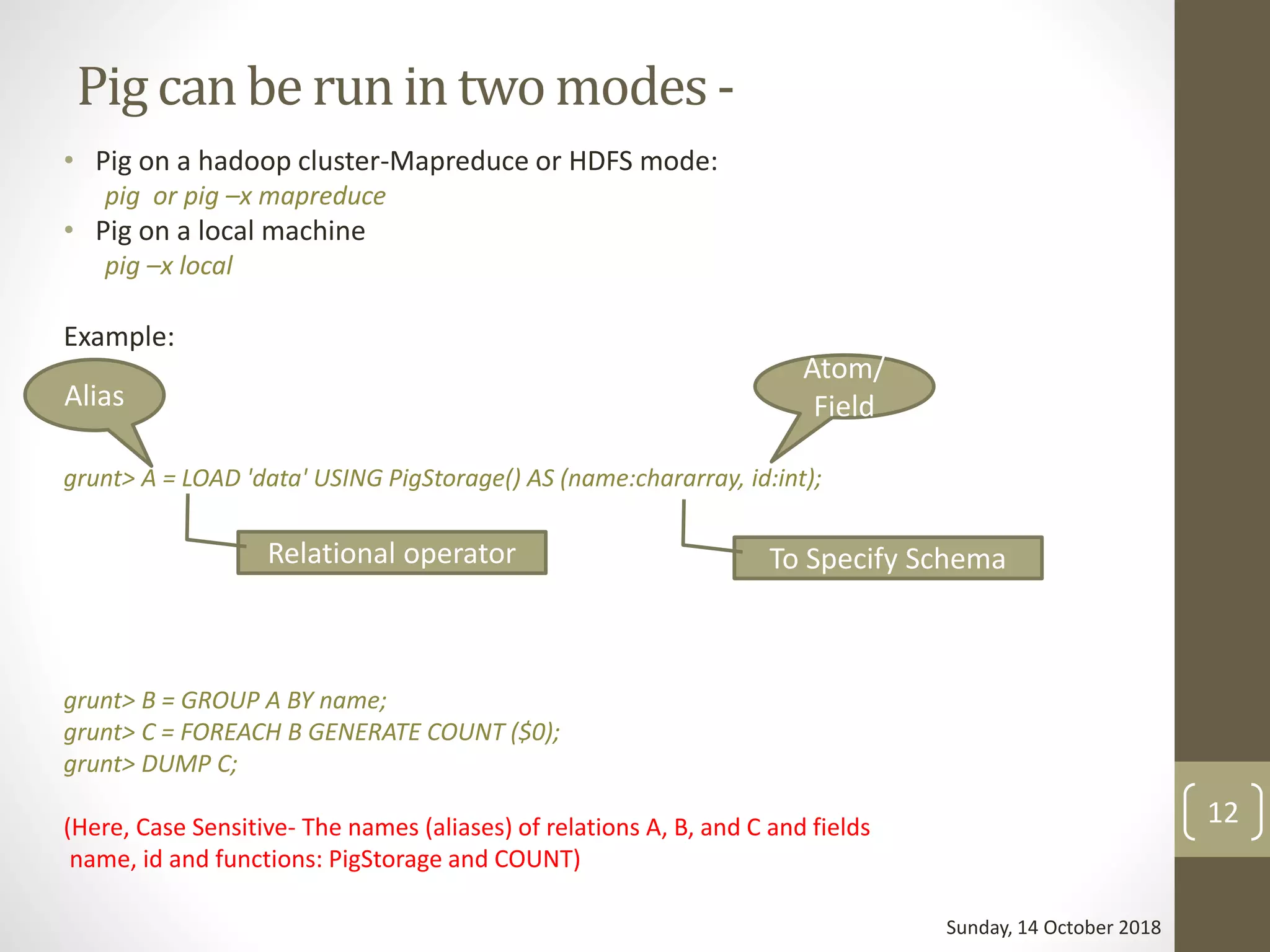

The document is a summer training presentation on big data and Hadoop. It covers the definition of big data, the characteristics of different data structures, and the Hadoop ecosystem, including MapReduce, Apache Pig, Hive, Flume, and Sqoop, with practical examples of data analysis using these tools. It concludes by summarizing what was learned about big data sources and about managing data with Hadoop tools.

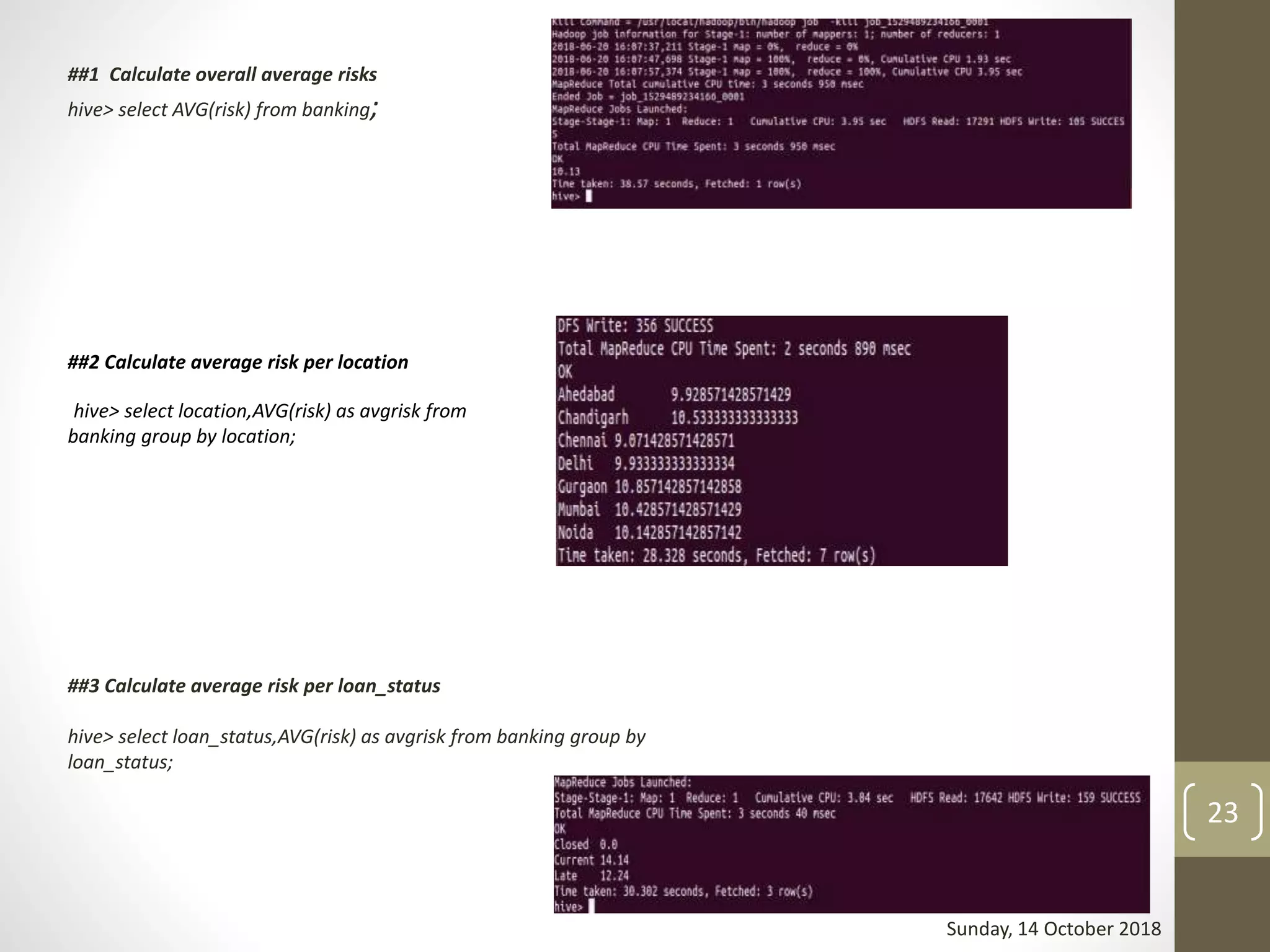

![Index:

Sunday, 14 October 2018

2

Slide no. Content

1. Introduction

2 - 3 Index

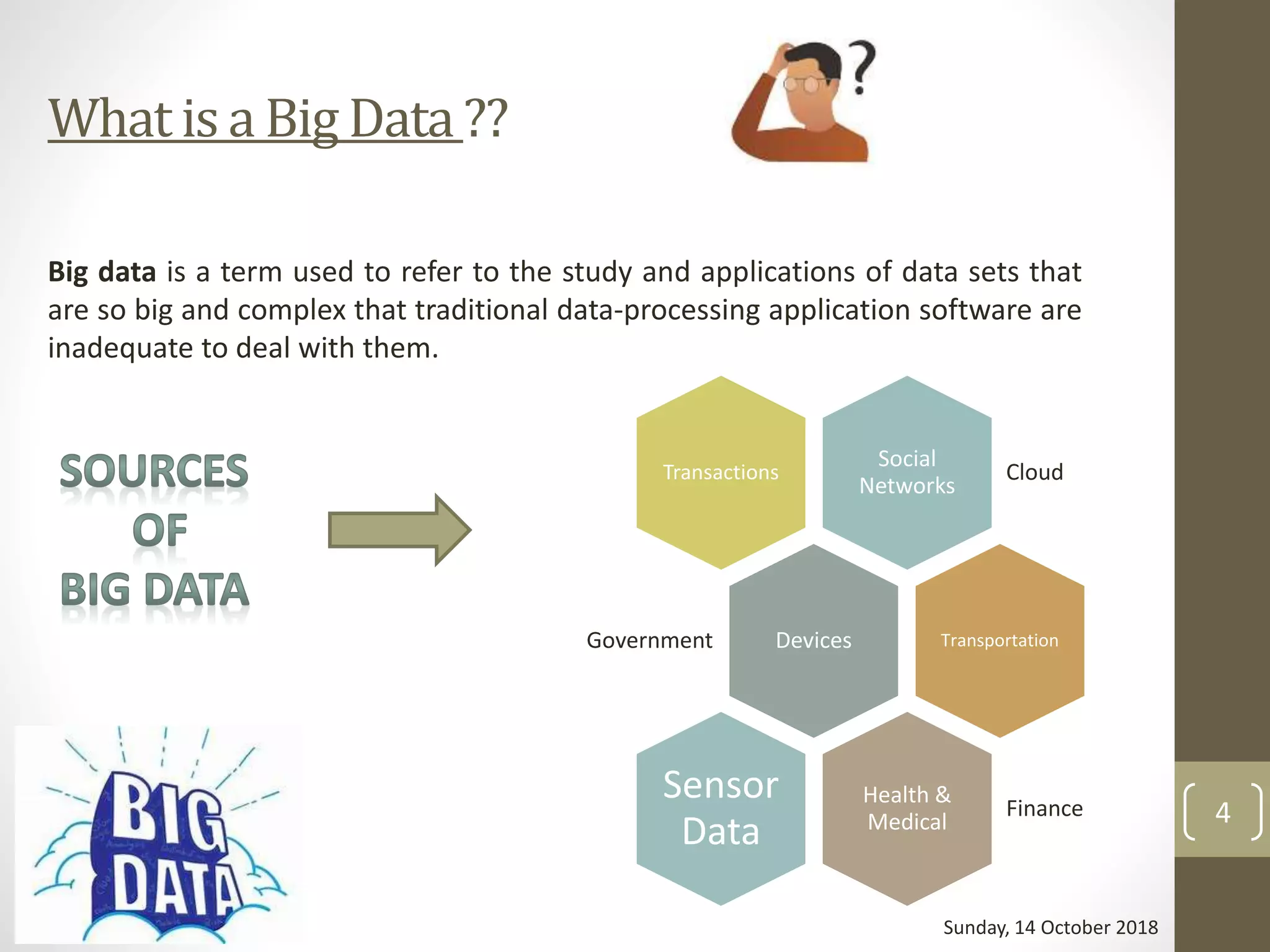

4. What is Big Data ?

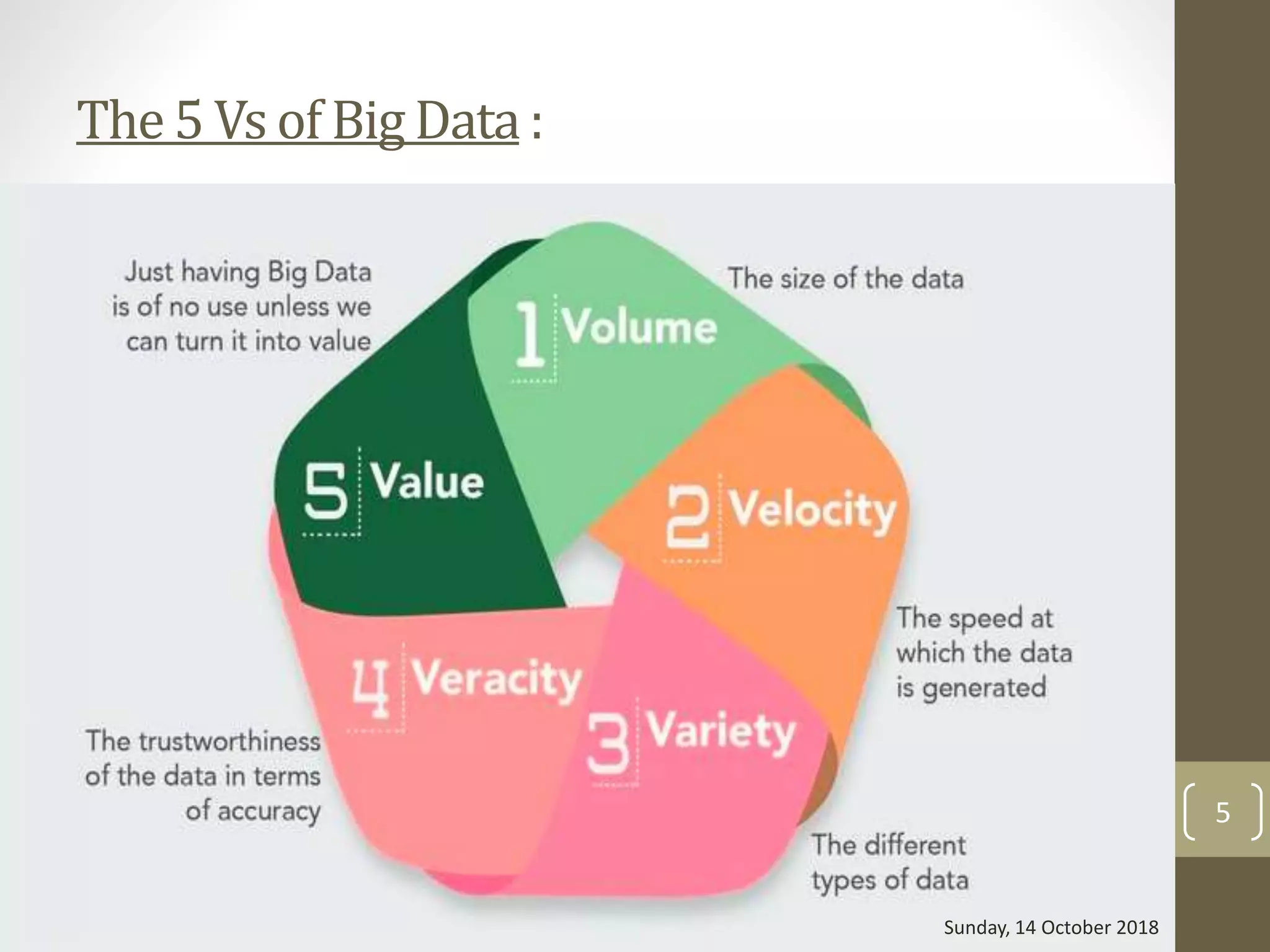

5. The 5 Vs of Big Data

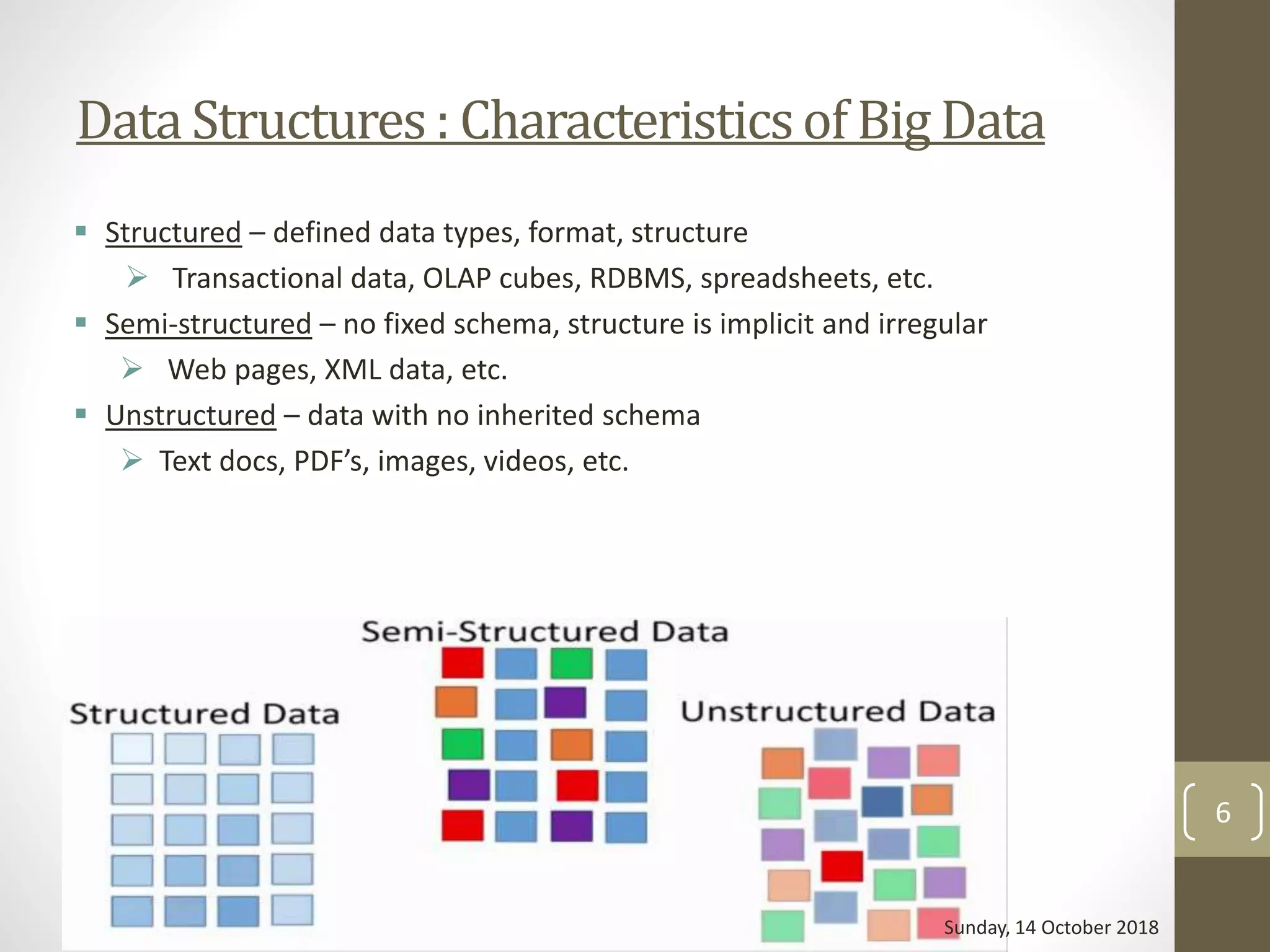

6. Data Structures: Characteristics of Big Data

7. Introduction to Hadoop

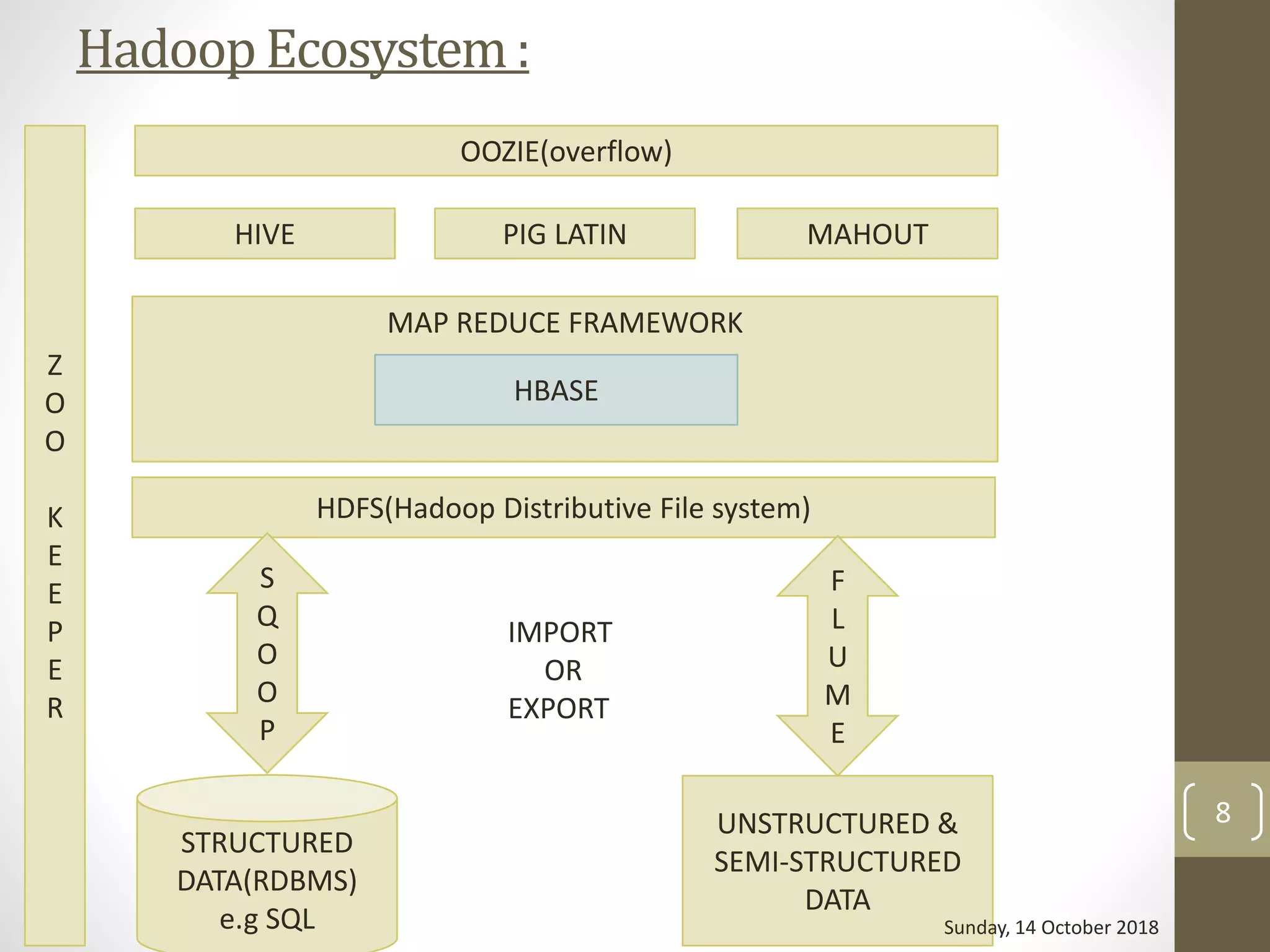

8. Hadoop Ecosystem

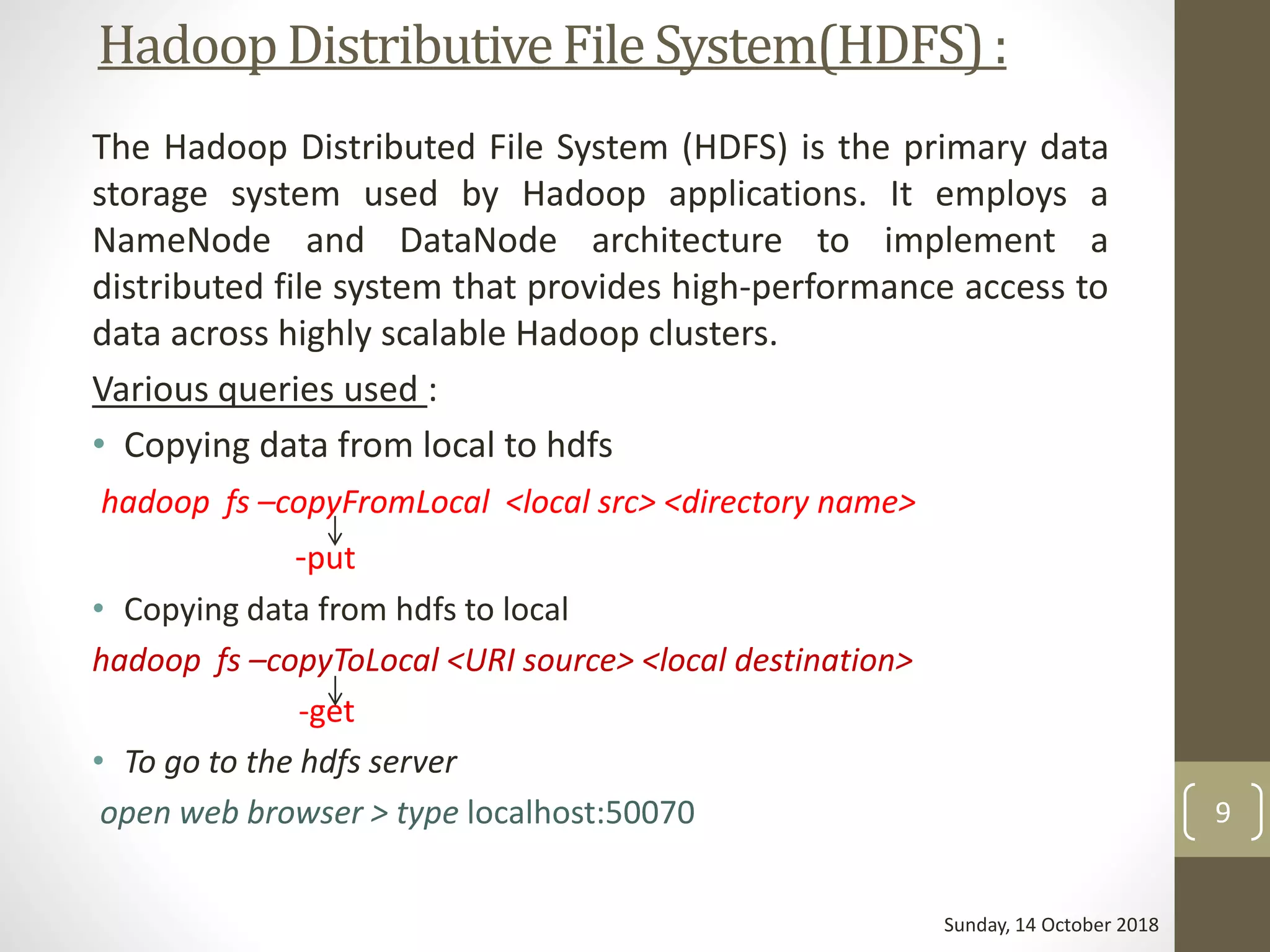

9. Hadoop Distributive File System [ HDFS ]

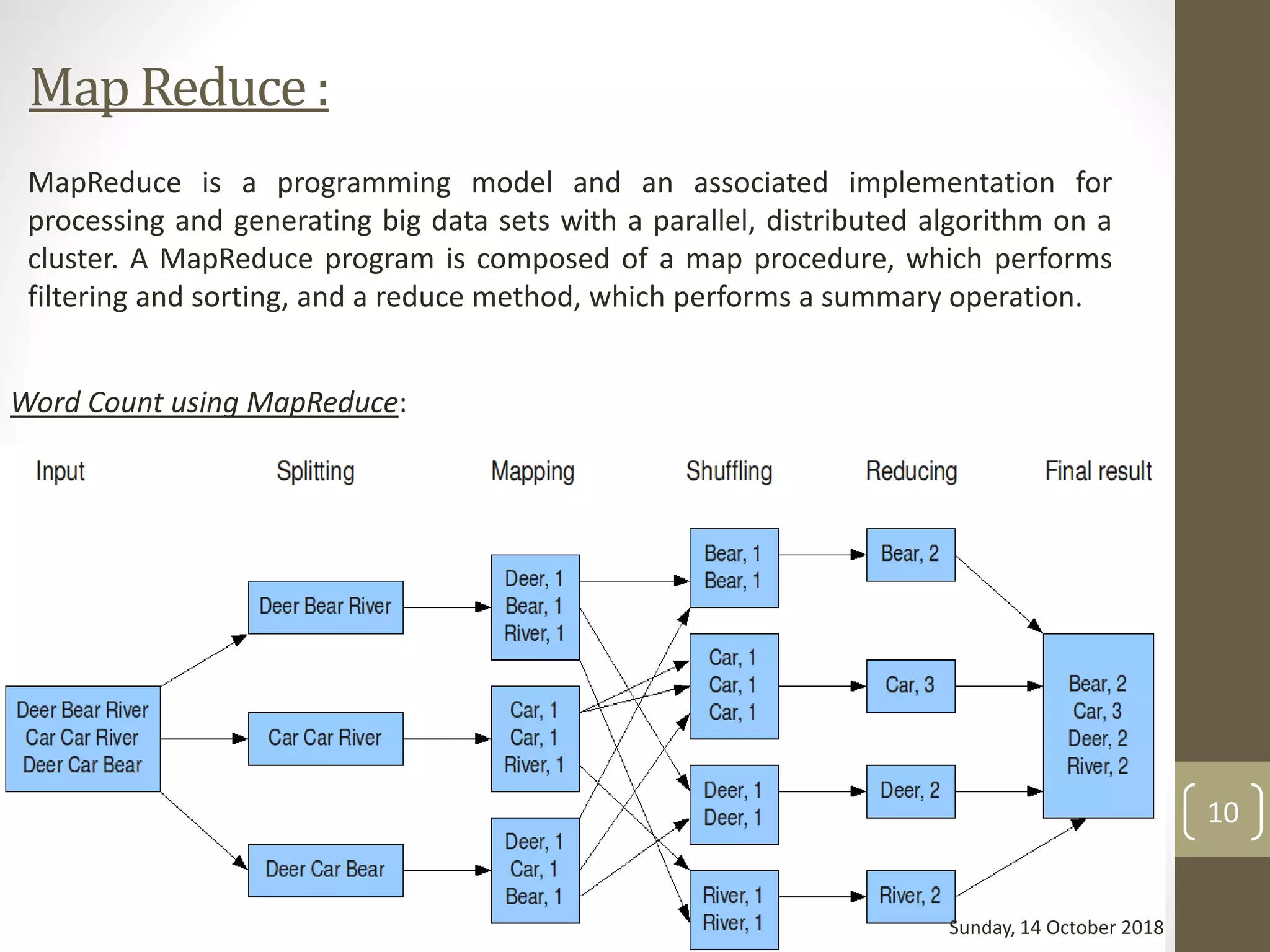

10. Map Reduce

11. Apache Pig

12. Modes of Pig

13. What is Hive ?

14. Example

15. What is Flume ?

16. Advantages of Flume](https://image.slidesharecdn.com/summertrainingpresentation-181014062304/75/Big-Data-Summer-training-presentation-2-2048.jpg)

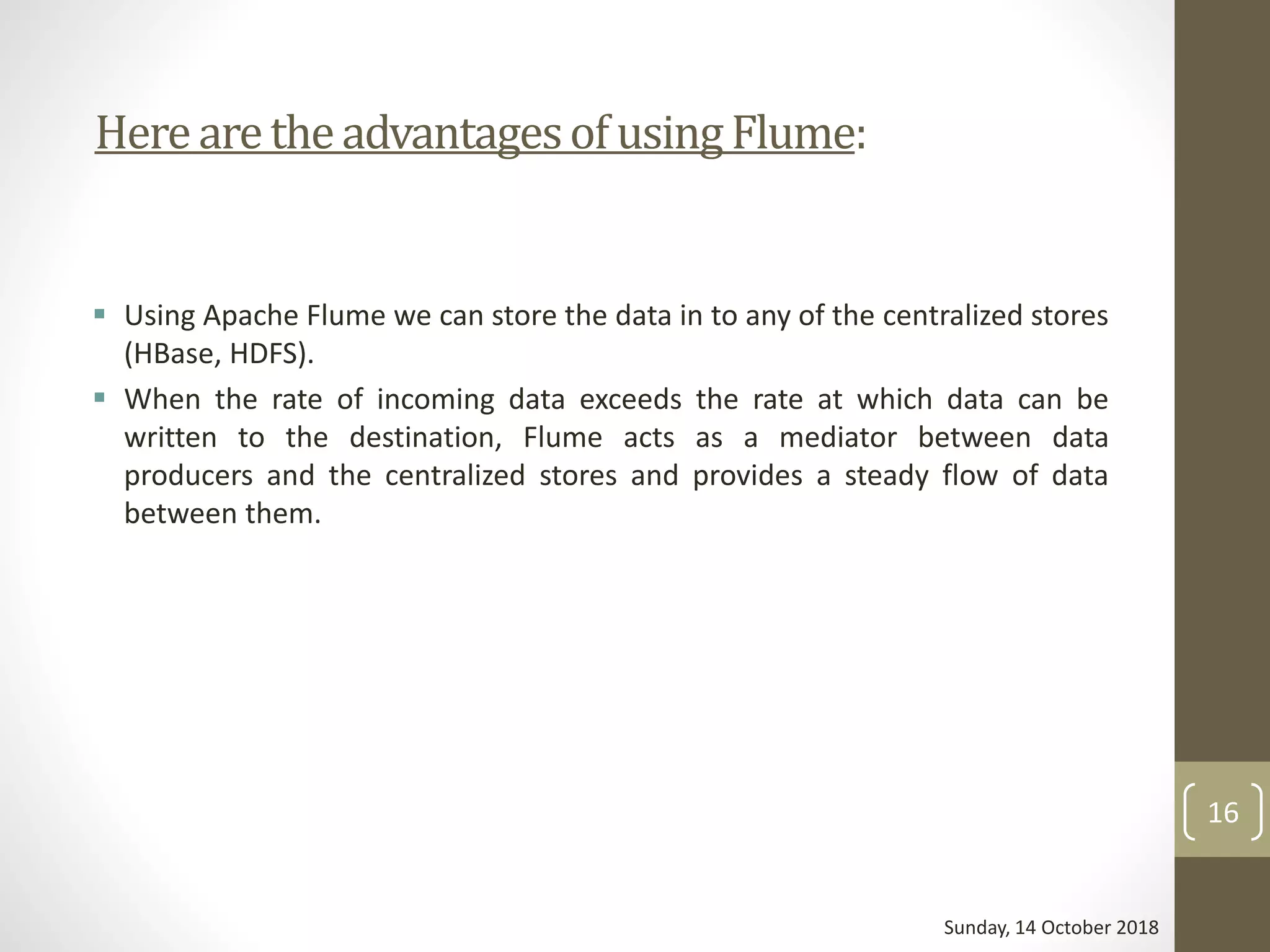

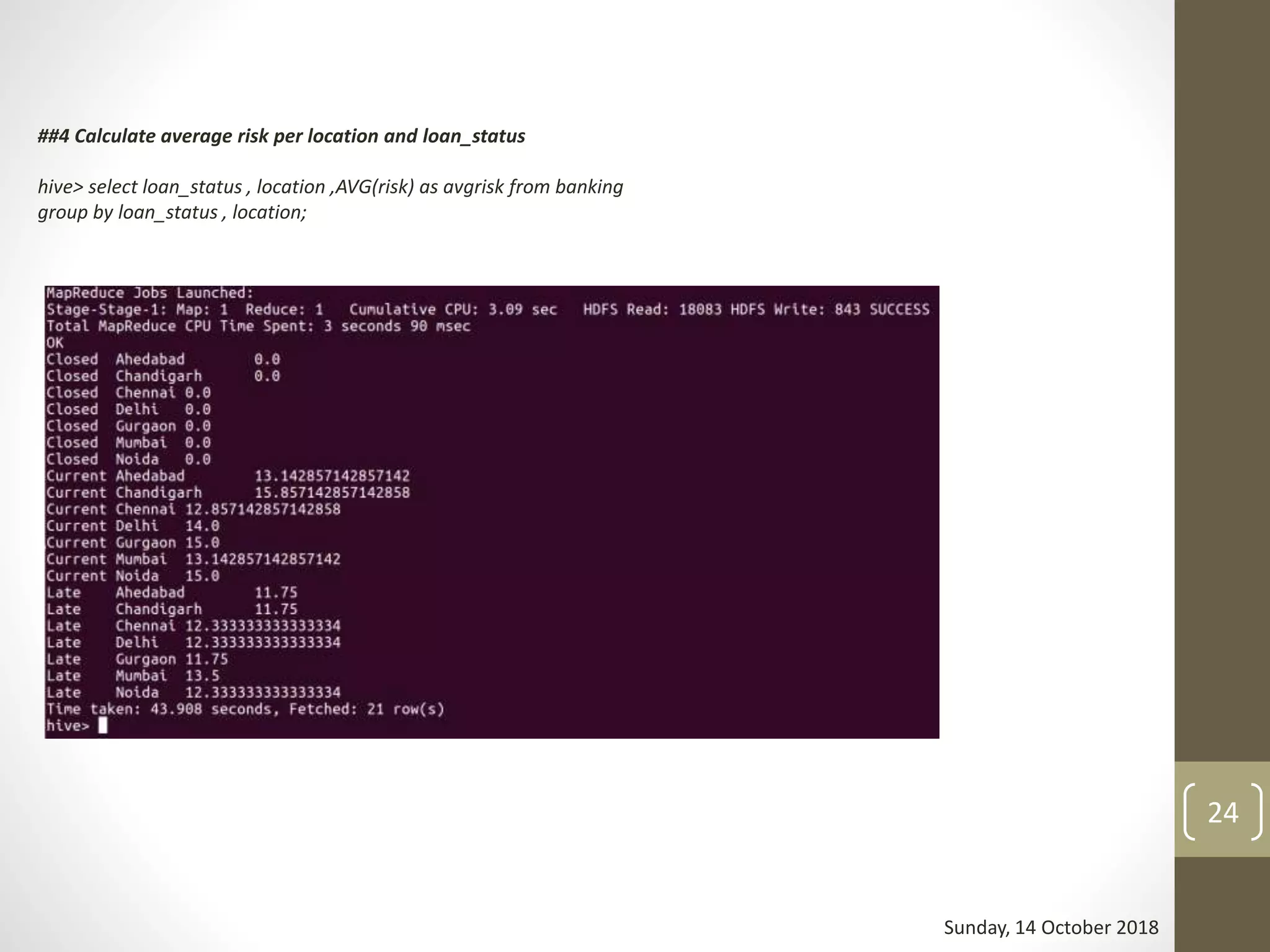

![Example:

hive> CREATE DATABASE IF NOT EXISTS user;

hive> USE user;

hive> CREATE TABLE IF NOT EXISTS employee ( eid int, name String,

> salary String, destination String)

> COMMENT 'Employee details'

> ROW FORMAT DELIMITED

> FIELDS TERMINATED BY '\t'

> LINES TERMINATED BY '\n'

> STORED AS TEXTFILE;

hive> LOAD DATA [LOCAL] INPATH 'filepath' [OVERWRITE] INTO TABLE employee;](https://image.slidesharecdn.com/summertrainingpresentation-181014062304/75/Big-Data-Summer-training-presentation-14-2048.jpg)
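To make the row format in the Hive example concrete, here is a minimal Python sketch (not Hive itself) of how a text file for the `employee` table would be laid out. It assumes the delimiters in the example are meant to be a tab between fields and a newline between records. The `Employee` type, the `parse_employee_lines` helper, and the sample rows are hypothetical and invented purely for illustration.

```python
from typing import List, NamedTuple


class Employee(NamedTuple):
    """Mirrors the columns of the employee table in the slide."""
    eid: int
    name: str
    salary: str        # declared as String in the table definition
    destination: str


def parse_employee_lines(text: str) -> List[Employee]:
    """Split text into records on newlines and into fields on tabs."""
    rows = []
    for line in text.split("\n"):
        if not line:
            continue  # skip the empty piece left by a trailing newline
        eid, name, salary, destination = line.split("\t")
        rows.append(Employee(int(eid), name, salary, destination))
    return rows


# Hypothetical sample data: two records, four tab-separated fields each.
sample = "1\tAlice\t45000\tManager\n2\tBob\t40000\tEditor\n"
employees = parse_employee_lines(sample)
```

A file laid out like `sample` is the kind of input the `LOAD DATA` statement would place into the table; Hive applies the same field and line splitting when the table is queried.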