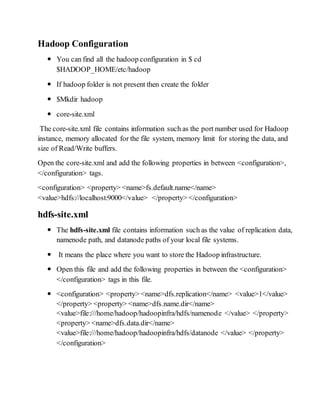

This document provides instructions for installing Hadoop on Ubuntu. It describes creating a separate user for Hadoop, setting up SSH keys for access, installing Java, downloading and extracting Hadoop, configuring core Hadoop files like core-site.xml and hdfs-site.xml, and common errors that may occur during the Hadoop installation and configuration process. Finally, it explains how to format the namenode, start the Hadoop daemons, and check the Hadoop web interfaces.