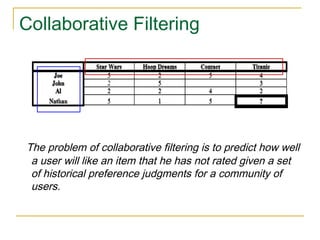

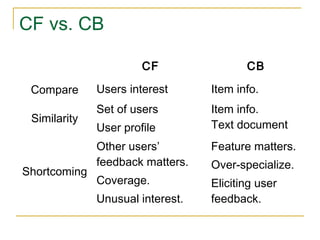

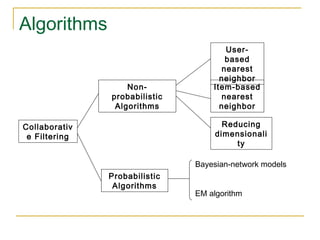

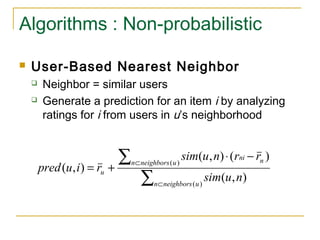

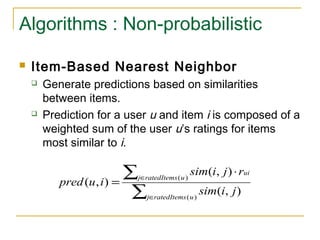

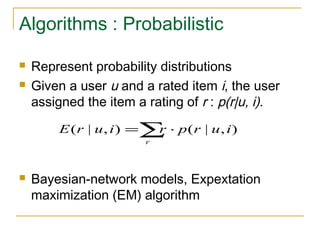

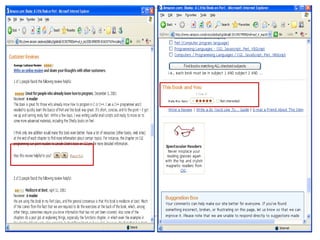

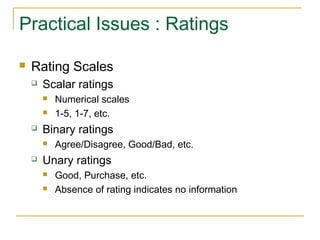

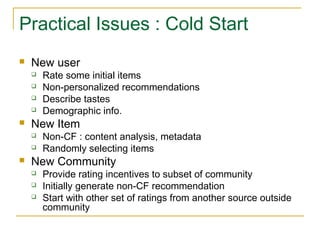

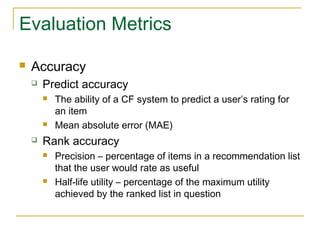

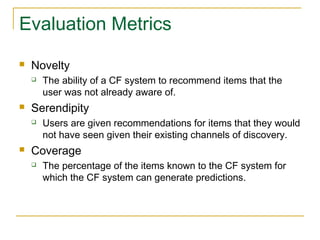

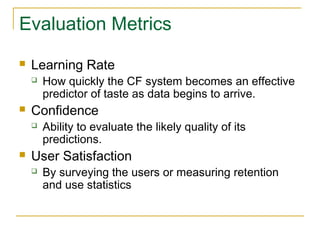

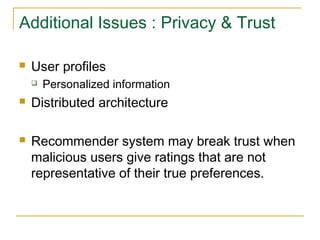

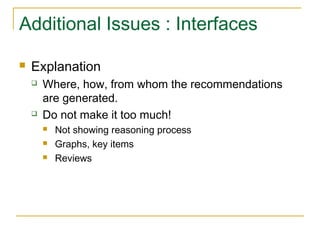

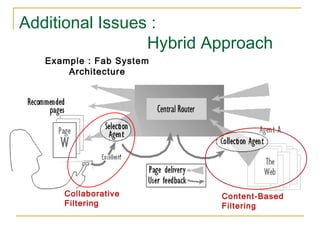

Collaborative filtering uses users' historical preferences to predict how they will rate items they have not yet seen. It works by finding similarities between users or between items and generating recommendations from those similarities. Common collaborative filtering algorithms include user-based nearest neighbor, item-based nearest neighbor, and probabilistic models such as Bayesian networks. Practical challenges include cold starts for new users and items, collecting accurate ratings data, and evaluating system performance. Privacy, trust, interface design, and hybrid approaches that combine collaborative and content-based filtering are also important issues.
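
To make the user-based nearest neighbor idea concrete, here is a minimal sketch in Python. It is illustrative only and not taken from the slides: the toy `ratings` data, the function names `pearson` and `predict`, the choice of Pearson correlation as the similarity measure, and the neighborhood size `k` are all assumptions. The prediction is the target user's mean rating plus a similarity-weighted average of how far each neighbor's rating of the item deviates from that neighbor's own mean.

```python
from math import sqrt

# Hypothetical toy data: user -> {item: rating on a 1-5 scale}
ratings = {
    "alice": {"A": 5, "B": 3, "C": 4},
    "bob":   {"A": 4, "B": 2, "C": 5, "D": 4},
    "carol": {"A": 1, "B": 5, "D": 2},
}


def mean(values):
    values = list(values)
    return sum(values) / len(values)


def pearson(u, v, ratings):
    """Pearson correlation over items both users rated; 0.0 if undefined."""
    common = set(ratings[u]) & set(ratings[v])
    if len(common) < 2:
        return 0.0
    mu_u = mean(ratings[u][i] for i in common)
    mu_v = mean(ratings[v][i] for i in common)
    num = sum((ratings[u][i] - mu_u) * (ratings[v][i] - mu_v) for i in common)
    den_u = sqrt(sum((ratings[u][i] - mu_u) ** 2 for i in common))
    den_v = sqrt(sum((ratings[v][i] - mu_v) ** 2 for i in common))
    den = den_u * den_v
    return num / den if den else 0.0


def predict(user, item, ratings, k=2):
    """Predict a rating from the k most strongly correlated users who rated the item."""
    user_mean = mean(ratings[user].values())
    candidates = [
        (pearson(user, other, ratings), other)
        for other in ratings
        if other != user and item in ratings[other]
    ]
    # Keep the k neighbors with the largest absolute similarity.
    neighbors = sorted(candidates, key=lambda pair: abs(pair[0]), reverse=True)[:k]
    norm = sum(abs(sim) for sim, _ in neighbors)
    if norm == 0:
        # Cold-start style fallback: no usable neighbors, return the user's mean.
        return user_mean
    deviation = sum(
        sim * (ratings[other][item] - mean(ratings[other].values()))
        for sim, other in neighbors
    )
    return user_mean + deviation / norm


if __name__ == "__main__":
    print(predict("alice", "D", ratings))
```

In this toy data, `alice` has not rated item `D`, so `predict("alice", "D", ratings)` combines the deviations of `bob` (positively correlated with her) and `carol` (negatively correlated). When no other user has rated the item, the sketch falls back to the user's own mean, which is one simple way to handle the cold-start case mentioned above. An item-based variant would follow the same pattern with similarities computed between items instead of users.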