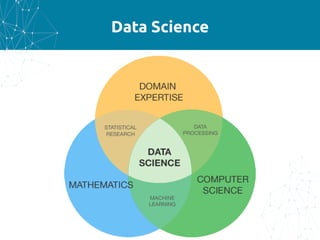

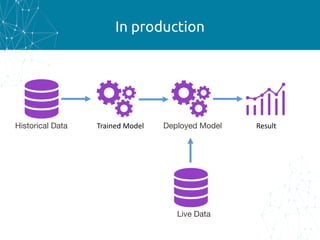

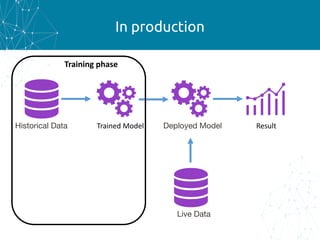

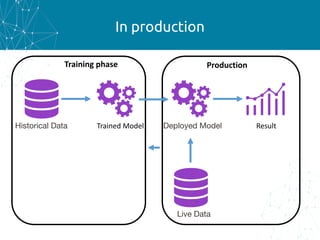

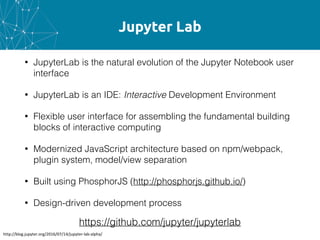

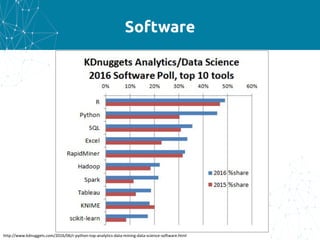

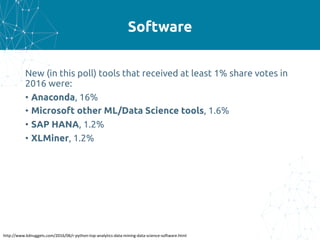

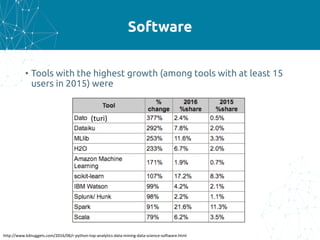

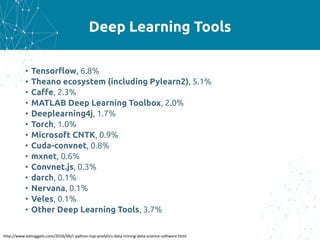

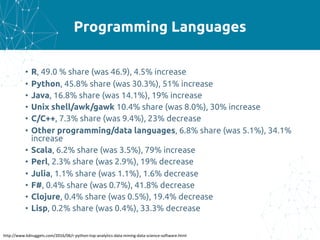

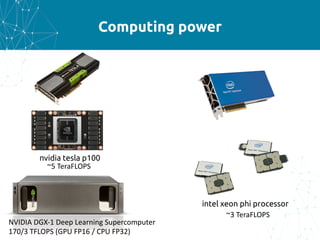

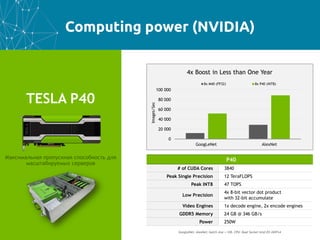

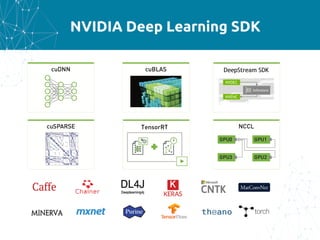

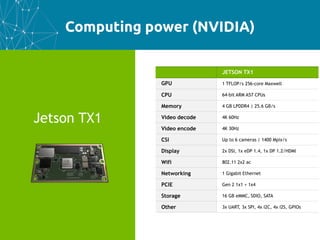

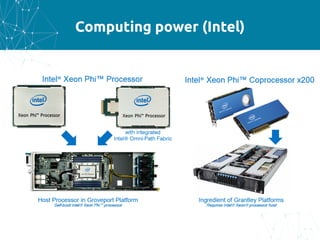

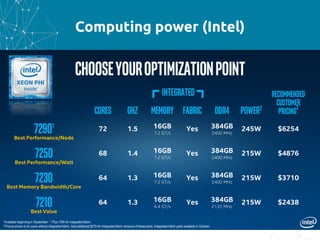

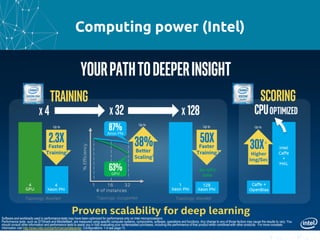

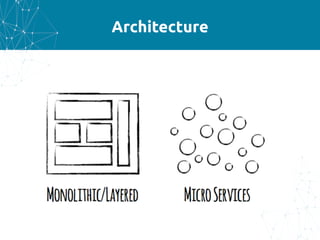

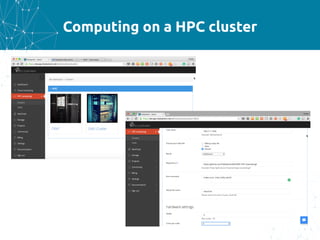

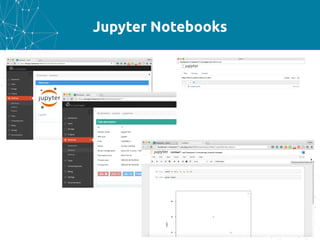

The document outlines the infrastructure and tools that data scientists rely on, covering production environments, software, programming languages, visualization, and computing power. It discusses the evolution of JupyterLab, popular software and deep learning tools, and the growing importance of cloud services in data science workflows. It also introduces FlyElephant, a platform intended to provide collaborative and efficient computing resources for data science projects.