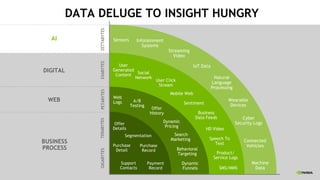

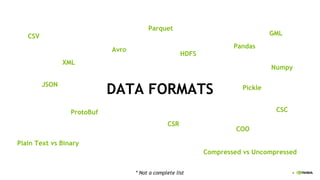

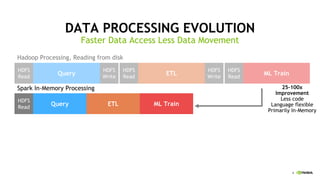

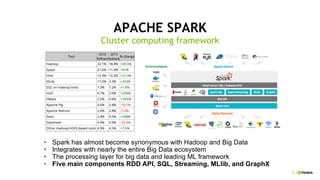

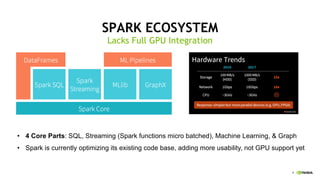

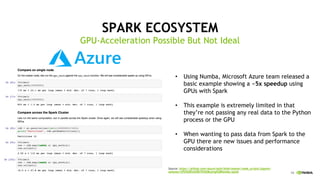

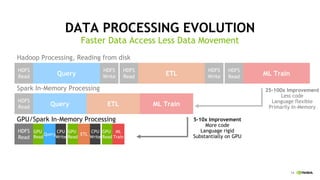

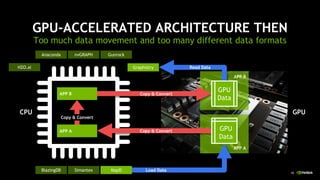

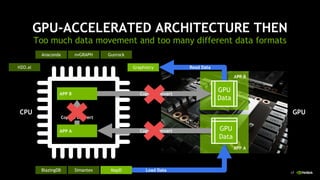

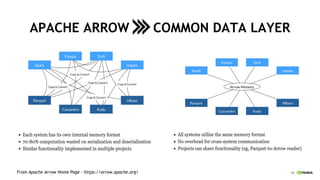

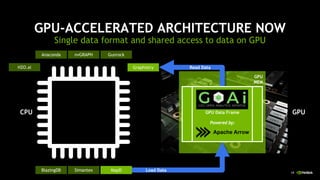

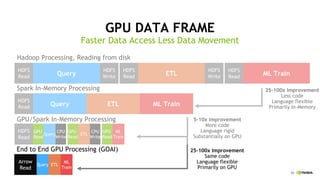

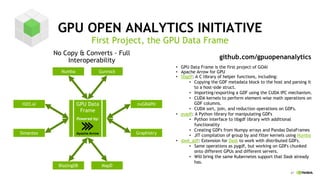

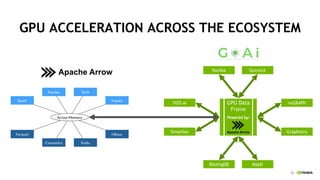

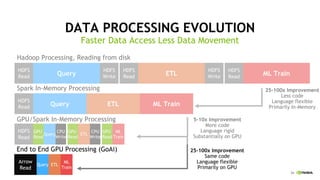

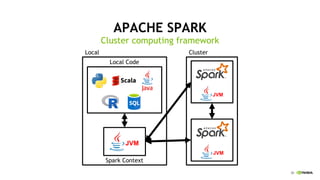

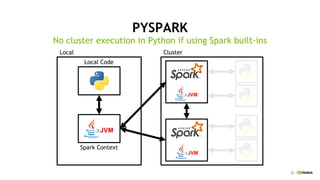

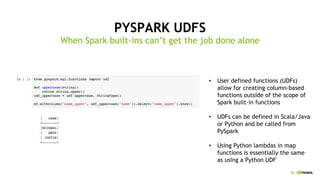

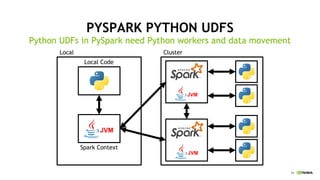

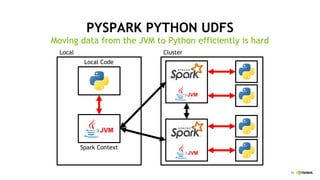

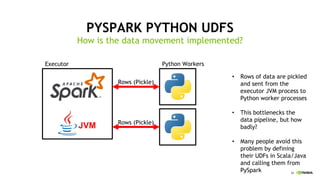

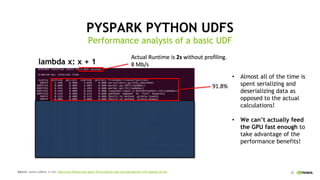

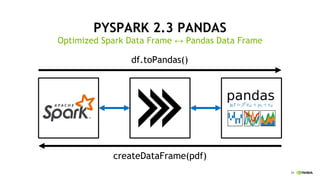

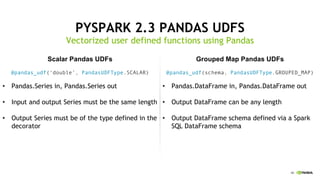

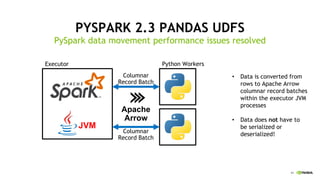

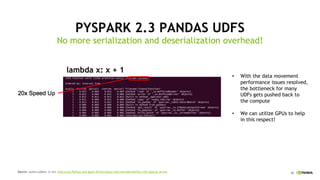

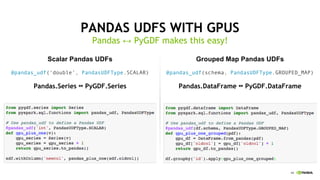

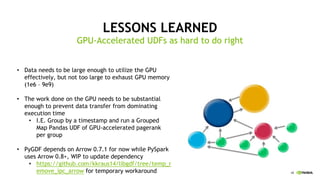

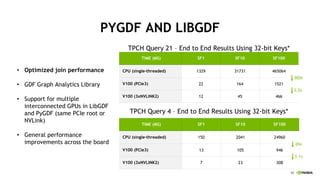

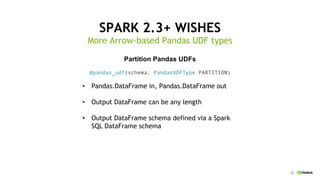

This document discusses accelerating Python user-defined functions (UDFs) in PySpark using Numba and PyGDF. It describes how moving data between the JVM and the Python workers is currently a bottleneck for PySpark Python UDFs. With Apache Arrow, data can be transferred in a columnar format, which avoids row-by-row serialization and improves performance. PyGDF lets users define UDFs that operate directly on GPU data frames, using Numba to compile them for further acceleration. This makes it possible to use GPUs to speed up complex UDFs in PySpark. Future work includes optimizing joins in PyGDF and supporting distributed GPU processing.
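
To make the data-movement point concrete, the following is a minimal sketch that uses only the Python standard library. It does not use Spark, Arrow, Numba, or PyGDF. It contrasts pickling every value separately (one payload per row) with packing a whole column into a single contiguous buffer, which is the general idea behind a columnar transfer. The function names, the `udf` body, and the 1,000-value column are all assumptions made for illustration, not anything taken from the slides.

```python
import pickle
from array import array


def serialize_rows(values):
    """Row-at-a-time: pickle each value on its own (one payload per row)."""
    return [pickle.dumps(v) for v in values]


def deserialize_rows(payloads):
    """Unpickle each row payload individually."""
    return [pickle.loads(p) for p in payloads]


def serialize_column(values):
    """Columnar: pack every value into one contiguous float64 buffer."""
    return array("d", values).tobytes()


def deserialize_column(buffer):
    """Rebuild the float64 column from a single buffer."""
    column = array("d")
    column.frombytes(buffer)
    return column


def udf(x):
    # Hypothetical UDF, used only for illustration.
    return x * 2.0 + 1.0


# Hypothetical input column of 1,000 floats.
values = [float(i) for i in range(1000)]

# Path 1: one serialized payload per row.
row_payloads = serialize_rows(values)
row_result = [udf(x) for x in deserialize_rows(row_payloads)]

# Path 2: one serialized buffer for the whole column.
column_buffer = serialize_column(values)
column_result = [udf(x) for x in deserialize_column(column_buffer)]

row_bytes = sum(len(p) for p in row_payloads)
print(len(row_payloads), "row payloads,", row_bytes, "bytes in total")
print("1 column buffer,", len(column_buffer), "bytes")
```

Both paths produce the same results, but the columnar path hands over one buffer instead of a thousand separate payloads. In a real pipeline a vectorized or JIT-compiled UDF would then run over the whole column at once rather than in a Python loop as in this sketch.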