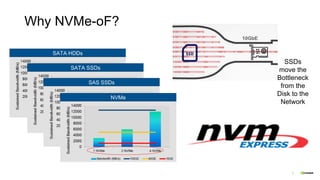

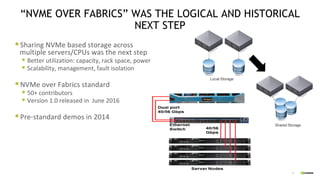

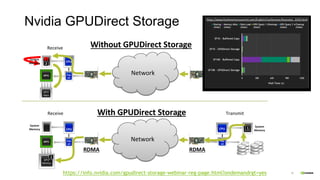

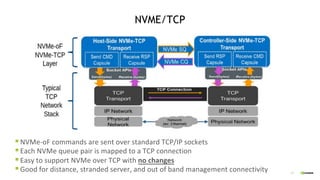

NVMe over Fabrics (NVMe-oF) allows NVMe-based storage to be shared across multiple servers over a network, which improves resource utilization and scalability compared to direct-attached storage. It preserves NVMe performance by carrying commands and data end-to-end over the fabric, using technologies such as remote direct memory access (RDMA) that bypass legacy storage stacks. This enables applications like composable infrastructure, with RDMA providing near-local performance. While NVMe-oF can use different transports, RDMA has been the most common because of the low latency it provides.
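
As a rough illustration of how a host might attach to a remote NVMe-oF subsystem, the sketch below assembles an `nvme connect` command line for the nvme-cli tool. The helper name `build_connect_cmd`, the target address, and the subsystem NQN are hypothetical placeholders, and only the `rdma` and `tcp` transports are covered. The code only builds the argument list; it does not run the command.

```python
from typing import List

# Transports handled by this sketch; NVMe-oF defines others (e.g. Fibre Channel)
# whose addressing differs and is not covered here.
SUPPORTED_TRANSPORTS = ("rdma", "tcp")


def build_connect_cmd(transport: str, traddr: str, nqn: str,
                      trsvcid: str = "4420") -> List[str]:
    """Build an nvme-cli 'connect' argument list (hypothetical helper).

    transport: fabric transport, 'rdma' or 'tcp'
    traddr:    target IP address (placeholder value in the example below)
    nqn:       NVMe Qualified Name of the remote subsystem
    trsvcid:   transport service ID, i.e. the port; 4420 is the usual default
    """
    if transport not in SUPPORTED_TRANSPORTS:
        raise ValueError(f"unsupported transport for this sketch: {transport}")
    return [
        "nvme", "connect",
        f"--transport={transport}",
        f"--traddr={traddr}",
        f"--trsvcid={trsvcid}",
        f"--nqn={nqn}",
    ]


if __name__ == "__main__":
    # Placeholder address and NQN, for illustration only.
    cmd = build_connect_cmd("rdma", "192.0.2.10",
                            "nqn.2016-06.io.example:subsys1")
    print(" ".join(cmd))
```

Switching the first argument from `"rdma"` to `"tcp"` is the only change needed to target a different transport in this sketch, which mirrors the point that NVMe-oF keeps the same command set regardless of the fabric underneath.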