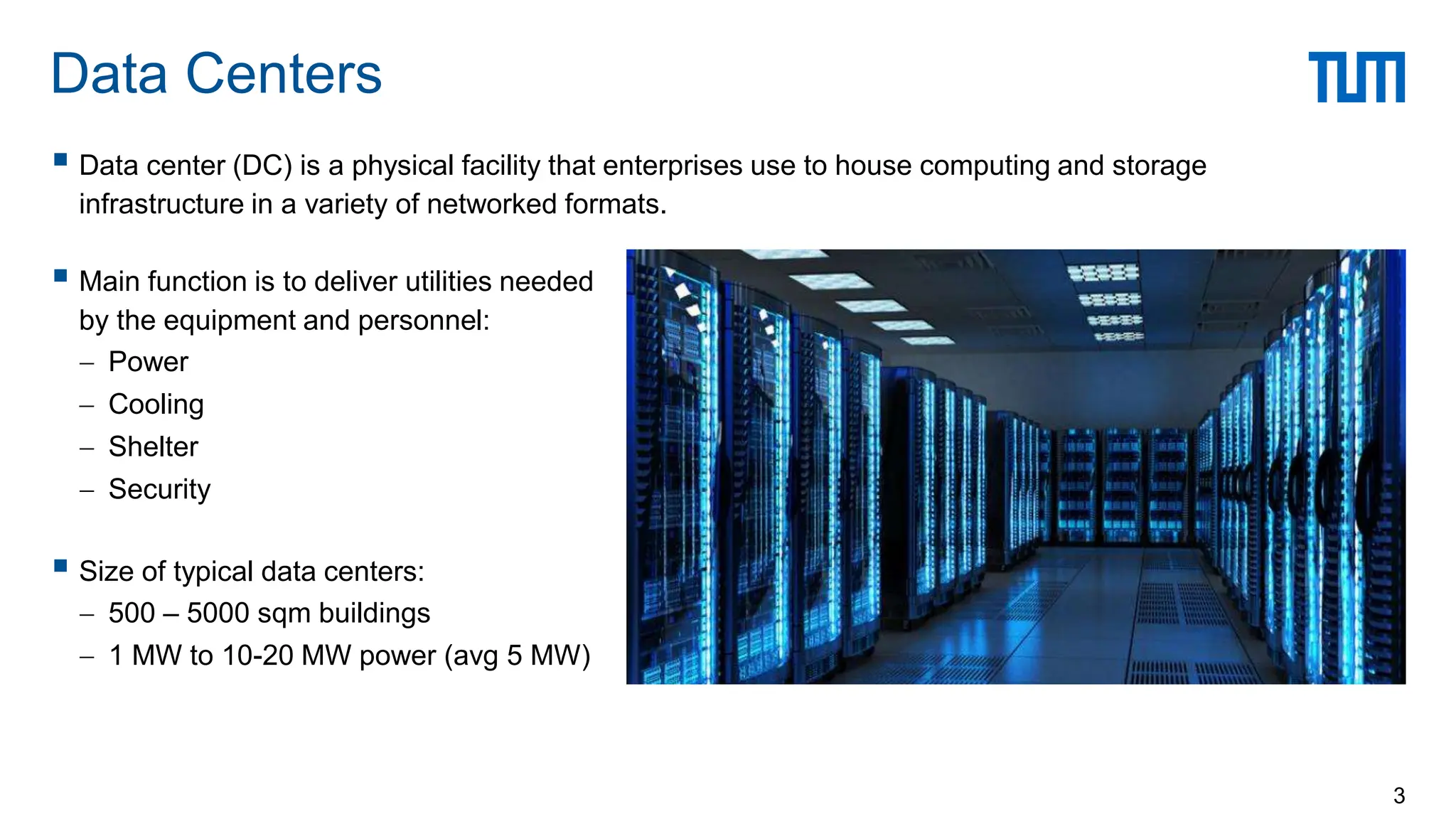

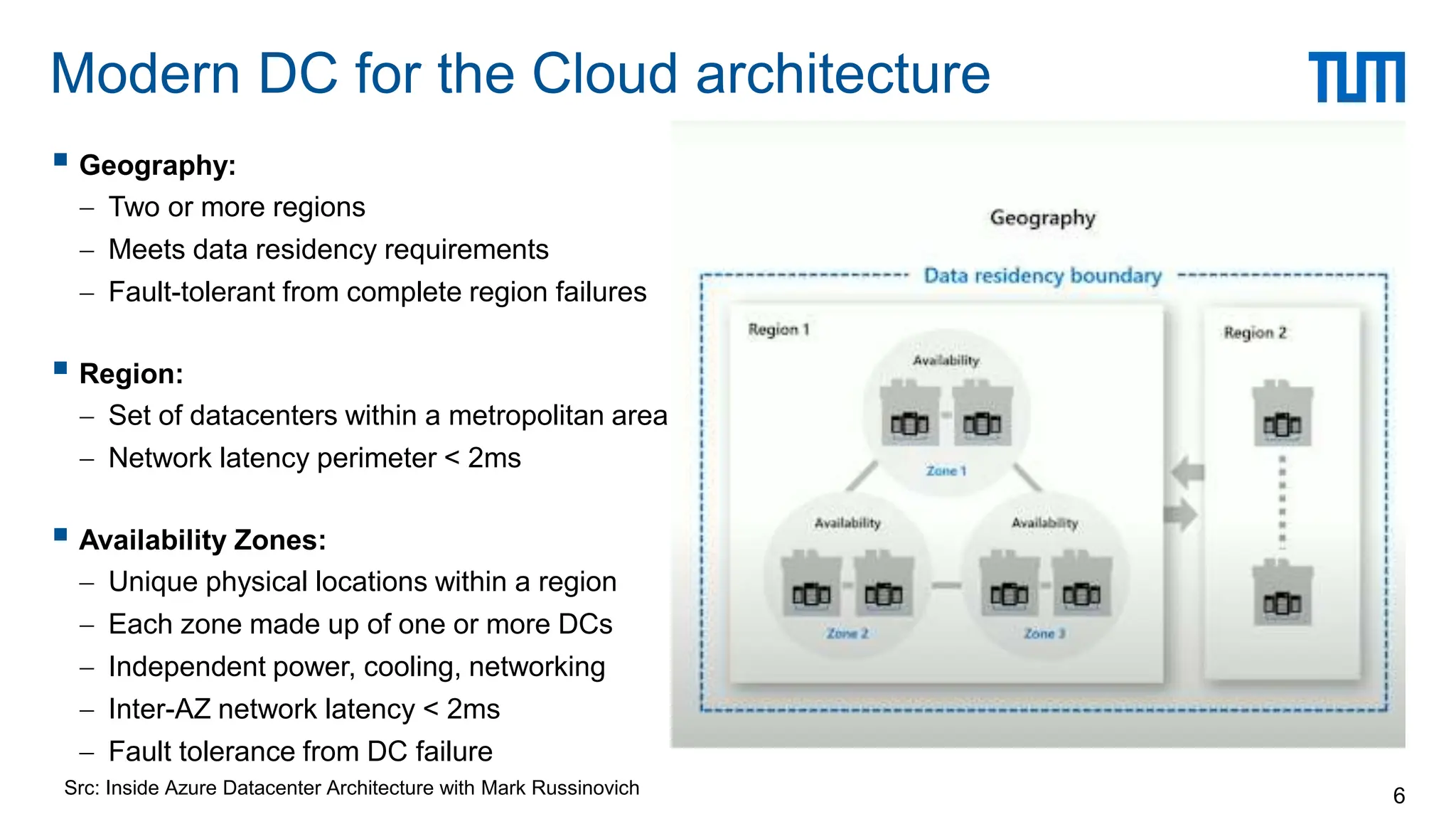

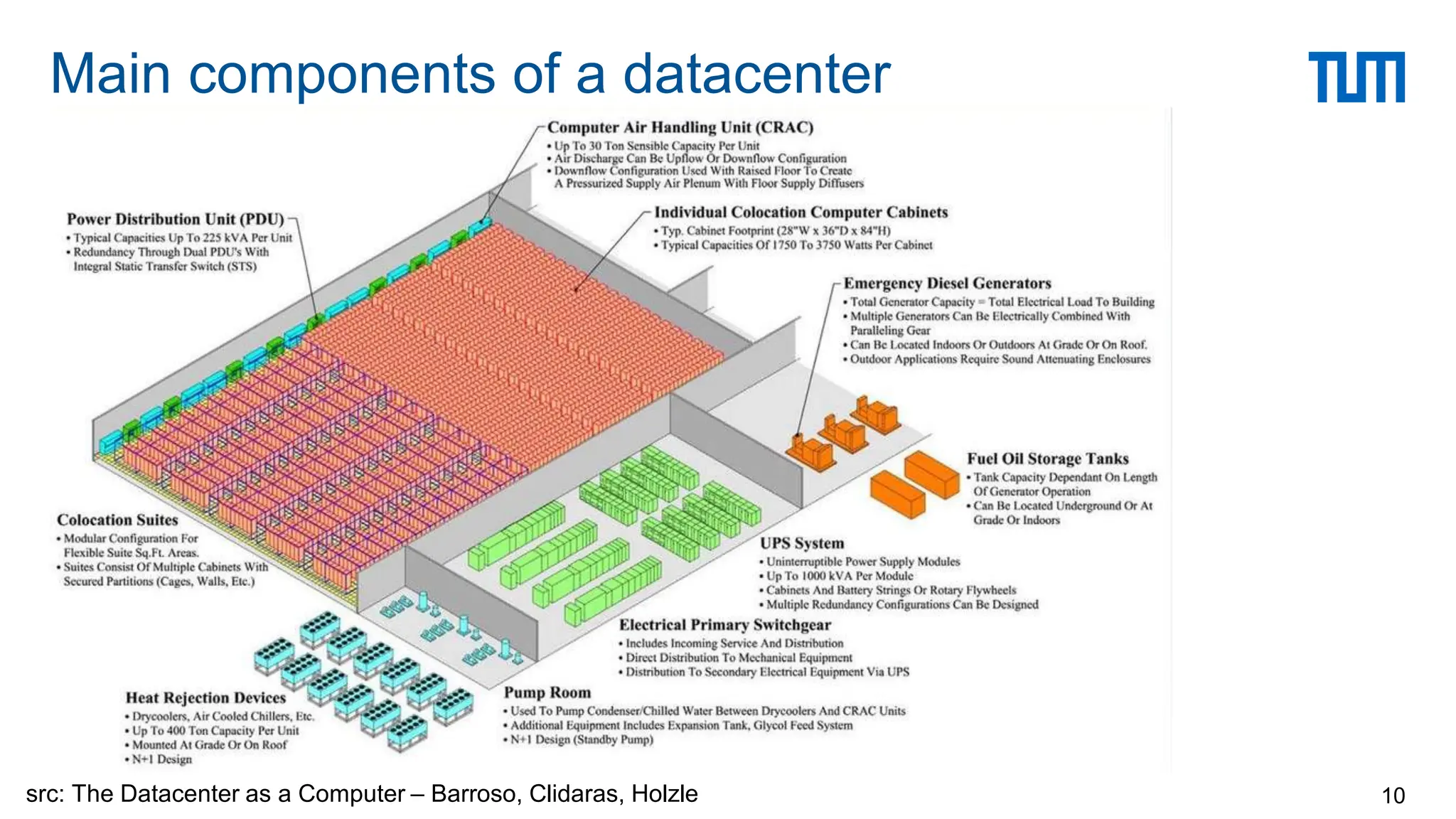

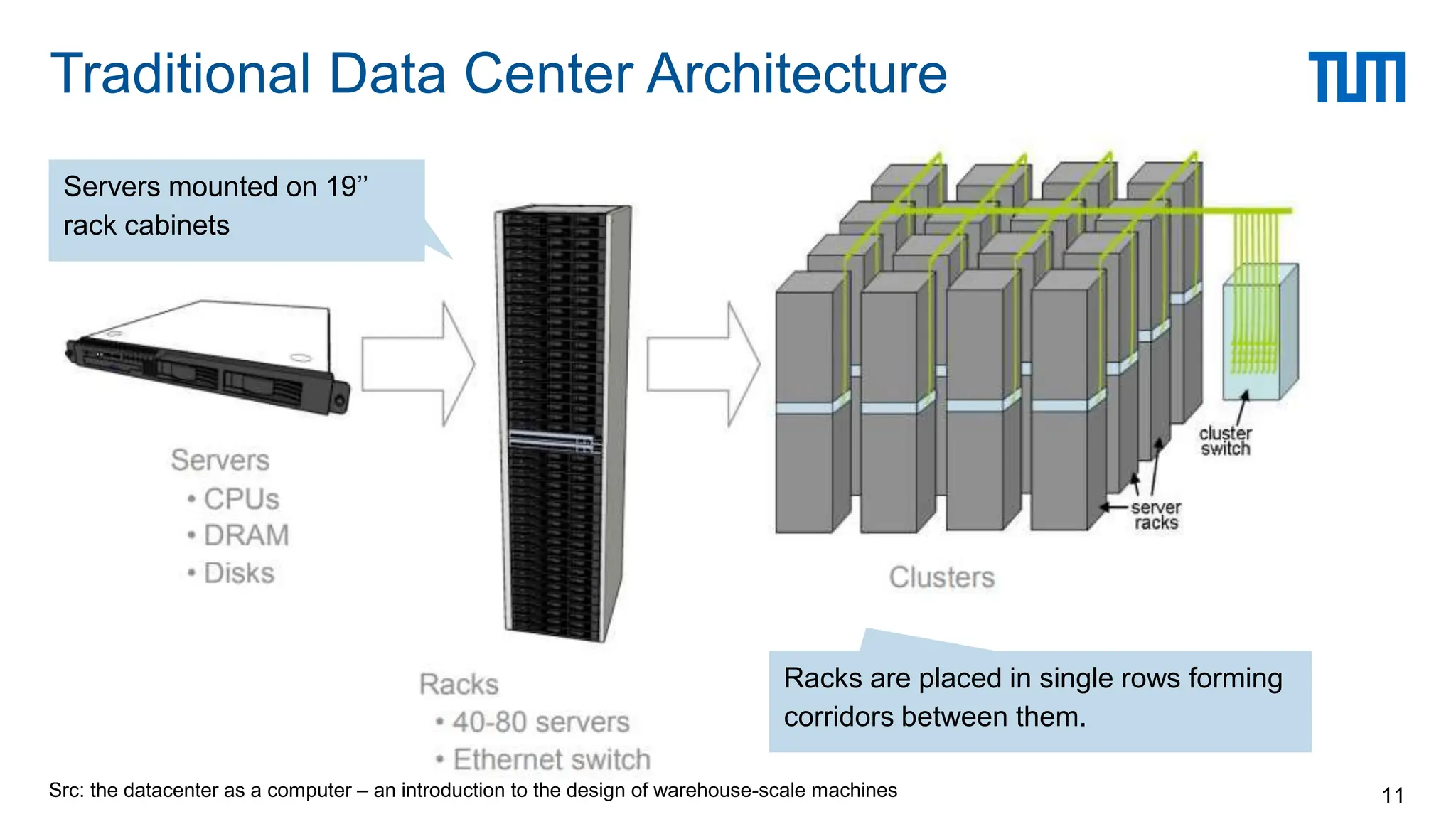

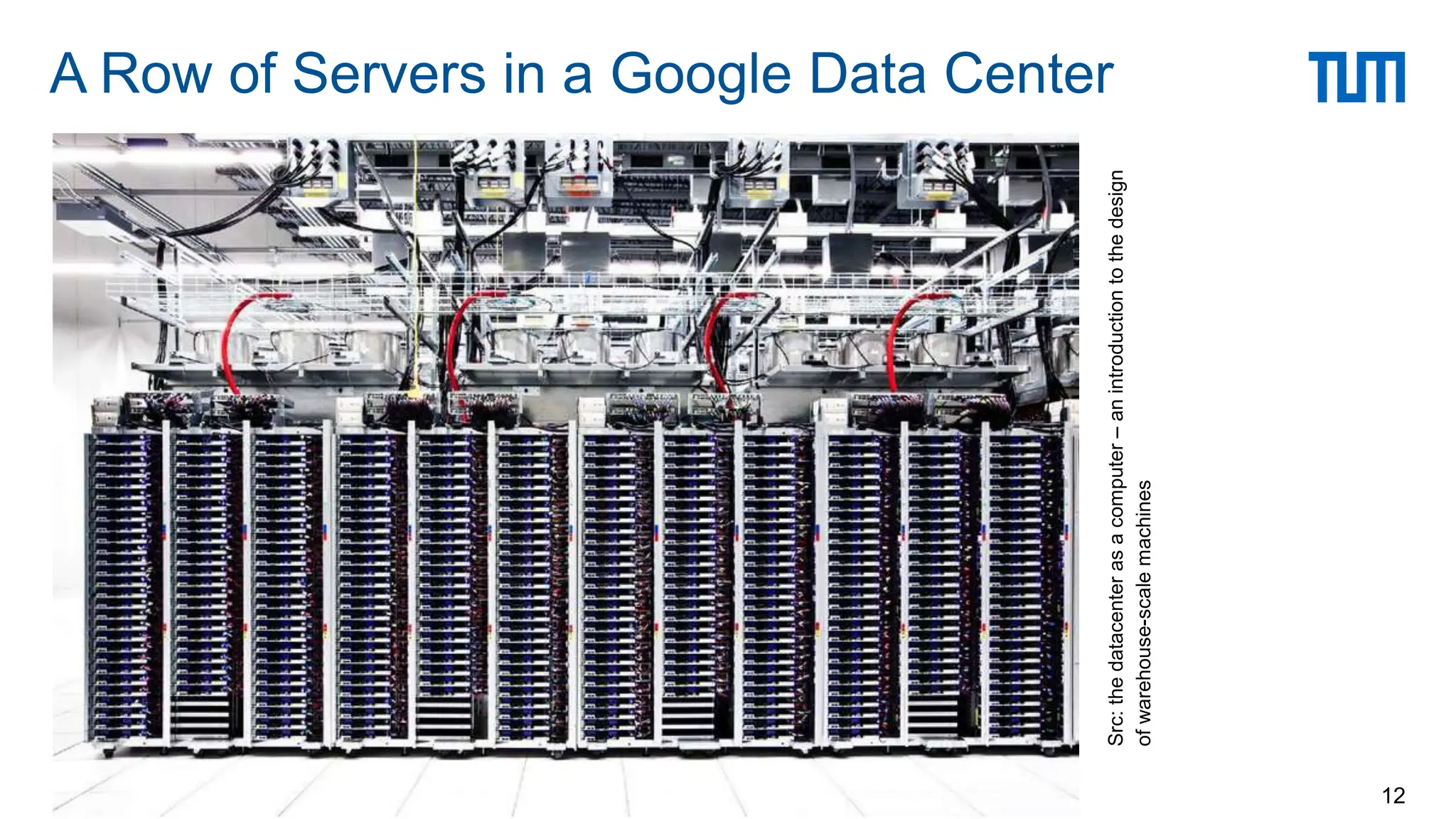

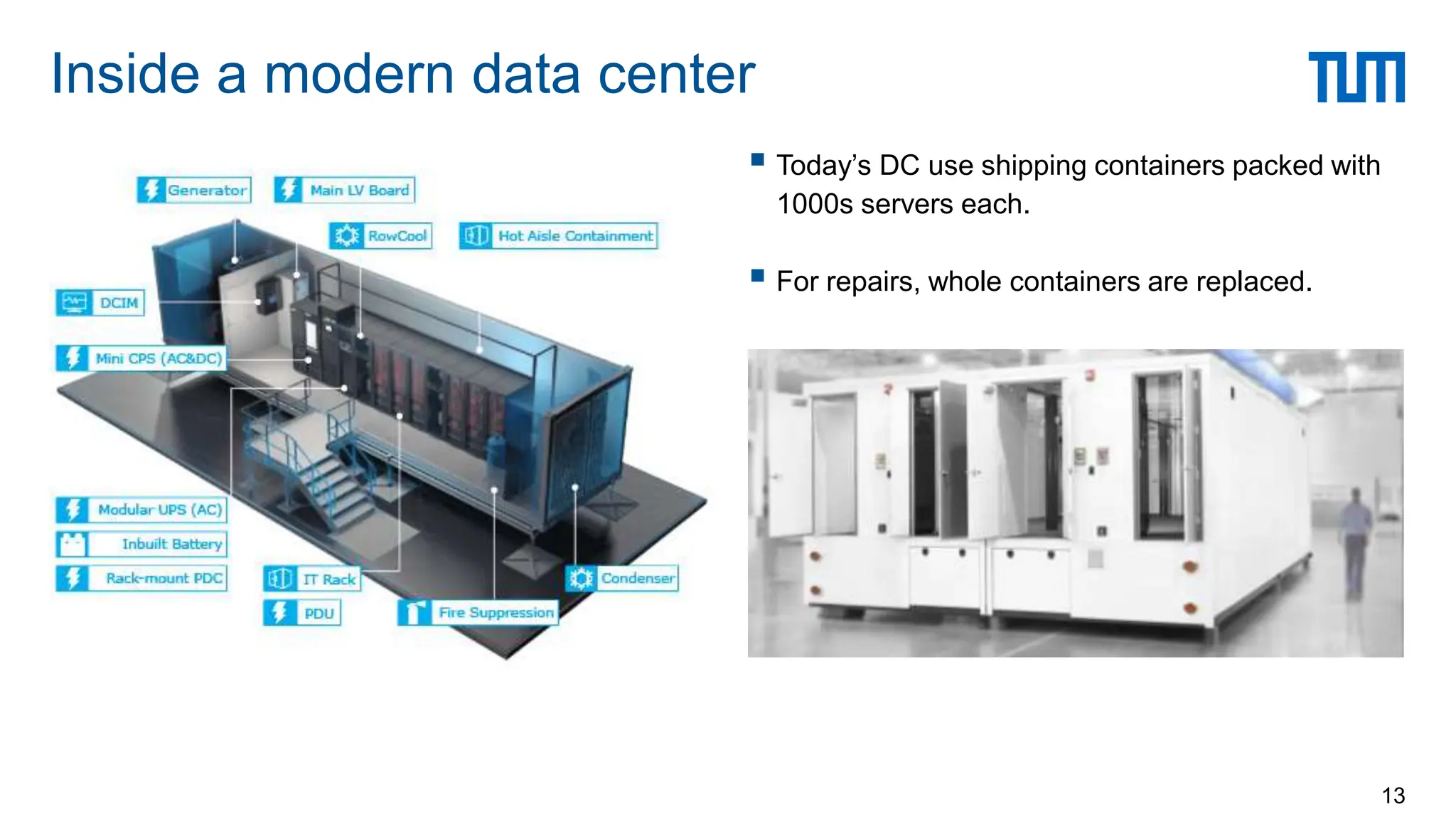

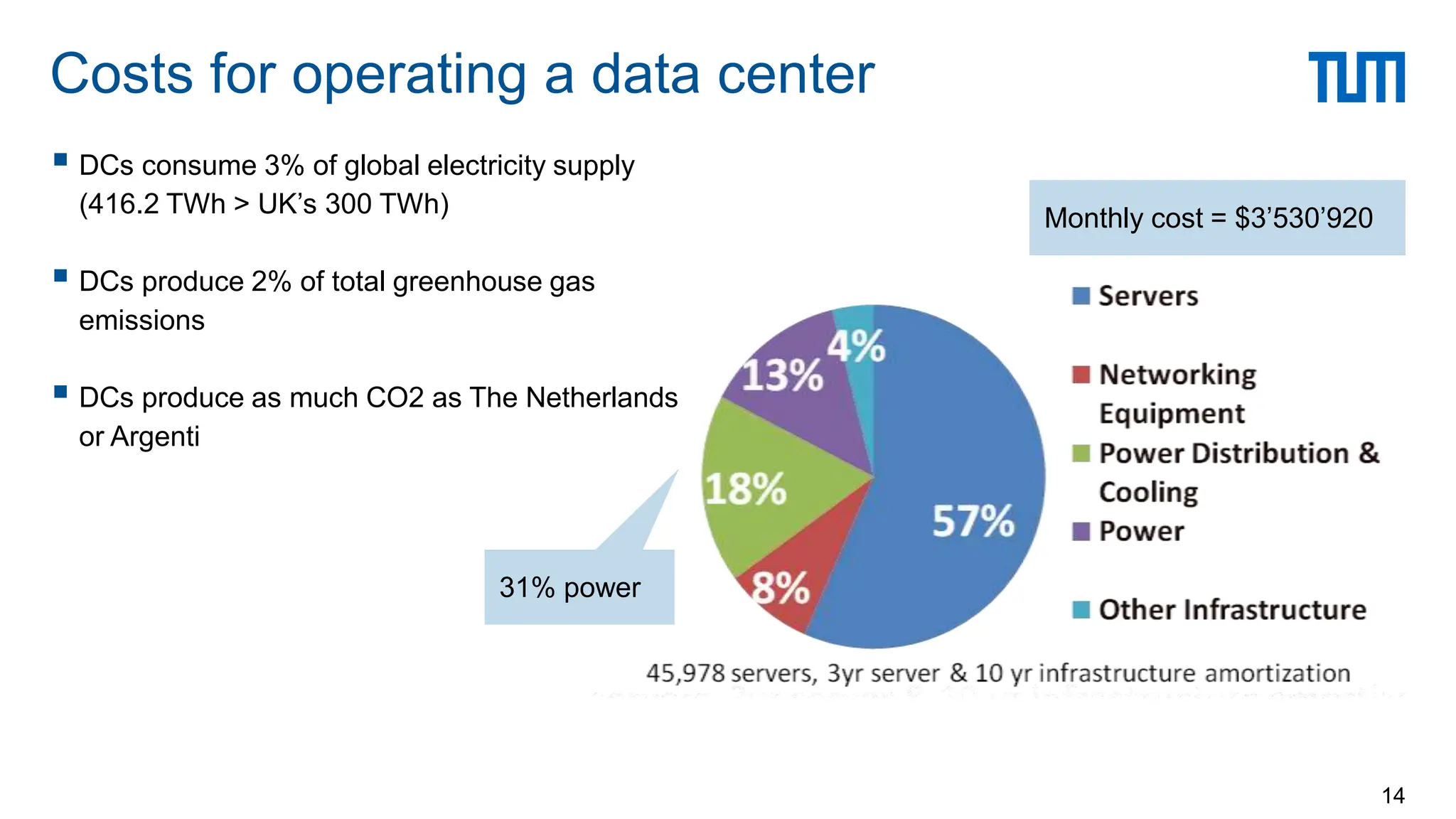

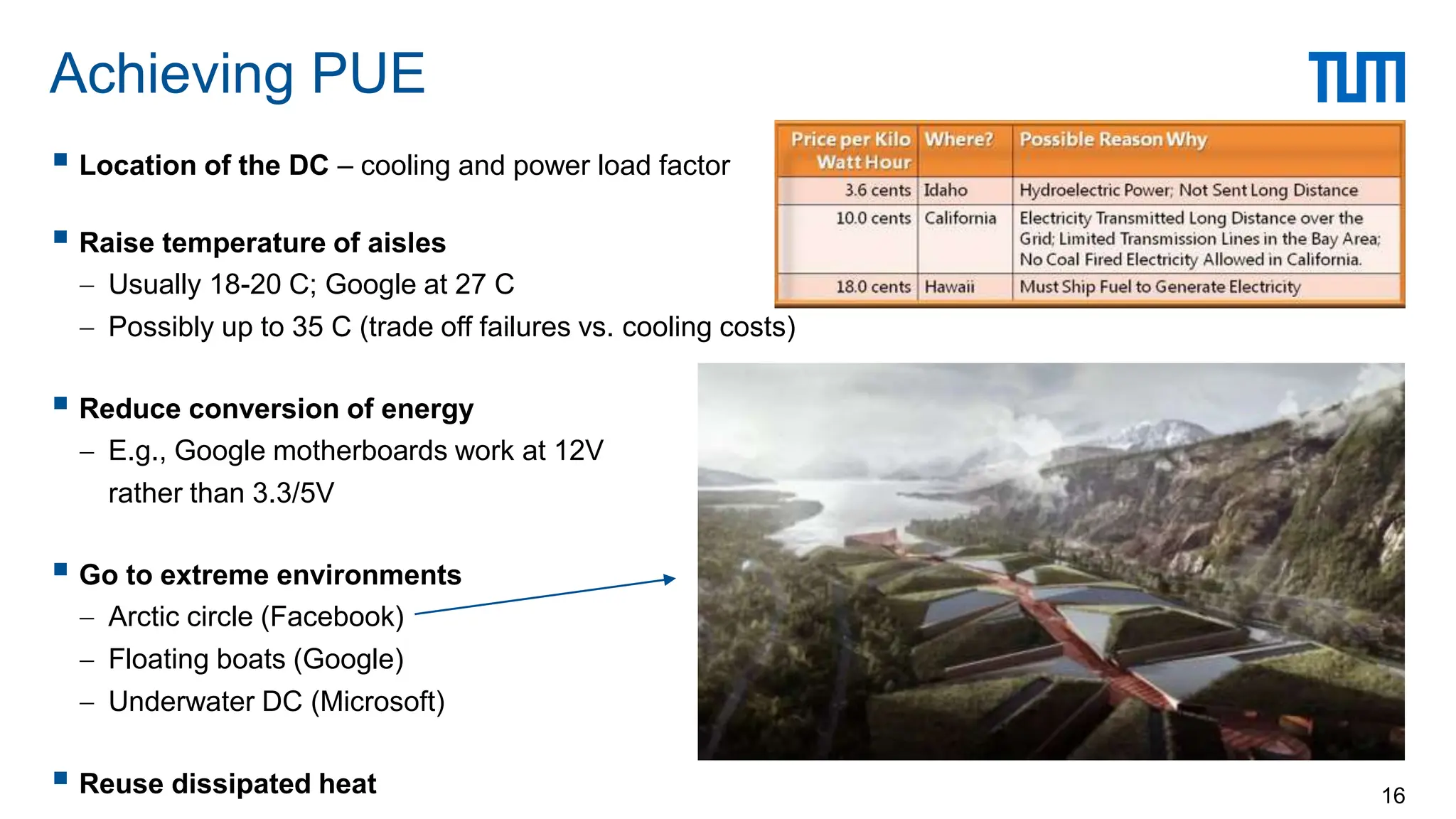

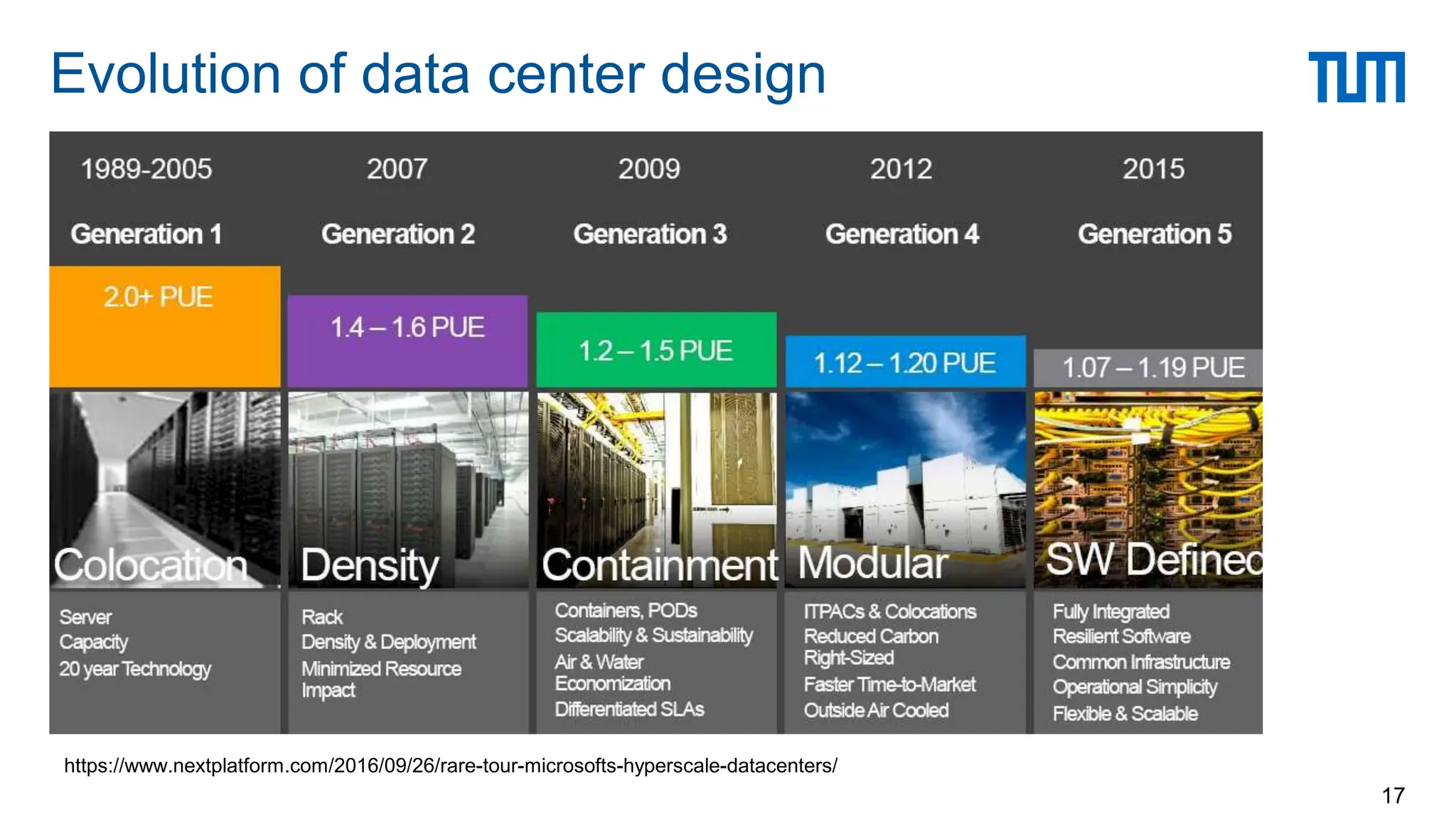

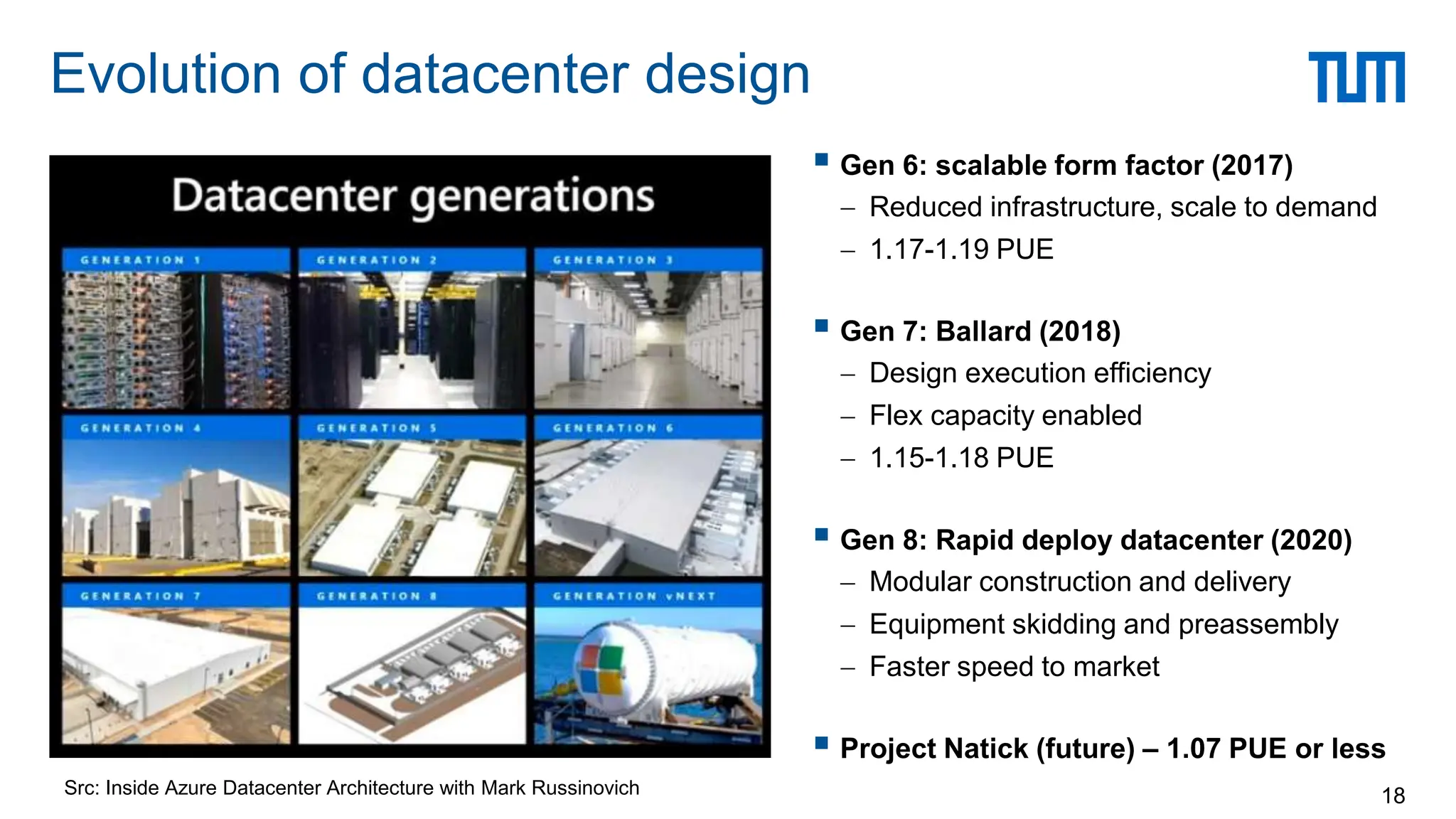

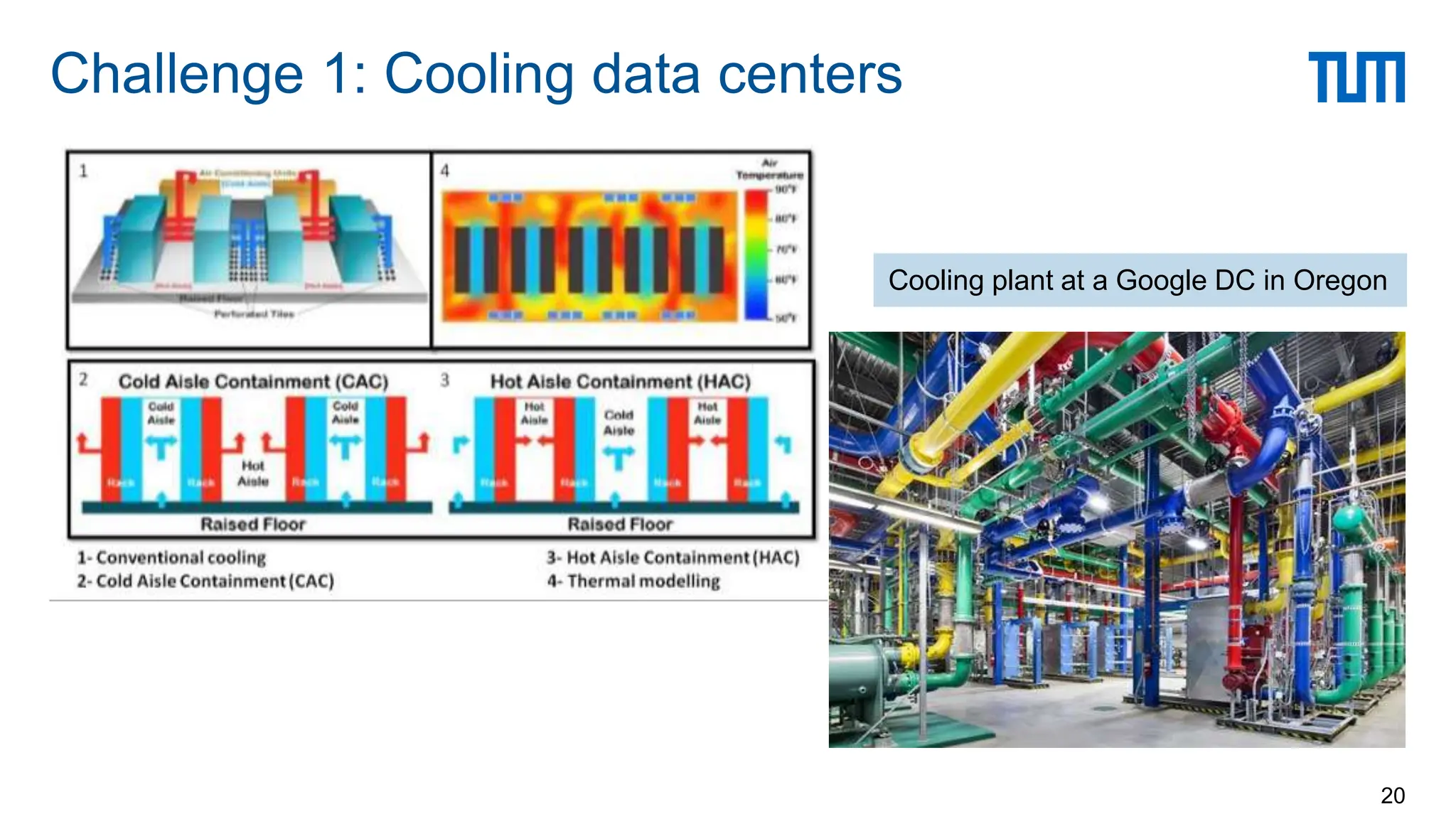

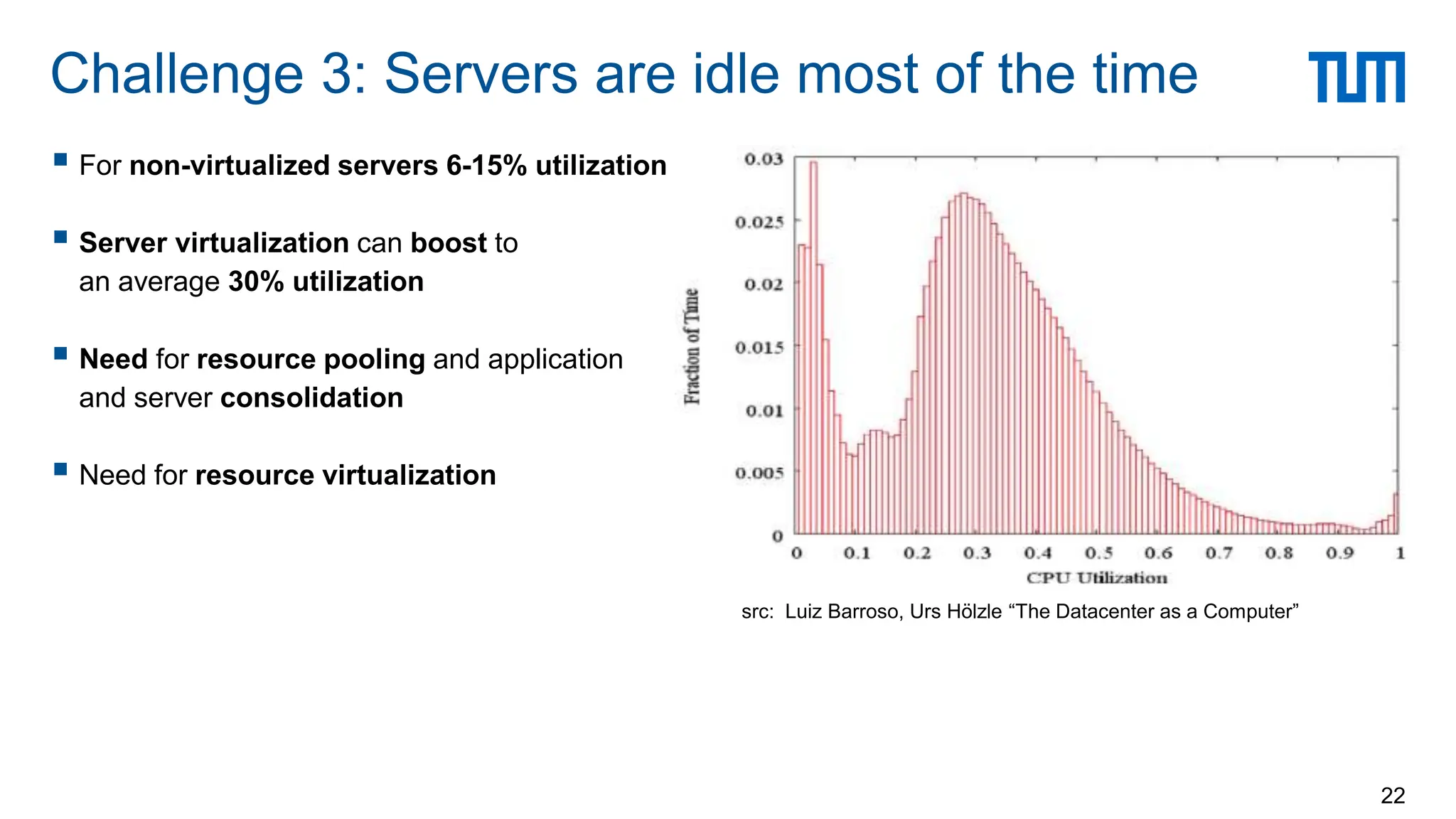

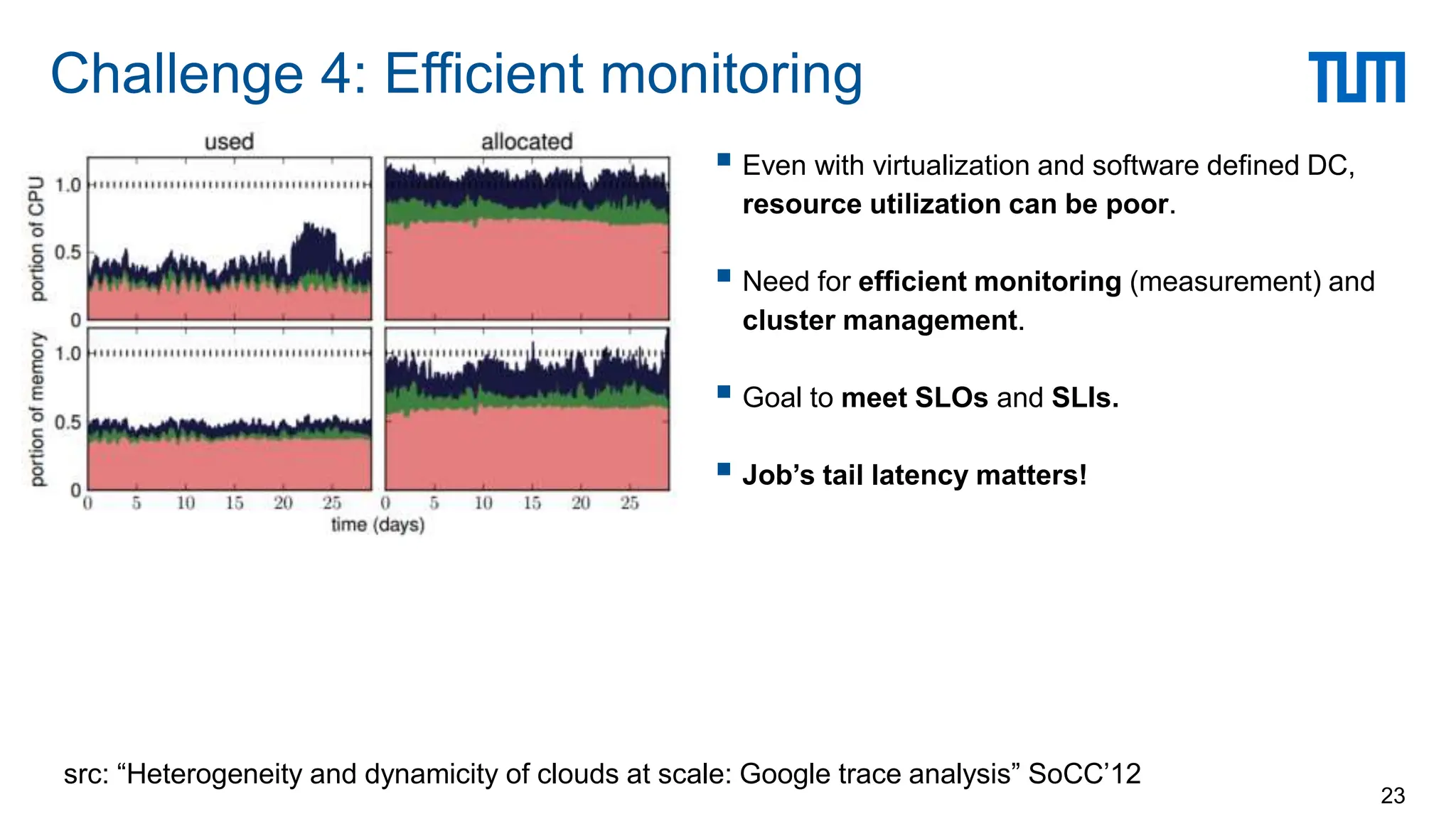

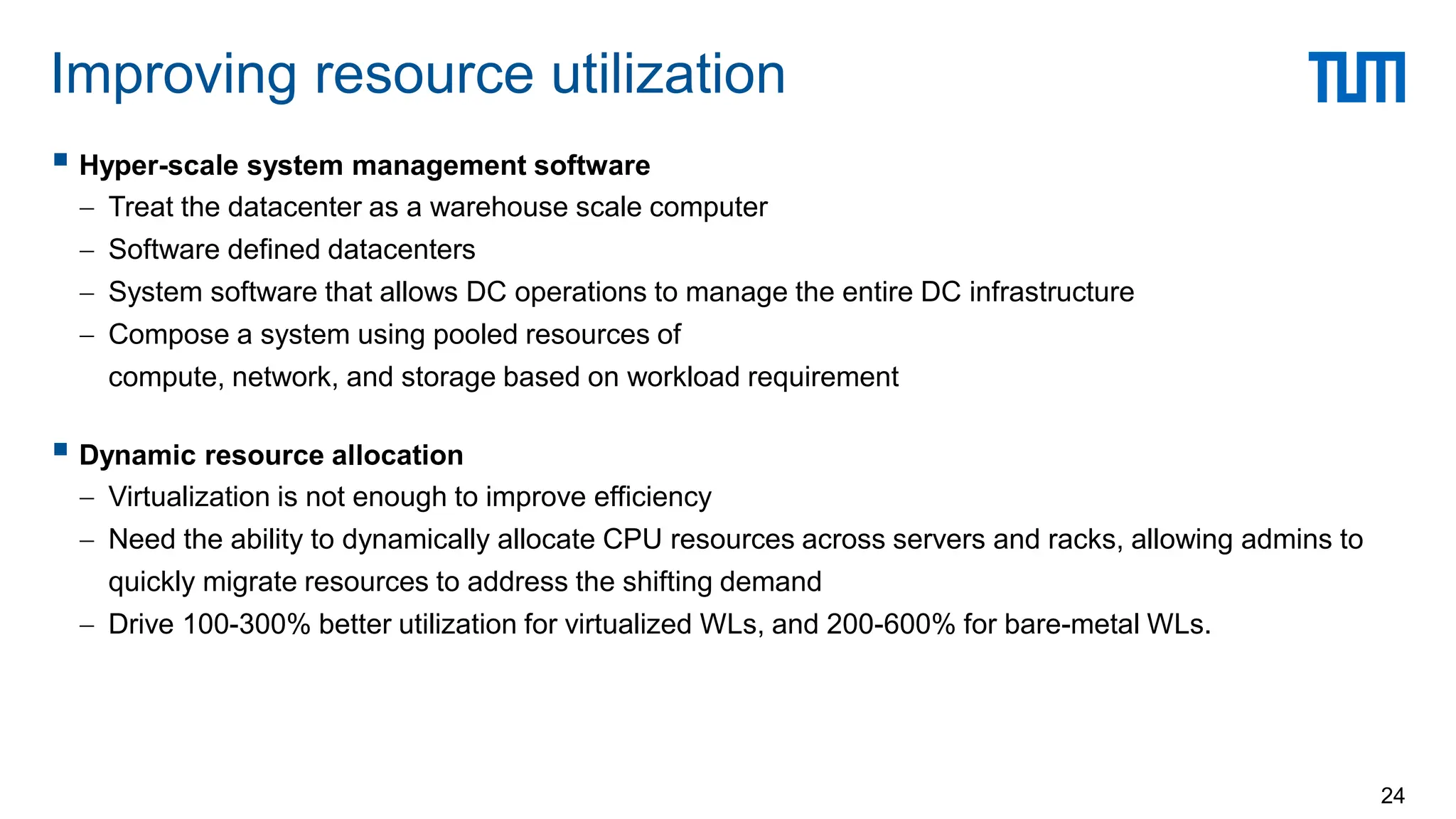

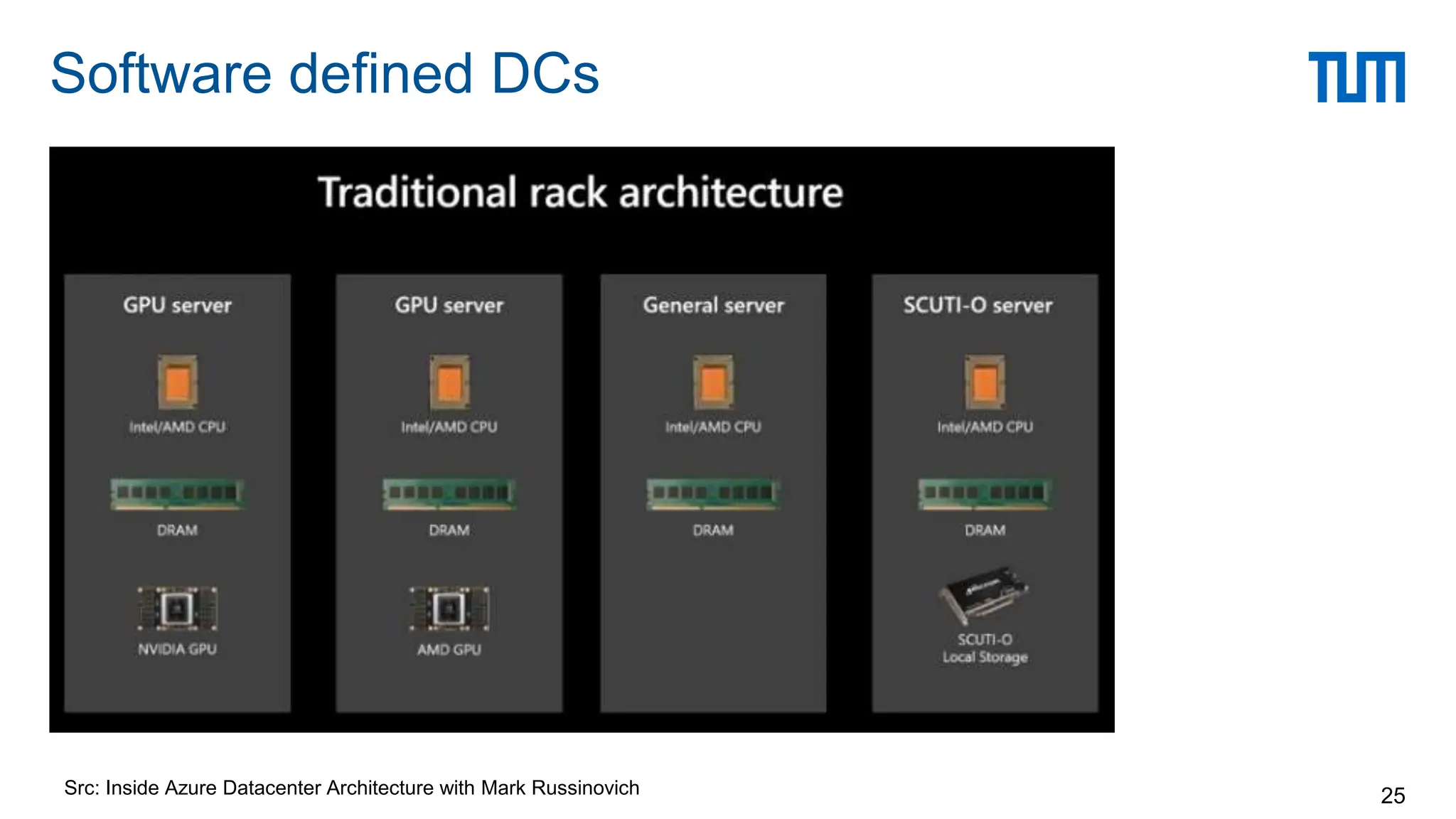

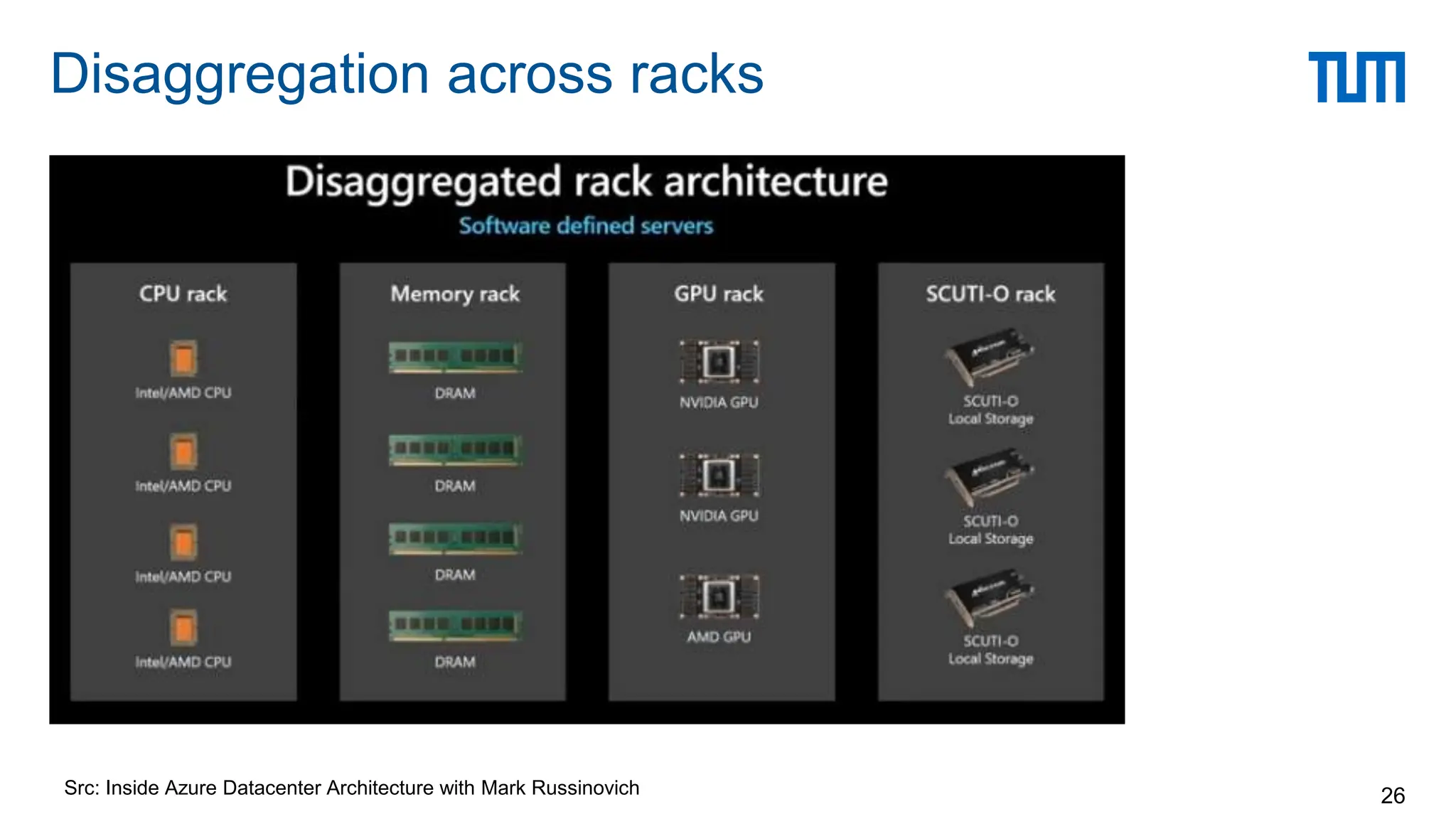

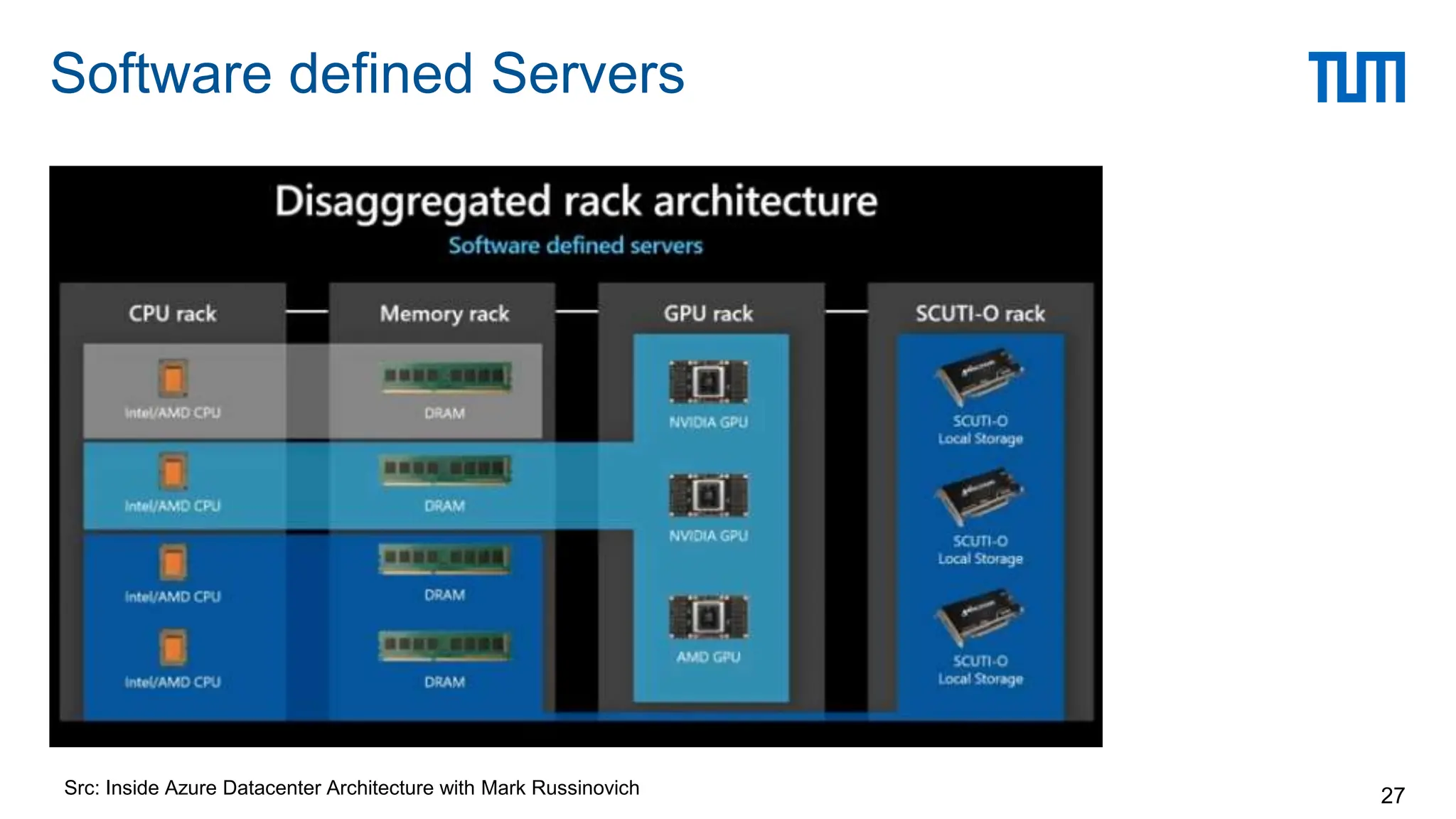

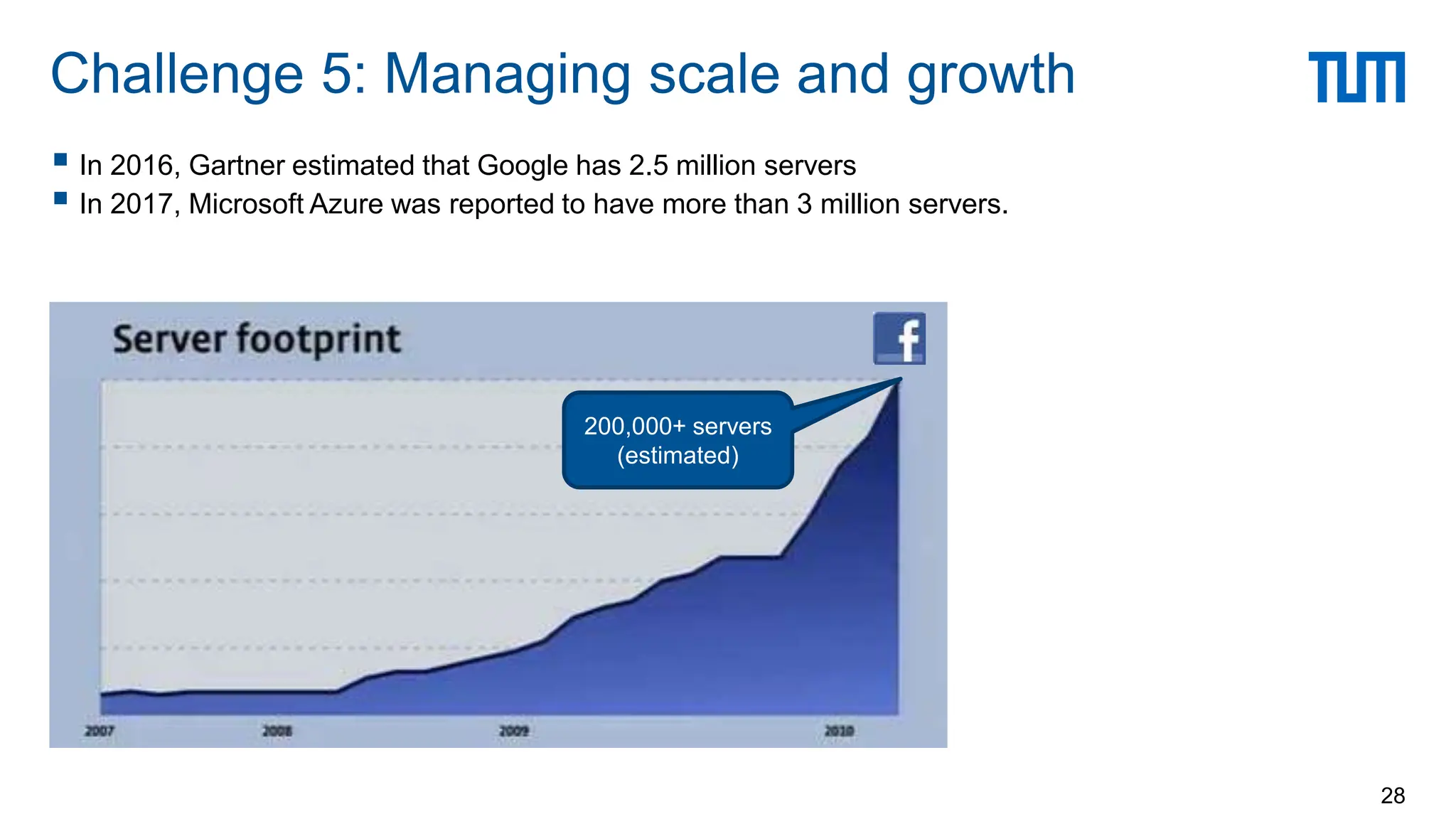

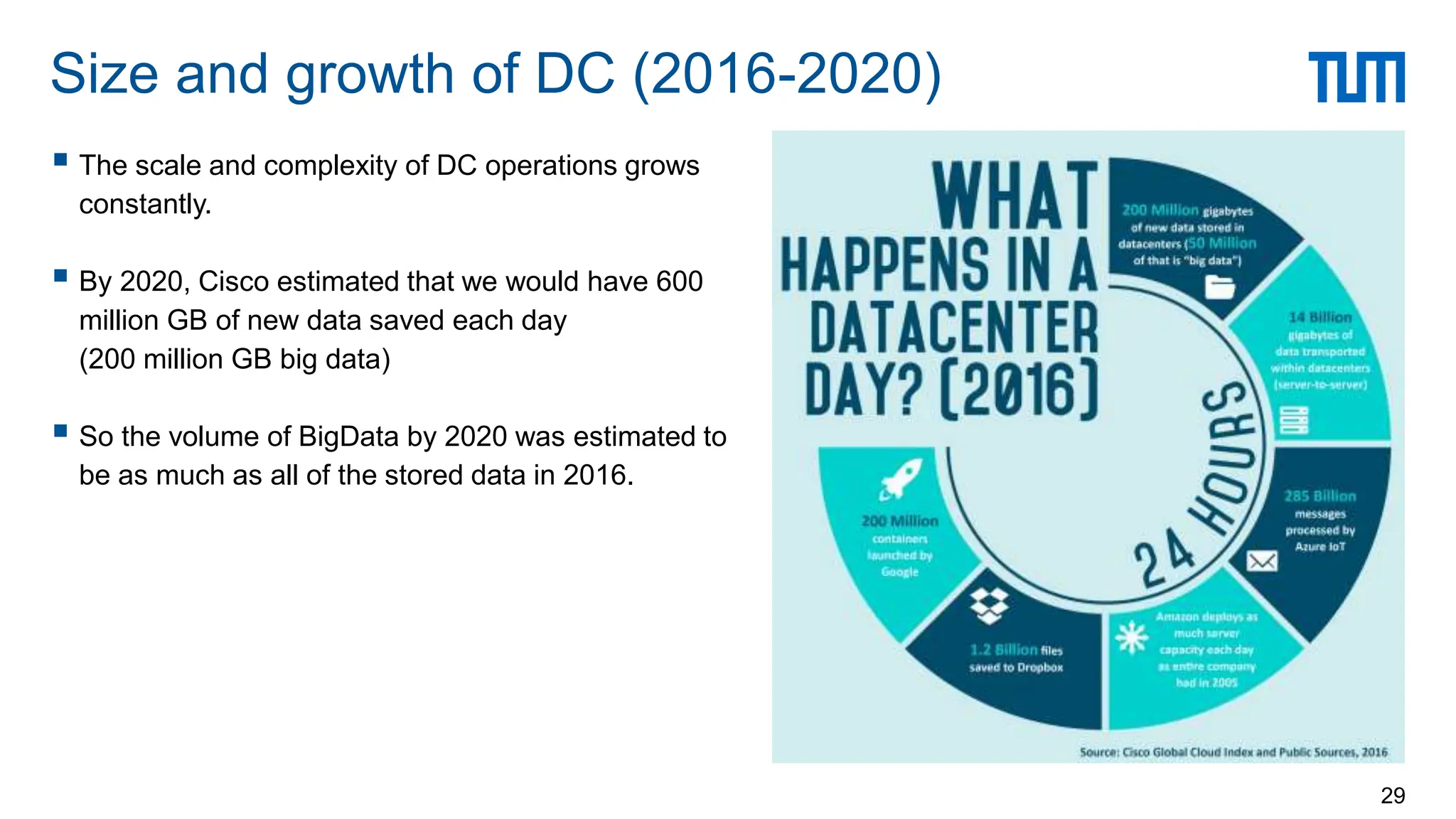

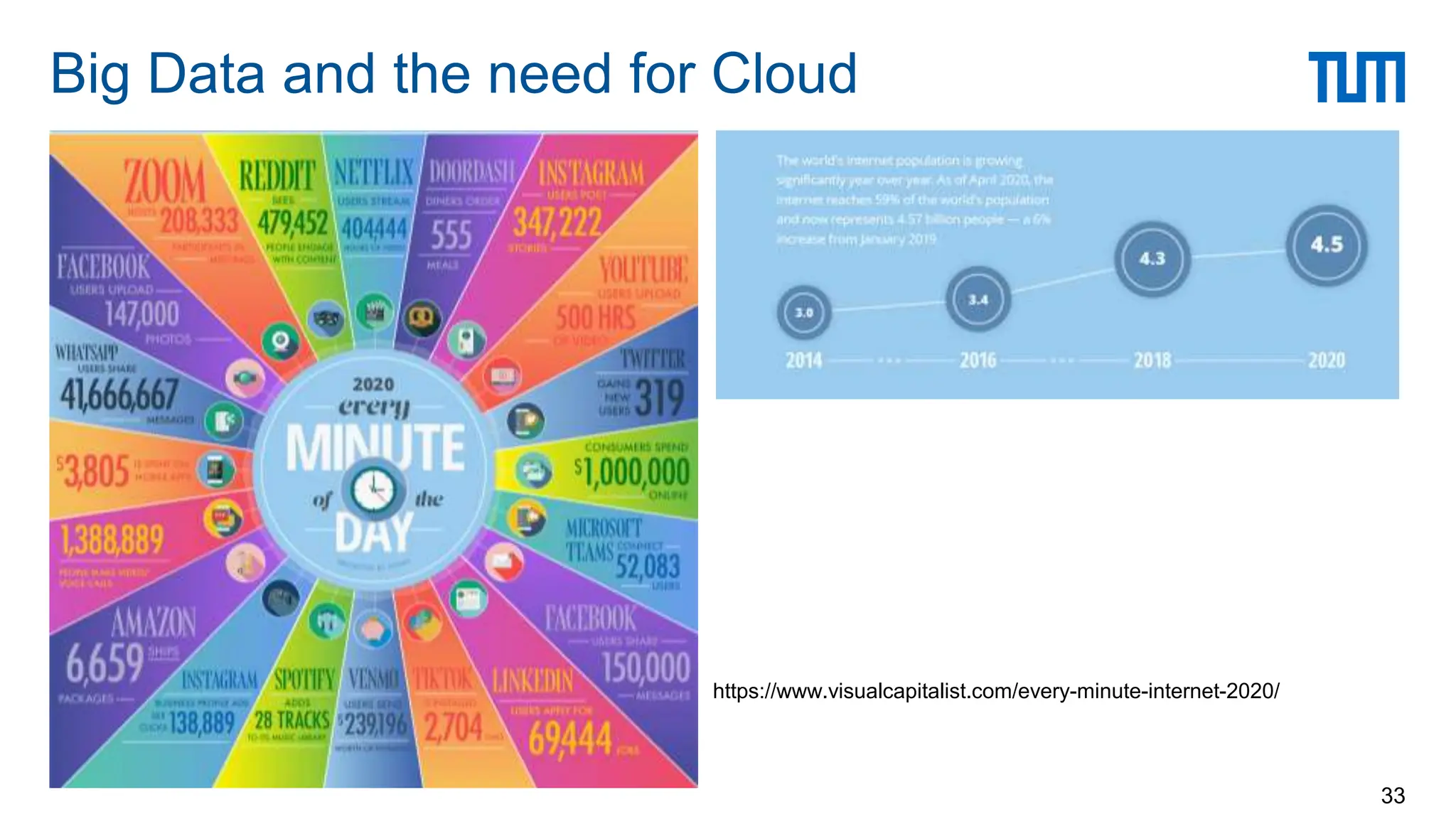

Data centers are large physical facilities that house computing infrastructure for enterprises. They supply the power, cooling, physical security, and shelter that servers and storage equipment need. Cloud providers organize modern data centers into regions and availability zones for fault tolerance, and each zone consists of one or more data centers in close network proximity. Key challenges include cooling equipment efficiently, improving energy proportionality (servers are often idle yet still draw power), raising resource utilization through virtualization and dynamic allocation, and managing the sheer scale of infrastructure and traffic, since cloud providers operate millions of servers worldwide.
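
The energy proportionality challenge can be illustrated with a minimal sketch. It assumes a simple linear power model in which a server draws a fixed idle power plus an amount that grows with utilization. The function names and the wattage figures (`idle_watts=100.0`, `peak_watts=200.0`) are hypothetical values chosen for illustration, not measurements from the slides.

```python
def server_power_watts(utilization, idle_watts=100.0, peak_watts=200.0):
    """Power draw under an assumed linear model (illustrative figures only).

    utilization is a fraction between 0.0 (idle) and 1.0 (fully loaded).
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0.0 and 1.0")
    return idle_watts + (peak_watts - idle_watts) * utilization


def relative_efficiency(utilization, idle_watts=100.0, peak_watts=200.0):
    """Work done per watt, normalized so a fully loaded server scores 1.0.

    A perfectly energy-proportional server (idle_watts == 0) would score
    1.0 at every non-zero utilization level.
    """
    power = server_power_watts(utilization, idle_watts, peak_watts)
    return utilization * peak_watts / power


if __name__ == "__main__":
    # Compare efficiency across a range of load levels.
    for u in (0.1, 0.3, 0.5, 1.0):
        watts = server_power_watts(u)
        eff = relative_efficiency(u)
        print(f"utilization={u:.0%} power={watts:.0f} W efficiency={eff:.2f}")
```

Under these assumed numbers, a server at 10% load still draws more than half of its peak power, so its work per watt is far below what it achieves at full load. This is why consolidating workloads onto fewer, busier servers through virtualization tends to save energy.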