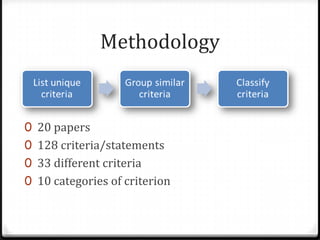

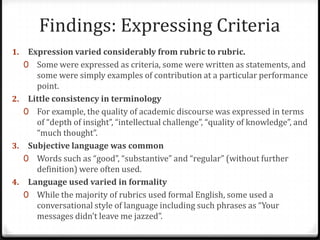

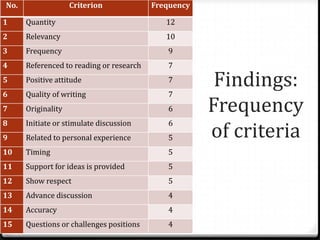

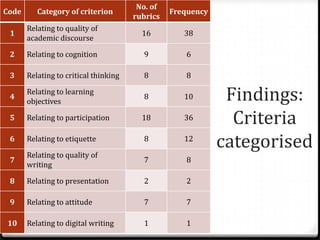

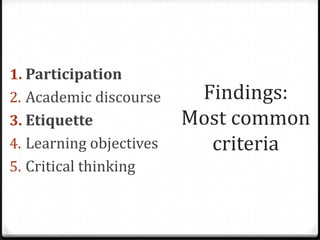

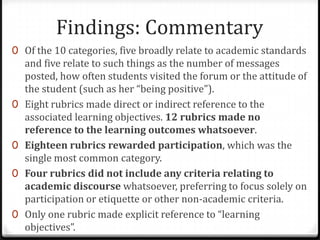

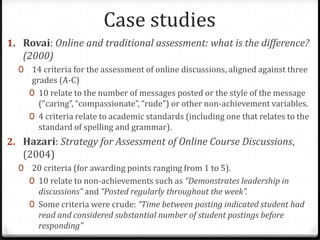

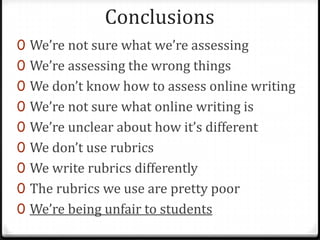

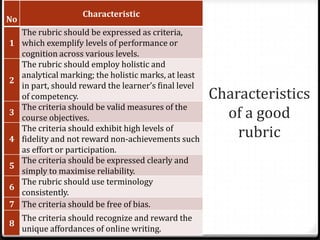

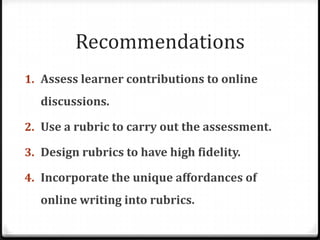

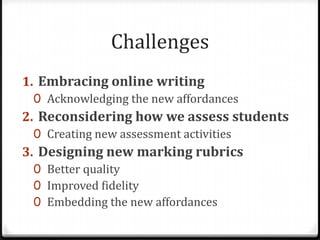

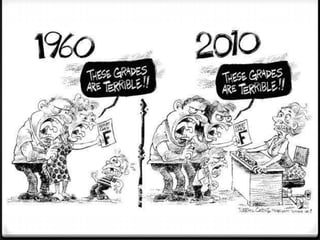

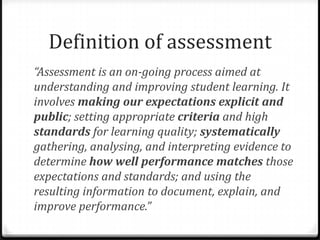

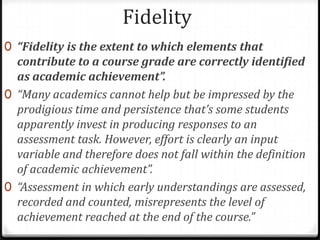

This document summarizes a presentation on assessing online writing. It finds that current practices for assessing online discussions are inconsistent and often reward participation over academic quality. Rubrics varied widely in expression and criteria, and most focused on non-academic factors such as participation rather than critical thinking or learning objectives. The document concludes that current assessment lacks fidelity and recommends designing rubrics that embrace the affordances of online writing and assess learner contributions with high fidelity.

![Challenges: “Most of its advocates [of writing using Web 2.0 tools] offer no guidance on how to conduct assessment that comes to grips with its unique features, its difference from previous forms of student writing, and staff marking or its academic administration.” “The few extant examples appear to encourage and assess superficial learning, and to gloss over the assessment opportunities and implications of Web 2.0’s distinguishing features.”](https://image.slidesharecdn.com/caaconference2010-assessingonlinewriting-100721062149-phpapp01/85/CAA-conference-2010-Assessing-online-writing-14-320.jpg)