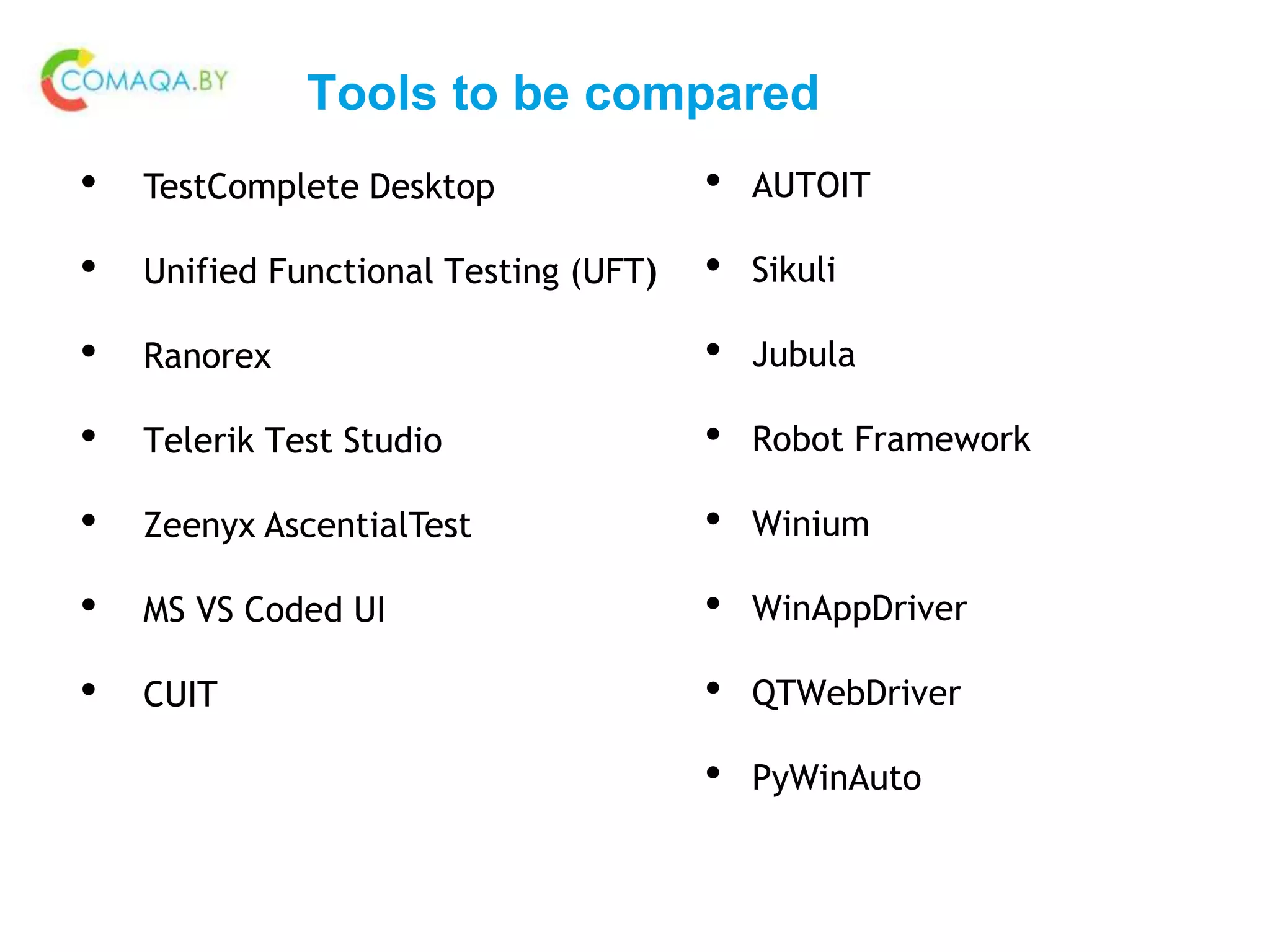

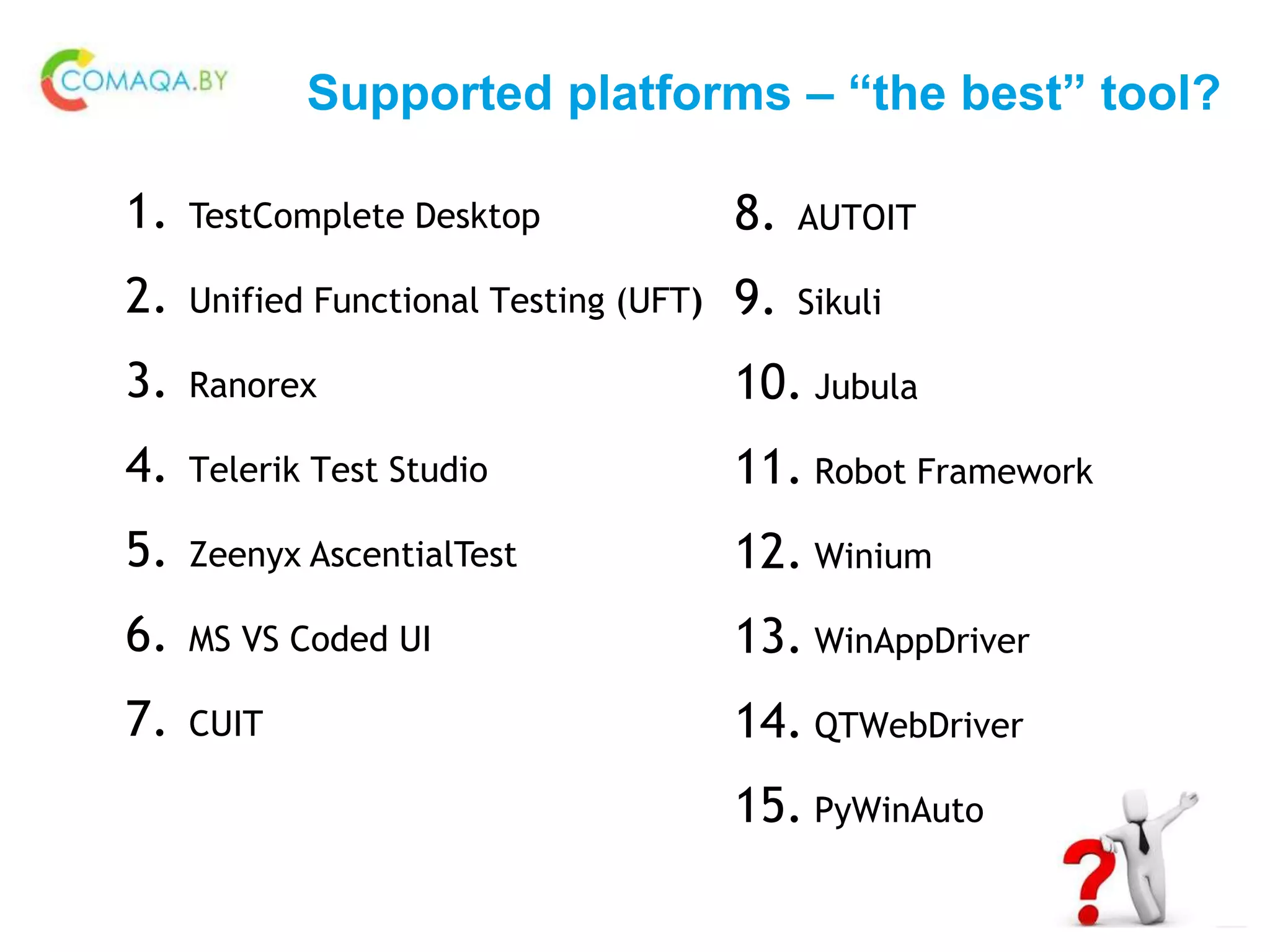

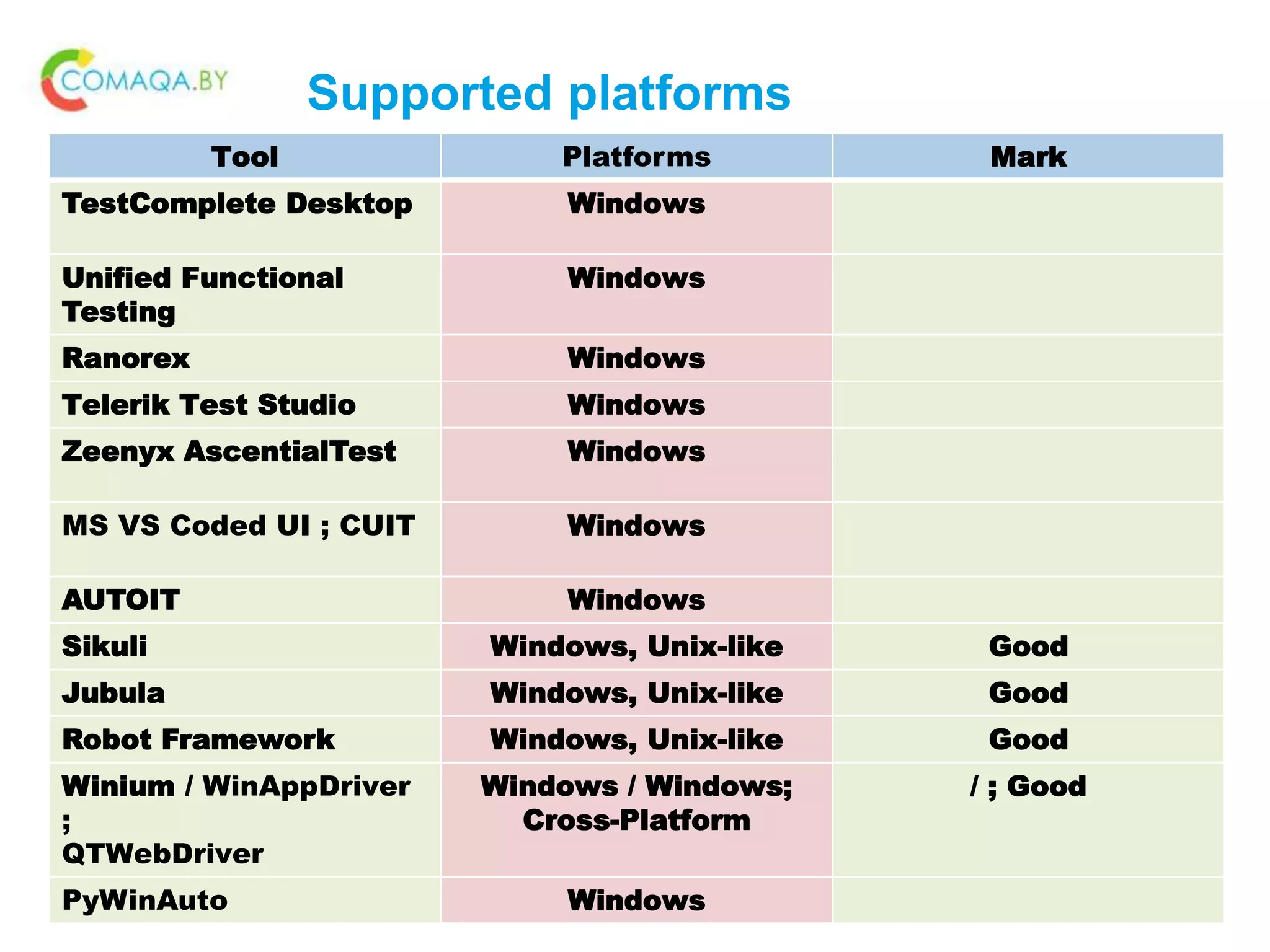

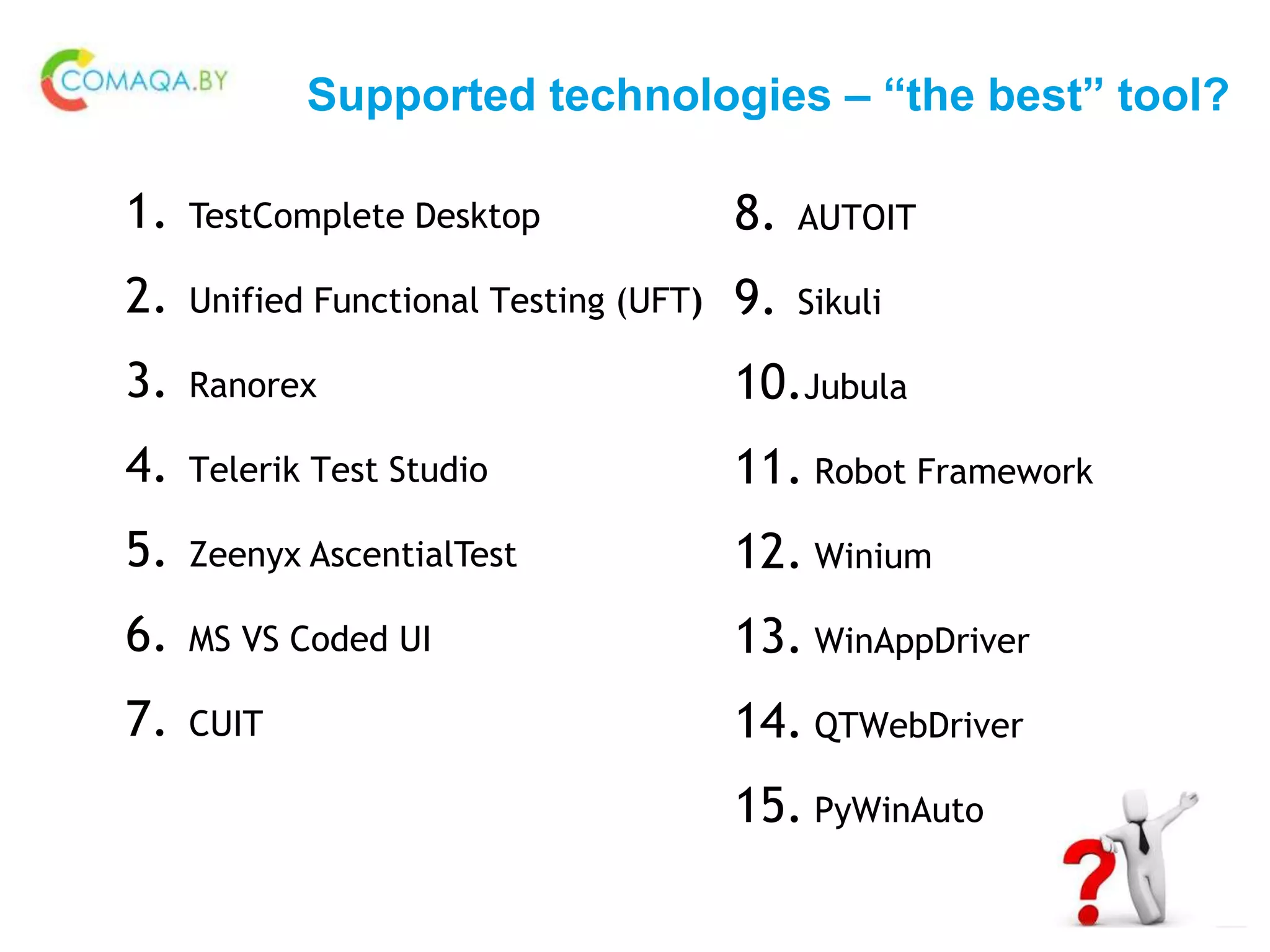

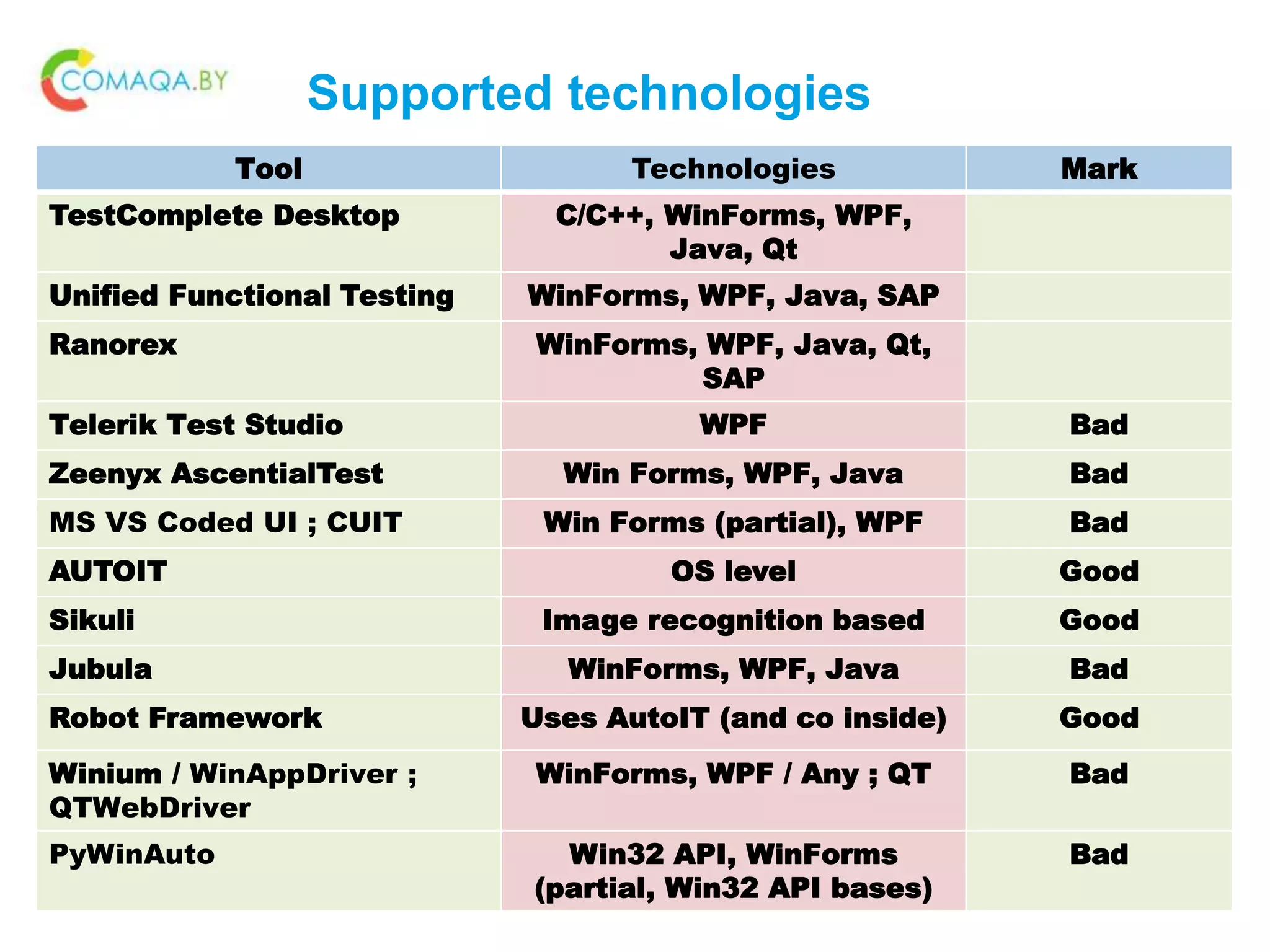

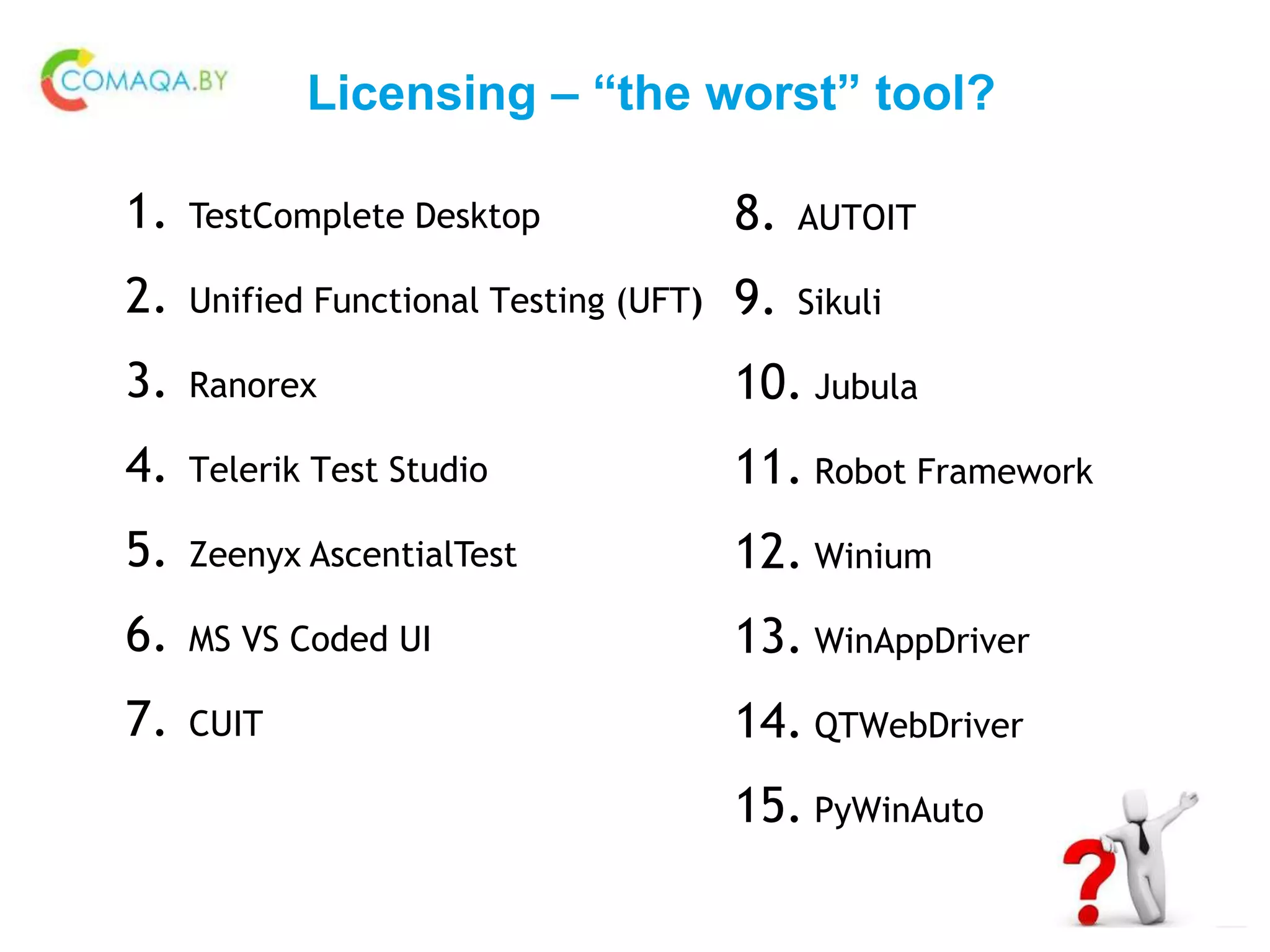

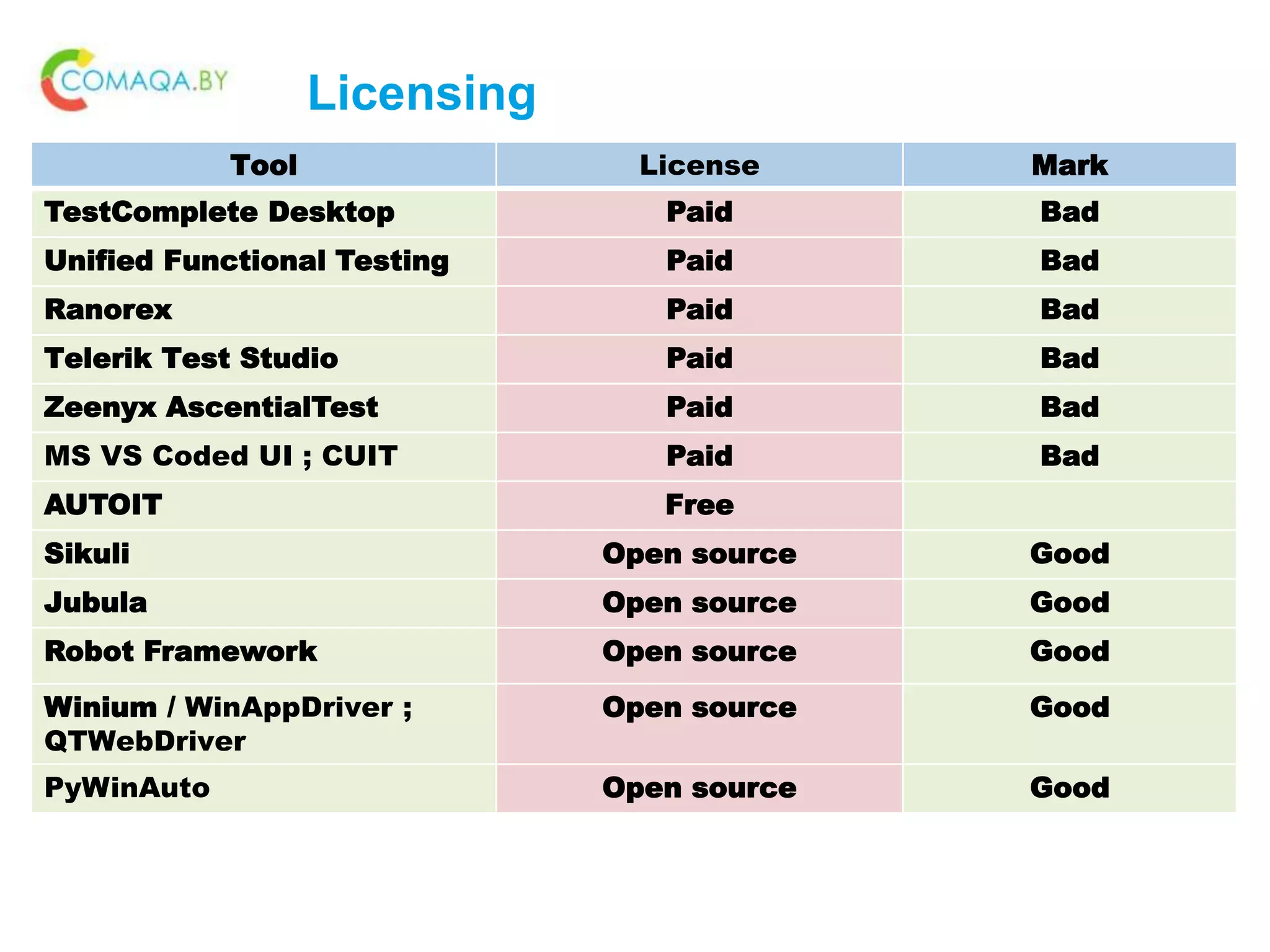

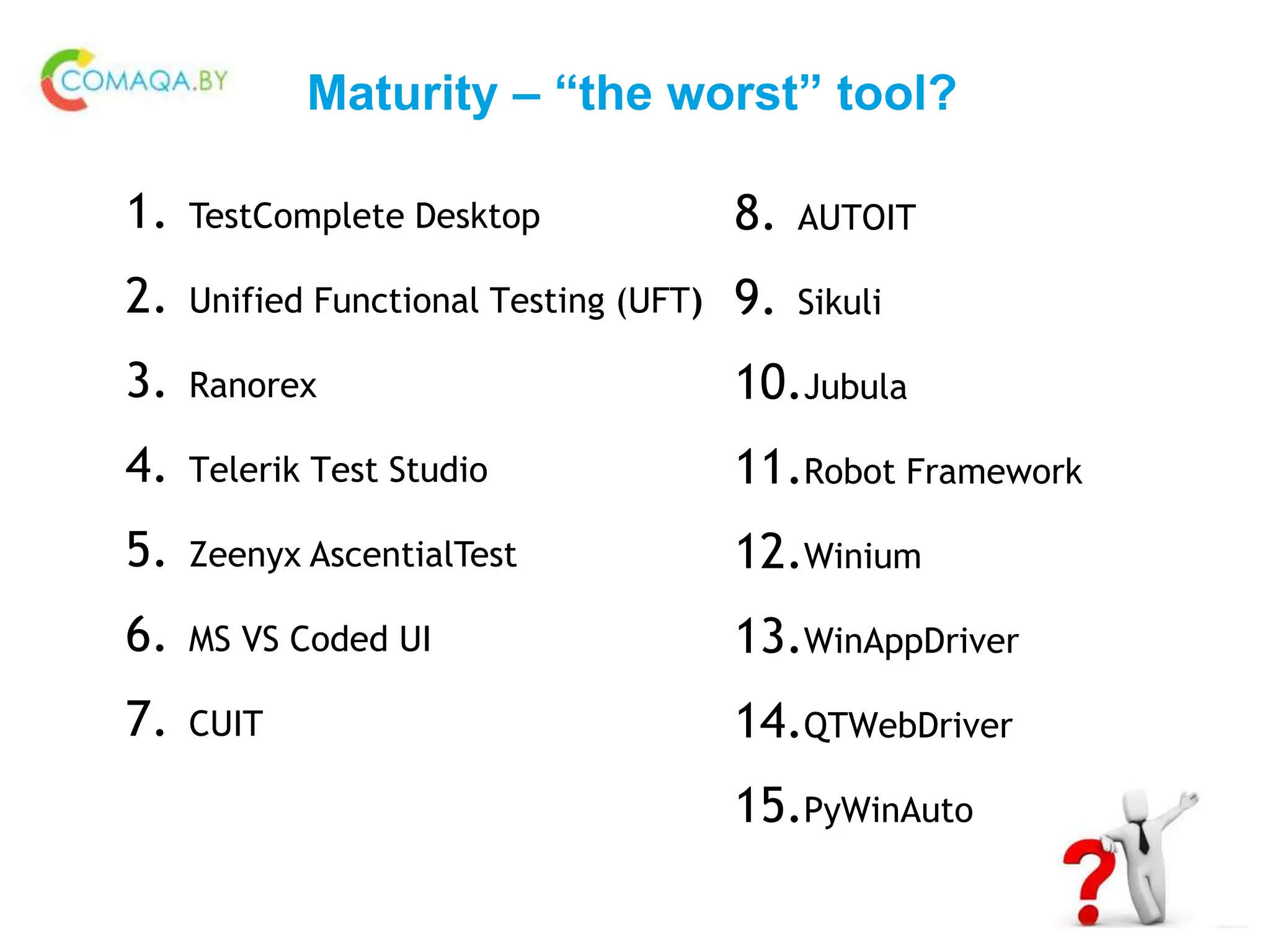

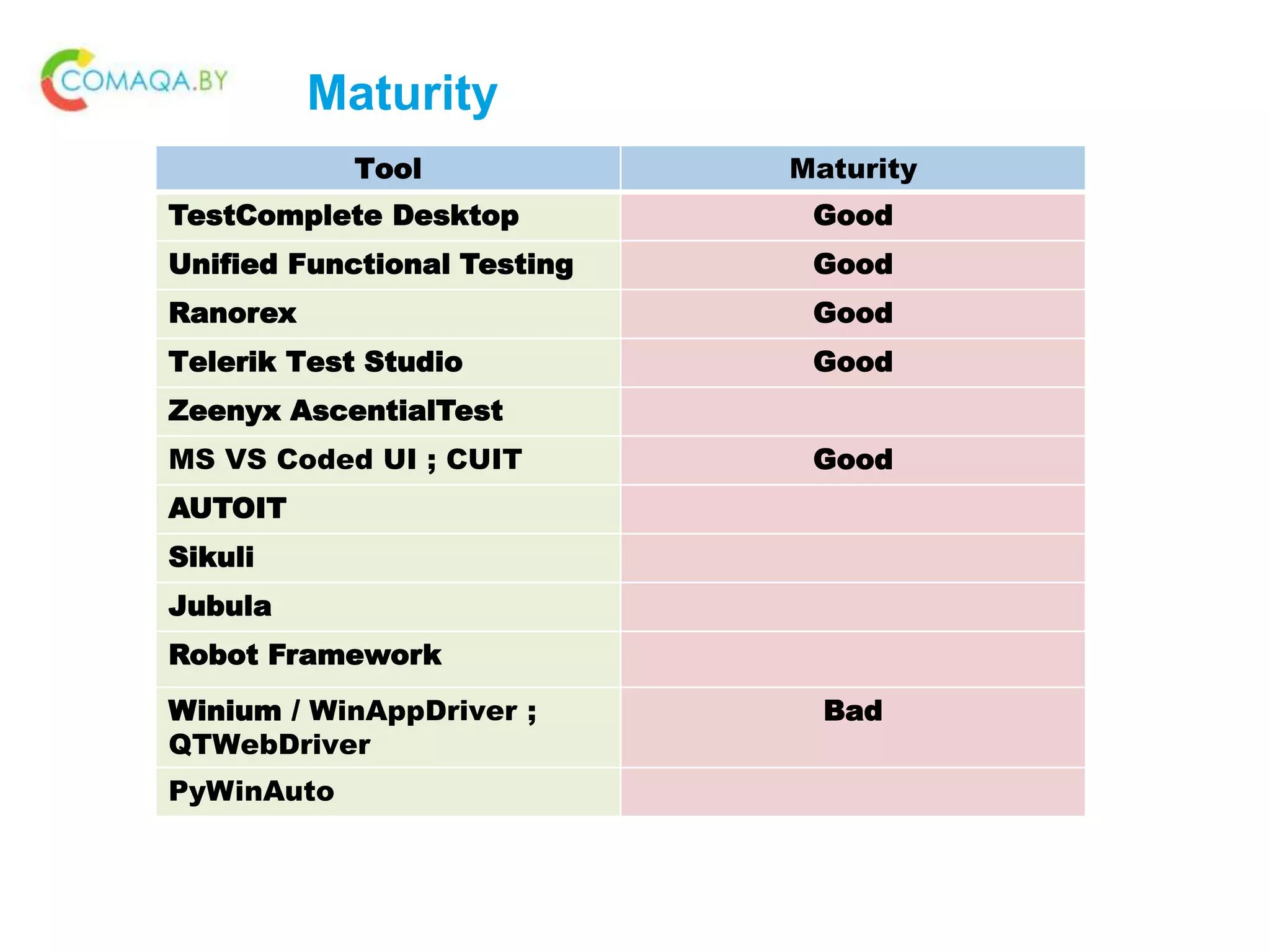

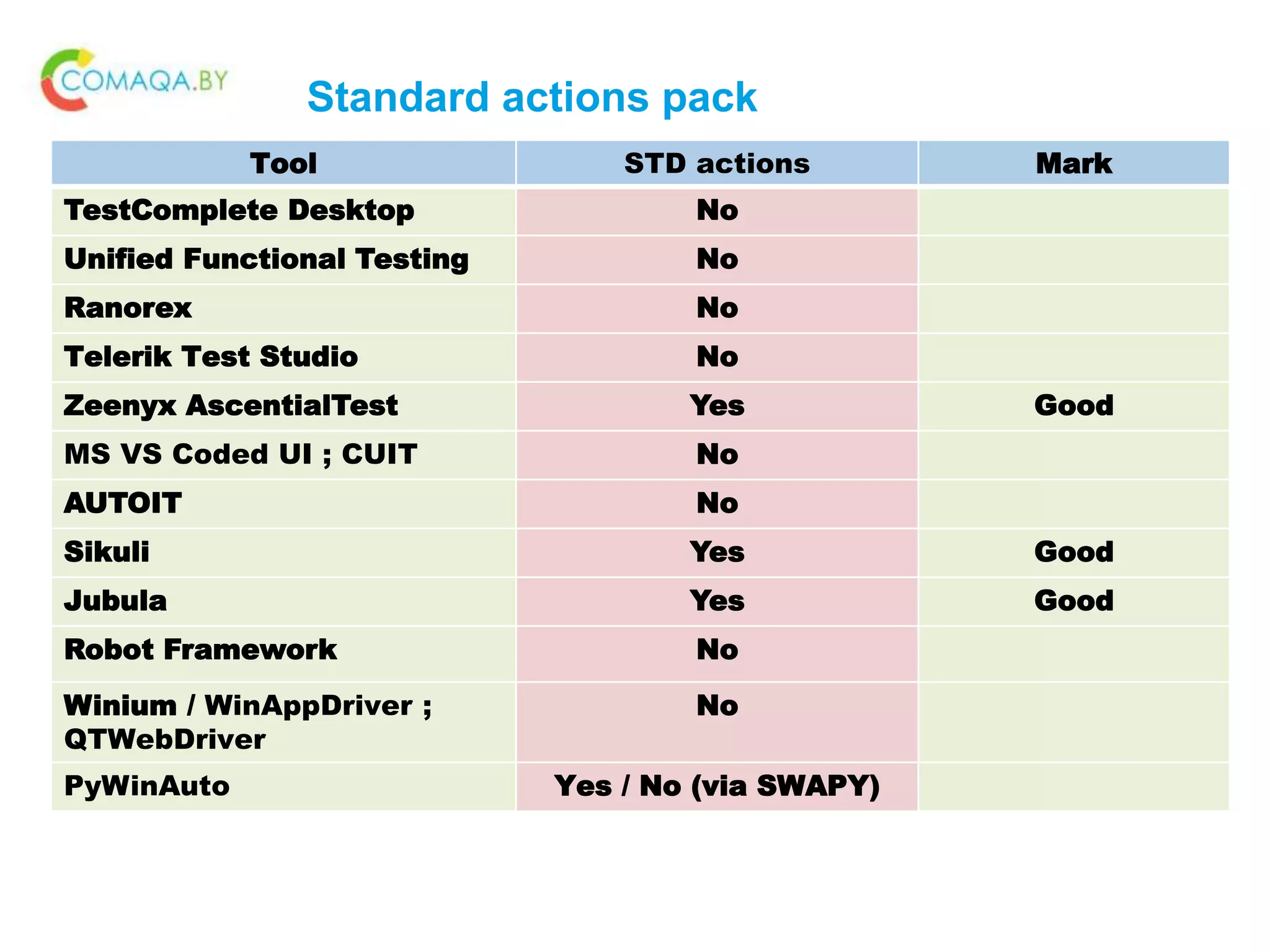

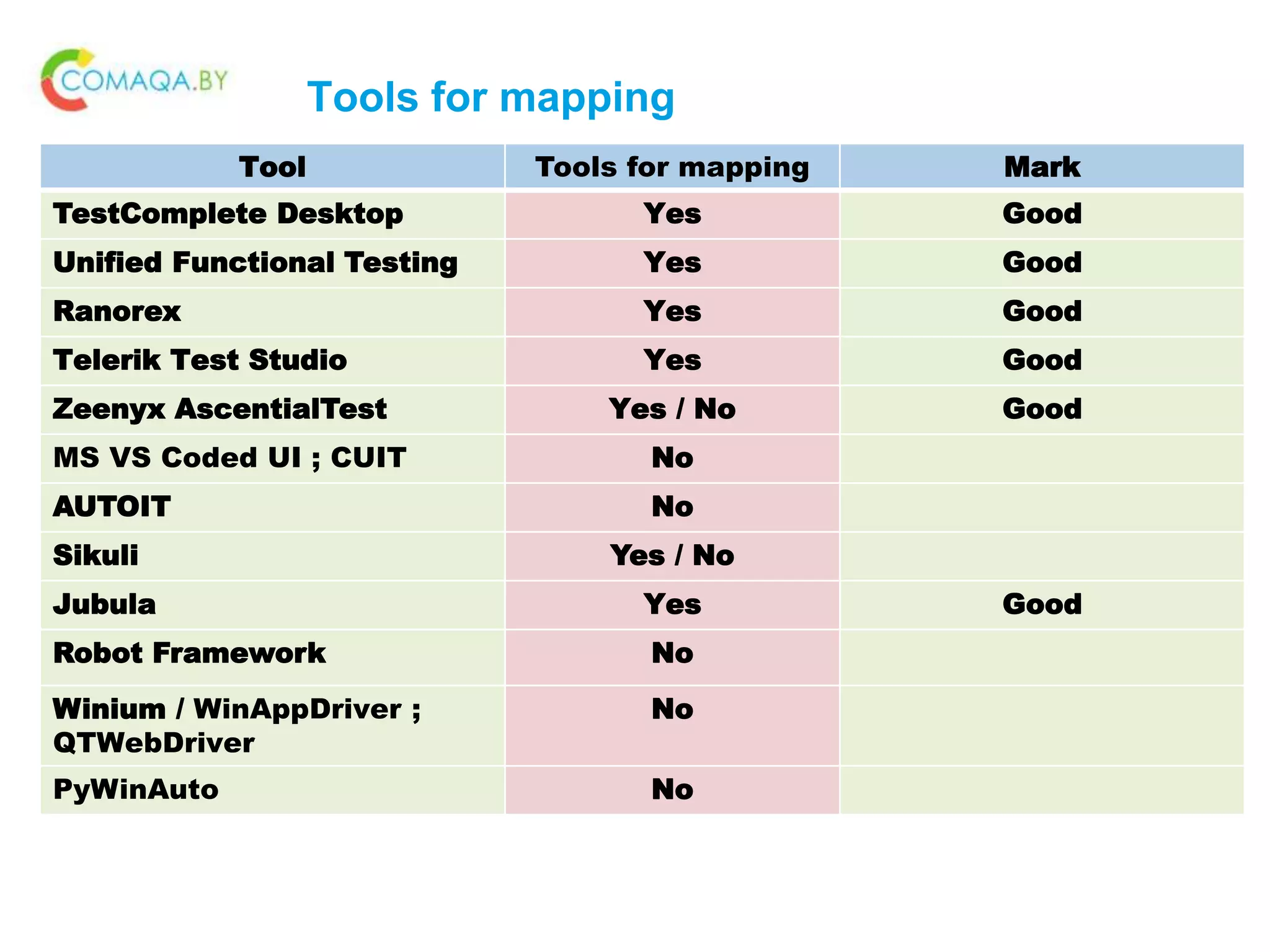

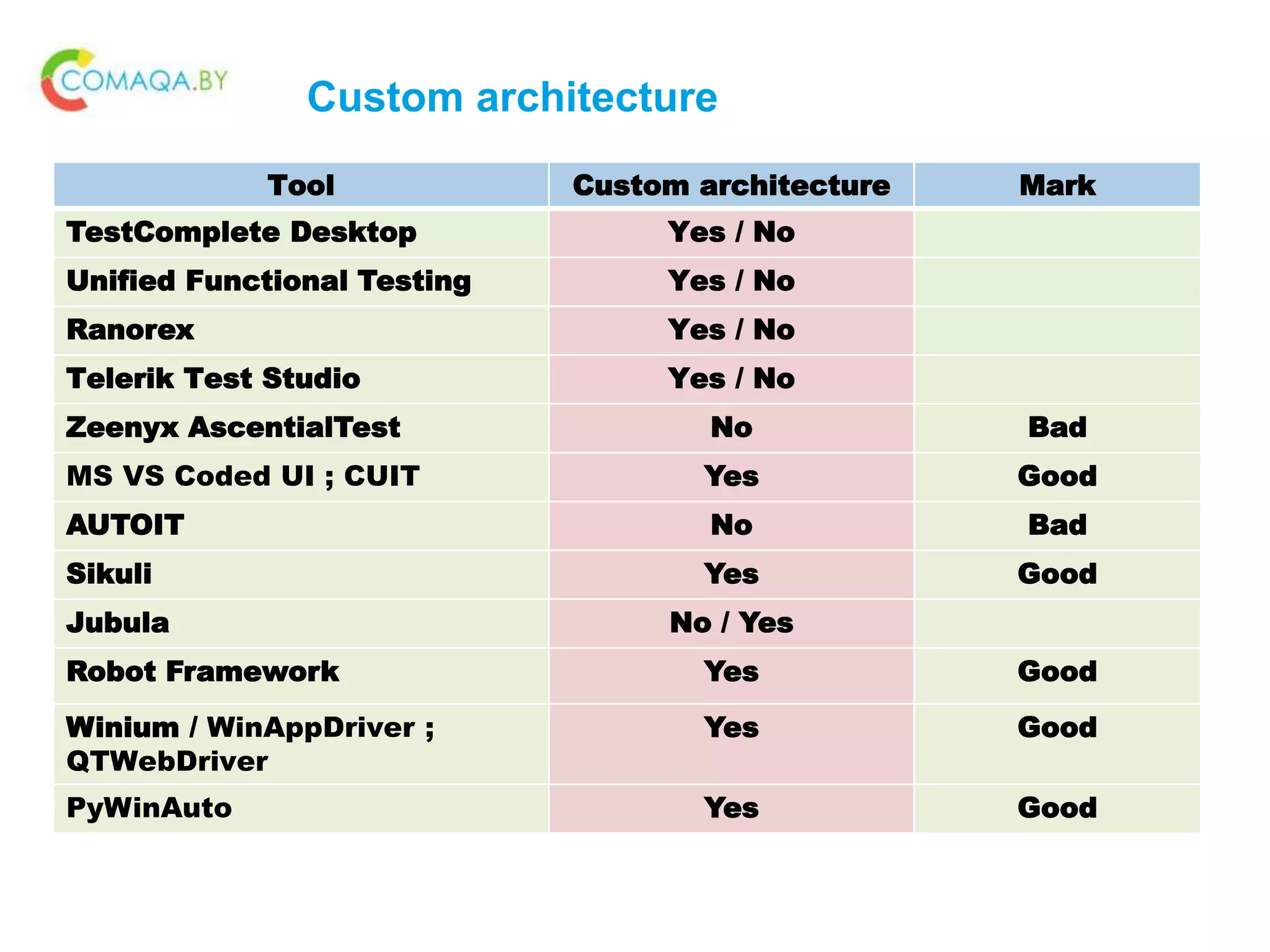

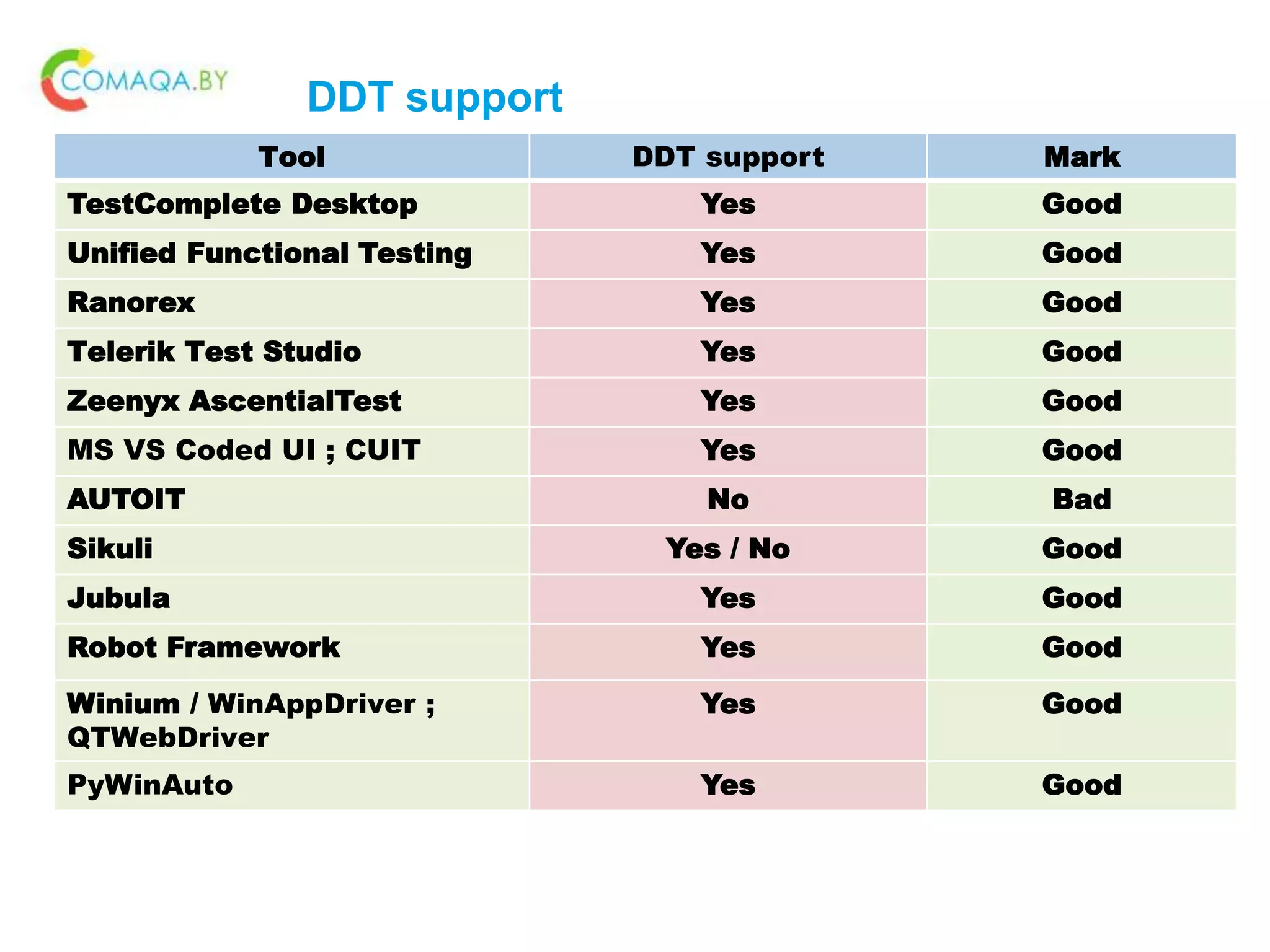

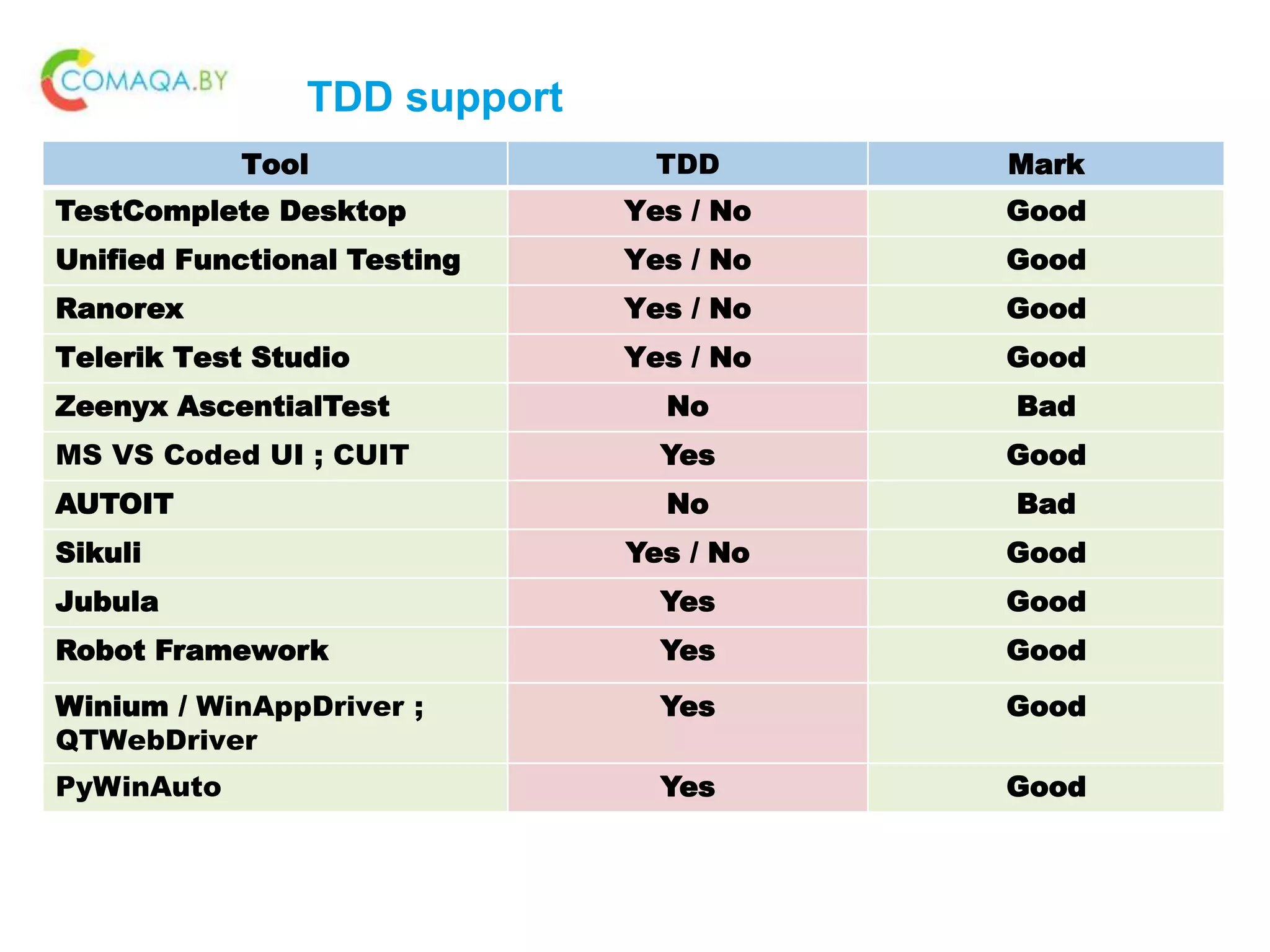

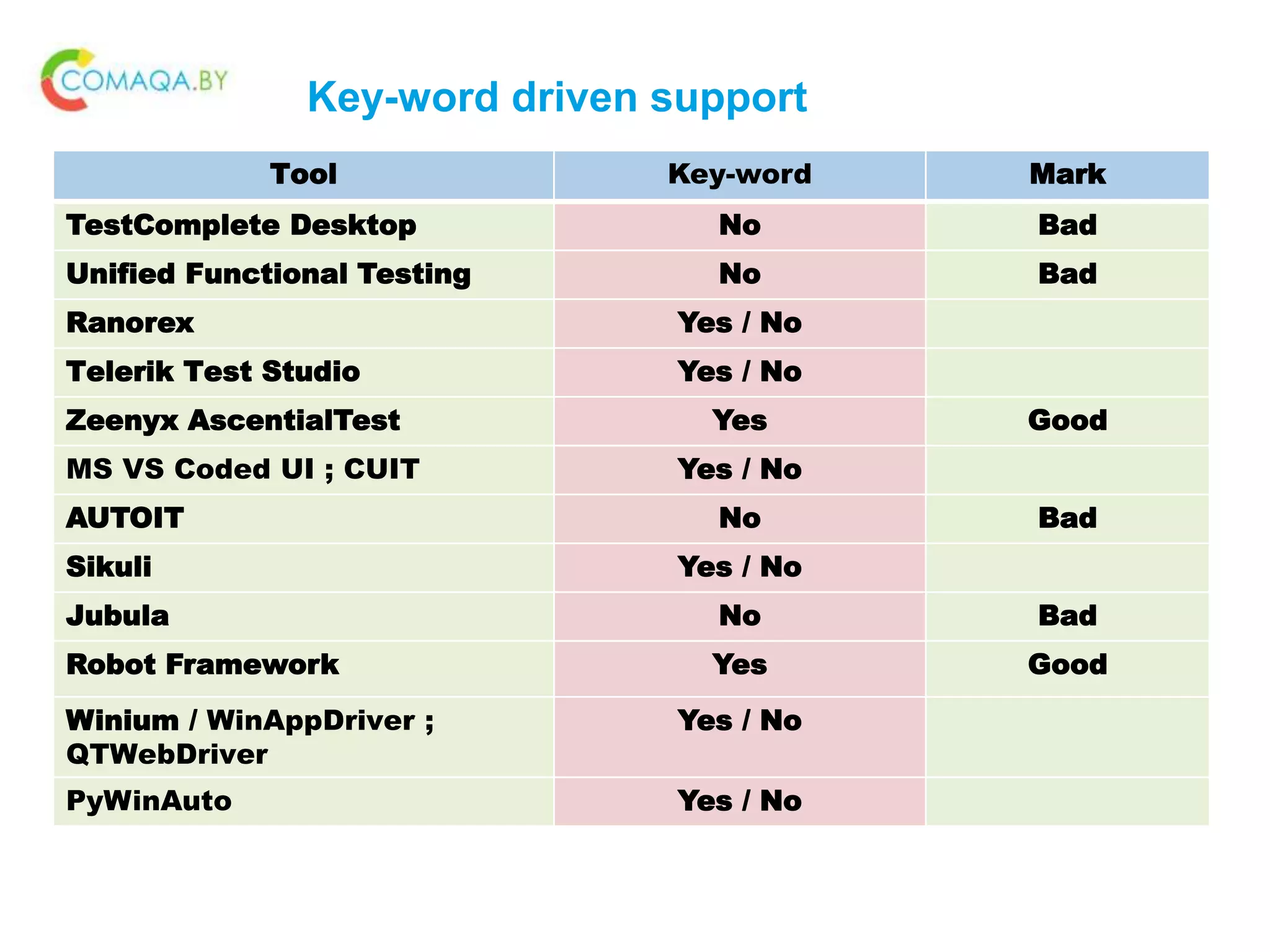

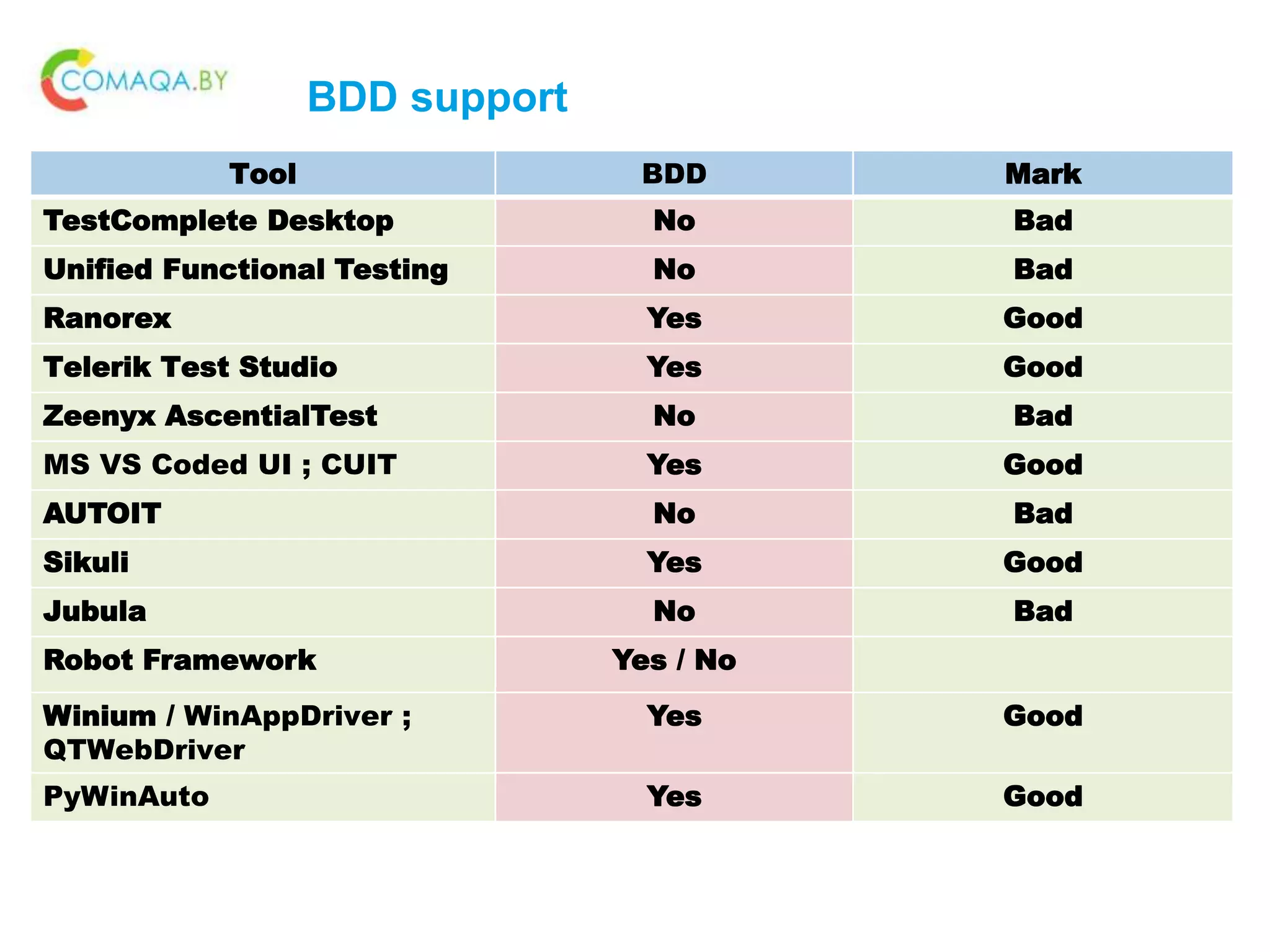

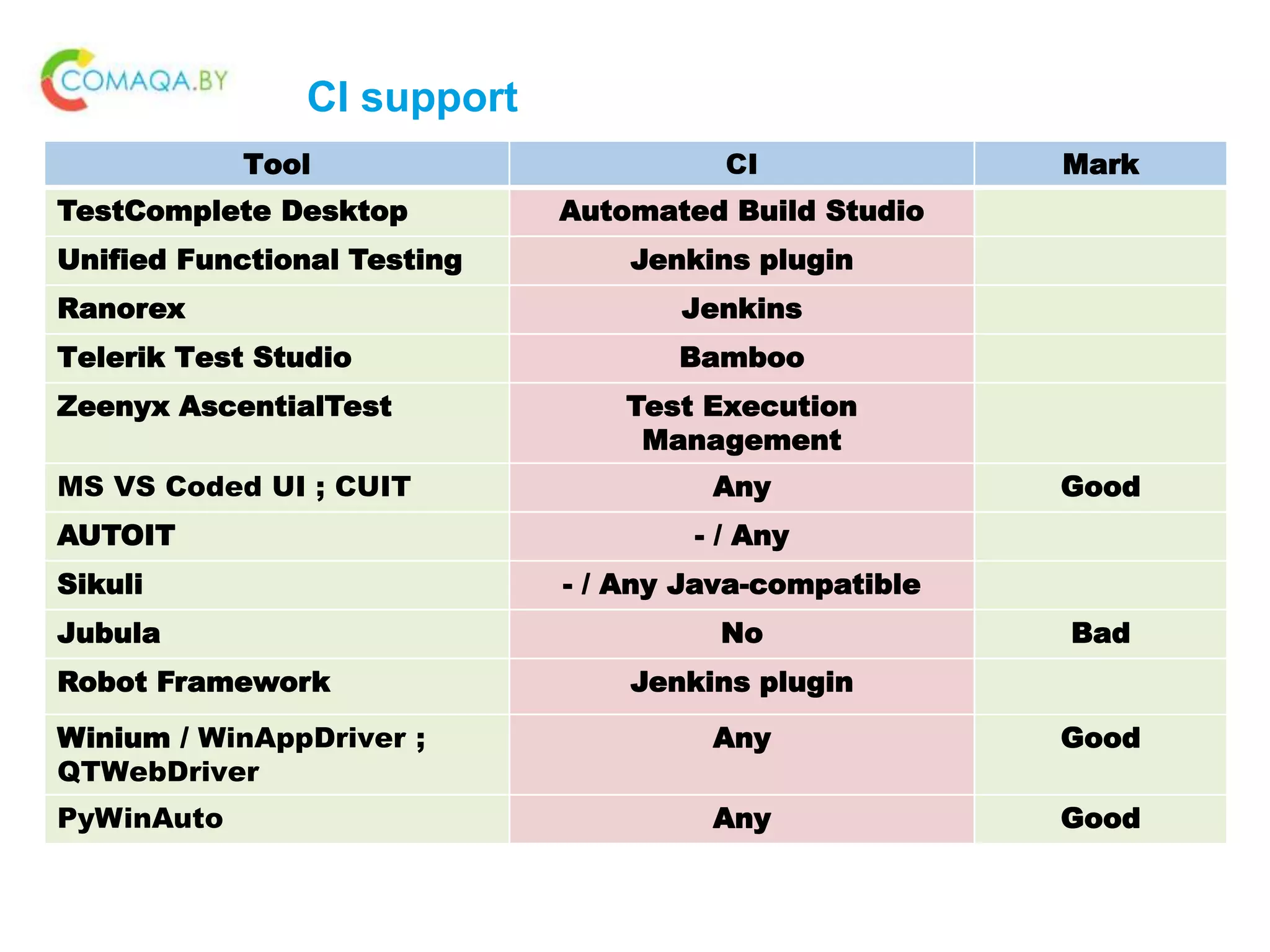

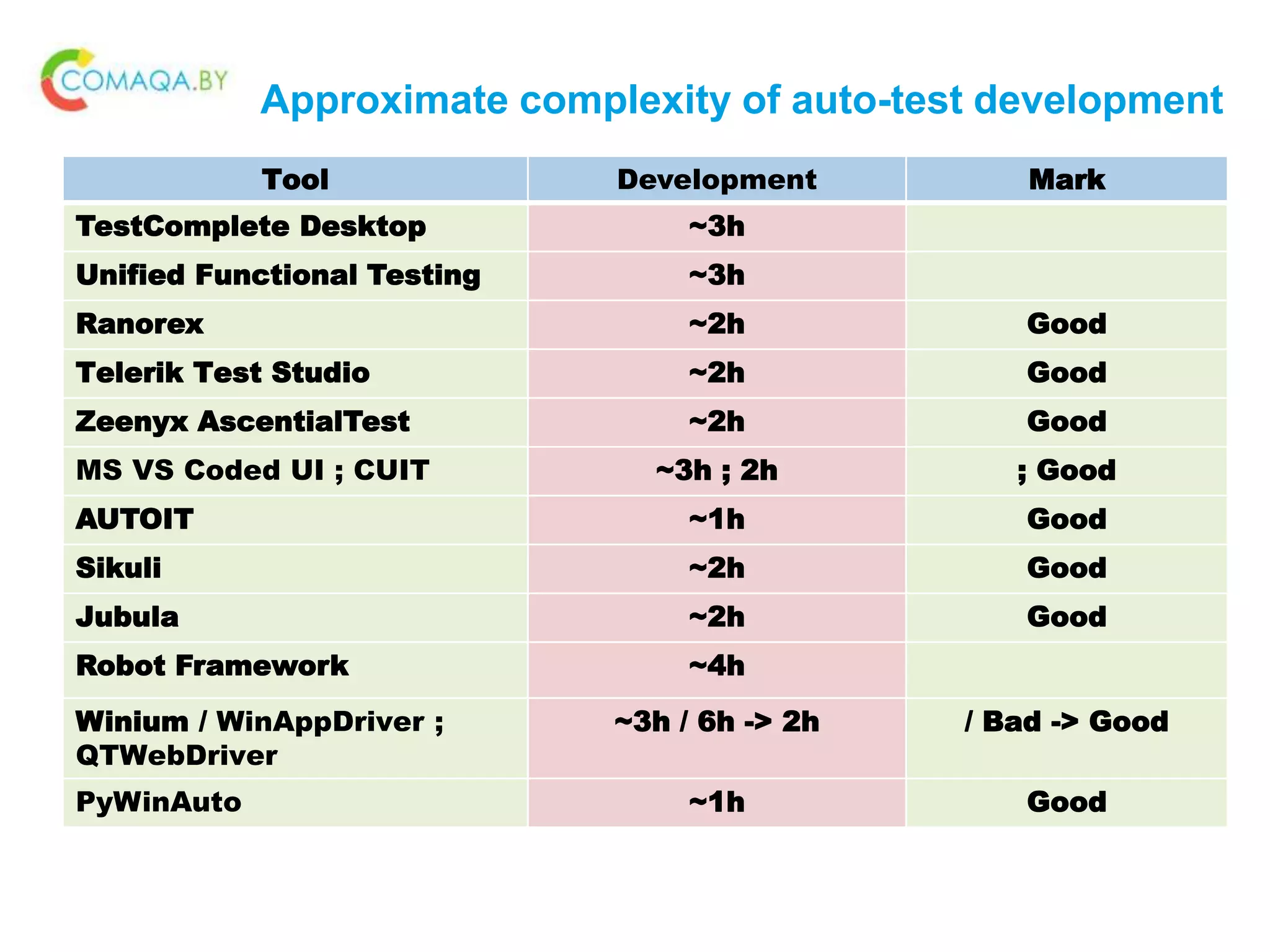

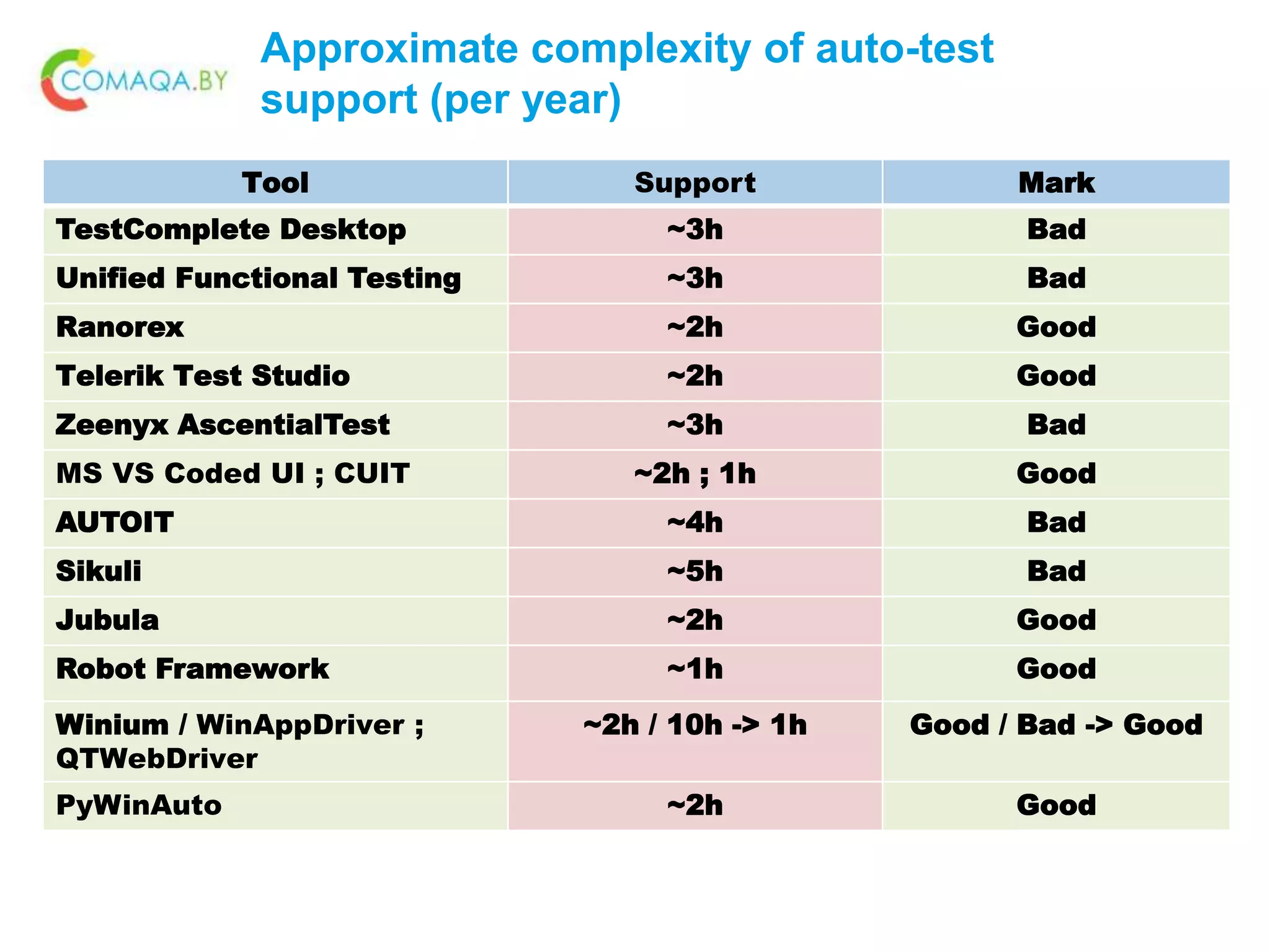

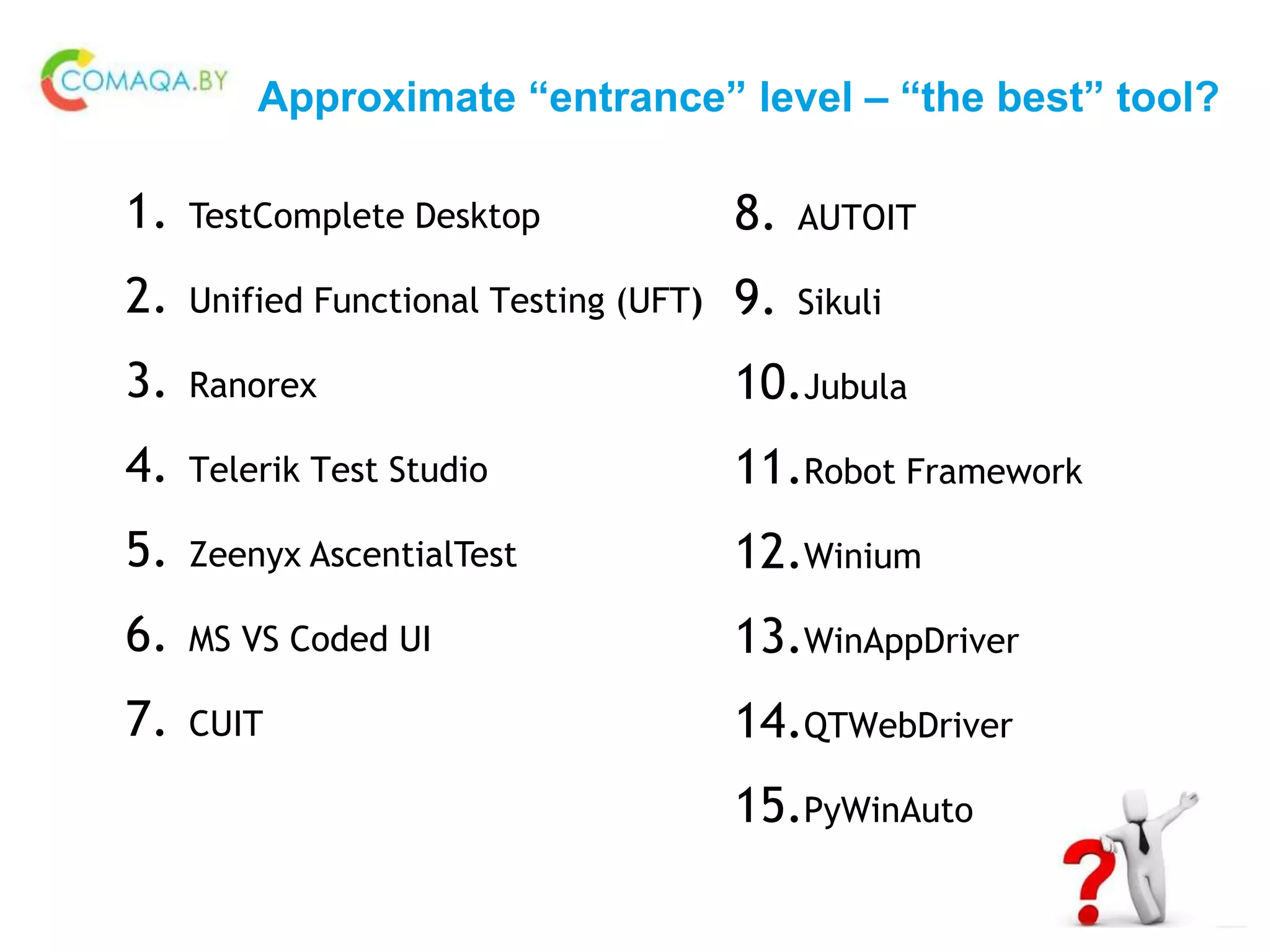

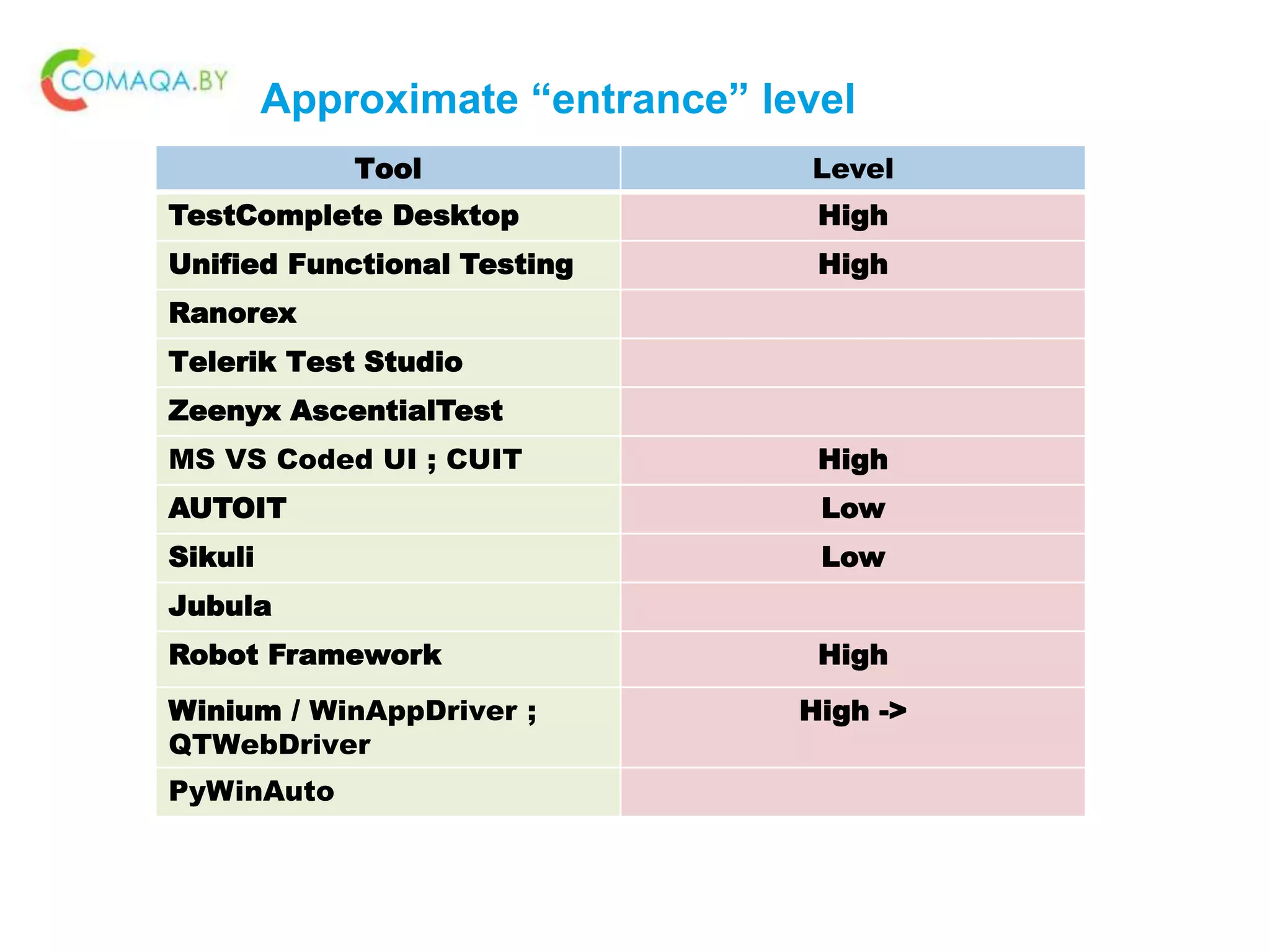

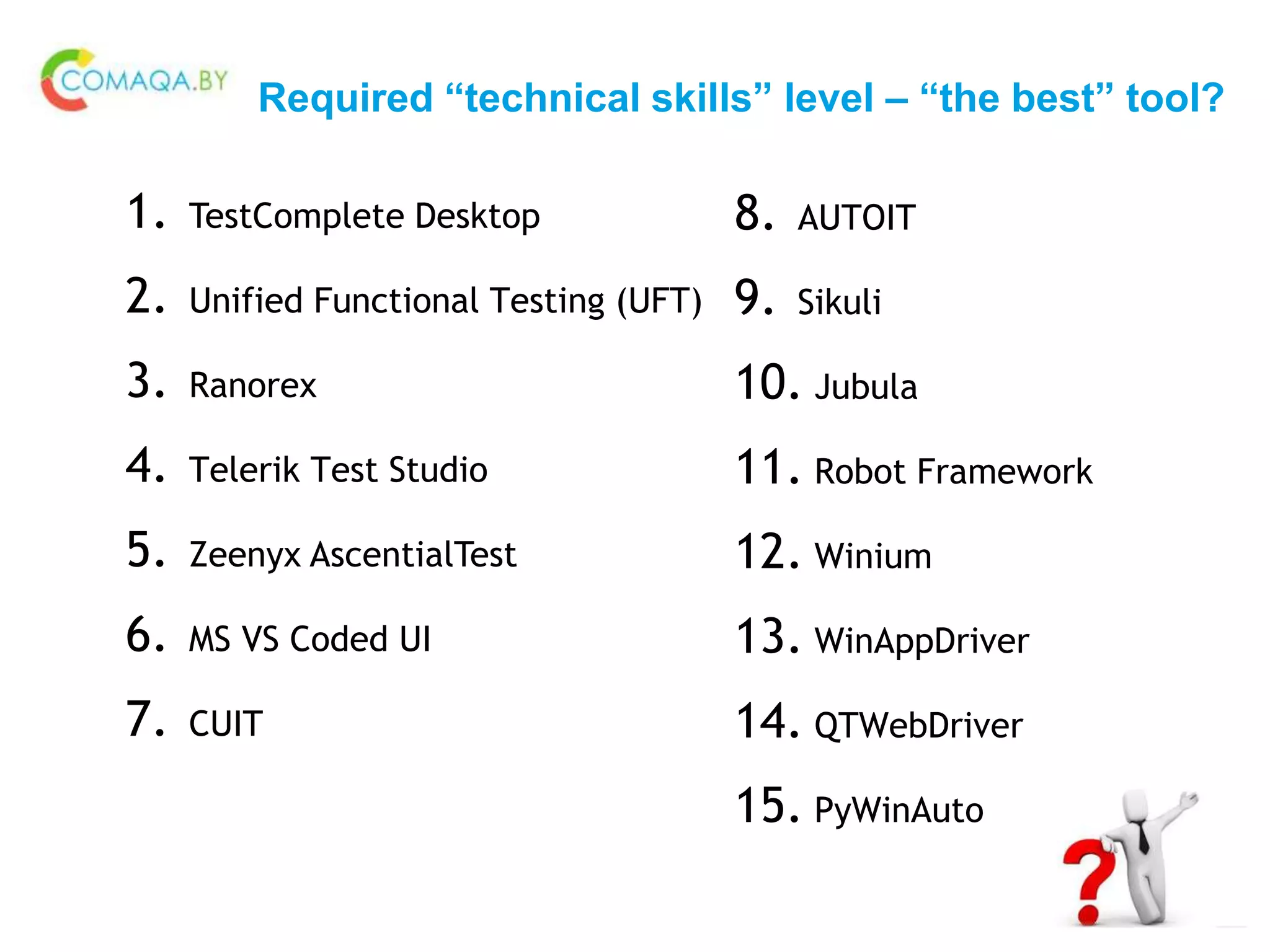

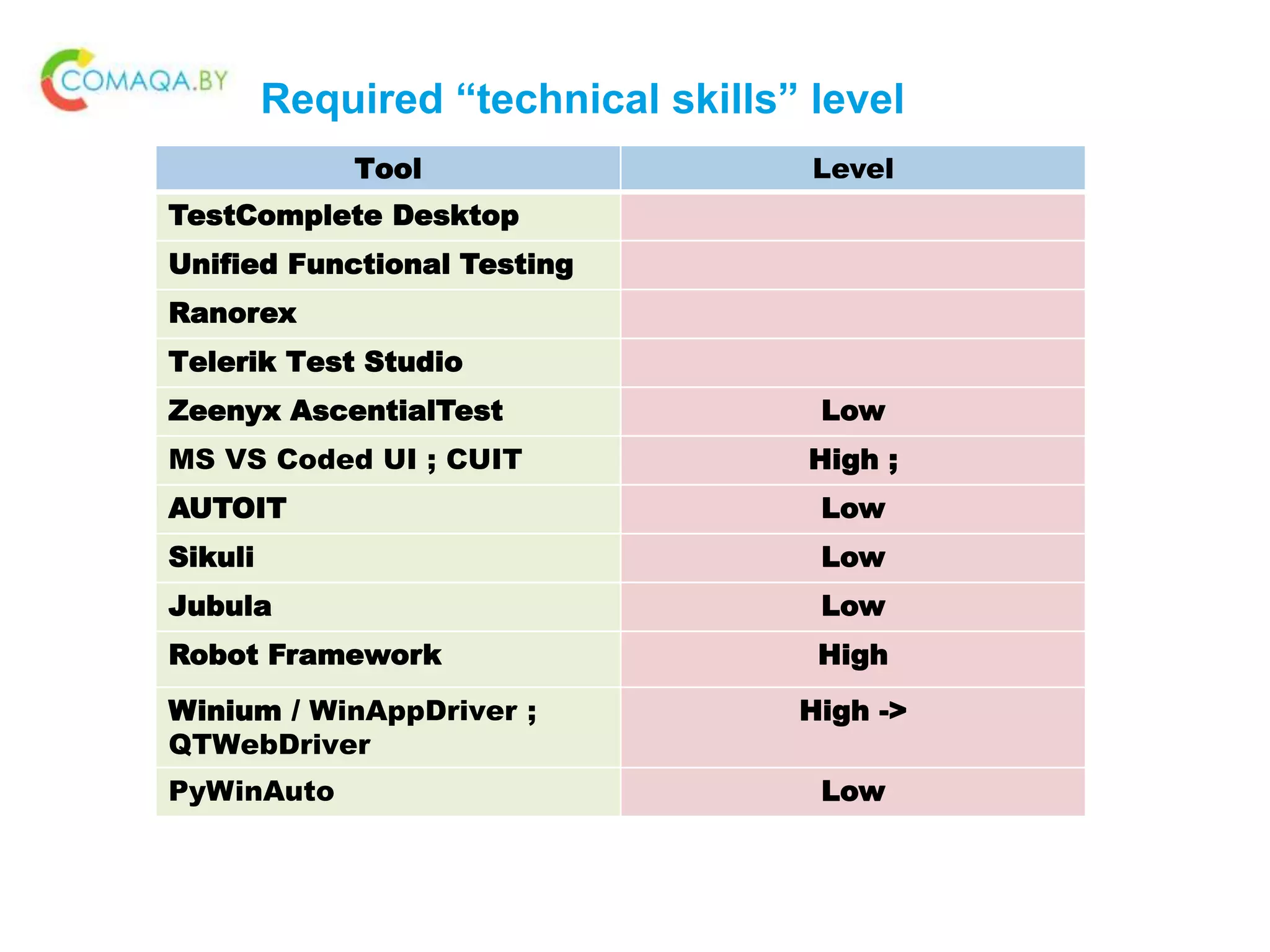

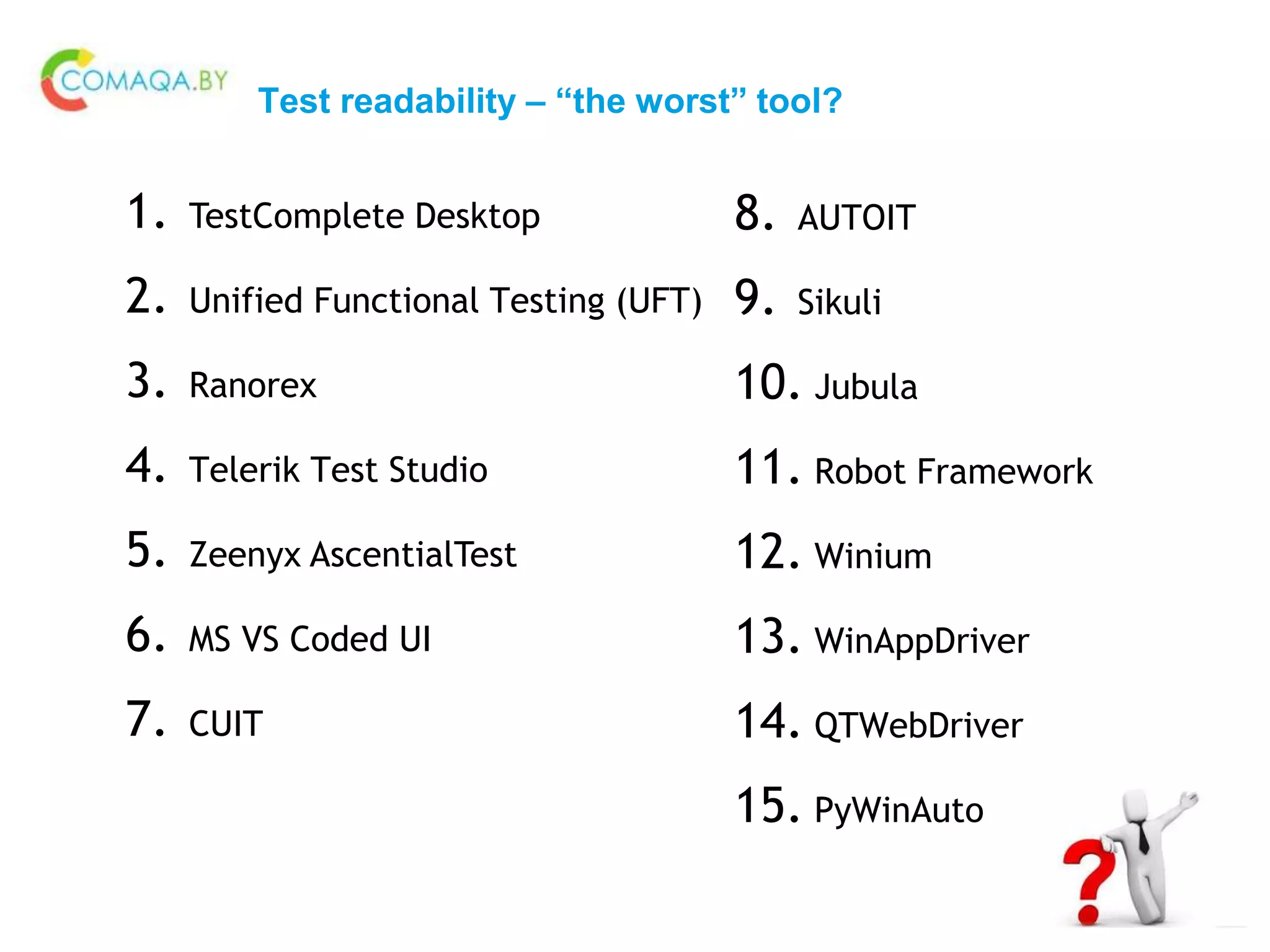

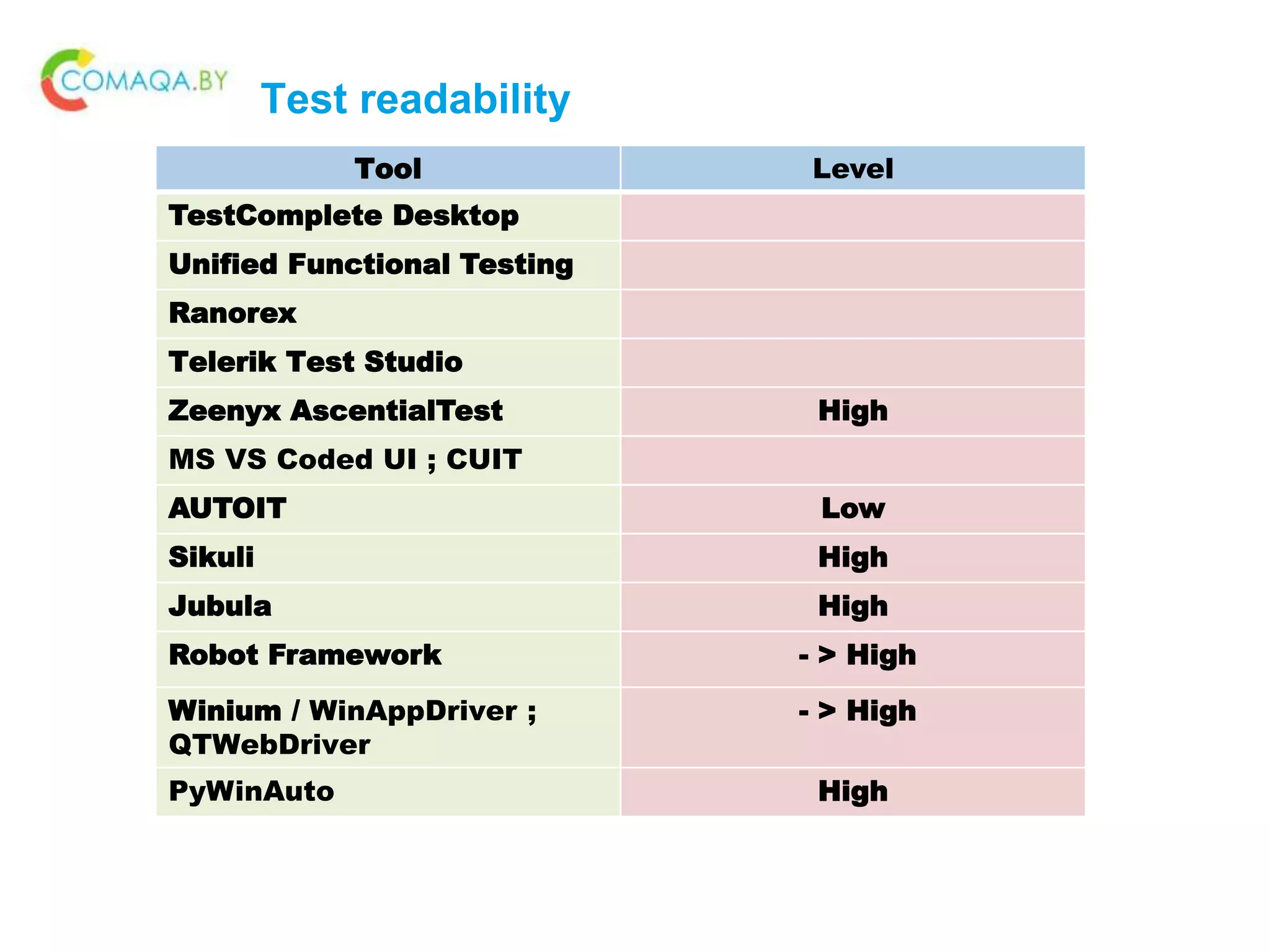

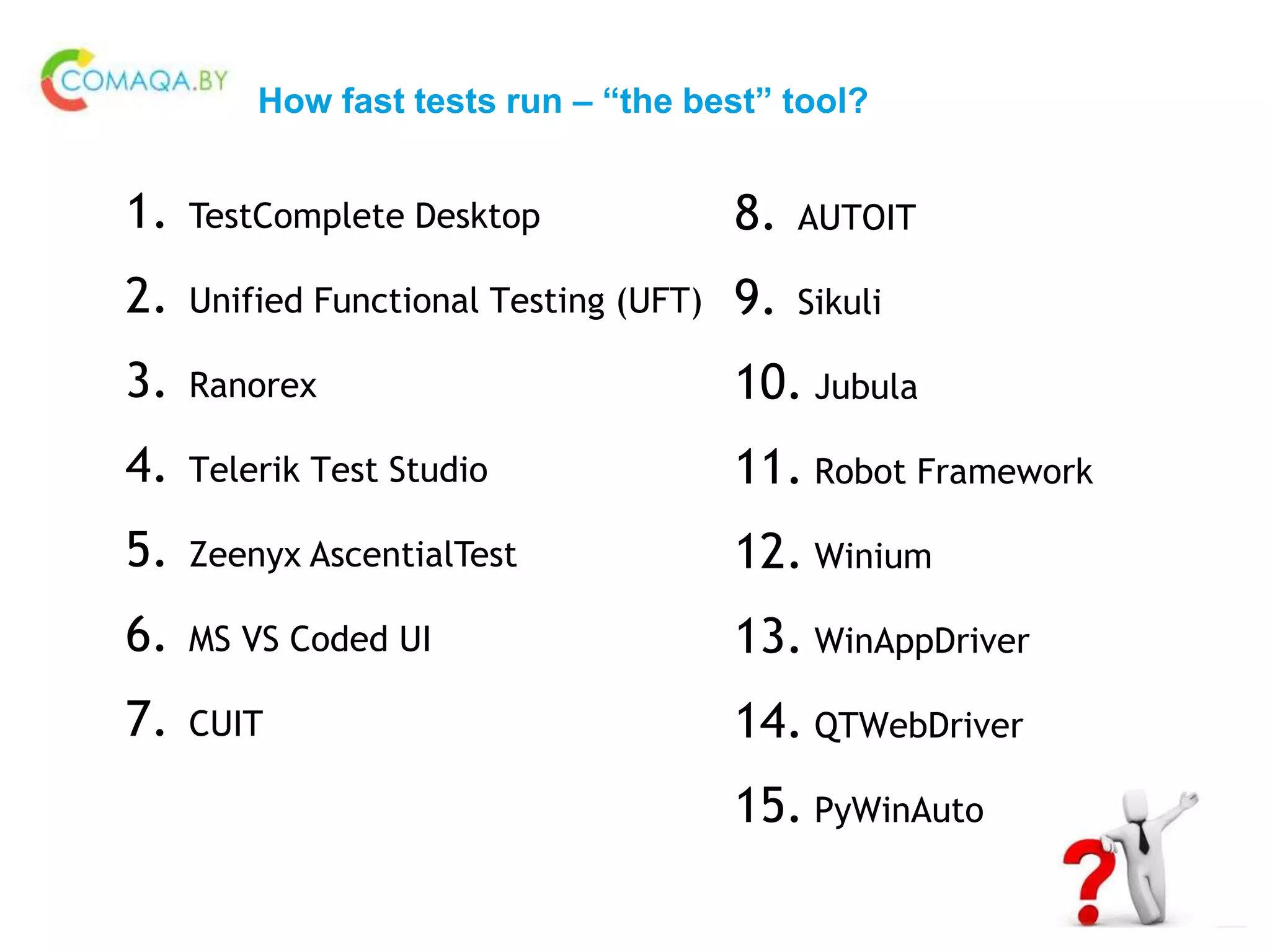

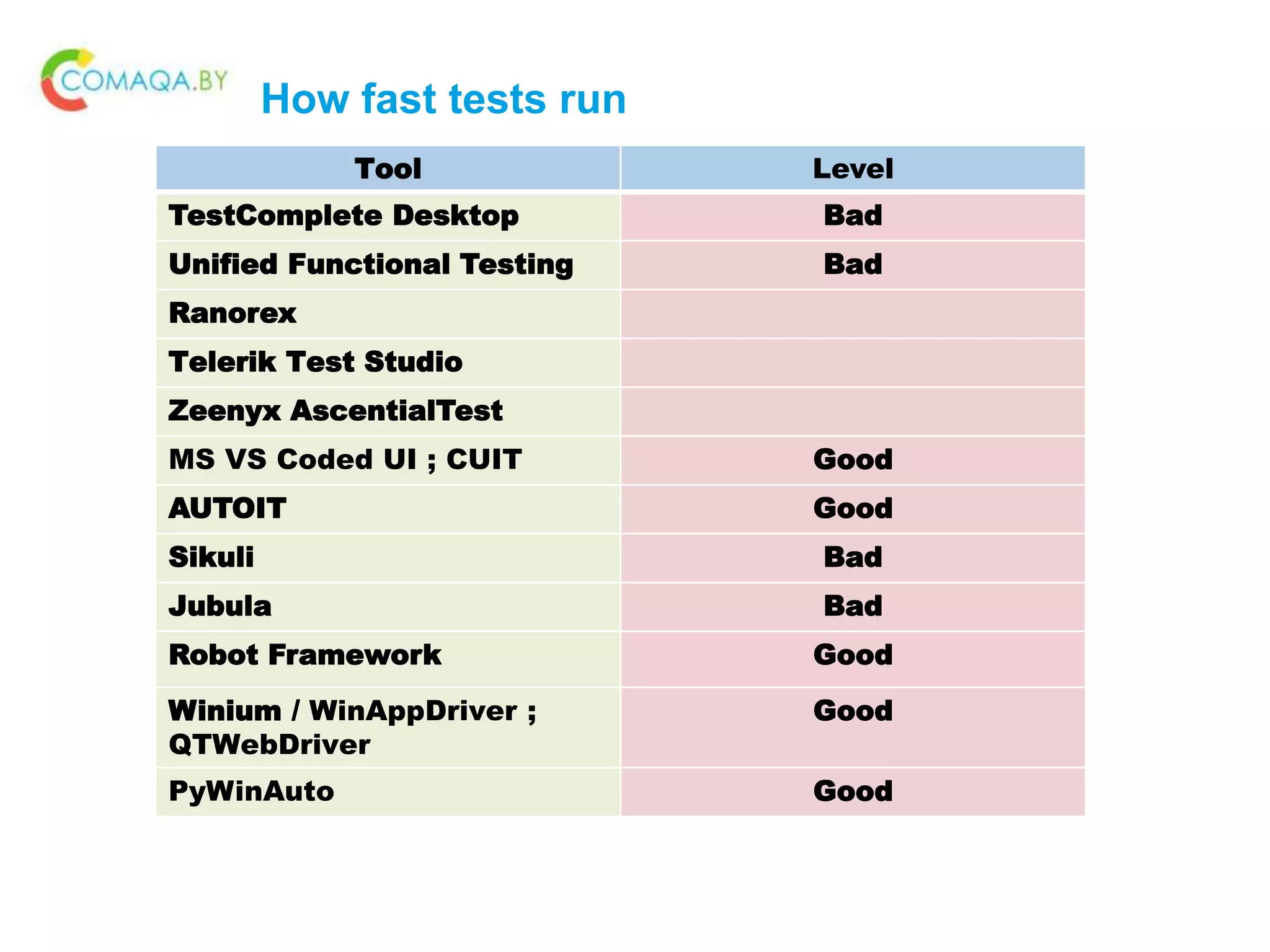

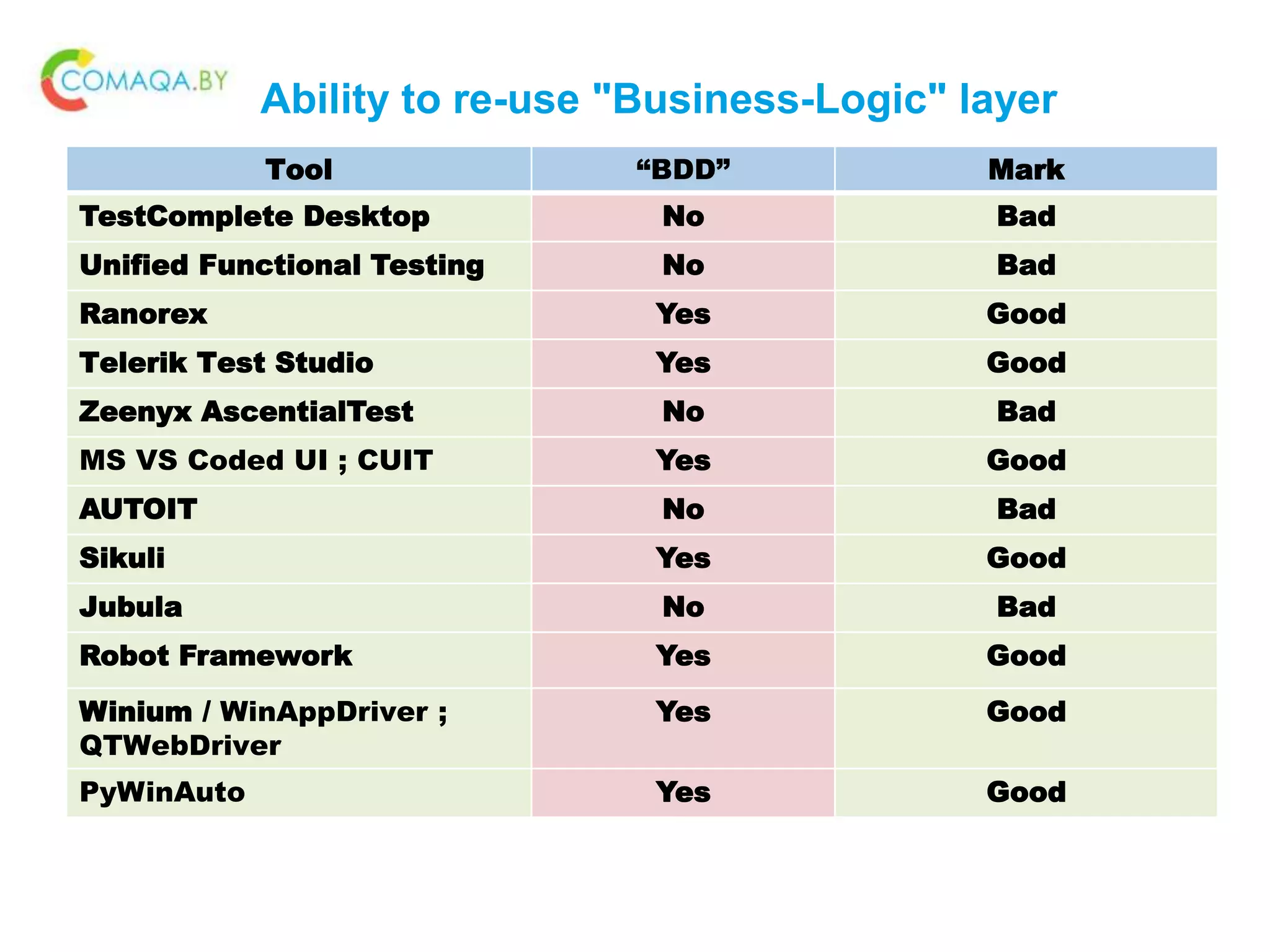

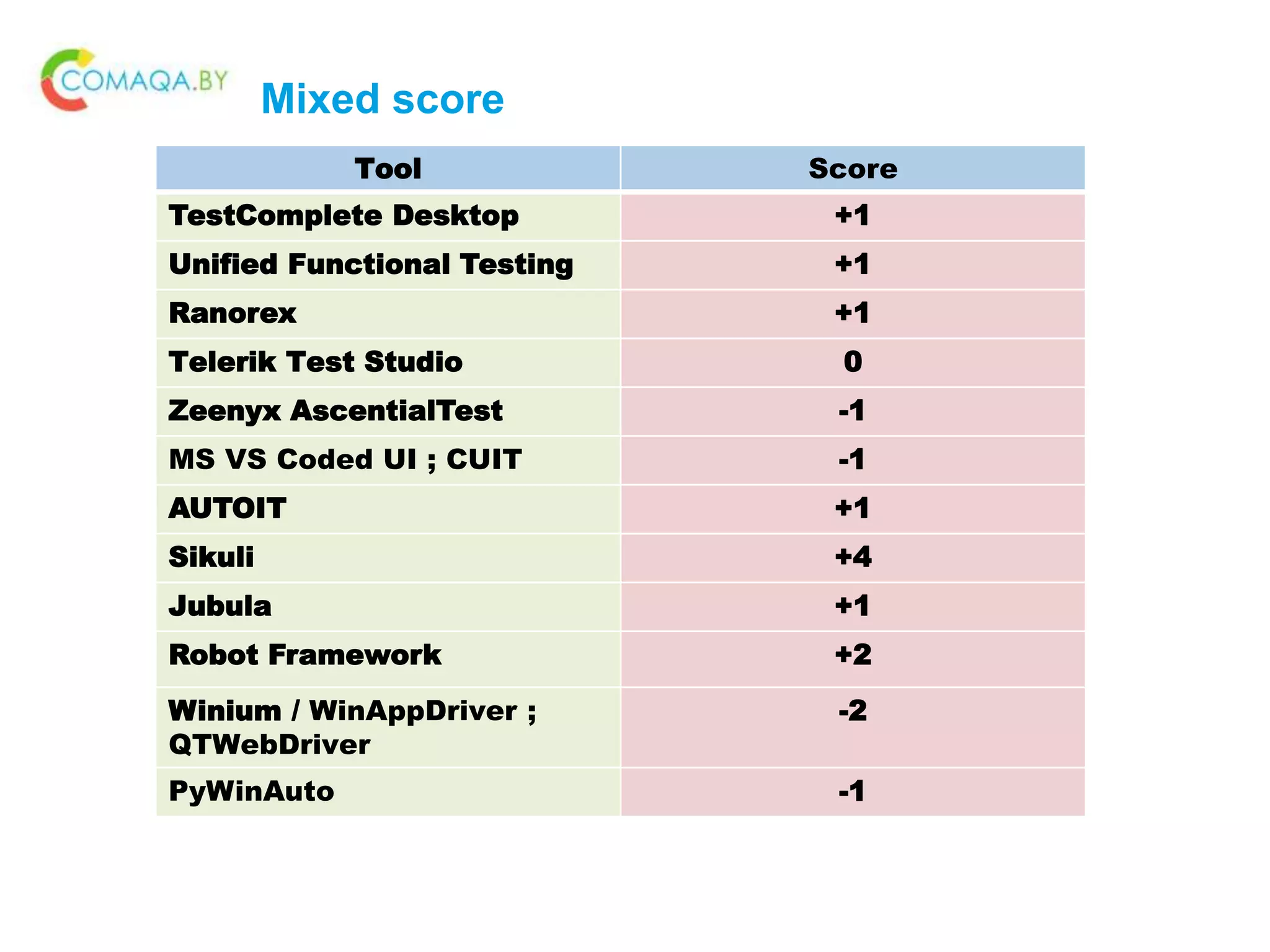

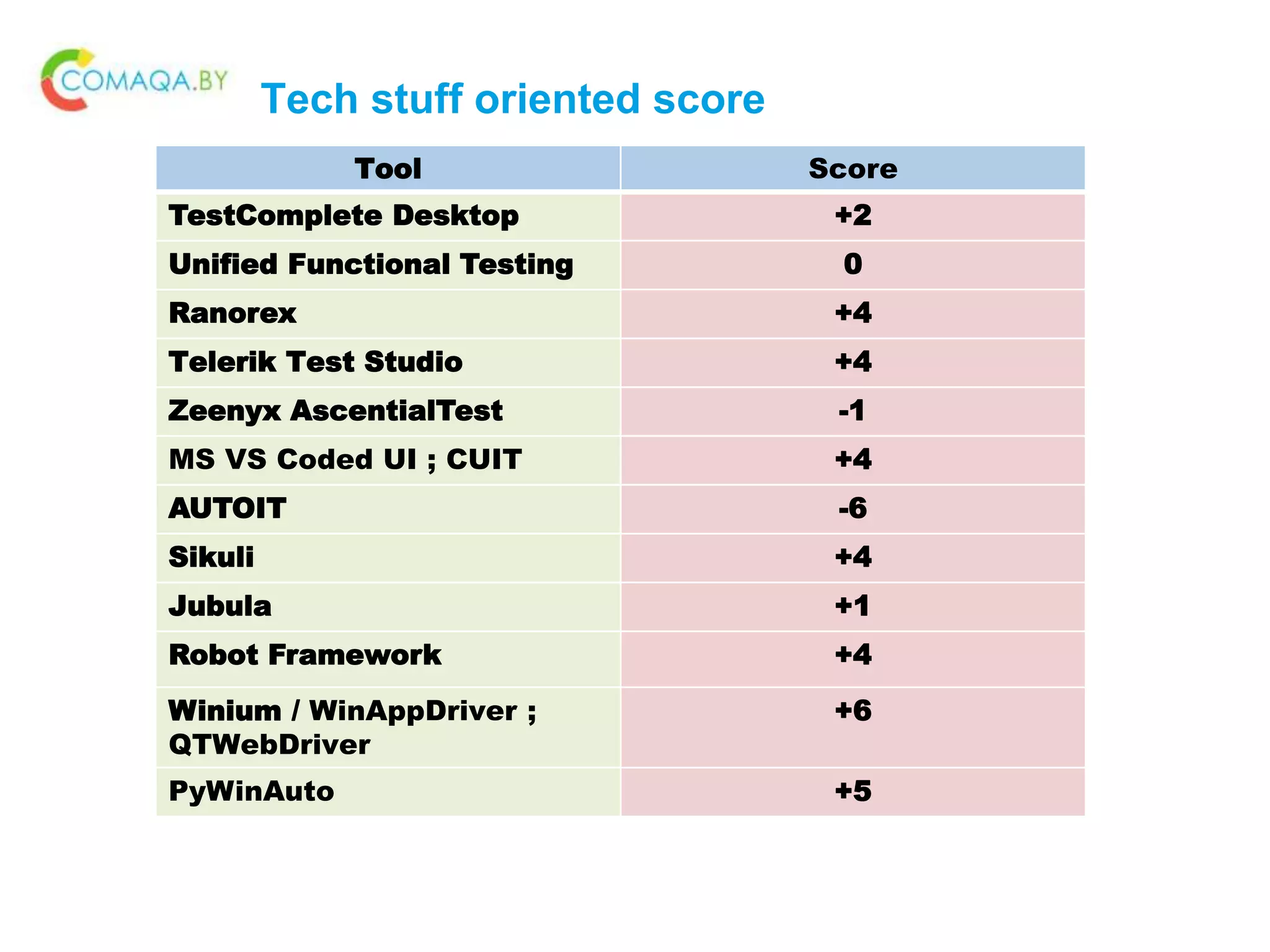

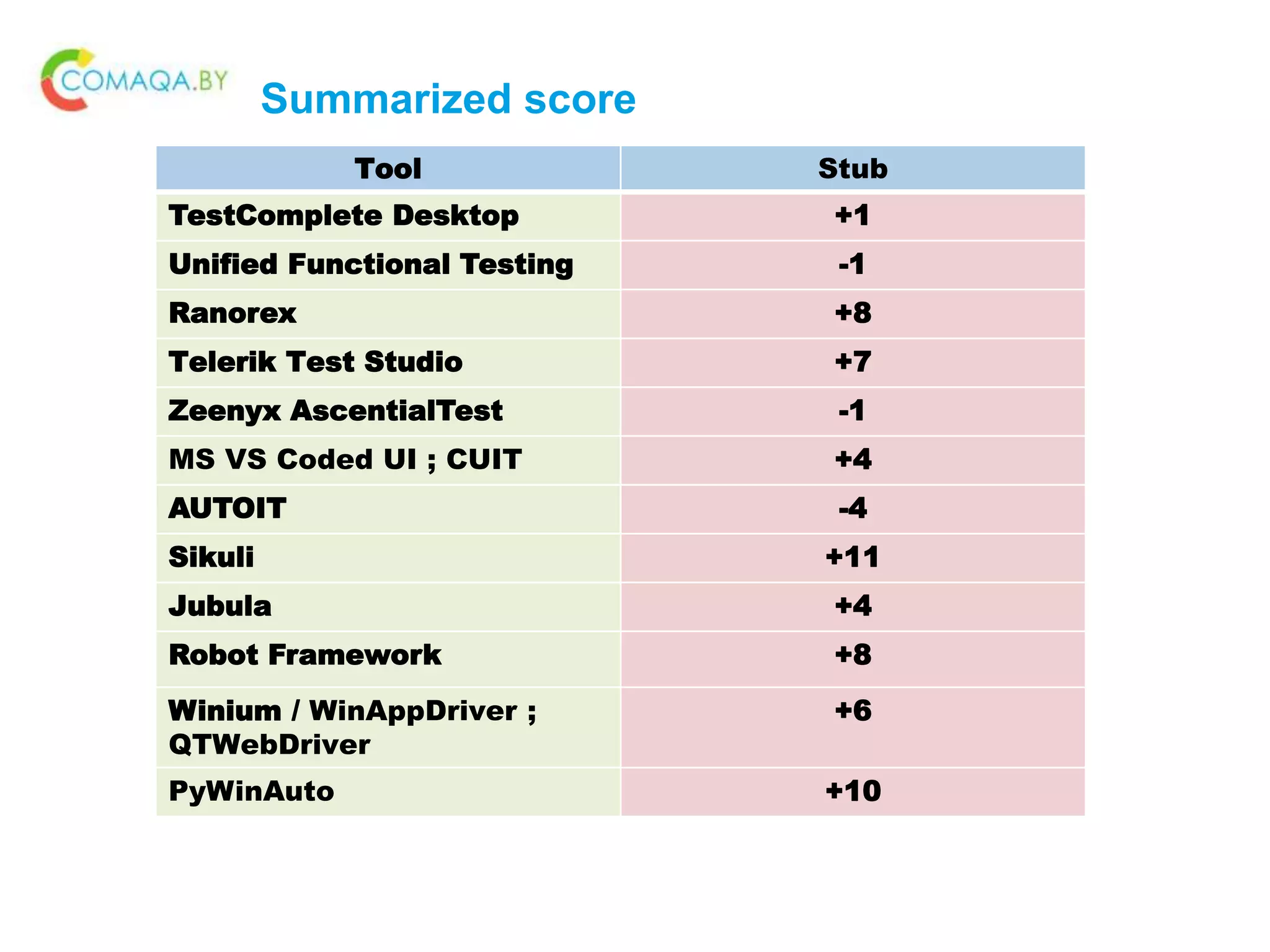

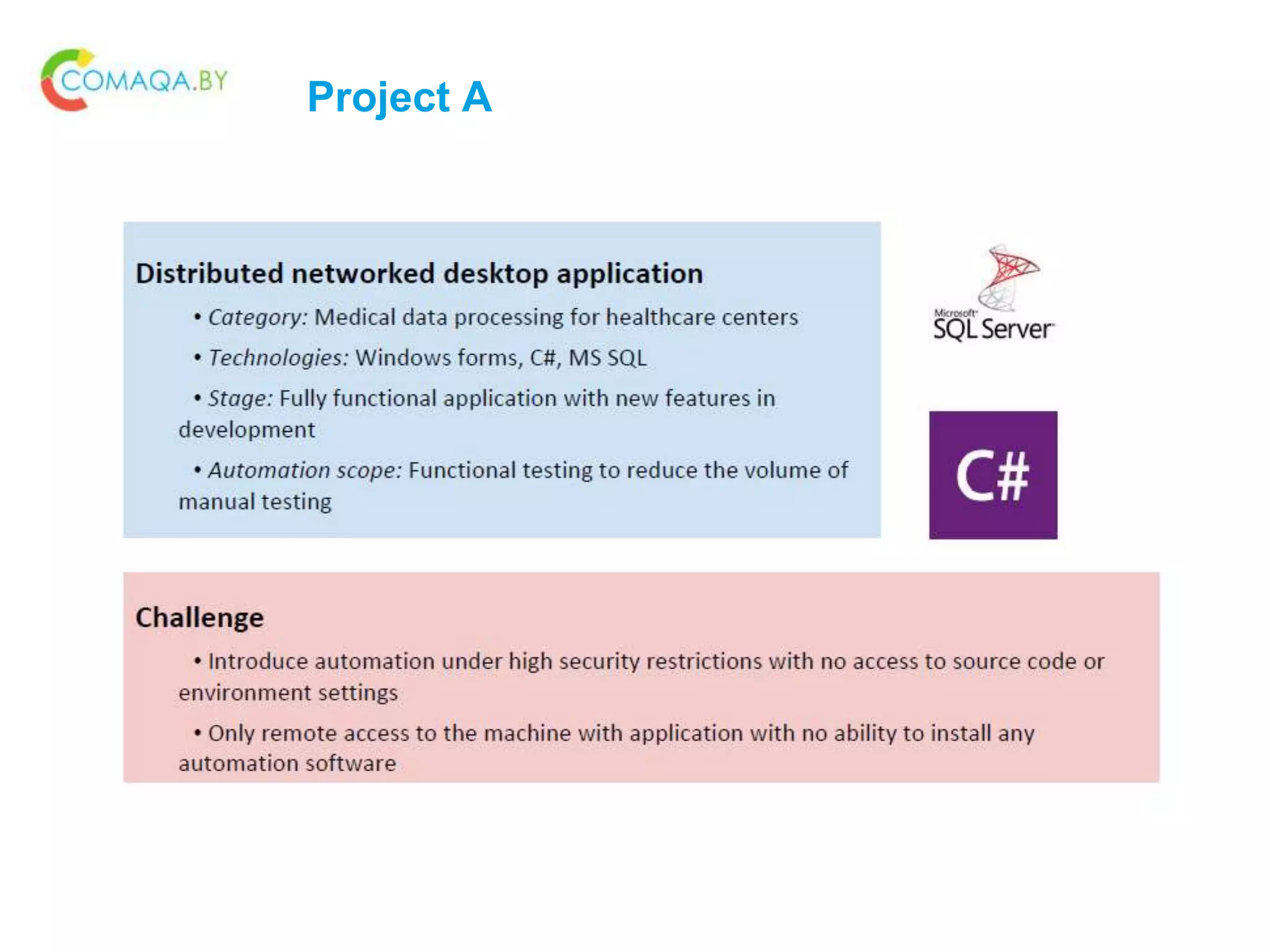

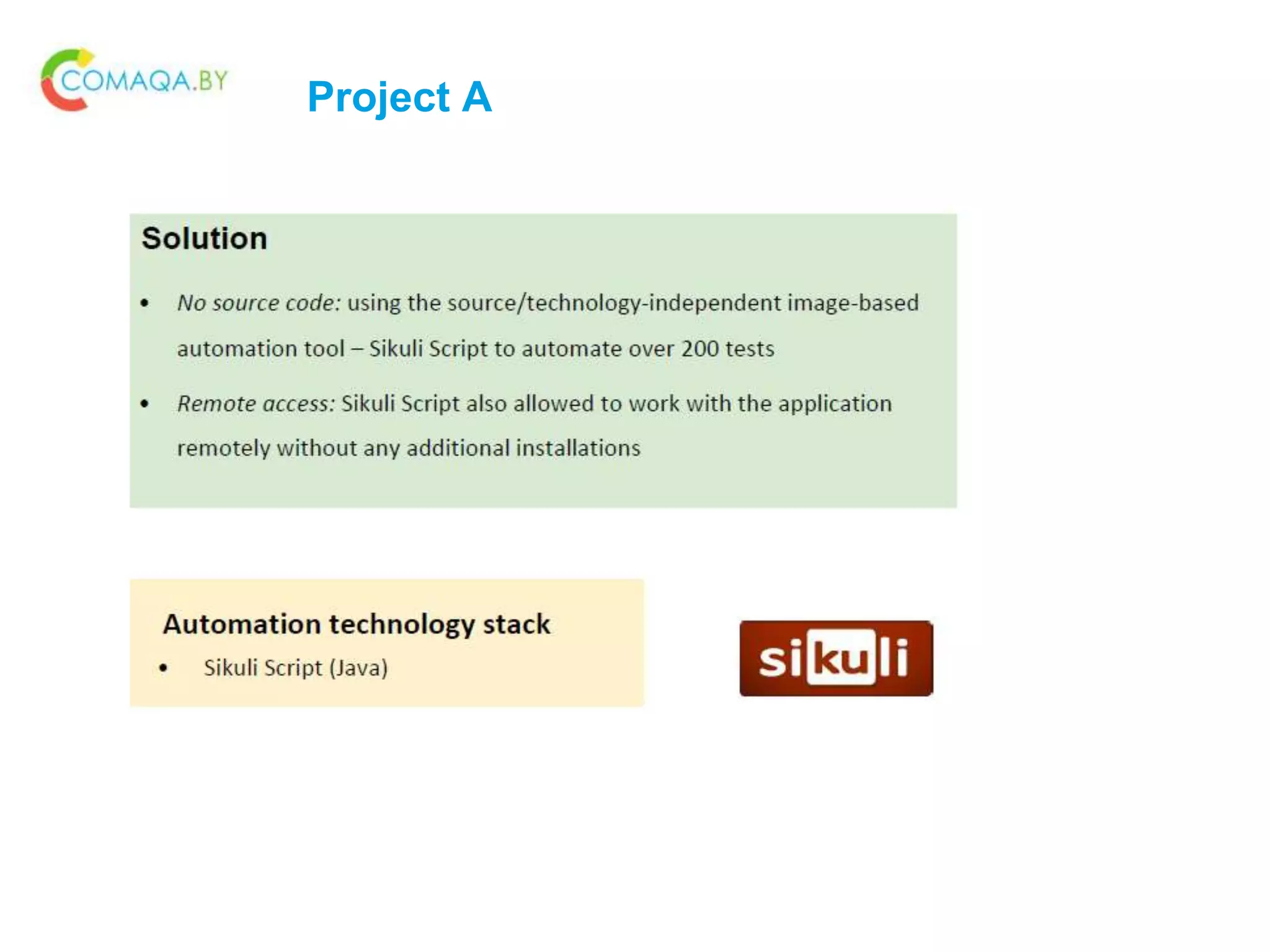

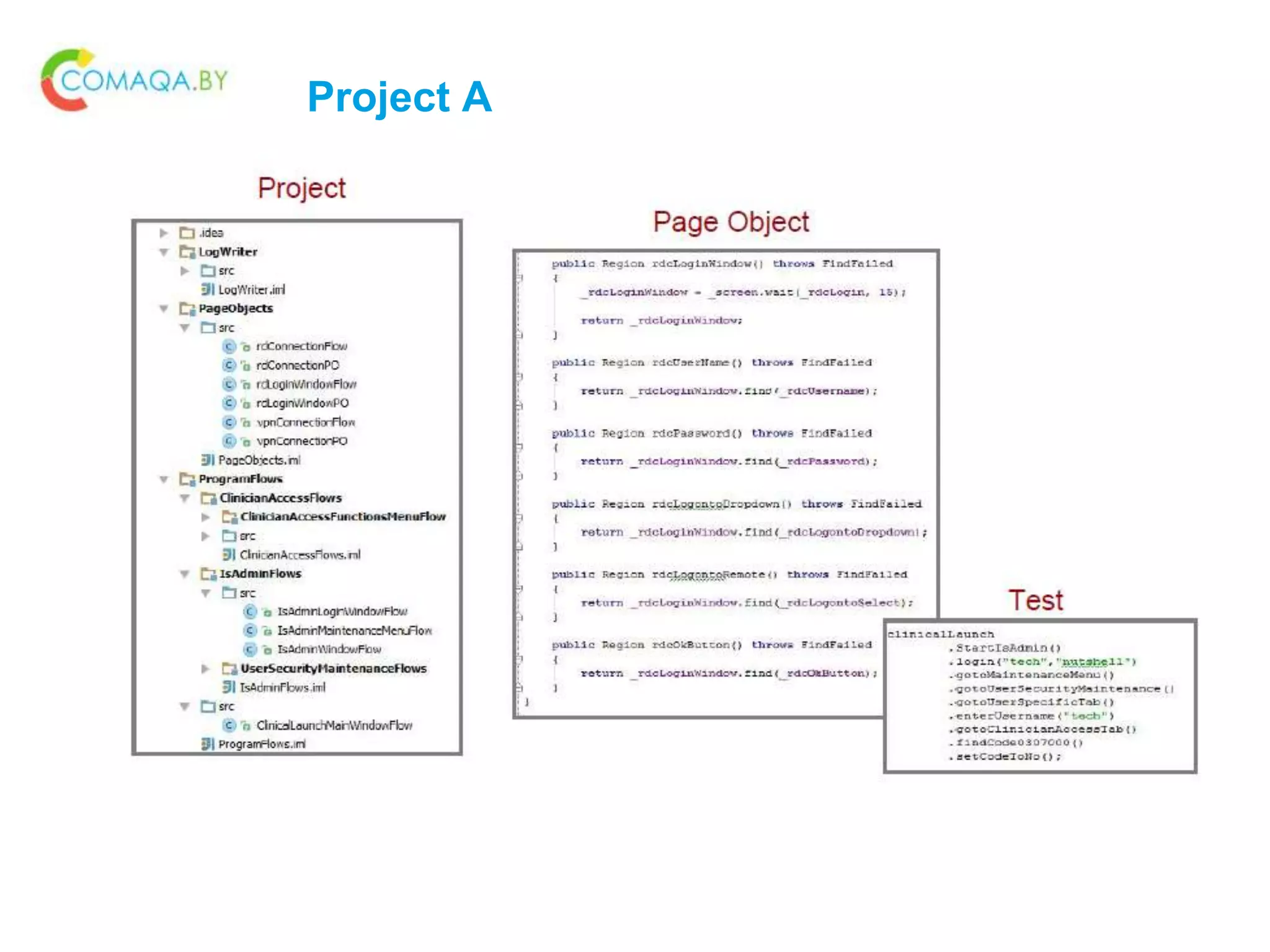

The document is a comprehensive analysis of automation tools for desktop applications. It categorizes and compares 15 tools against three groups of criteria: stakeholder-oriented, technical, and mixed. It also describes methodologies for selecting the tools and criteria appropriate to a specific project, and complements them with summarized scores and an overview of trends in automation. It closes with the implications for future trends and recommendations for choosing a suitable automation solution.