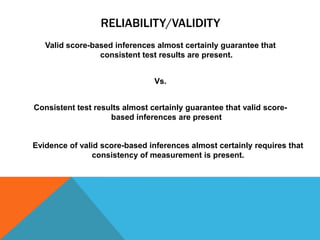

The document discusses validity in assessment and outlines three kinds of validity evidence: content-related, criterion-related, and construct-related. It stresses that assessments must align with the curricular aims they are meant to measure. It also introduces ways of gathering validity evidence, including intervention studies and differential-population studies. The text emphasizes that validity is not a property of the test itself. Instead, it is established through accumulated evidence and peer review.