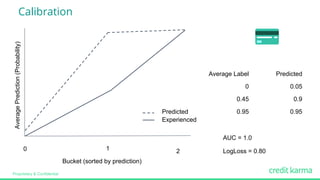

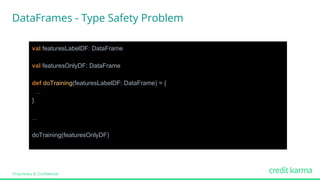

This presentation is a case study on building recommendation models with Spark DataFrames at Credit Karma, where the models help users find suitable financial products. It stresses the role of evaluation metrics when comparing competing models and introduces type-safe wrappers for DataFrames, whose rows are otherwise dynamically typed. The key takeaways are to choose evaluation metrics that fit the problem and to enforce type safety when handling data.

![Long term benefit](https://image.slidesharecdn.com/224abdullaalqawasmeh-170615202050/85/Building-Competing-Models-Using-Apache-Spark-DataFrames-with-Abdulla-Al-Qawasmeh-8-320.jpg)

Proprietary & Confidential

Long term benefit

| Product Features | P(Interest) | P(Approval) | E[Benefit] |
| --- | --- | --- | --- |
| 2% Rewards, $300 Bonus | Very High | Average | Average |
| 1% Rewards, 0% APR for 6m | High | Average | Very High |
| 0% Rewards, $20 Annual Fee | Very Low | Very High | Very Low |

![What about other financial products?](https://image.slidesharecdn.com/224abdullaalqawasmeh-170615202050/85/Building-Competing-Models-Using-Apache-Spark-DataFrames-with-Abdulla-Al-Qawasmeh-11-320.jpg)

What about other financial products?

| Product Features | P(Interest) | P(Approval) | E[Benefit] |
| --- | --- | --- | --- |
| Unsecured, 7.5% APR | High | High | Very High |
| 1% Rewards, 0% APR for 6m (25% APR after) | High | Average | High |

![Type Safety Wrappers](https://image.slidesharecdn.com/224abdullaalqawasmeh-170615202050/85/Building-Competing-Models-Using-Apache-Spark-DataFrames-with-Abdulla-Al-Qawasmeh-25-320.jpg)

Type Safety Wrappers

Row Wrapper

    final case class FeaturesLabelDataRow(facts: Facts, label: Double)
      extends DataRow {
      // Converts this typed record into an untyped Spark Row.
      def toRow: Row
    }

    object FeaturesLabelDataRow {
      // Rebuilds the typed record from an untyped Spark Row.
      def parseRow(row: Row): FeaturesLabelDataRow
    }

DataFrame Wrapper

    final case class FeaturesLabelData(dataFrame: DataFrame)

    object FeaturesLabelData {
      // Builds the wrapped DataFrame from typed rows and the row type's schema.
      def apply(rowDataRDD: RDD[FeaturesLabelDataRow]): FeaturesLabelData = {
        val schema: StructType = FeaturesLabelDataRow.getSchema
        FeaturesLabelData(sqlContext.createDataFrame(rowDataRDD, schema))
      }
    }
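
The slide gives only signatures for the two wrappers, so the following is a minimal sketch of how they might be filled in, not the speaker's implementation. Several pieces are assumptions made for illustration: the two fields of `Facts` (the slide does not define it), the column names in `schema`, the use of `SparkSession` in place of the slide's `sqlContext`, the explicit `map(_.toRow)` before `createDataFrame`, and the names `fromRows` and `rows`. The `DataRow` parent type is omitted because the slide does not show it.

```scala
import org.apache.spark.rdd.RDD
import org.apache.spark.sql.{DataFrame, Row, SparkSession}
import org.apache.spark.sql.types.{DoubleType, StructField, StructType}

// Hypothetical stand-in for the talk's `Facts` type; the real fields are not shown.
final case class Facts(creditScore: Double, income: Double)

// Row wrapper: a typed record that knows how to become an untyped Spark Row.
final case class FeaturesLabelDataRow(facts: Facts, label: Double) {
  // Field order here must match the order of fields in `schema` below.
  def toRow: Row = Row(facts.creditScore, facts.income, label)
}

object FeaturesLabelDataRow {
  // Assumed column names; the single place where the DataFrame layout is defined.
  val schema: StructType = StructType(Seq(
    StructField("creditScore", DoubleType, nullable = false),
    StructField("income", DoubleType, nullable = false),
    StructField("label", DoubleType, nullable = false)
  ))

  // Reads columns by position, so it works for any Row laid out like `schema`.
  def parseRow(row: Row): FeaturesLabelDataRow =
    FeaturesLabelDataRow(Facts(row.getDouble(0), row.getDouble(1)), row.getDouble(2))
}

// DataFrame wrapper: the type name records which schema the DataFrame carries.
final case class FeaturesLabelData(dataFrame: DataFrame) {
  // Typed view of the underlying rows.
  def rows: RDD[FeaturesLabelDataRow] =
    dataFrame.rdd.map(FeaturesLabelDataRow.parseRow)
}

object FeaturesLabelData {
  // Builds the wrapped DataFrame from typed rows using the shared schema.
  def fromRows(rowData: RDD[FeaturesLabelDataRow])
              (implicit spark: SparkSession): FeaturesLabelData =
    FeaturesLabelData(
      spark.createDataFrame(rowData.map(_.toRow), FeaturesLabelDataRow.schema))
}
```

With wrappers like these, a function can declare that it takes a `FeaturesLabelData` rather than a bare `DataFrame`, so passing a DataFrame with a different layout becomes a compile-time error instead of a runtime failure inside a job. The conversion between `Row` and the case class stays confined to `toRow` and `parseRow`.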