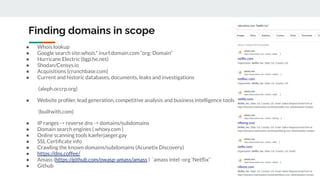

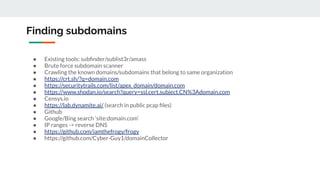

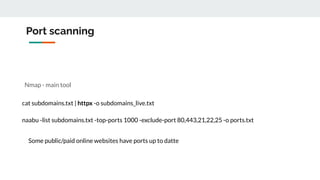

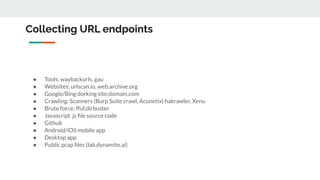

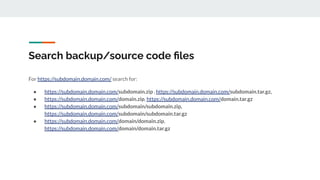

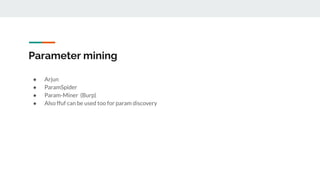

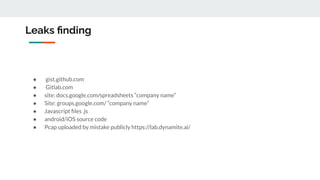

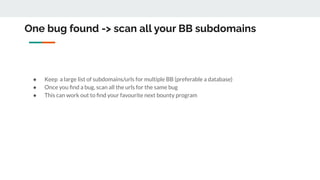

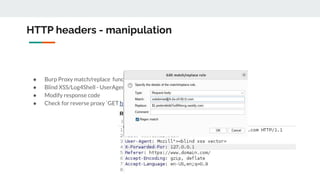

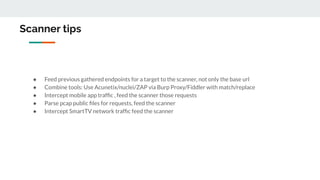

This document provides tips and techniques for reconnaissance in a bug bounty program. It covers researching domains and subdomains with Whois lookups and with tools such as Shodan, Censys, and Amass. It also covers finding additional subdomains, port scanning, collecting URLs, searching for backups and source code, parameter mining, and using scanners more effectively. The goal is to expand the set of assets under test iteratively, with each step feeding the next, in order to uncover vulnerabilities.
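As a rough illustration of the parameter mining step mentioned above, the sketch below counts the query parameter names that appear in a list of already collected URLs. The function name `mine_parameters` and the sample URLs are hypothetical and not taken from the document. It uses only the Python standard library and is one possible approach, not the document's own method.

```python
from collections import Counter
from urllib.parse import urlsplit, parse_qsl


def mine_parameters(urls):
    """Count how often each query parameter name appears across the URLs."""
    counts = Counter()
    for url in urls:
        query = urlsplit(url).query
        # keep_blank_values=True keeps parameters with empty values, e.g. "?debug="
        for name, _value in parse_qsl(query, keep_blank_values=True):
            counts[name] += 1
    return counts


# Hypothetical sample input; in practice this would be the collected URL list.
sample_urls = [
    "https://example.com/search?q=test&page=2",
    "https://example.com/item?id=10&debug=",
    "https://api.example.com/v1/users?id=7&redirect=/home",
]

params = mine_parameters(sample_urls)
for name, count in params.most_common():
    print(name, count)
```

Parameter names that recur across hosts (here `id`) or that hint at sensitive behaviour (such as `redirect` or `debug`) are natural candidates for closer manual testing, within the program's allowed scope.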