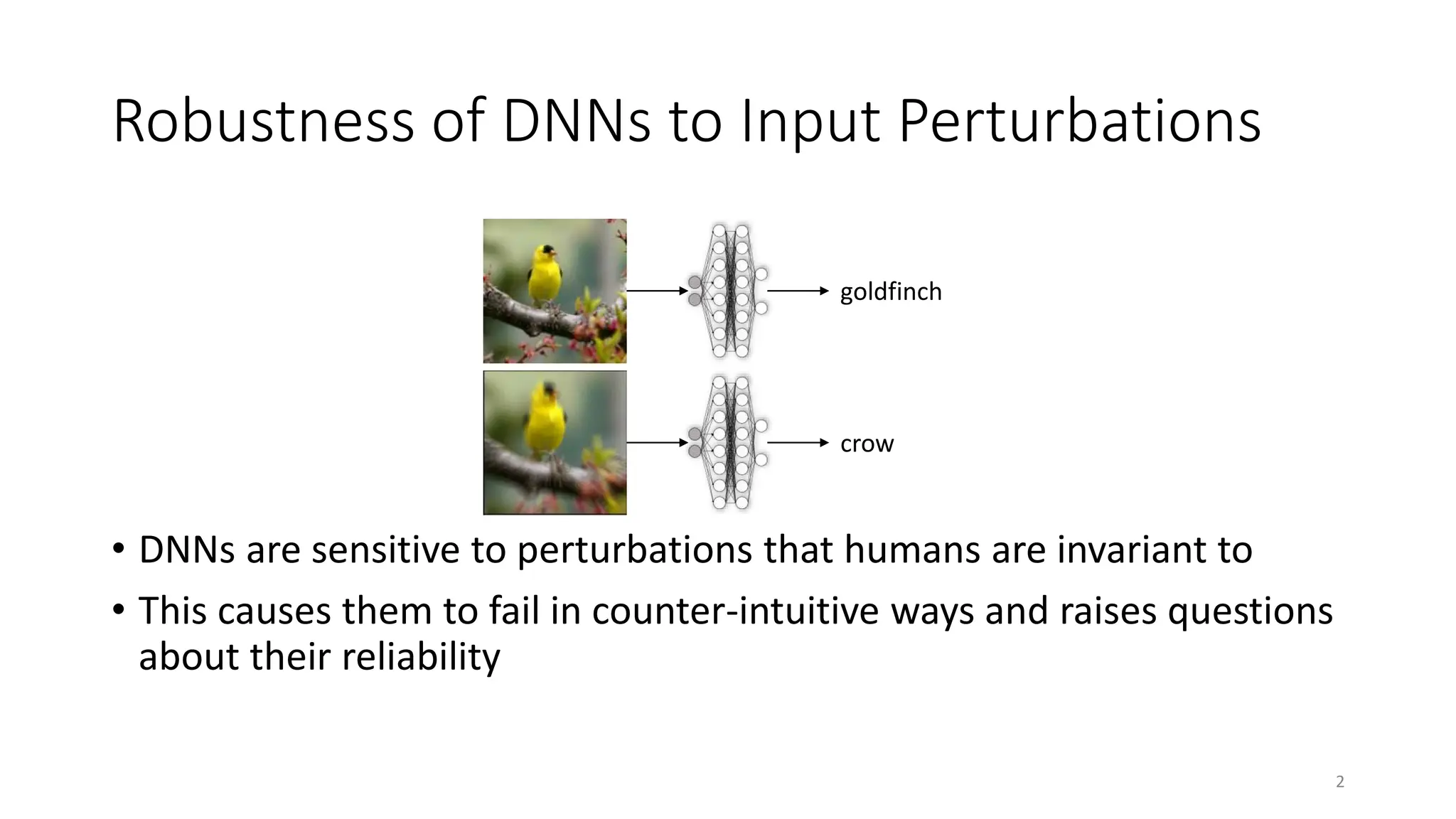

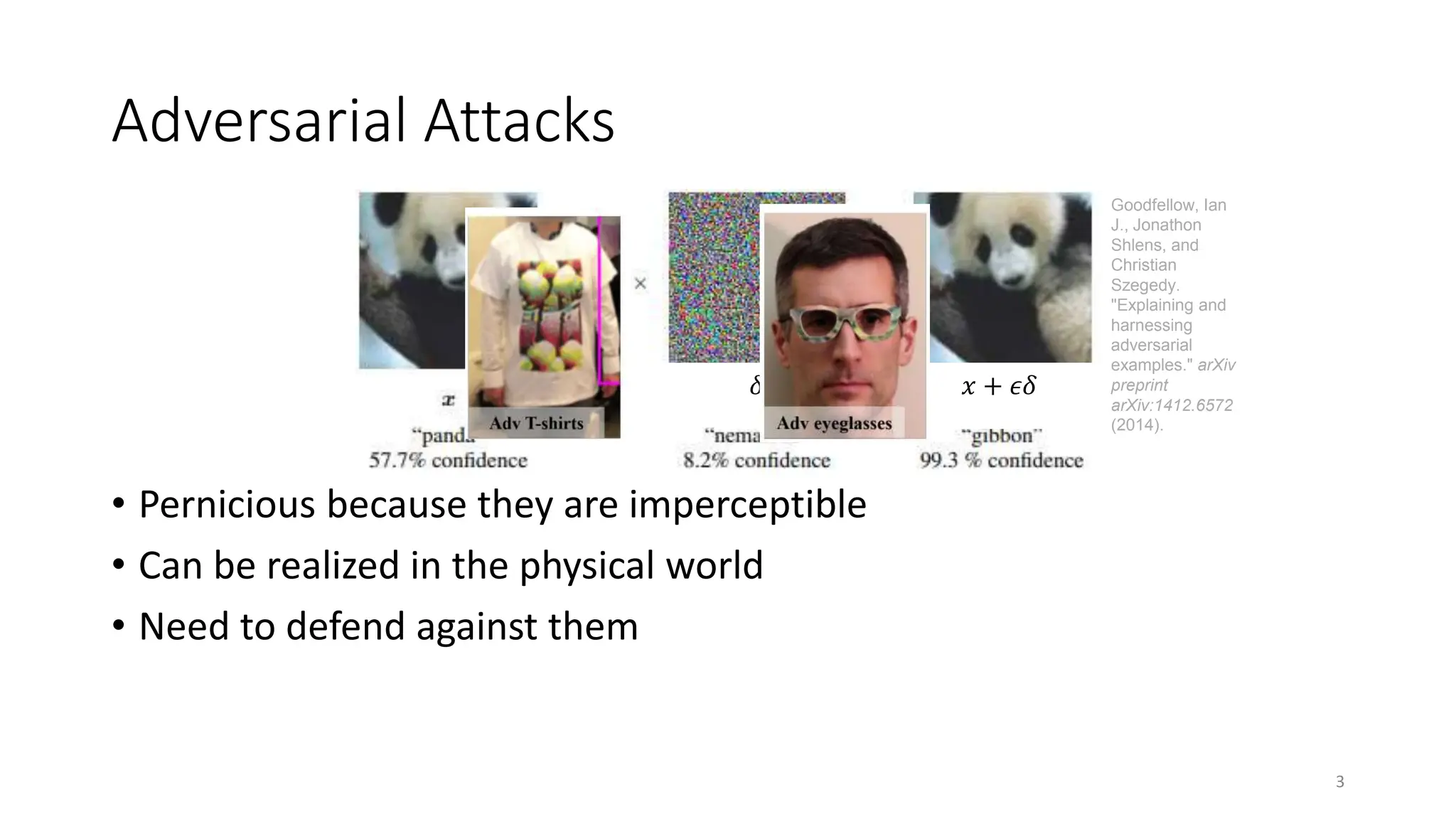

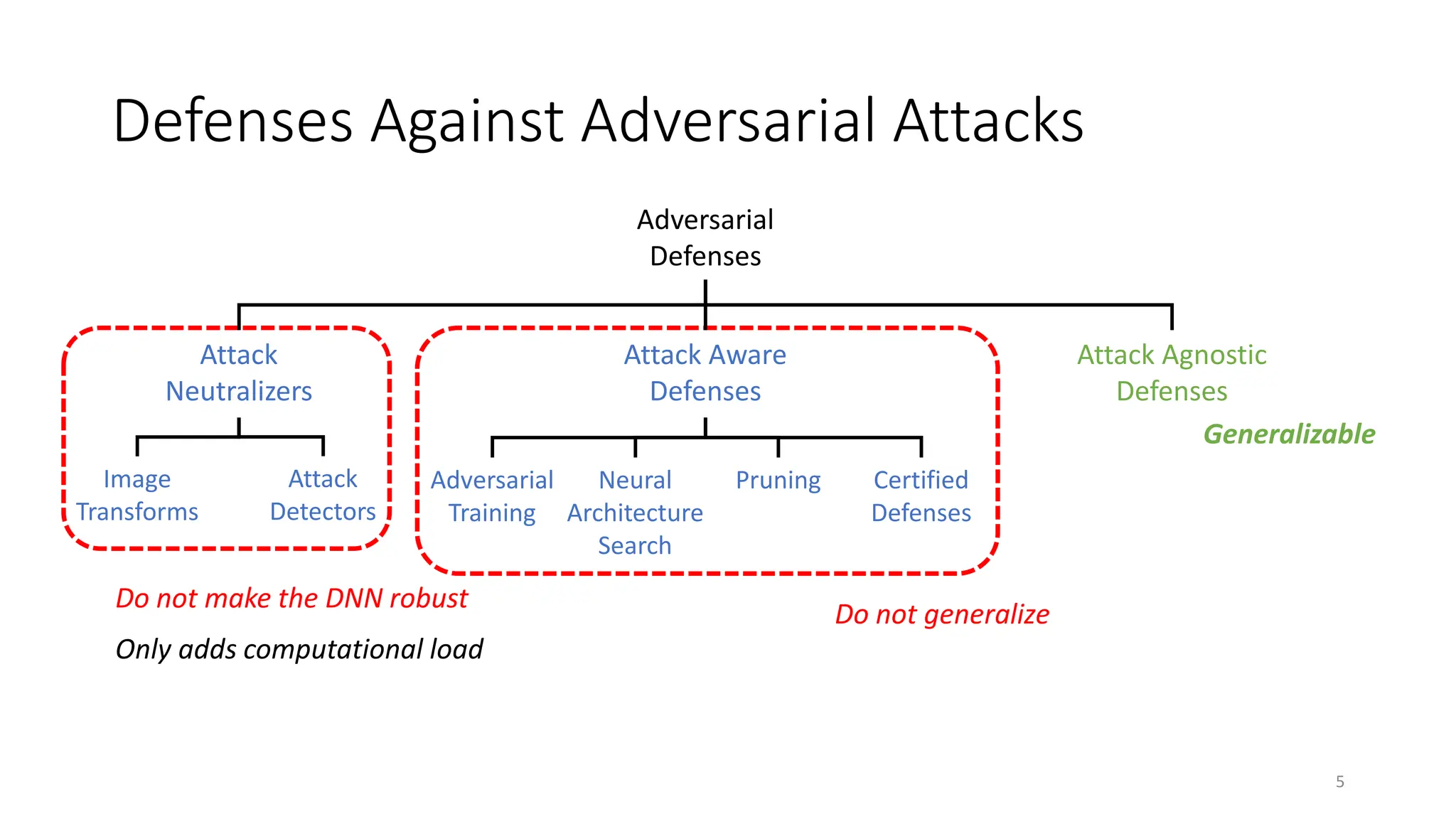

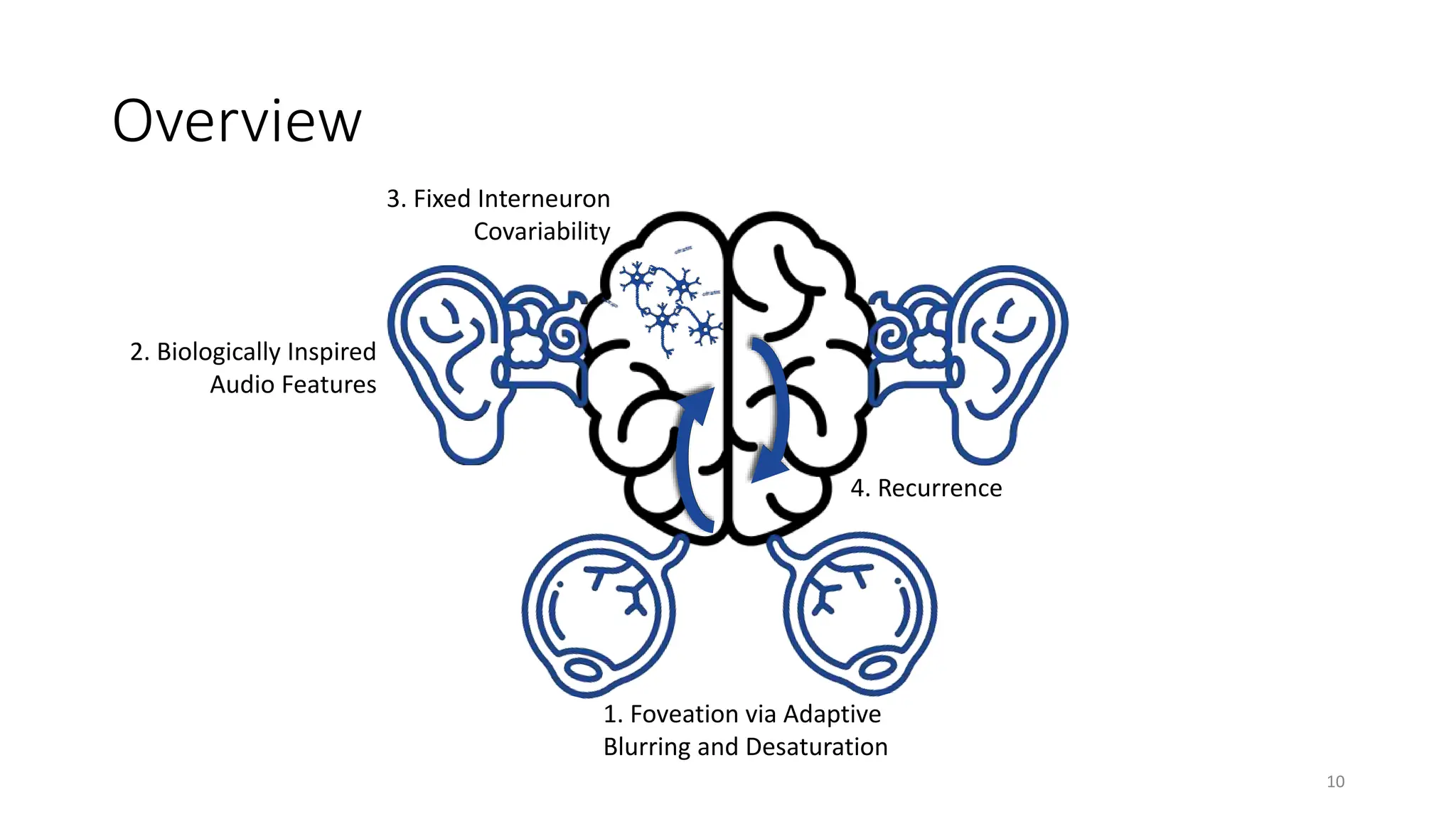

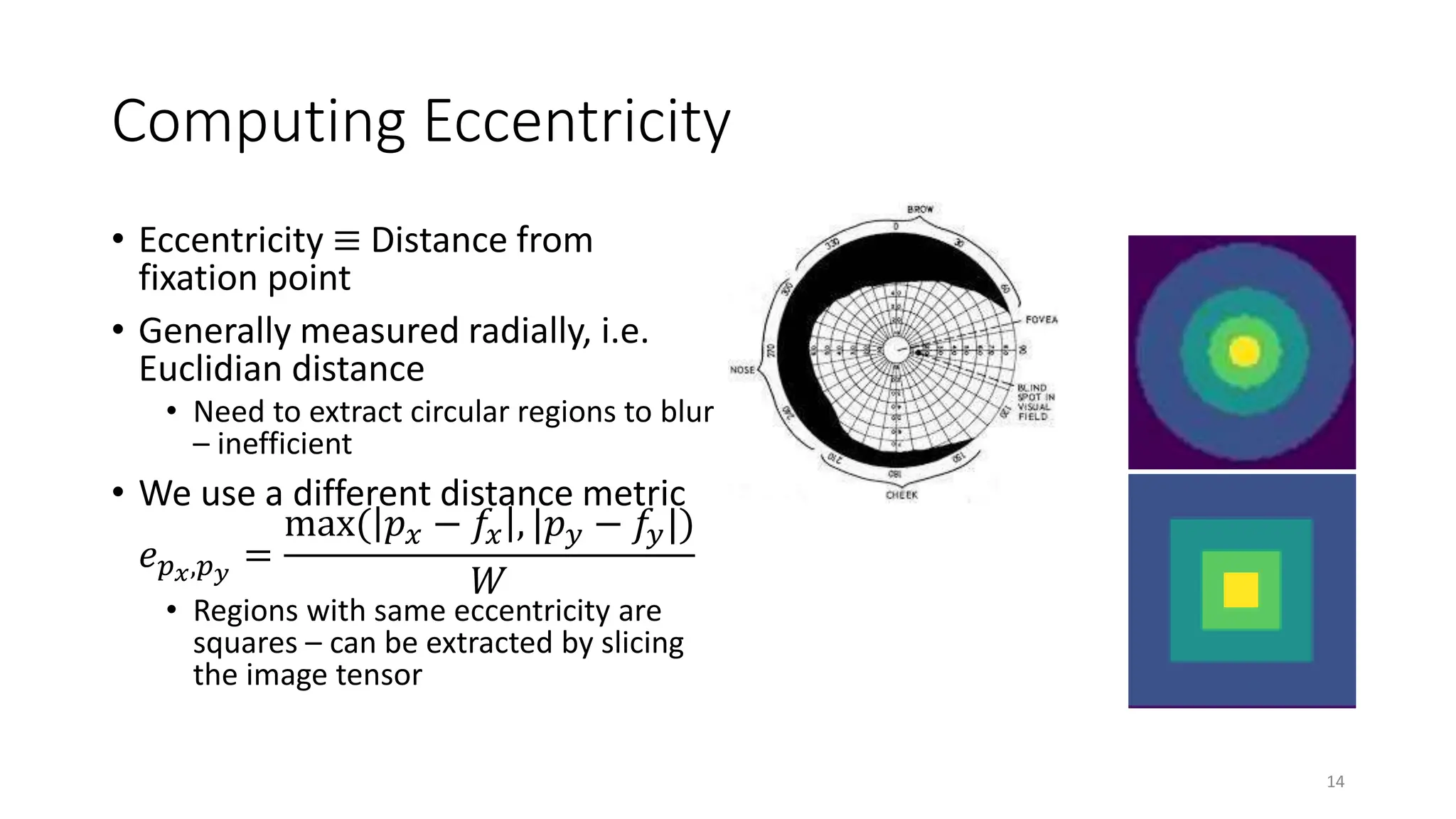

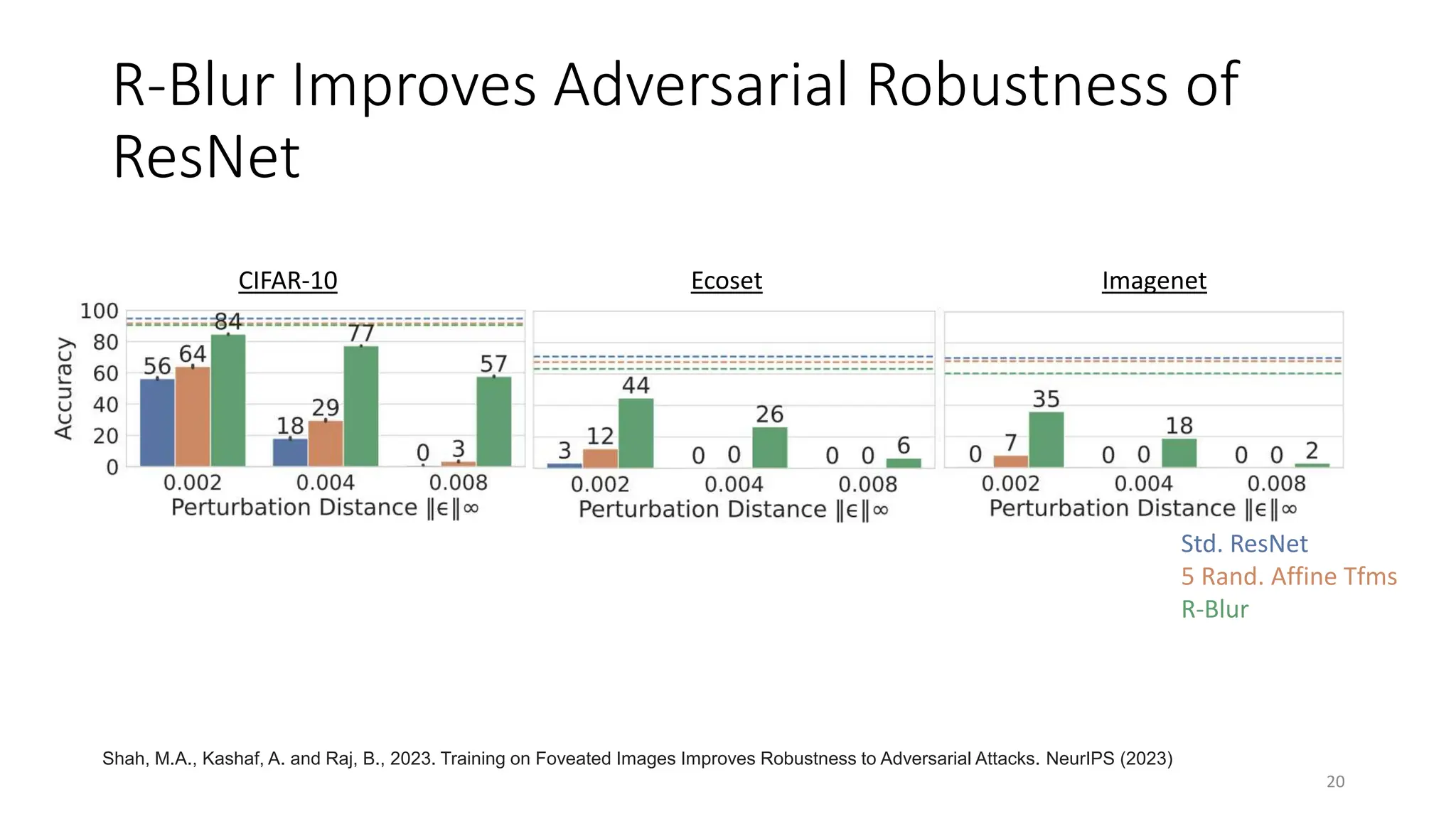

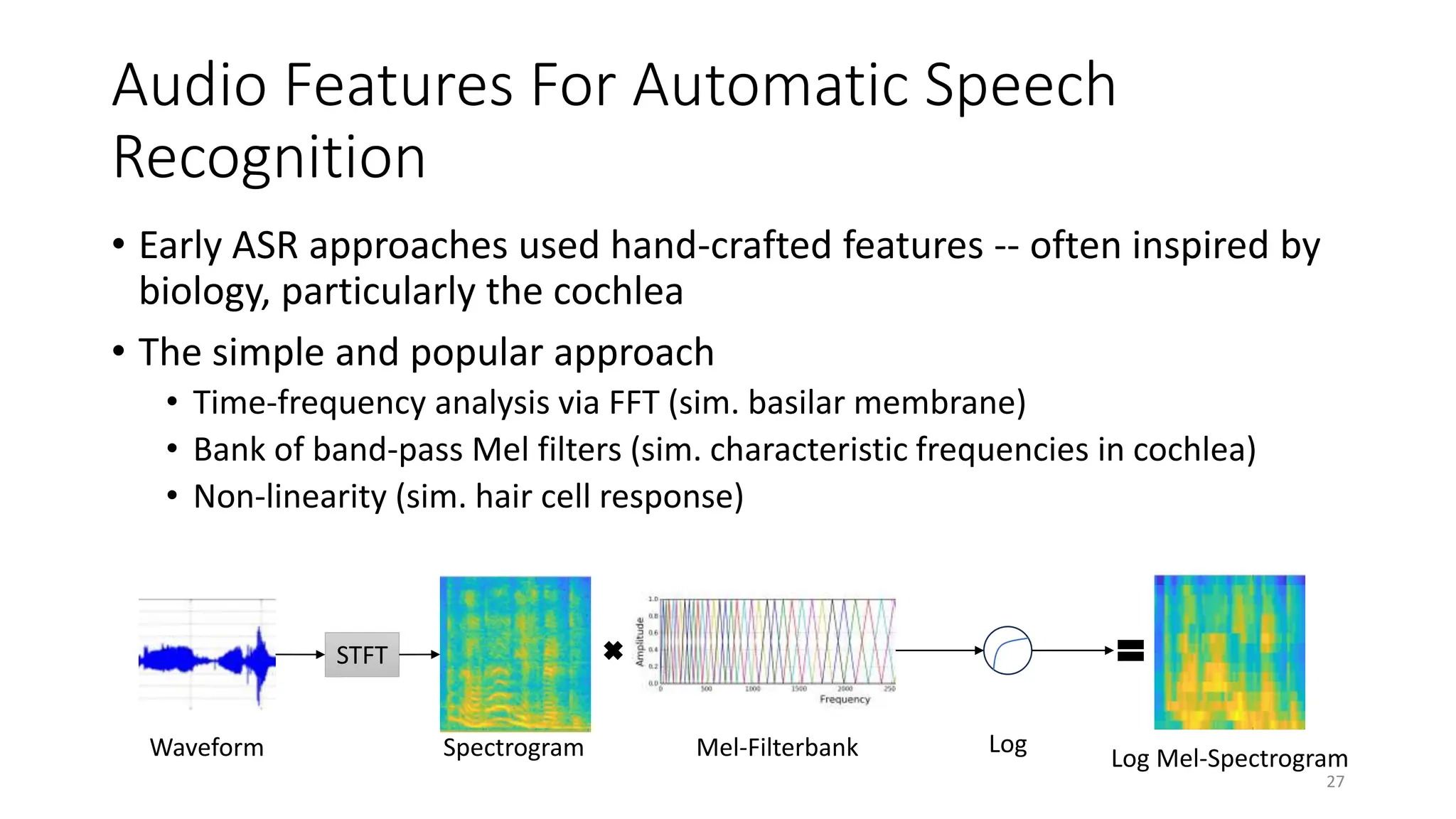

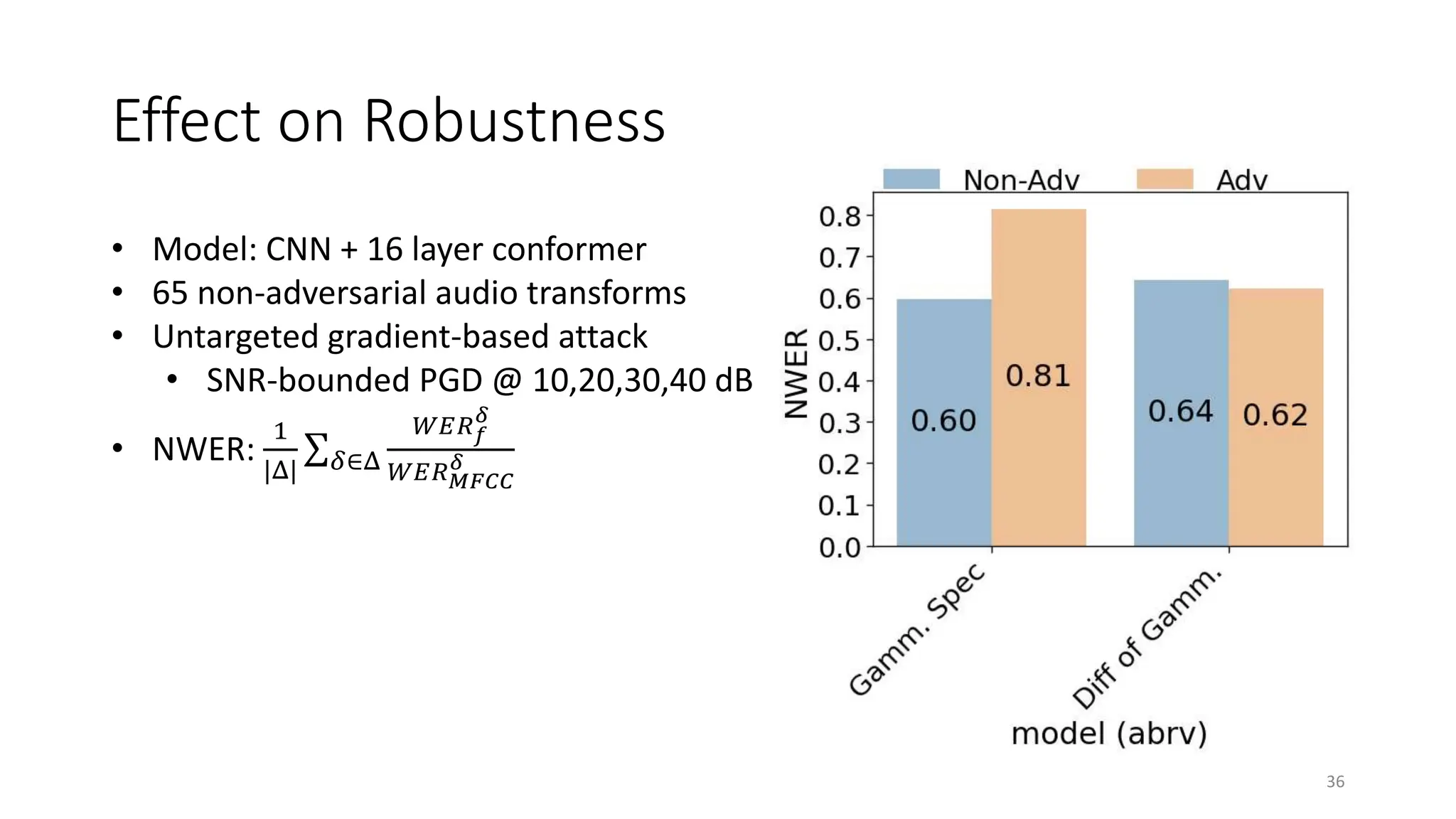

The document discusses biologically inspired strategies for improving the adversarial robustness of deep neural networks (DNNs), specifically through methods like foveation via adaptive blurring and desaturation. It emphasizes the importance of aligning DNNs with human perception to mitigate vulnerabilities against adversarial attacks and presents various biological principles that can enhance robustness. Key findings indicate that techniques like r-blur and biologically plausible audio features can significantly improve DNN performance against both adversarial and non-adversarial perturbations.

![Features Evaluated So Far](https://image.slidesharecdn.com/mit-talk-0412-240429055509-f5cb45c3/75/Biologically-Inspired-Methods-for-Adversarially-Robust-Deep-Learning-28-2048.jpg)

| Feature | Salient Feature |
| --- | --- |
| Log Spectrogram | Time-frequency representation + non-linearity |
| Log Mel Spectrogram | Triangular RFs with CFs on the Mel scale |
| Cochleagram [Feather+23] | Gammatone RFs with CFs on the ERB scale + power-law non-linearity |
| Gammatone Spectrogram | Same as Cochleagram but computed by transforming the STFT |
| Power Normalized Coefficients [Kim+10] | Power-normalized Gammatone RFs with CFs on the ERB scale + temporal masking + noise suppression + power-law non-linearity |
| Difference of Gammatones | Lateral suppression by frequencies around the CF |
| Frequency Masked Spectrogram | Simulates simultaneous frequency masking |

![Lateral Suppression via Difference of Gammatone Filters](https://image.slidesharecdn.com/mit-talk-0412-240429055509-f5cb45c3/75/Biologically-Inspired-Methods-for-Adversarially-Robust-Deep-Learning-29-2048.jpg)

• Lateral suppression:

  • The response at a given CF may be suppressed by the energy at adjacent frequencies [Stern & Morgan 12]

  • Enhances responses to spectral changes and reduces the impact of noise

• Proposal: take a difference of Gammatone filterbanks

![Difference of Gammatone Filters](https://image.slidesharecdn.com/mit-talk-0412-240429055509-f5cb45c3/75/Biologically-Inspired-Methods-for-Adversarially-Robust-Deep-Learning-30-2048.jpg)

• Create 2 Gammatone frequency response curves with different widths, and subtract.

$$G_d[k] = G_1[k] - G_2[k]$$

• Normalize by the sum of positive values.

$$\hat{G}_d[k, t] = \frac{G_d[k, t]}{\sum_t \max\{G_d[k, t],\ 0\}}$$

Figure: filter frequency response curves (x-axis: Frequency; y-axis: Amplitude).
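The construction above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the speaker's implementation: the gammatone magnitude response is approximated by the common closed form $(1 + (\Delta f / b)^2)^{-n/2}$ with order $n = 4$, each curve is scaled to unit sum before subtraction (otherwise the wider curve would dominate everywhere and the difference would have no positive part), and the function names, tap count, bin spacing, and bandwidths are all hypothetical.

```python
import numpy as np

def gammatone_response(offsets_hz, bandwidth_hz, order=4):
    """Approximate gammatone magnitude response around its centre frequency.

    Assumption: the standard closed-form approximation, not a full filter design.
    """
    return (1.0 + (offsets_hz / bandwidth_hz) ** 2) ** (-order / 2.0)

def dog_kernel(n_taps=31, bin_hz=31.25, narrow_hz=50.0, wide_hz=150.0):
    """Difference of two gammatone curves, normalized by the sum of positive values."""
    # Frequency offsets of each tap relative to the centre frequency.
    offsets = (np.arange(n_taps) - n_taps // 2) * bin_hz
    g1 = gammatone_response(offsets, narrow_hz)
    g2 = gammatone_response(offsets, wide_hz)
    # Assumption: scale both curves to unit sum so the narrow one peaks higher.
    g1 = g1 / g1.sum()
    g2 = g2 / g2.sum()
    g_d = g1 - g2  # positive centre lobe, negative (suppressive) flanks
    return g_d / np.maximum(g_d, 0.0).sum()

kernel = dog_kernel()
```

The resulting kernel has an excitatory centre and suppressive flanks, which is the shape lateral suppression calls for.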

![Applying DoG Filterbank](https://image.slidesharecdn.com/mit-talk-0412-240429055509-f5cb45c3/75/Biologically-Inspired-Methods-for-Adversarially-Robust-Deep-Learning-32-2048.jpg)

• Convolve the DoG filterbank over the STFT $S_x$

$$S_x^{G} = S_x * G$$

• Half-wave rectify

$$S_x^{G+}[k, t] = \max\{S_x^{G}[k, t],\ 0\}$$

• Non-linear compression

$$\hat{S}_x[k, t] = \left(S_x^{G+}[k, t]\right)^{0.3}$$
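The three steps (convolve, half-wave rectify, compress) can be sketched as below. This is an illustrative sketch only: it assumes a single 1-D kernel convolved along the frequency axis of a magnitude spectrogram of shape (frequency, time), whereas the talk may use a bank of kernels. The name `apply_dog` and the three-tap example kernel are hypothetical stand-ins.

```python
import numpy as np

def apply_dog(spec_mag, kernel, power=0.3):
    """Convolve along frequency, half-wave rectify, then power-law compress.

    spec_mag: non-negative array of shape (n_freq_bins, n_frames).
    kernel:   1-D difference-of-gammatones kernel (shorter than n_freq_bins).
    """
    # Step 1: convolve each time frame (column) with the kernel over frequency.
    filtered = np.apply_along_axis(
        lambda column: np.convolve(column, kernel, mode="same"), 0, spec_mag
    )
    # Step 2: half-wave rectification discards suppressed (negative) responses.
    rectified = np.maximum(filtered, 0.0)
    # Step 3: power-law compression with exponent 0.3.
    return rectified ** power

# Hypothetical toy kernel: excitatory centre with suppressive neighbours.
toy_kernel = np.array([-0.5, 1.0, -0.5])
spectrogram = np.zeros((257, 4))
spectrogram[100, :] = 1.0  # a single spectral peak in every frame
features = apply_dog(spectrogram, toy_kernel)
```

In this toy input the isolated peak survives while its immediate neighbours are rectified to zero, which illustrates how the filter sharpens spectral changes.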

![Frequency Masked Spectrogram](https://image.slidesharecdn.com/mit-talk-0412-240429055509-f5cb45c3/75/Biologically-Inspired-Methods-for-Adversarially-Robust-Deep-Learning-36-2048.jpg)

• Estimate the masking threshold for each (FFT) frequency [Qin+19, Lin+15]

• Zero-out regions of the spectrogram where the Power-Spectral Density (PSD) falls below the threshold

Figure: two spectrogram panels (x-axis: Time (window); y-axis: Frequency (FFT bin)).

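![Estimating the Masking Threshold [Qin+19]](https://image.slidesharecdn.com/mit-talk-0412-240429055509-f5cb45c3/75/Biologically-Inspired-Methods-for-Adversarially-Robust-Deep-Learning-37-2048.jpg)

1. Smoothed Normalized PSD

$$p_x[k] = 20\log_{10}\left|\tfrac{1}{N}\, s_x[k]\right|$$

$$\bar{p}_x[k] = 96 - \max_{k'} p_x[k'] + p_x[k]$$

$$p_x^{m}[k] = 10\log_{10}\left(10^{\bar{p}_x[k-1]/10} + 10^{\bar{p}_x[k]/10} + 10^{\bar{p}_x[k+1]/10}\right)$$

2. Two-sided spreading function

$$SF[i, j] = \begin{cases} 27\,\Delta b_{ij} & \Delta b_{ij} \le 0 \\ G[i] \cdot \Delta b_{ij} & \text{otherwise} \end{cases}$$

$$\Delta b_{ij} = \mathrm{bark}(f_j) - \mathrm{bark}(f_i)$$

$$G[i] = -27 + 0.37\max\{p_x^{m}[i] - 40,\ 0\}$$

Figure: a masker and a maskee.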

![Estimating the Masking Threshold (cont.)](https://image.slidesharecdn.com/mit-talk-0412-240429055509-f5cb45c3/75/Biologically-Inspired-Methods-for-Adversarially-Robust-Deep-Learning-38-2048.jpg)

3. Pairwise Threshold

$$T[i, j] = p_x^{m}[i] + \Delta_m[i] + SF[i, j]$$

$$\Delta_m[i] = -6.025 - 0.275 \cdot \mathrm{bark}(f_i)$$

4. Global Threshold

$$\theta_x[k] = 10\log_{10}\left(10^{ATH(k)/10} + \sum_i 10^{T[i, k]/10}\right)$$

Figure: PSD over time.
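Steps 1 to 4, followed by the zeroing step of the frequency masked spectrogram, can be sketched for a single STFT frame as below. This is a simplified sketch under several assumptions that are not stated in the slides: every FFT bin is treated as a potential masker (the cited method selects maskers more carefully), the 27-per-Bark branch of the spreading function is applied when $\Delta b_{ij} \le 0$, the Bark scale uses Zwicker's arctangent formula, and $ATH$ (the absolute threshold of hearing) uses Terhardt's approximation. All function names are hypothetical.

```python
import numpy as np

def bark(f_hz):
    # Assumption: Zwicker's approximation of the Bark scale.
    return 13.0 * np.arctan(0.00076 * f_hz) + 3.5 * np.arctan((f_hz / 7500.0) ** 2)

def ath_db(f_hz):
    # Assumption: Terhardt's approximation of the absolute threshold of hearing.
    khz = np.maximum(f_hz, 20.0) / 1000.0  # clamp to avoid the singularity at 0 Hz
    return 3.64 * khz ** -0.8 - 6.5 * np.exp(-0.6 * (khz - 3.3) ** 2) + 1e-3 * khz ** 4

def masking_threshold(frame_fft, sample_rate):
    """Return (normalized PSD, global masking threshold), both in dB, for one frame."""
    n_fft = 2 * (len(frame_fft) - 1)  # frame_fft holds the one-sided spectrum
    freqs = np.arange(len(frame_fft)) * sample_rate / n_fft
    # Step 1: PSD in dB, normalized to a 96 dB peak, then smoothed over 3 bins.
    p = 20.0 * np.log10(np.abs(frame_fft) / n_fft + 1e-20)
    p_bar = 96.0 - p.max() + p
    power = np.pad(10.0 ** (p_bar / 10.0), 1)  # zero-pad both edges
    p_m = 10.0 * np.log10(power[:-2] + power[1:-1] + power[2:])
    # Step 2: two-sided spreading function; rows index maskers i, columns maskees j.
    b = bark(freqs)
    delta_b = b[None, :] - b[:, None]
    slope = -27.0 + 0.37 * np.maximum(p_m - 40.0, 0.0)
    sf = np.where(delta_b <= 0, 27.0 * delta_b, slope[:, None] * delta_b)
    # Step 3: pairwise threshold T[i, j].
    t = p_m[:, None] + (-6.025 - 0.275 * b)[:, None] + sf
    # Step 4: global threshold combines the ATH with all maskers in the power domain.
    theta = 10.0 * np.log10(10.0 ** (ath_db(freqs) / 10.0) + (10.0 ** (t / 10.0)).sum(axis=0))
    return p_bar, theta

def frequency_mask(frame_fft, sample_rate):
    """Zero out bins whose normalized PSD falls below the masking threshold."""
    p_bar, theta = masking_threshold(frame_fft, sample_rate)
    return np.where(p_bar >= theta, frame_fft, 0.0)

# Toy frame: a strong 1 kHz component and a component 60 dB weaker three bins above it.
frame = np.zeros(257, dtype=complex)
frame[32] = 256.0
frame[35] = 0.256
masked = frequency_mask(frame, sample_rate=16000)
```

In this toy frame the strong component stays above its own threshold, while the weak neighbour falls below the threshold raised by the masker and is zeroed.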

![Key Takeaway and Future Work](https://image.slidesharecdn.com/mit-talk-0412-240429055509-f5cb45c3/75/Biologically-Inspired-Methods-for-Adversarially-Robust-Deep-Learning-42-2048.jpg)

• Certain biological phenomena (lateral suppression) improve robustness to adversarial attacks

  • While others (temporal masking) do not

• Gammatone FB generally improves robustness

  • The gammatone spectrogram has the lowest WER on clean data and under non-adversarial perturbations

• Simulate detailed cochlear models (e.g. CARFAC [Lyon 12], Seneff)

  • Creating an efficient PyTorch implementation is taking time.

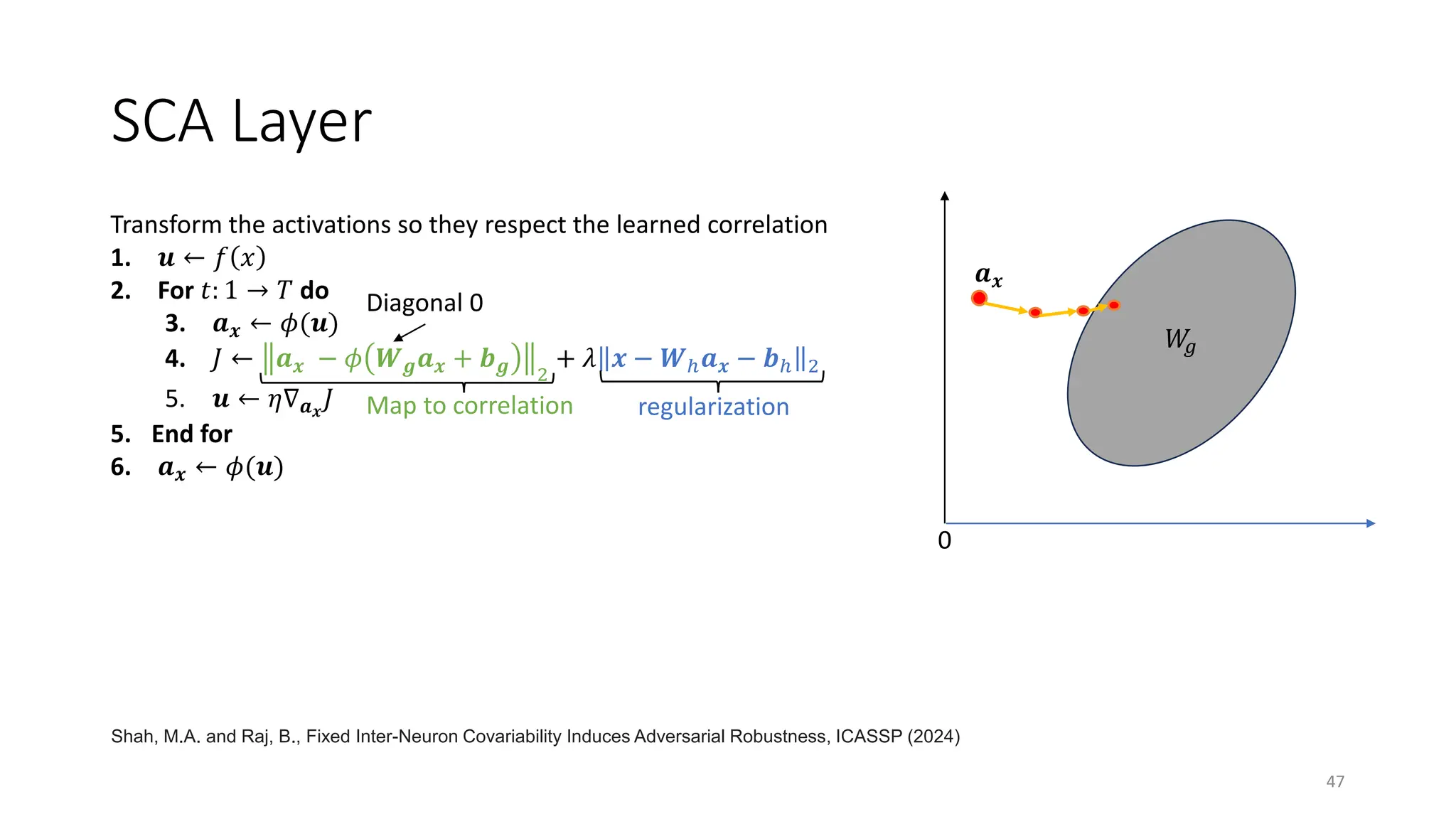

![Fixed Inter-Neuron Covariability Induces Adversarial Robustness](https://image.slidesharecdn.com/mit-talk-0412-240429055509-f5cb45c3/75/Biologically-Inspired-Methods-for-Adversarially-Robust-Deep-Learning-44-2048.jpg)

• Inter-neuron correlations in the brain are rigid [Hennig+21]

• Inter-neuron correlations in DNNs are flexible

  • Change based on stimulus distribution

Shah, M.A. and Raj, B., Fixed Inter-Neuron Covariability Induces Adversarial Robustness, ICASSP (2024)

![Recurrent Connections in the Brain](https://image.slidesharecdn.com/mit-talk-0412-240429055509-f5cb45c3/75/Biologically-Inspired-Methods-for-Adversarially-Robust-Deep-Learning-49-2048.jpg)

• Recurrent circuits are widespread in the brain [Bullier+01, Briggs+20]

  • Lateral connections between neurons in the same region

  • Feedback connections from higher cognitive areas to lower areas

• May fill in missing information due to crowding or occlusion [Spoerer+17, Boutin+21]

• Not represented in DNNs