This document summarizes Stefan Dietze's presentation on big data in learning analytics. Some key points:

- Learning analytics has traditionally focused on formal learning environments, but there is growing interest in extending it to informal learning online.

- Examples of potential big data sources mentioned include activity streams, social networks, behavioral traces, and large web crawls.

- Challenges include efficiently analyzing large datasets to understand learning resources and detect learning activities without traditional assessments.

- Initial models show potential to predict learner competence from behavioral traces, with reported accuracies of 91% and 87% for the two "competent" performance types (CW, DW).

![1

10

100

1000

10000

100000

1000000

10000000

1 51 101 151 201

count(log)

PLD (ranked)

# entities # statements

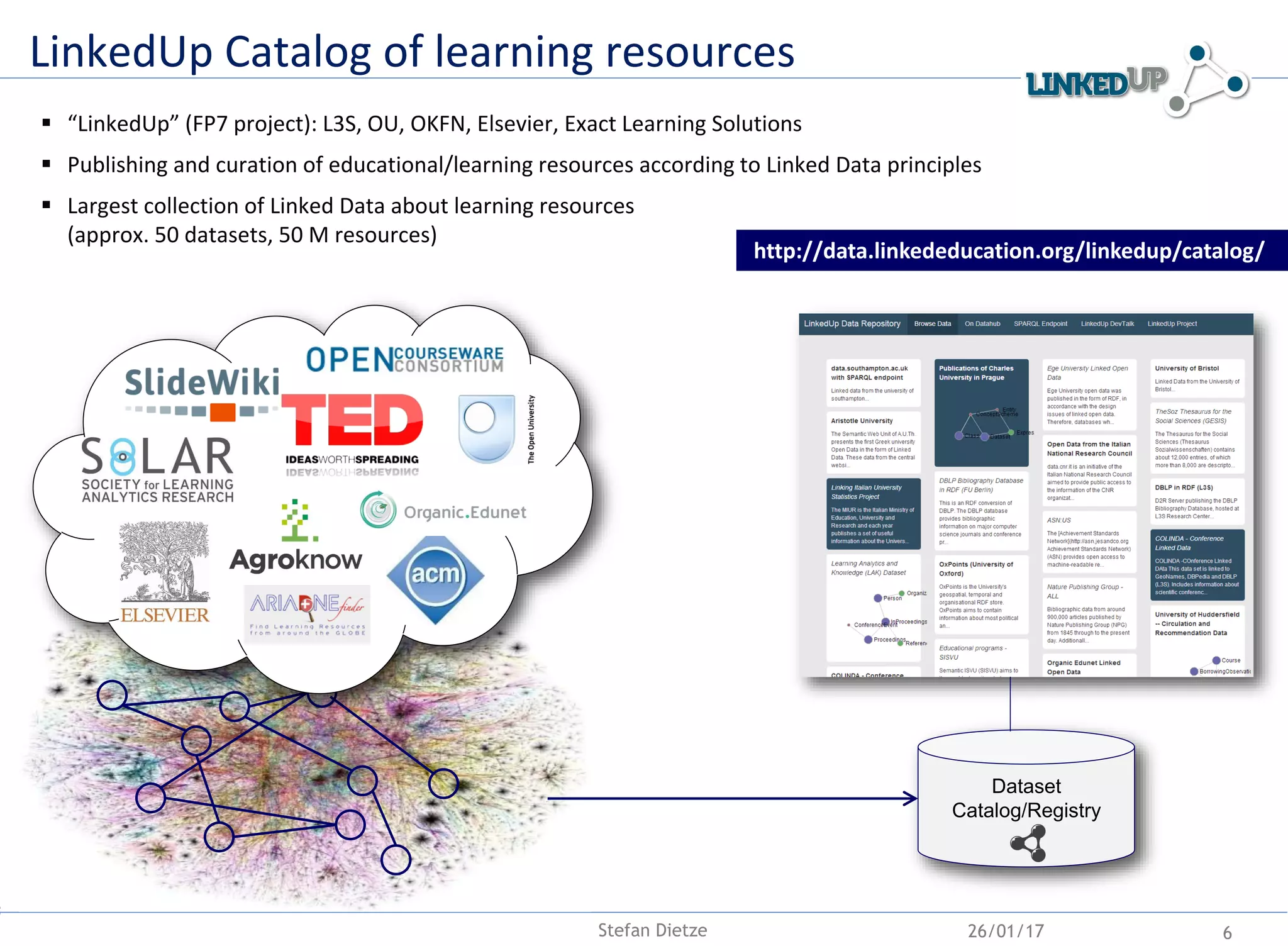

Learning Resources annotations on the Web?

“Learning Resources Metadata Intiative (LRMI)”:

schema.org vocabulary for annotation of learning

resources in Web documents (schema.org etc)

Approx. 5000 PLDs in “Common Crawl” (2 bn Web

documents)

LRMI-Adaptation on the Web (WDC) [LILE16]:

2015: 44.108.511 quads, 6.243.721 resources

2014: 30.599.024 quads, 4.182.541 resources

2013: 10.636873 quads, 1.461.093 resources

23/02/17 7

Power law distribution across providers

4805 Providers / PLDs

Taibi, D., Dietze, S., Towards embedded markup of learning resources

on the Web: a quantitative Analysis of LRMI Terms Usage, in

Companion Publication of the IW3C2 WWW 2016 Conference, IW3C2

2016, Montreal, Canada, April 11, 2016

Stefan Dietze, Besnik Fetahu, Ujwal Gadiraju

http://lrmi.itd.cnr.it/](https://image.slidesharecdn.com/bigdata-learninganalytics-learntec2017-170124114814/75/Big-Data-in-Learning-Analytics-Analytics-for-Everyday-Learning-7-2048.jpg)
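The per-provider counts behind a ranked distribution like the one on this slide can be sketched as follows. This is a minimal illustration, not the method used in the cited analysis: it assumes every input line is an N-Quad whose graph label is the URL of the source page, and it approximates the PLD as the last two host labels (the hypothetical helper `naive_pld`), which is wrong for suffixes such as `co.uk`; a real pipeline would use the Public Suffix List. The sample quads are made up.

```python
from collections import Counter
from urllib.parse import urlparse


def naive_pld(url: str) -> str:
    """Approximate the pay-level domain as the last two host labels.

    Assumption for illustration only; correct PLD extraction needs
    the Public Suffix List.
    """
    host = urlparse(url).hostname or ""
    return ".".join(host.split(".")[-2:])


def quads_per_pld(nquad_lines):
    """Count quads per PLD, reading the PLD from each quad's graph label."""
    counts = Counter()
    for line in nquad_lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # Drop the terminating " ." so the line ends with the graph IRI.
        body = line.rstrip(".").rstrip()
        if not body.endswith(">"):
            continue  # no IRI graph label; skip the line
        graph_iri = body[body.rfind("<") + 1:-1]
        counts[naive_pld(graph_iri)] += 1
    return counts


def ranked(counts):
    """Return (rank, pld, count) tuples, most frequent provider first."""
    return [(rank, pld, n)
            for rank, (pld, n) in enumerate(counts.most_common(), start=1)]


# Made-up sample data, not taken from the Web Data Commons corpus.
SAMPLE = [
    '<http://a.example.org/r1> <http://schema.org/name> "Algebra" <http://a.example.org/page1> .',
    '<http://a.example.org/r1> <http://schema.org/educationalUse> "lecture" <http://a.example.org/page1> .',
    '<http://b.example.com/x> <http://schema.org/name> "Intro" <http://www.example.com/p> .',
]

for rank, pld, n in ranked(quads_per_pld(SAMPLE)):
    print(rank, pld, n)
```

Plotting the resulting counts against rank on a logarithmic count axis gives the kind of curve the slide describes as a power law distribution.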

![23/02/17 13Stefan Dietze

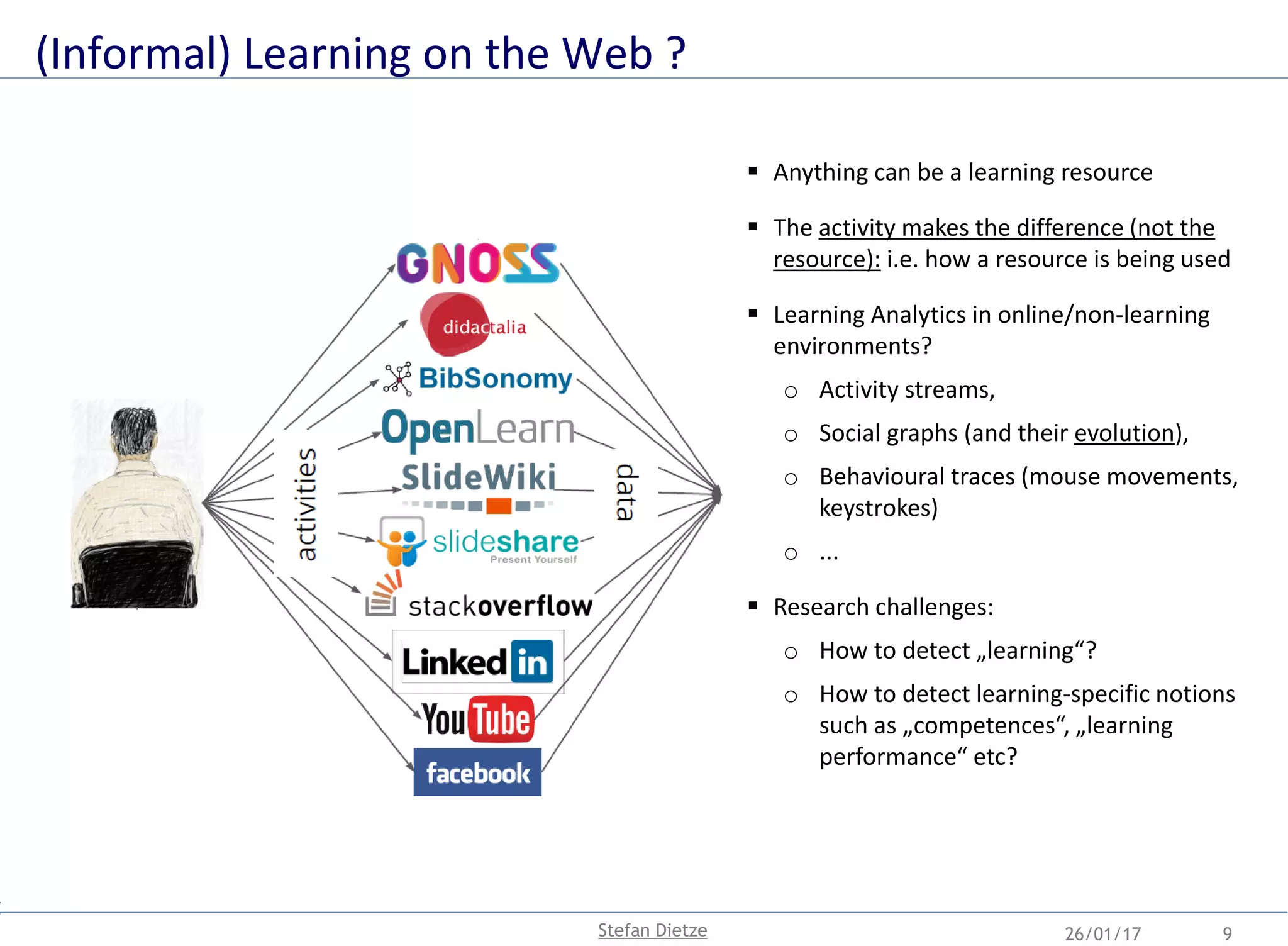

Predicting competence from behavioural traces?

Training data

Manual annotation of 1800 assessments

Performance types [CHI15]:

o “Competent Worker” ,

o “Diligent Worker”

o “Fast Deceiver”

o “Incompetent Worker”

o “Rule Breaker”

o “Smart Deceiver”

o “Sloppy Worker”

Prediction of performance types from

behavioral traces?

Predicting learner types from behavioral traces

“Random Forest Classifier” (per task)

10-fold cross validation

Prediction performance: Accuracy, F-Measure

Results

Longer assessments more signals

Simpler assessments more conclusive signals

“Competent Workers” (CW, DW): accuracy of 91% respectively 87%

Most significant features: “TotalTime”, “TippingPoint”,

“MouseMovementFrequency”, “WindowFocusFrequency”](https://image.slidesharecdn.com/bigdata-learninganalytics-learntec2017-170124114814/75/Big-Data-in-Learning-Analytics-Analytics-for-Everyday-Learning-13-2048.jpg)
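The evaluation setup named on this slide (a random forest scored with 10-fold cross-validation on accuracy and F-measure) might look roughly like the sketch below, assuming scikit-learn and NumPy are available. Everything except the four feature names is an assumption: the data is synthetic, the binary label and the rule that generates it are invented, and the hyperparameters are library defaults rather than anything reported in the talk.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import StratifiedKFold, cross_validate

# Feature names as listed on the slide; the values below are synthetic.
FEATURES = ["TotalTime", "TippingPoint",
            "MouseMovementFrequency", "WindowFocusFrequency"]


def make_synthetic_traces(n=300, seed=0):
    """Generate placeholder behavioral traces (NOT the study's data)."""
    rng = np.random.default_rng(seed)
    X = rng.normal(size=(n, len(FEATURES)))
    # Hypothetical rule: the label depends on the first two features plus noise.
    signal = X[:, 0] + 0.5 * X[:, 1] + rng.normal(scale=0.3, size=n)
    y = (signal > 0).astype(int)  # 1 = "competent", 0 = other (assumed labels)
    return X, y


def evaluate(X, y, seed=0):
    """Mean accuracy and F-measure over stratified 10-fold cross-validation."""
    clf = RandomForestClassifier(n_estimators=100, random_state=seed)
    cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=seed)
    scores = cross_validate(clf, X, y, cv=cv, scoring=["accuracy", "f1"])
    return {
        "accuracy": float(scores["test_accuracy"].mean()),
        "f_measure": float(scores["test_f1"].mean()),
    }


def feature_ranking(X, y, seed=0):
    """Feature names ordered by impurity-based importance, highest first."""
    clf = RandomForestClassifier(n_estimators=100, random_state=seed).fit(X, y)
    order = np.argsort(clf.feature_importances_)[::-1]
    return [FEATURES[i] for i in order]


X, y = make_synthetic_traces()
results = evaluate(X, y)
print(results)
print(feature_ranking(X, y))
```

Stratified folds keep the class balance similar across the ten splits, which matters when some performance types are rare. The slide's "per task" note suggests one such model per assessment task; the multi-class case with all seven performance types would need a macro-averaged F-measure (for example `scoring="f1_macro"`) instead of the binary `f1` used here.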