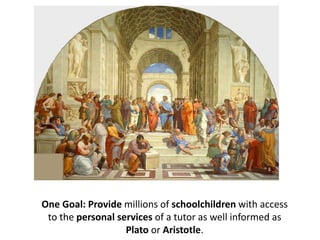

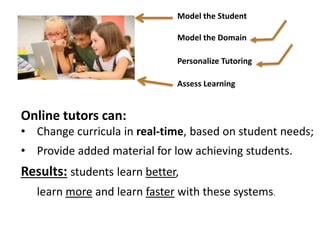

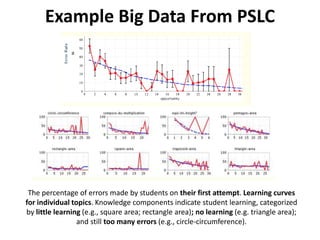

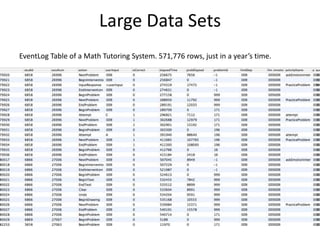

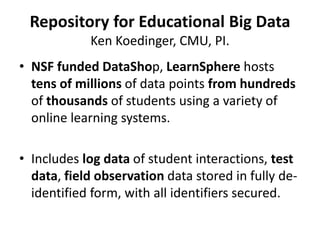

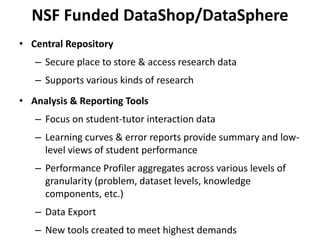

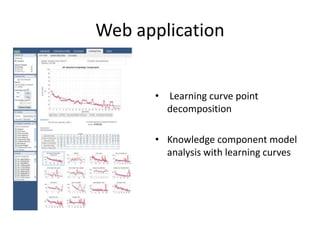

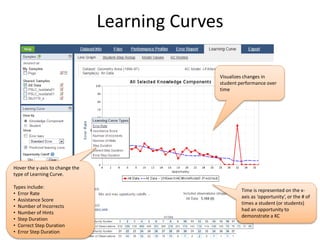

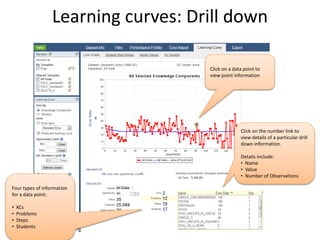

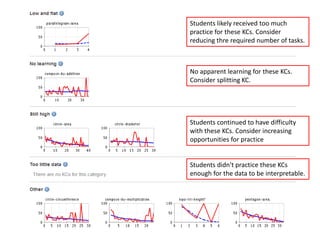

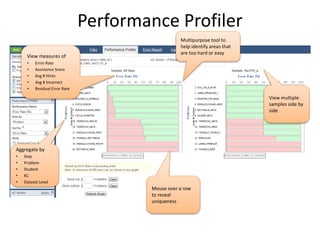

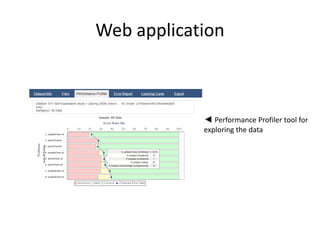

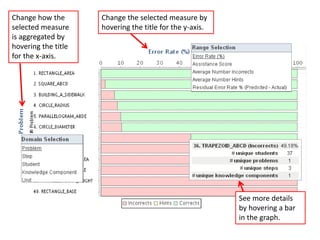

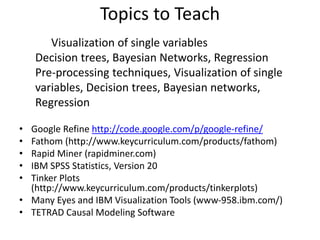

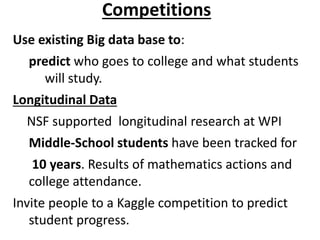

The document discusses challenges and advances in data-driven education, where the goal is to personalize tutoring for millions of schoolchildren using extensive educational data. It highlights the need for predictive analytics that assess student behavior and performance from large datasets, supported by analytical tools such as learning curves and performance profilers. It also outlines workshops, competitions, and training intended to help educators and researchers use big data in educational settings.