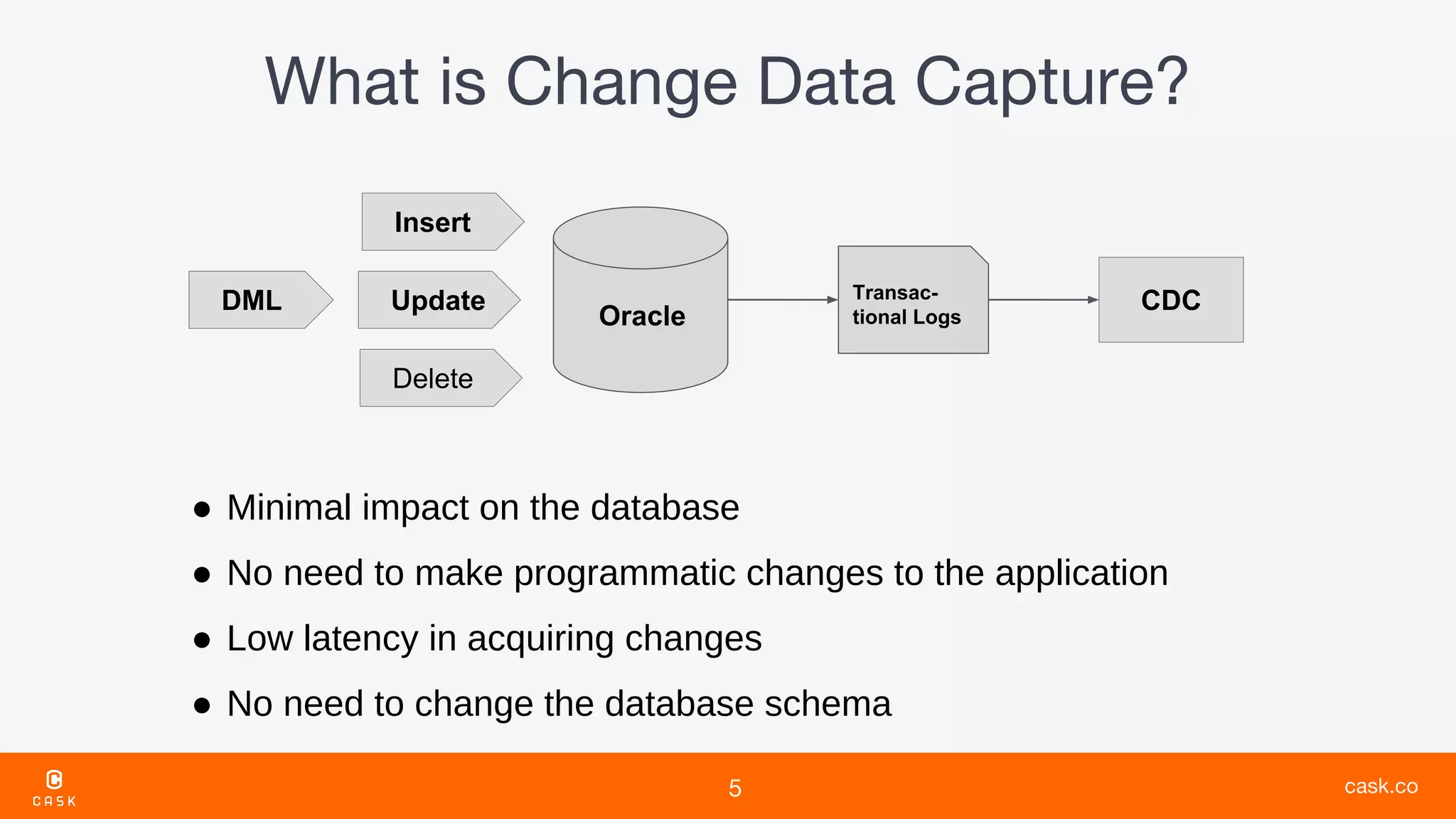

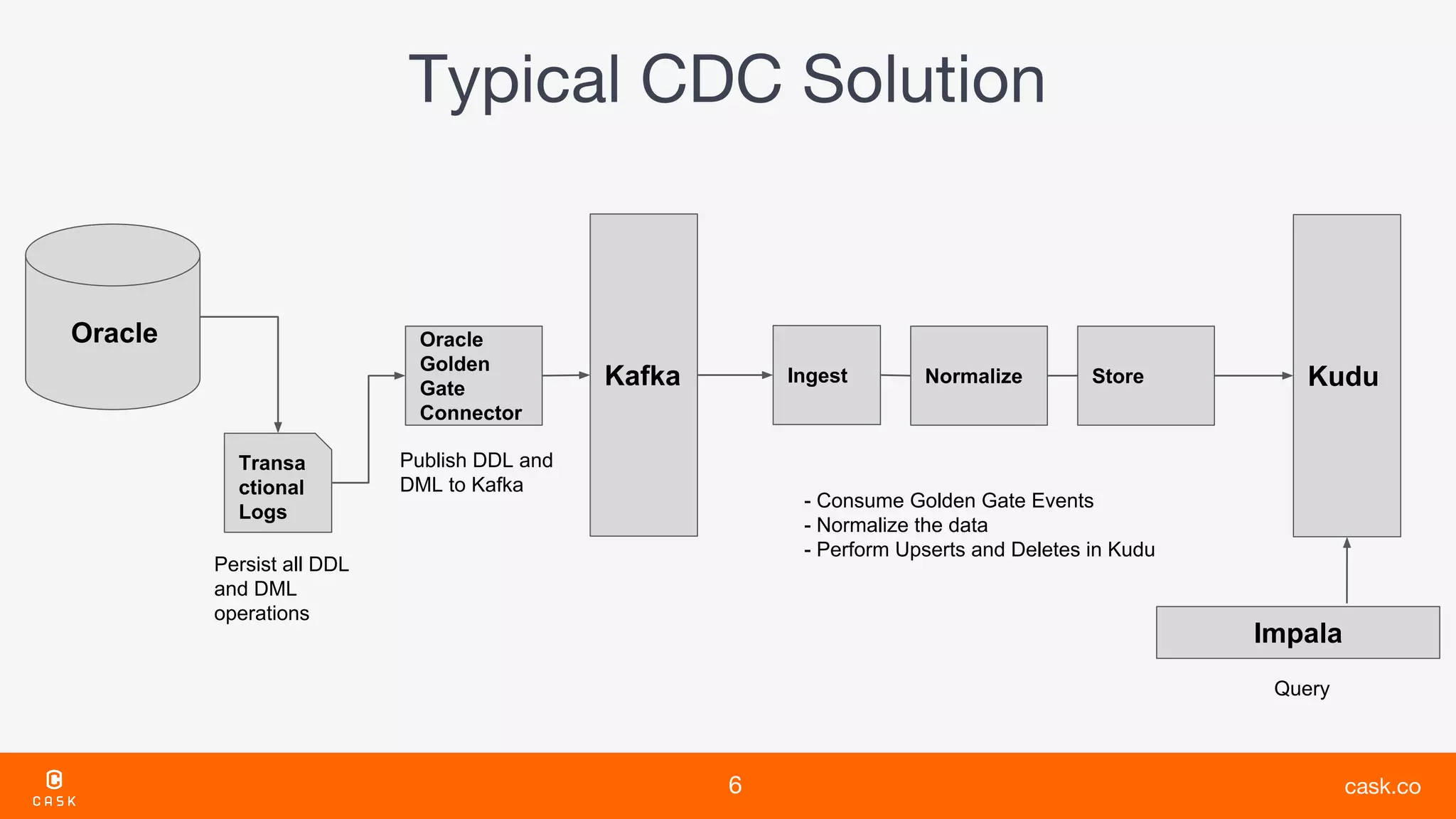

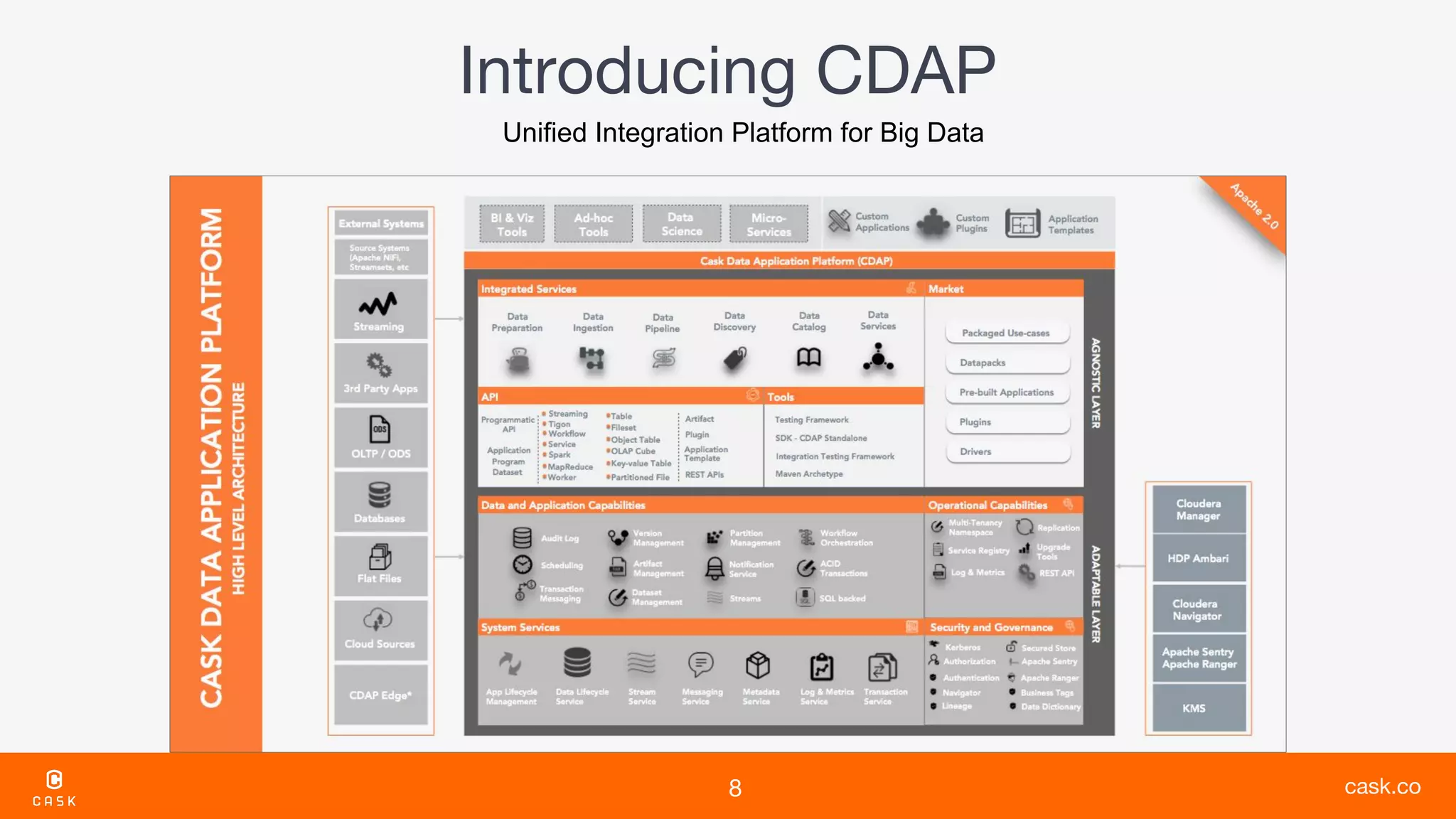

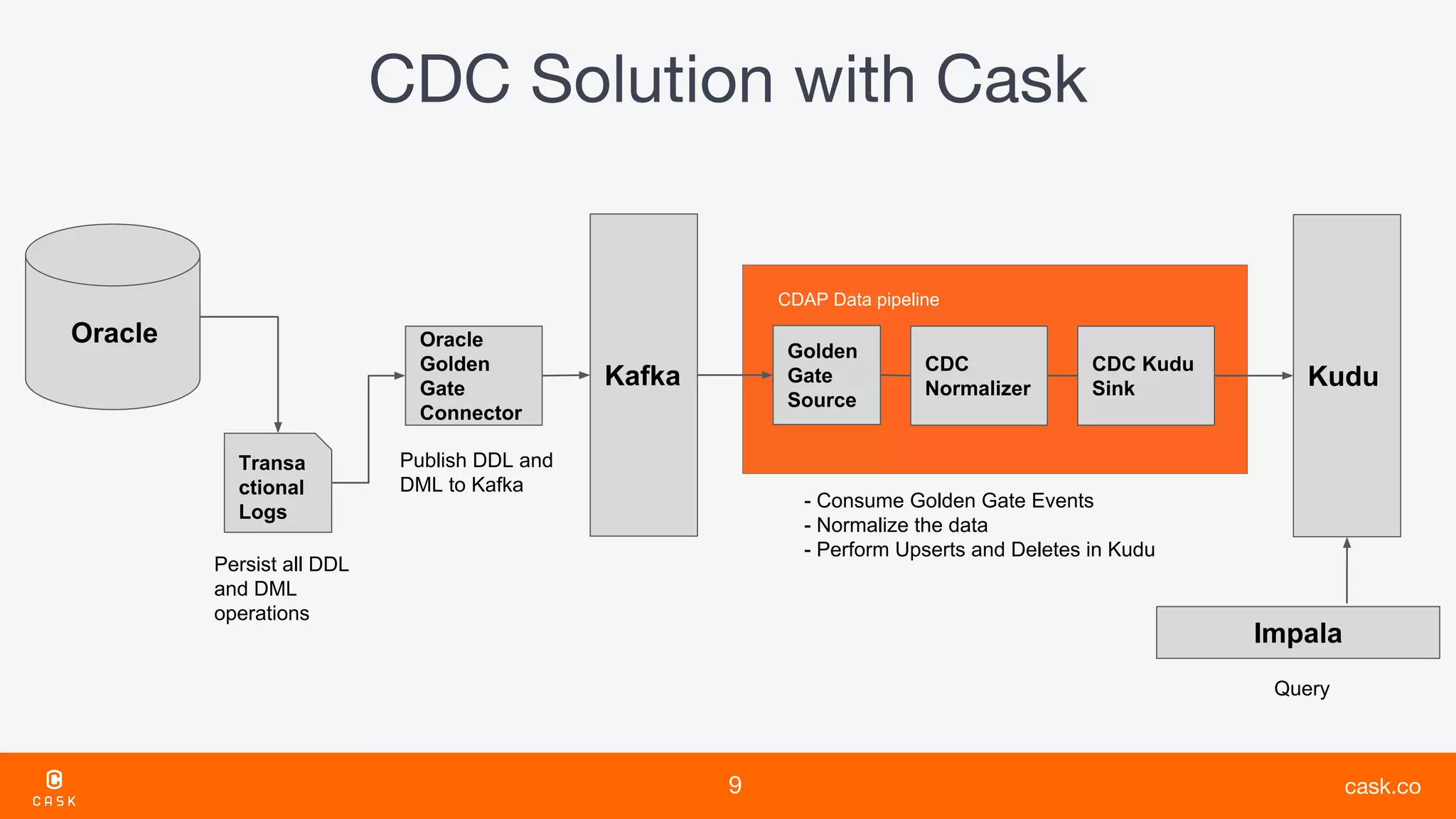

The document discusses the challenges of traditional data warehouses: high costs, difficulty handling large and unstructured data, and low concurrency. It presents a solution based on Change Data Capture (CDC) from Oracle's transactional logs, integrated with tools such as Kafka and Kudu for efficient data ingestion and processing. It also highlights the benefits of self-service data pipelines and an integrated platform for managing big data, with operational metrics and security features.
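
To make the CDC idea concrete, here is a minimal sketch of how a stream of change events might be applied to a keyed target table. It is an illustration only, not the pipeline the document describes: the `ChangeEvent` type, its field names, and the `apply_changes` function are hypothetical, and a plain Python dictionary stands in for both the message stream (Kafka in the document) and the target store (Kudu in the document). The sketch assumes each event carries a monotonically increasing sequence number, similar in spirit to a log position, so that changes can be replayed in commit order.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional


@dataclass(frozen=True)
class ChangeEvent:
    """One captured row change (hypothetical shape, for illustration)."""
    seq: int                    # assumed ordering field, e.g. a log position
    op: str                     # "INSERT", "UPDATE", or "DELETE"
    key: int                    # primary key of the affected row
    row: Optional[dict] = None  # changed column values; None for deletes


def apply_changes(table: Dict[int, dict],
                  events: Iterable[ChangeEvent]) -> Dict[int, dict]:
    """Replay change events against an in-memory table, in sequence order."""
    for ev in sorted(events, key=lambda e: e.seq):
        if ev.op in ("INSERT", "UPDATE"):
            # Upsert: merge changed columns into the existing row, if any.
            table[ev.key] = {**table.get(ev.key, {}), **(ev.row or {})}
        elif ev.op == "DELETE":
            # Deleting a missing key is a no-op, so replays stay idempotent.
            table.pop(ev.key, None)
        else:
            raise ValueError(f"unknown operation: {ev.op!r}")
    return table


if __name__ == "__main__":
    events = [
        ChangeEvent(seq=1, op="INSERT", key=1, row={"name": "a", "qty": 1}),
        ChangeEvent(seq=3, op="DELETE", key=2),
        ChangeEvent(seq=2, op="INSERT", key=2, row={"name": "b", "qty": 5}),
        ChangeEvent(seq=4, op="UPDATE", key=1, row={"qty": 7}),
    ]
    print(apply_changes({}, events))
```

Treating inserts and updates as a single upsert keeps the replay tolerant of duplicate deliveries, which is a common design choice when the transport only guarantees at-least-once delivery.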