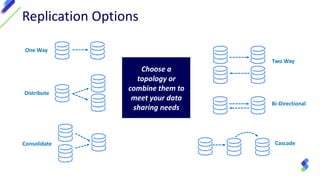

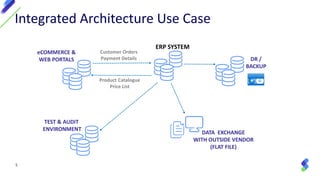

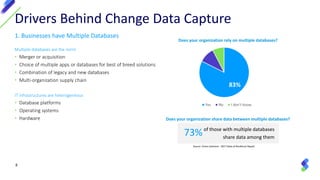

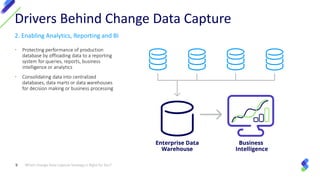

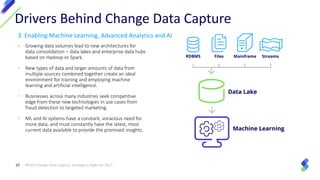

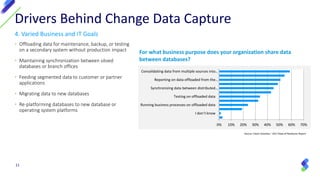

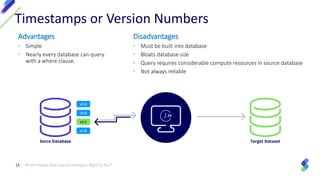

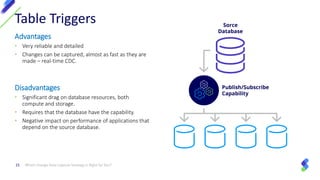

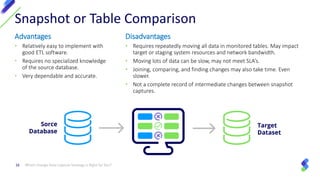

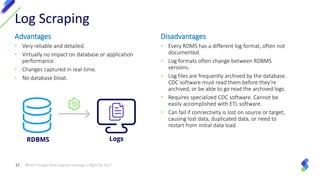

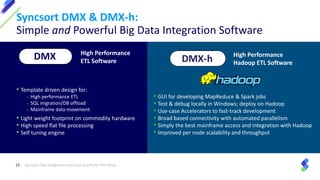

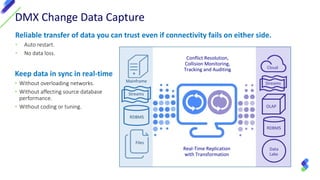

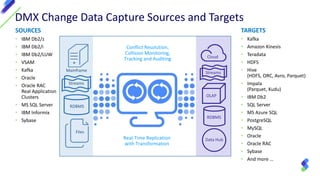

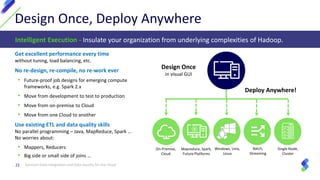

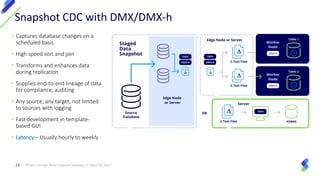

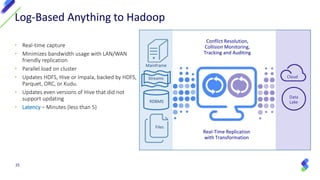

The document discusses change data capture (CDC) strategies that organizations can use to keep data synchronized across multiple databases in a timely manner. CDC is vital for businesses that handle large volumes of transactional data, and it enables analytics, reporting, and machine learning applications. The document details several CDC methods, including replication options, log scraping, and table triggers, along with the advantages and disadvantages of each, to help organizations choose the best strategy for their data integration needs.
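
To make the table-trigger method concrete, here is a minimal sketch in Python using the standard-library `sqlite3` module. It is an illustration under assumptions and is not taken from the document: the `orders` table, the `orders_changes` change table, the trigger names, and the helpers `build_demo_db` and `read_changes` are all hypothetical. Each trigger writes one row to the change table whenever the source table is modified, and a downstream consumer polls for rows past the last change it has processed.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with a source table, a change table,
    and triggers that record every insert, update, and delete.
    All table and trigger names here are hypothetical."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE orders (
            id     INTEGER PRIMARY KEY,
            status TEXT NOT NULL
        );

        -- Change table: one row per captured modification.
        CREATE TABLE orders_changes (
            change_id INTEGER PRIMARY KEY AUTOINCREMENT,
            op        TEXT NOT NULL,      -- 'I', 'U', or 'D'
            order_id  INTEGER NOT NULL,
            status    TEXT                -- NULL for deletes
        );

        CREATE TRIGGER orders_ins AFTER INSERT ON orders BEGIN
            INSERT INTO orders_changes (op, order_id, status)
            VALUES ('I', NEW.id, NEW.status);
        END;

        CREATE TRIGGER orders_upd AFTER UPDATE ON orders BEGIN
            INSERT INTO orders_changes (op, order_id, status)
            VALUES ('U', NEW.id, NEW.status);
        END;

        CREATE TRIGGER orders_del AFTER DELETE ON orders BEGIN
            INSERT INTO orders_changes (op, order_id, status)
            VALUES ('D', OLD.id, NULL);
        END;
        """
    )
    return conn


def read_changes(conn: sqlite3.Connection, after_change_id: int = 0) -> list:
    """Return captured changes newer than after_change_id, oldest first."""
    cursor = conn.execute(
        "SELECT change_id, op, order_id, status "
        "FROM orders_changes WHERE change_id > ? ORDER BY change_id",
        (after_change_id,),
    )
    return cursor.fetchall()


if __name__ == "__main__":
    conn = build_demo_db()
    conn.execute("INSERT INTO orders (id, status) VALUES (1, 'new')")
    conn.execute("UPDATE orders SET status = 'shipped' WHERE id = 1")
    conn.execute("DELETE FROM orders WHERE id = 1")
    for change in read_changes(conn):
        print(change)
```

A consumer would remember the highest `change_id` it has applied and pass it as `after_change_id` on the next poll. The sketch also shows the usual cost of this method: every write to the source table triggers an additional write to the change table within the same transaction.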