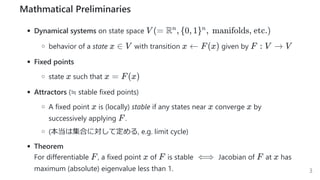

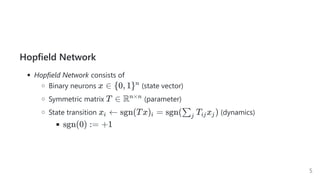

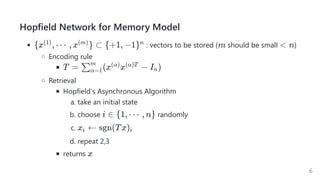

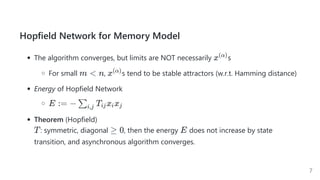

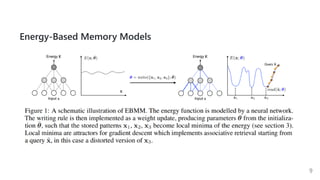

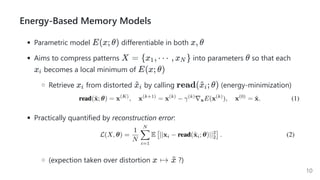

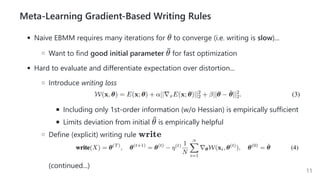

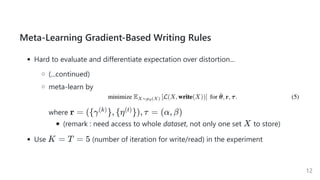

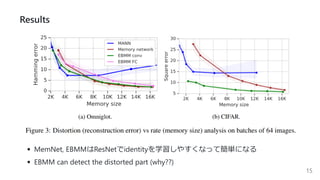

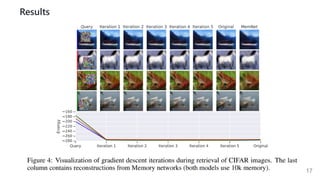

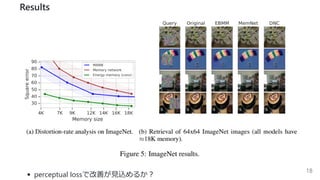

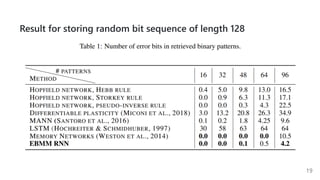

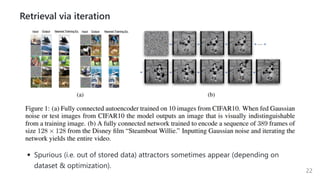

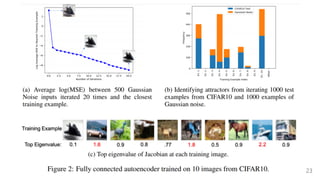

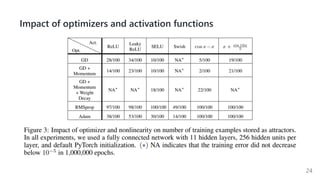

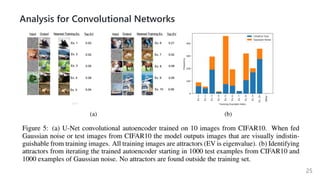

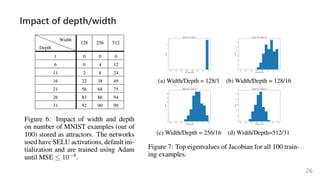

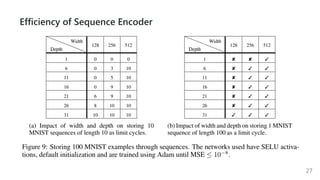

This document summarizes several papers on associative memory models. It covers Hopfield networks, energy-based deep learning models, and the way overparameterized neural networks can implement associative memory through their dynamics. The key points are as follows. Hopfield networks use binary neurons and symmetric weights, and retrieve stored patterns by running the network dynamics until the state settles. Energy-based models aim to store patterns as local minima of an energy function and retrieve them by minimizing that energy. Overparameterized autoencoders can exhibit associative memory properties without an explicit energy function, through the dynamics that arise when the trained network is applied iteratively.
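
To make the Hopfield retrieval mechanism concrete, here is a minimal sketch in plain Python. It is not taken from any of the summarized papers: the Hebbian outer-product rule, the asynchronous sign update, the tie-breaking choice (a zero field maps to +1), and every name (`train_hebbian`, `energy`, `recall`, `stored`, `probe`) are illustrative assumptions, and the two stored patterns are toy data chosen to be orthogonal.

```python
def train_hebbian(patterns):
    """Build a symmetric, zero-diagonal weight matrix from +1/-1 patterns.

    Assumption: the classical Hebbian outer-product rule, scaled by 1/n.
    """
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j] / n
    return weights


def energy(weights, state):
    """Hopfield energy E(s) = -1/2 * sum_ij w_ij * s_i * s_j (no bias terms)."""
    n = len(state)
    return -0.5 * sum(
        weights[i][j] * state[i] * state[j] for i in range(n) for j in range(n)
    )


def recall(weights, state, max_sweeps=10):
    """Update neurons one at a time until a full sweep changes nothing."""
    state = list(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(state)):
            field = sum(w * s for w, s in zip(weights[i], state))
            new_value = 1 if field >= 0 else -1  # assumed tie-break: 0 -> +1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


# Two orthogonal toy patterns over 8 binary (+1/-1) neurons.
stored = [
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
]
weights = train_hebbian(stored)

# Corrupt the first pattern by flipping one neuron, then let the dynamics run.
probe = list(stored[0])
probe[0] = -probe[0]
recovered = recall(weights, probe)

print(recovered == stored[0])
print(energy(weights, probe), energy(weights, recovered))
```

In this toy setting the corrupted probe settles back onto the first stored pattern, and the energy of the recovered state is lower than that of the probe, which matches the picture of stored patterns sitting at local minima. With more patterns, or correlated ones, retrieval under this simple rule can fail, so the sketch should be read as an illustration rather than a capacity claim.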