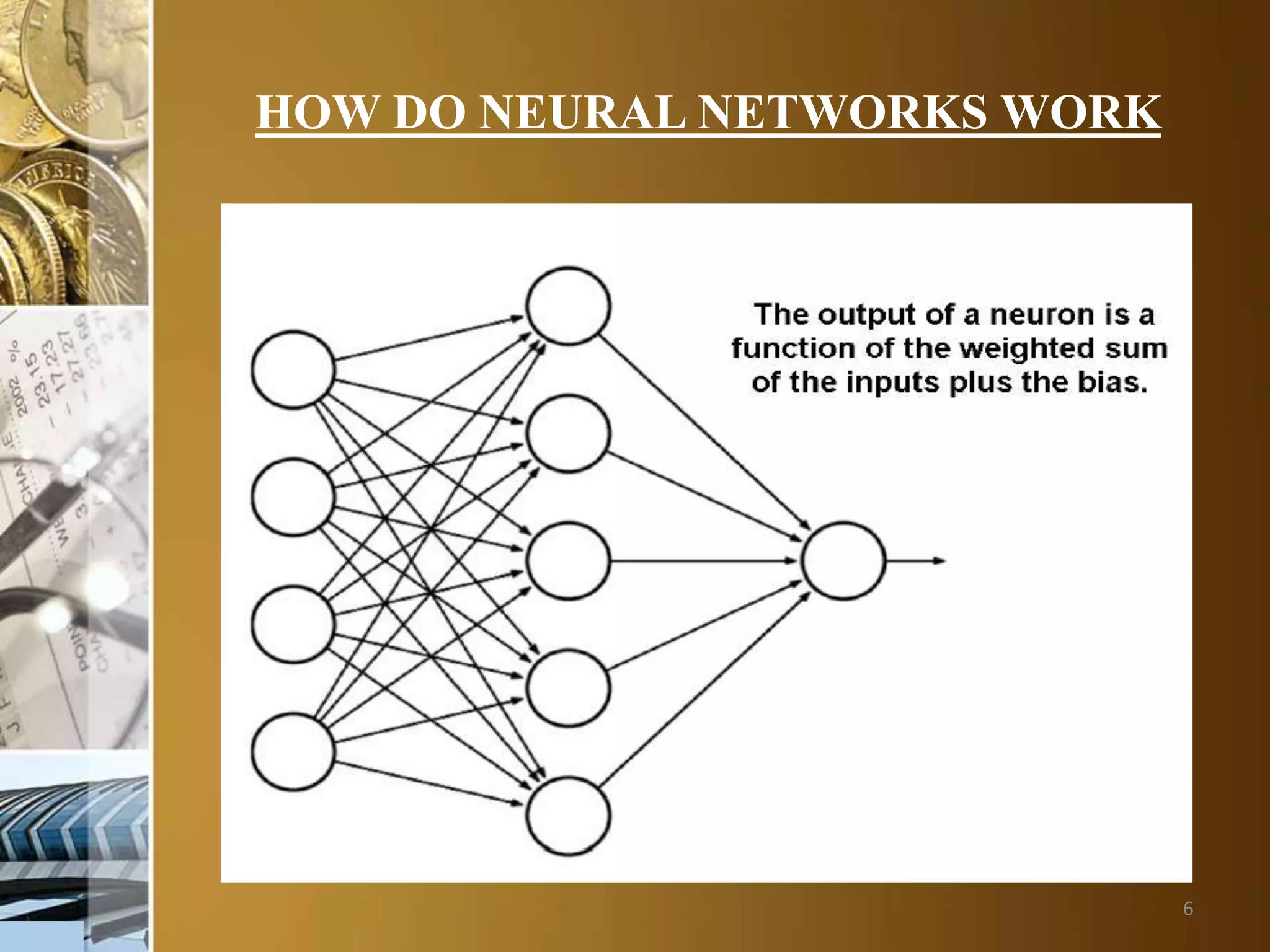

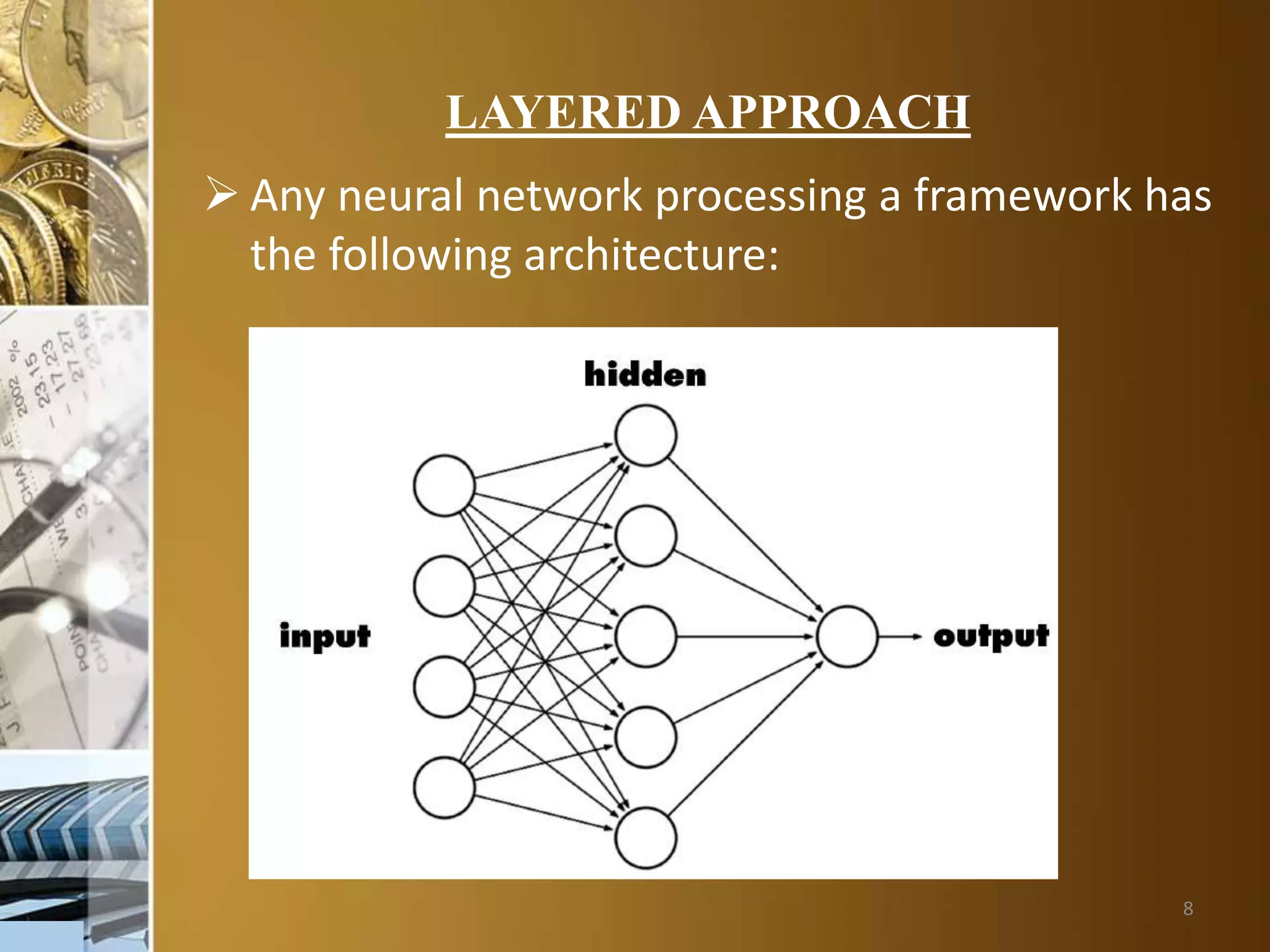

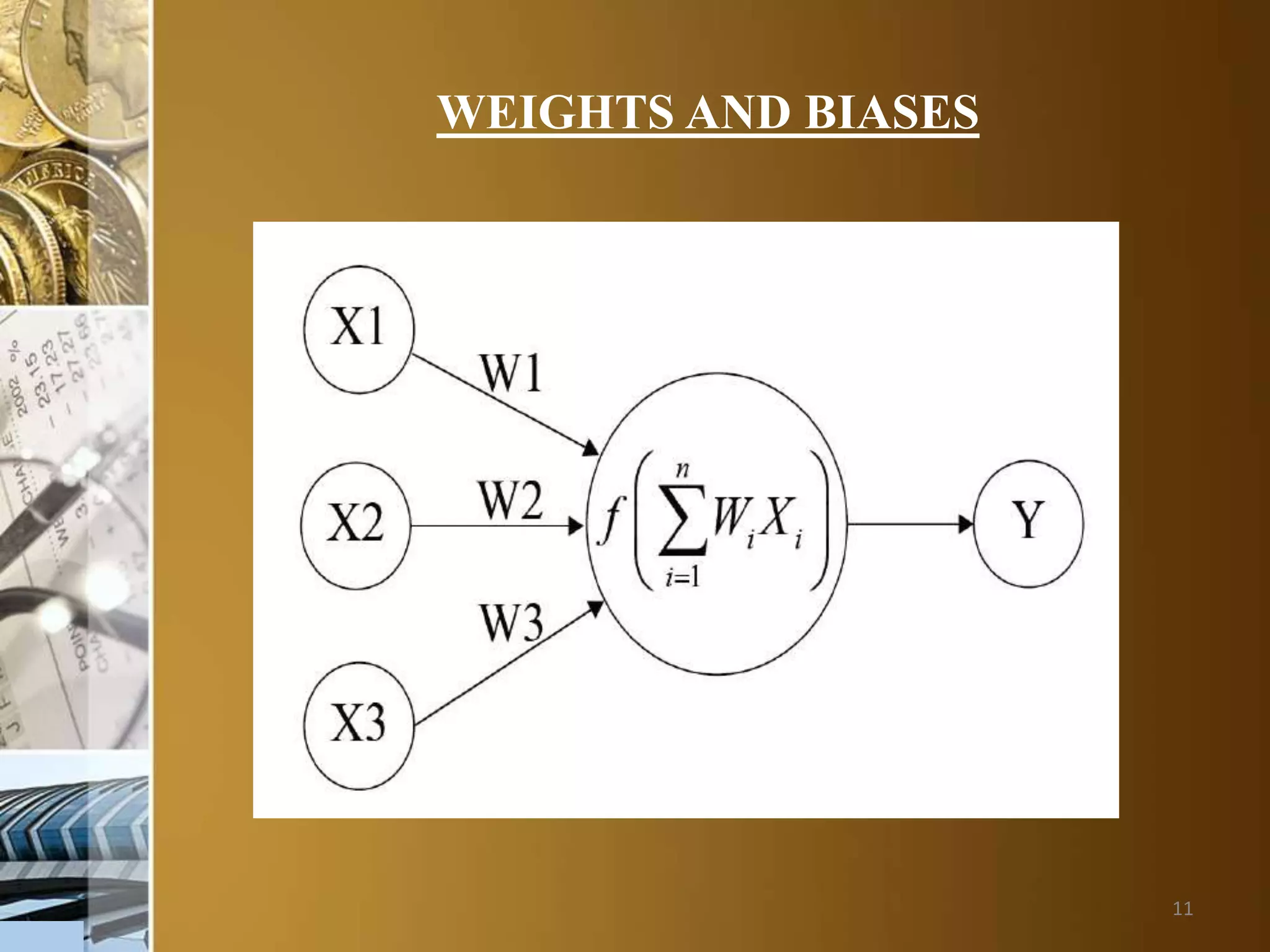

The document covers the fundamentals of artificial neural networks (ANNs) and how they work in the context of artificial intelligence (AI) and machine learning. It explains the roles of the input, hidden, and output layers, as well as the importance of weights, biases, and activation functions in how a network processes its inputs. It also distinguishes between supervised and unsupervised learning and outlines how forward propagation and backpropagation are used to train neural networks.
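The forward and backward passes summarized above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the method presented in the document. It assumes a hypothetical network with two inputs, two sigmoid hidden units, and one sigmoid output, trained on a single labelled example (supervised learning) with squared-error loss and plain gradient descent. The function names (`forward`, `train_step`), the initial weights and biases, and the learning rate are all illustrative choices.

```python
import math

# Illustrative sketch: network size, initial values, and learning rate are hypothetical.


def sigmoid(z: float) -> float:
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, W1, b1, W2, b2):
    """Forward propagation through one hidden layer and a single output unit.

    Each neuron computes a weighted sum of its inputs, adds its bias,
    and passes the result through the activation function.
    """
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    y = sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)
    return h, y


def train_step(x, target, W1, b1, W2, b2, lr=0.5):
    """One supervised training step: forward pass, backpropagation, gradient descent.

    Uses squared-error loss L = 0.5 * (y - target)**2. Returns the loss measured
    before the update plus new parameter lists (the inputs are not mutated).
    """
    h, y = forward(x, W1, b1, W2, b2)
    loss = 0.5 * (y - target) ** 2

    # Backpropagation: error signal at the output unit's pre-activation,
    # using the sigmoid derivative sigmoid'(z) = y * (1 - y).
    delta_out = (y - target) * y * (1.0 - y)
    # Propagate the error back to each hidden unit through the (old) output weights.
    delta_h = [delta_out * W2[j] * h[j] * (1.0 - h[j]) for j in range(len(h))]

    # Gradient descent: move each weight and bias against its gradient.
    new_W2 = [W2[j] - lr * delta_out * h[j] for j in range(len(h))]
    new_b2 = b2 - lr * delta_out
    new_W1 = [[W1[j][i] - lr * delta_h[j] * x[i] for i in range(len(x))]
              for j in range(len(W1))]
    new_b1 = [b1[j] - lr * delta_h[j] for j in range(len(b1))]
    return loss, new_W1, new_b1, new_W2, new_b2


if __name__ == "__main__":
    # Hypothetical labelled example: an input paired with a known target.
    x, target = [0.5, -1.0], 1.0
    # Hypothetical initial parameters: 2 inputs -> 2 hidden units -> 1 output.
    W1, b1 = [[0.1, -0.2], [0.3, 0.4]], [0.0, 0.1]
    W2, b2 = [0.2, -0.5], 0.05
    for step in range(201):
        loss, W1, b1, W2, b2 = train_step(x, target, W1, b1, W2, b2)
        if step % 50 == 0:
            print(f"step {step:3d}  loss {loss:.5f}")
```

Each call to `train_step` performs one forward propagation followed by one backpropagation pass, so the printed loss shrinks as the output moves toward the target. In unsupervised learning there would be no `target` label, and the loss would have to be defined from the inputs alone.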