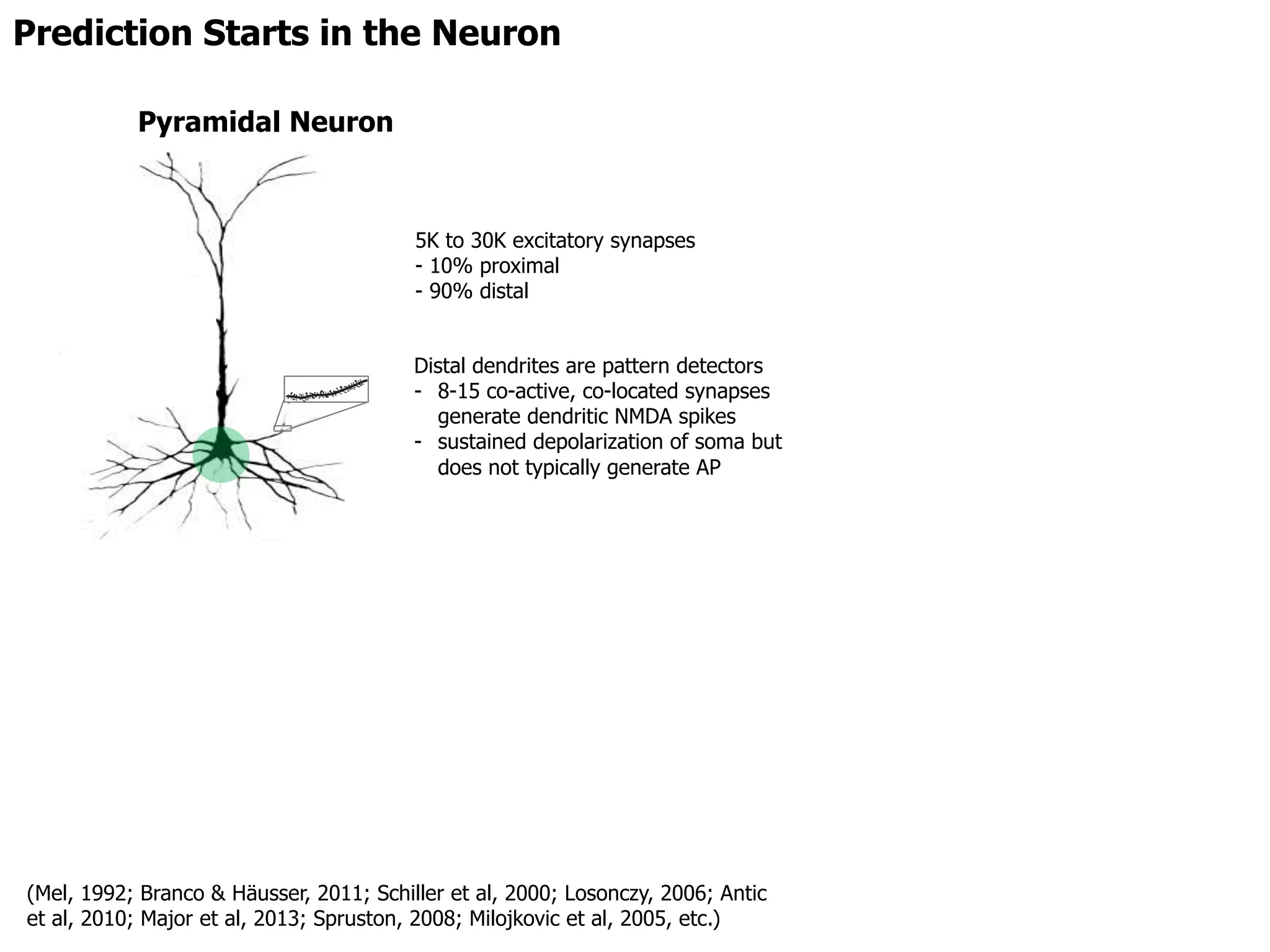

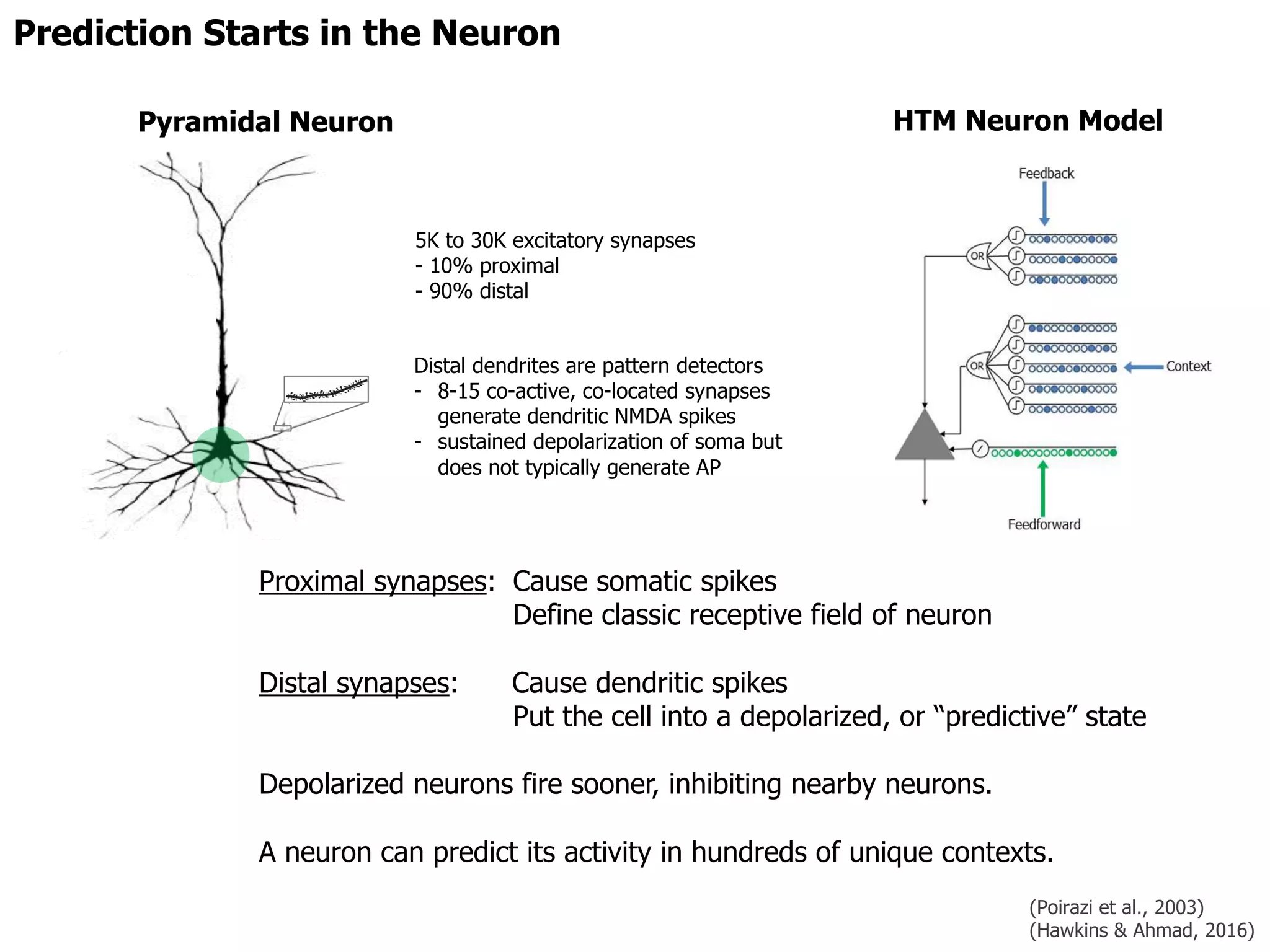

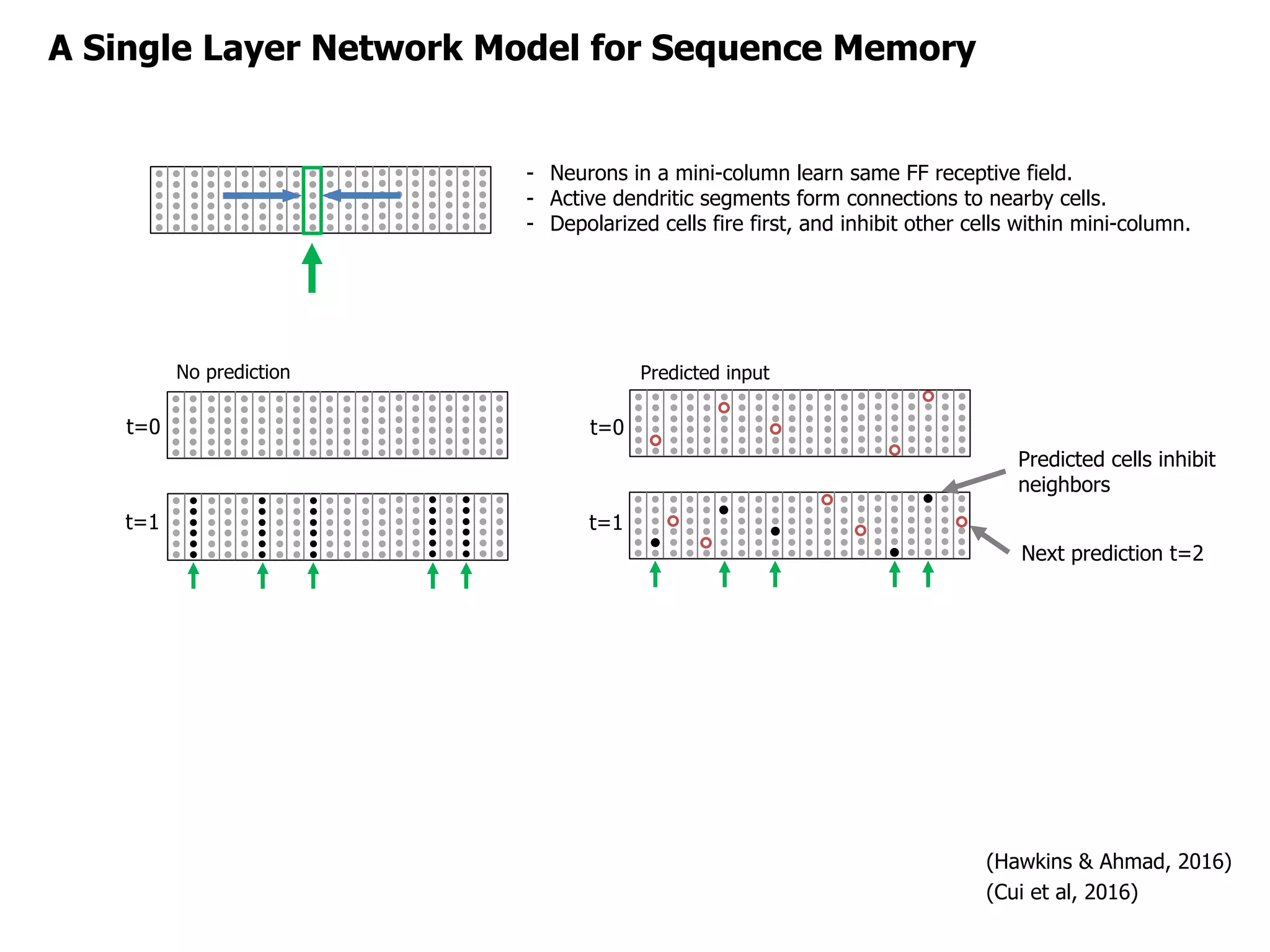

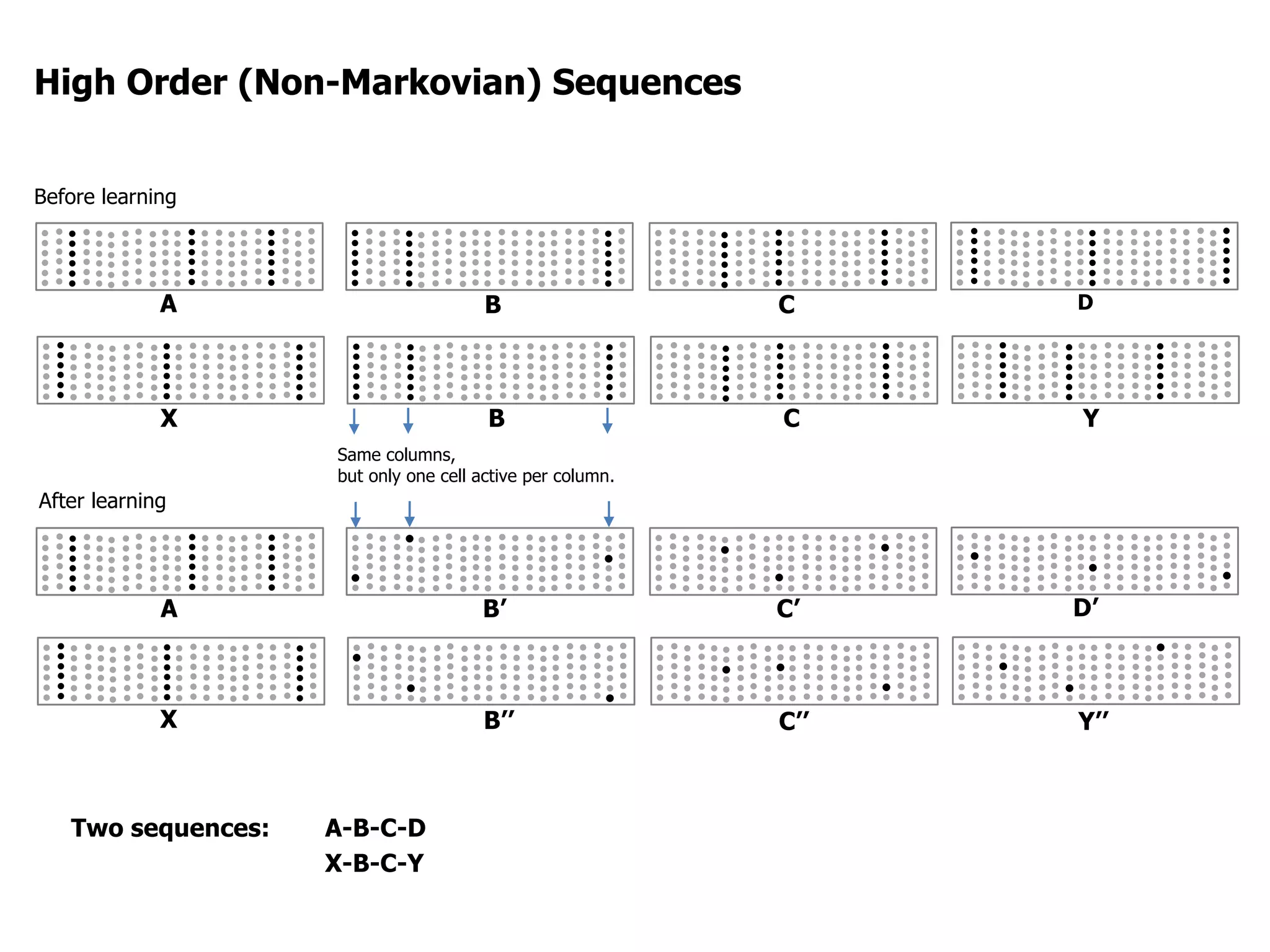

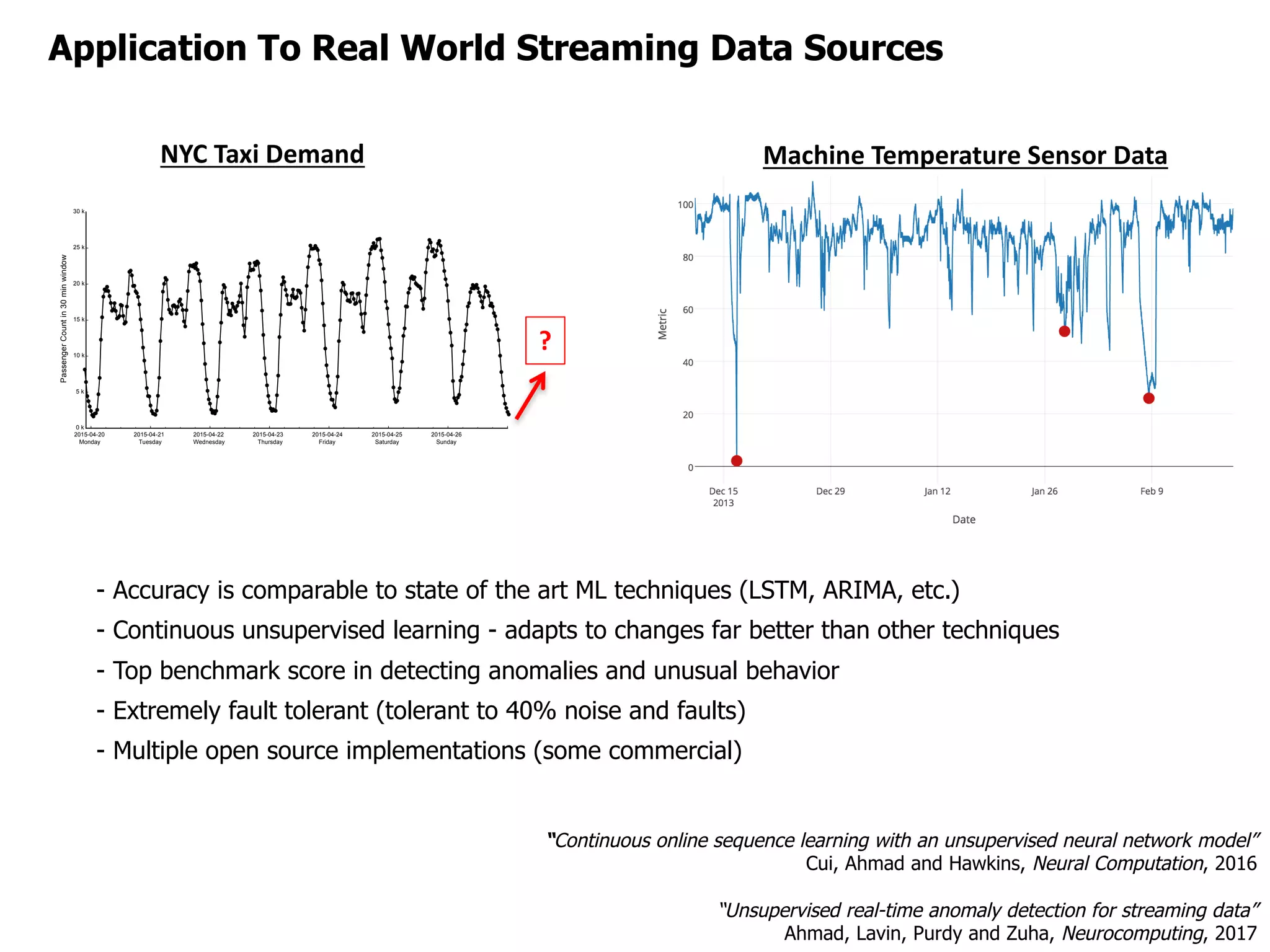

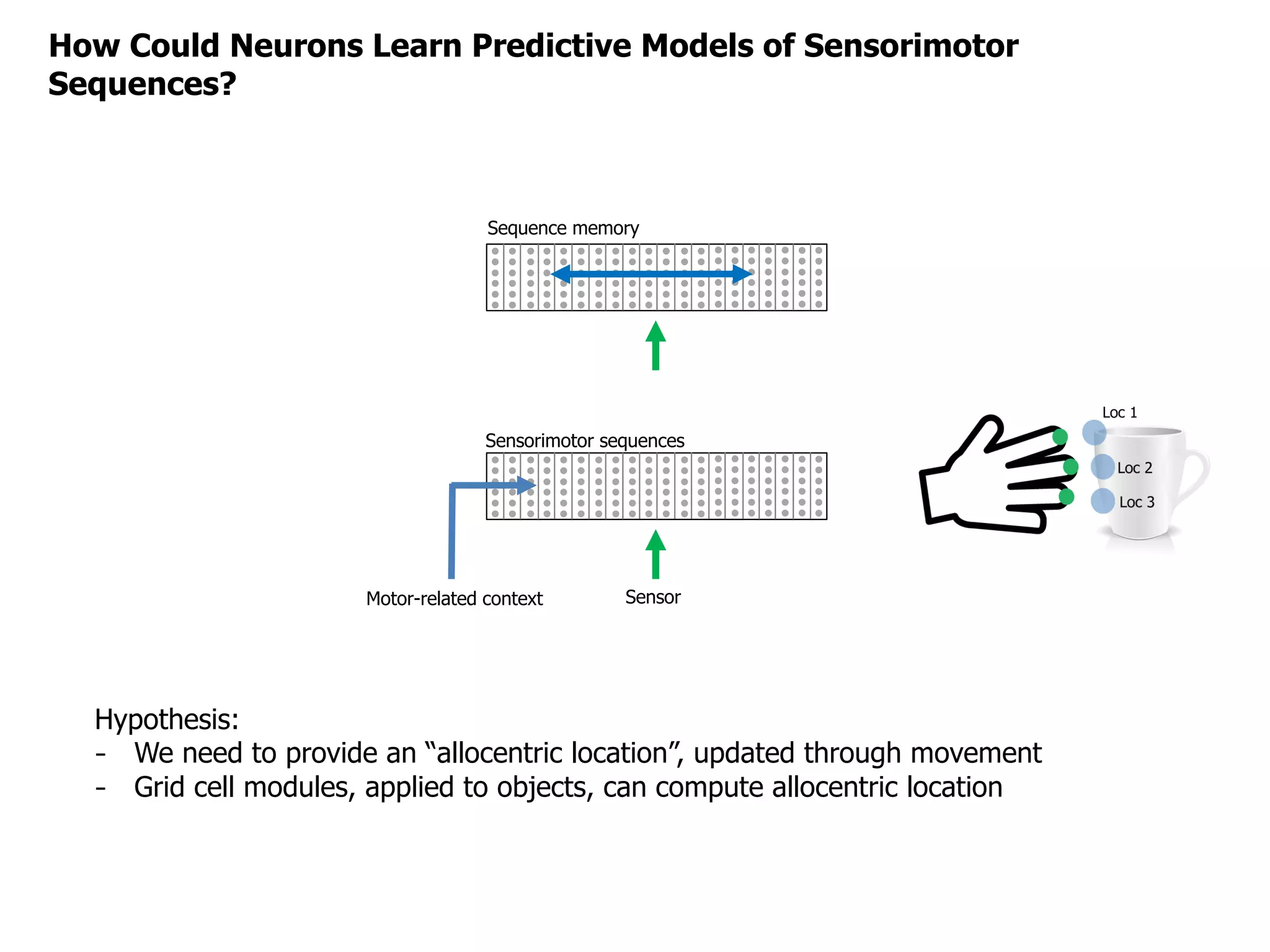

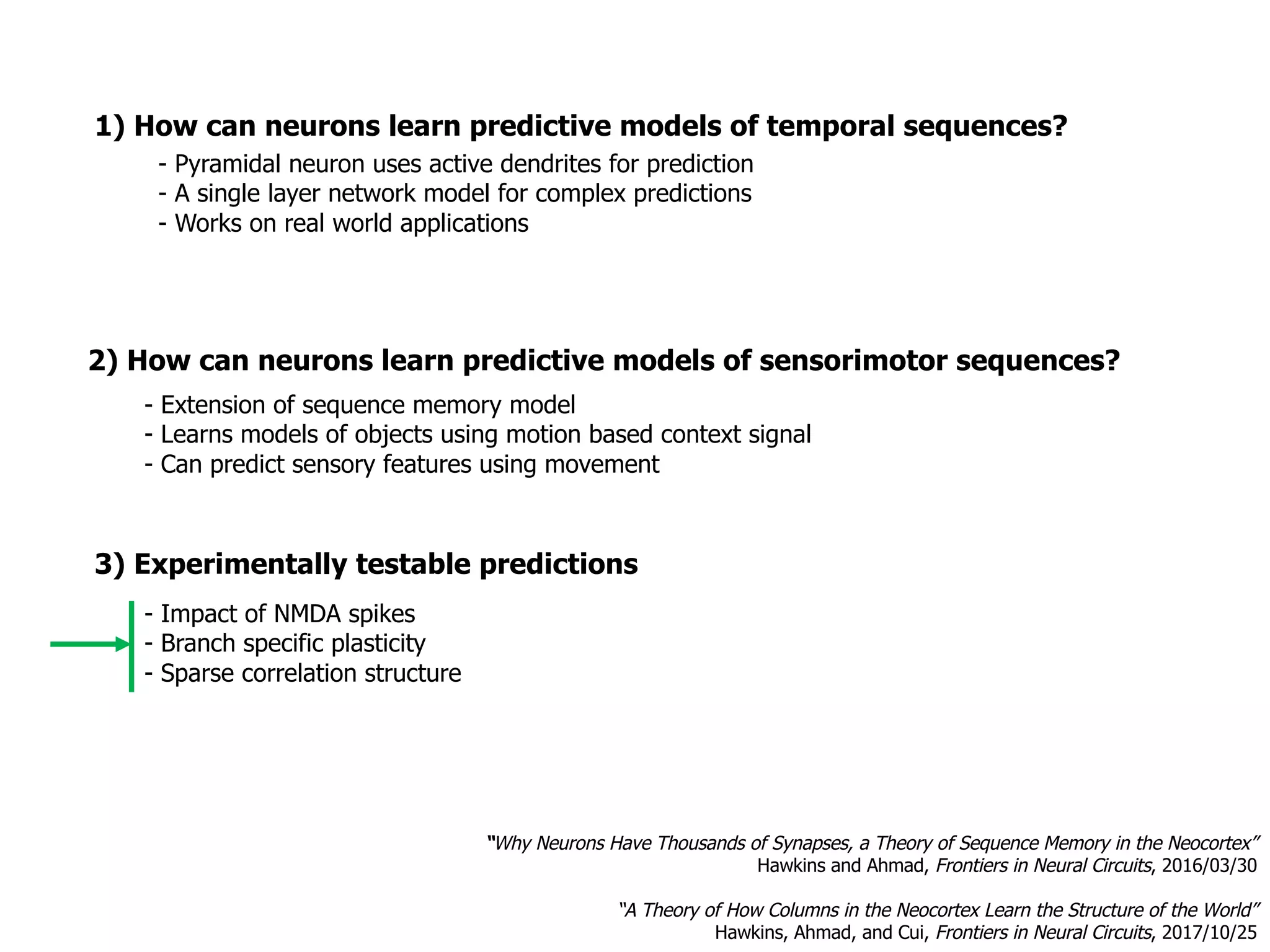

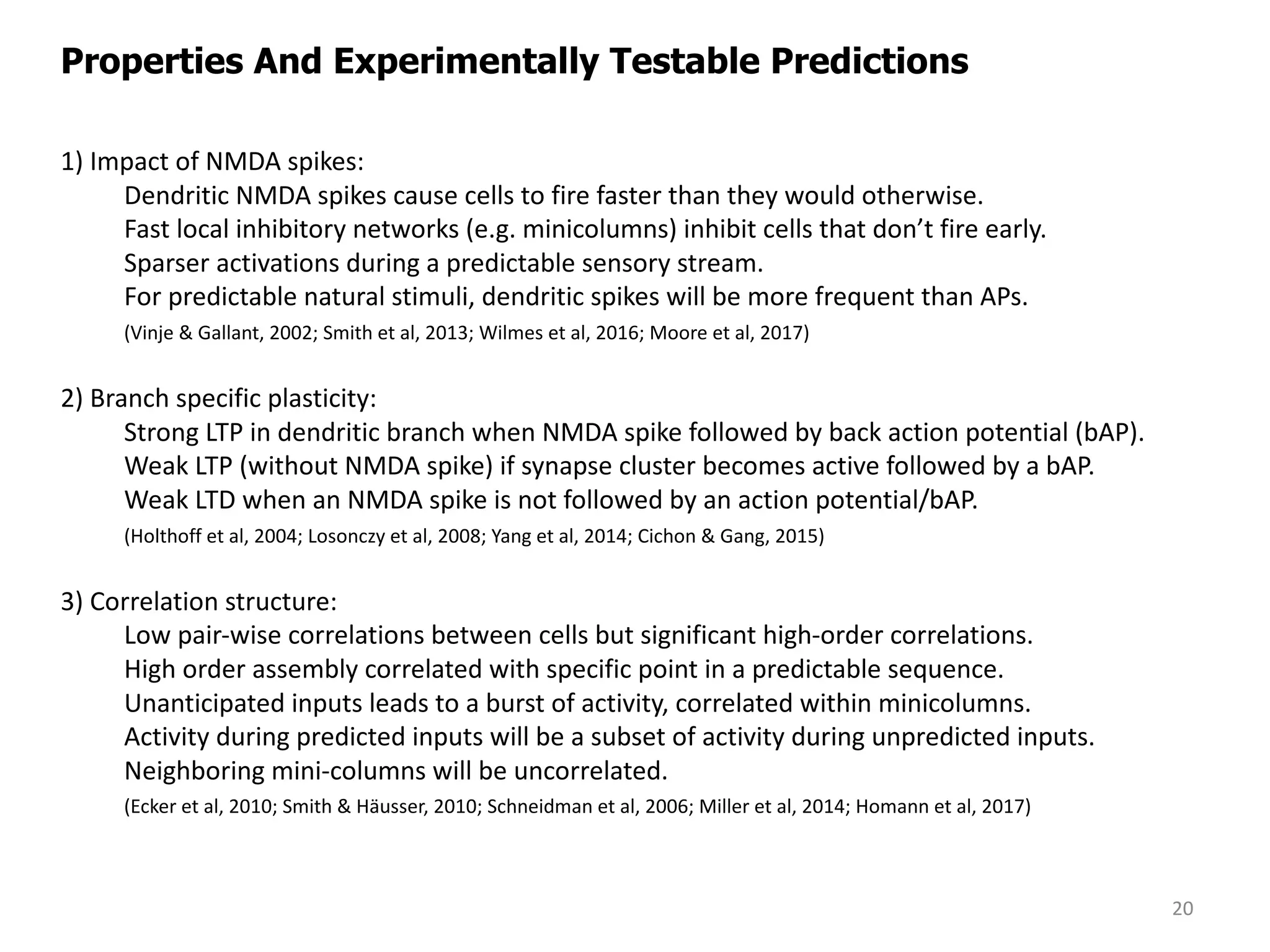

The workshop discussed how active dendrites enable spatiotemporal computation in the neocortex, with an emphasis on the neocortex's predictive capabilities. Key research areas include how predictive models are learned from both temporal and sensorimotor sequences, and the work makes experimentally testable predictions about NMDA spikes and branch-specific plasticity. The findings suggest that intelligent machines could be built on these cortical principles, and real-world applications are reported to show superior performance in anomaly detection and continuous learning.