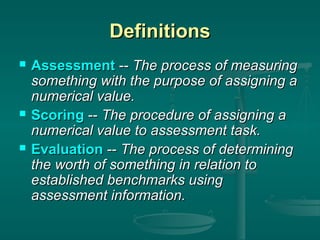

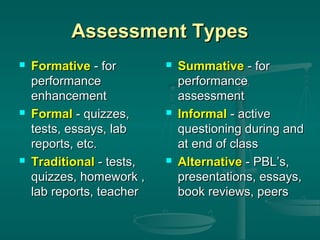

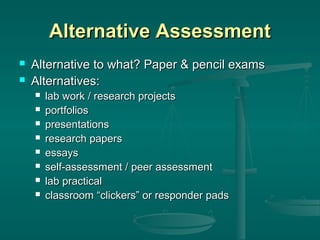

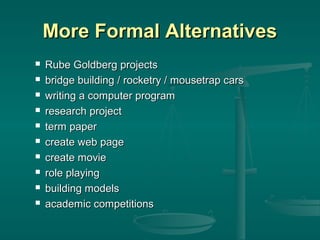

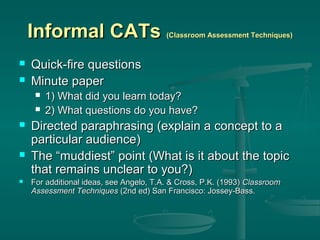

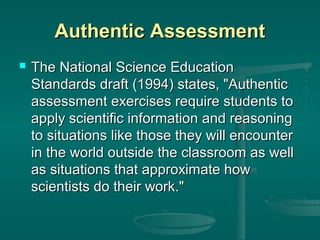

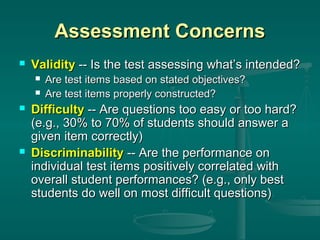

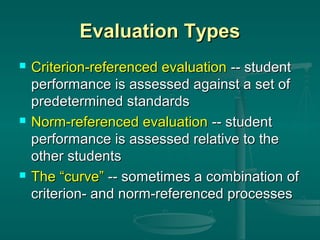

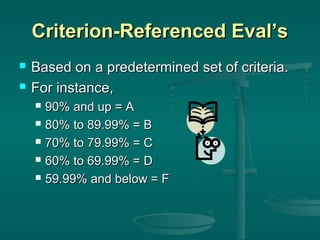

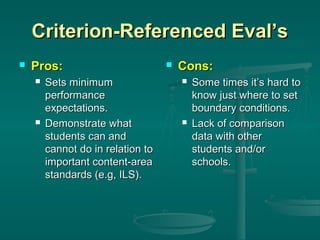

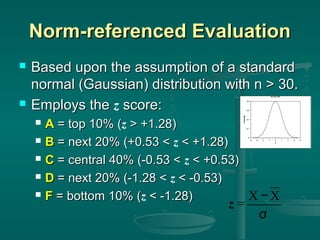

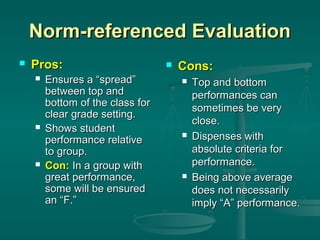

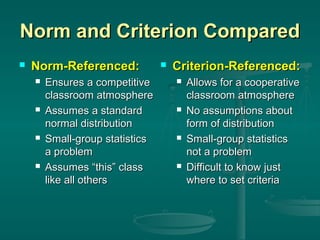

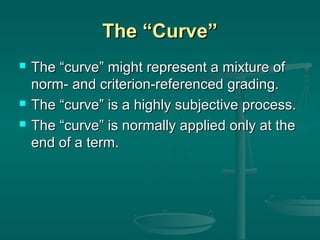

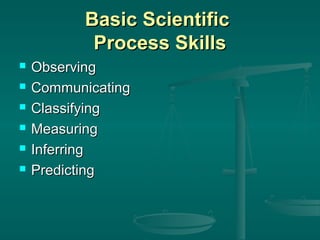

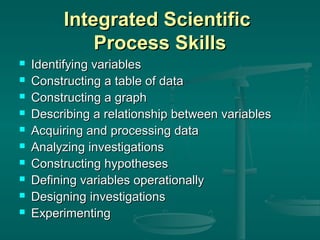

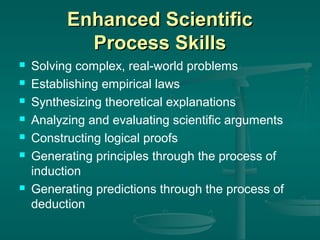

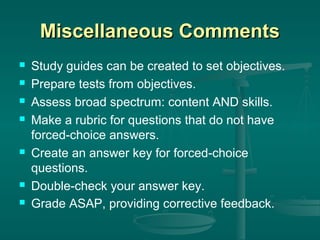

This document covers assessment, scoring, and evaluation in education, beginning with definitions of the three terms. It describes types of assessment such as formative and summative, and discusses alternative assessments such as projects, presentations, and portfolios. It sets out criteria for effective assessment, including validity, difficulty, and discriminability, and explains that evaluation can be criterion-referenced, norm-referenced, or graded on a curve. It also gives examples of assessing scientific process skills, along with tips on grading, handling appeals, using portfolios, and record keeping.