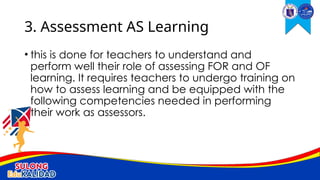

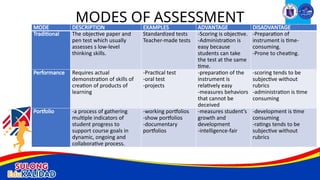

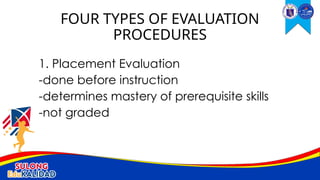

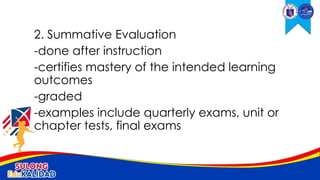

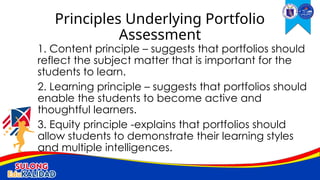

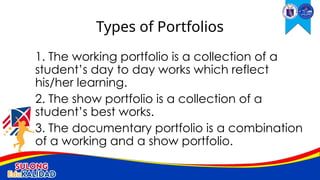

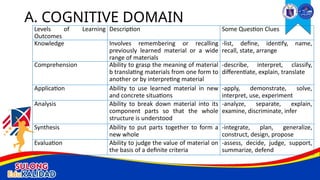

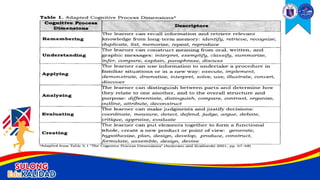

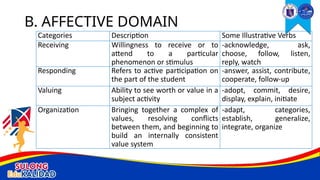

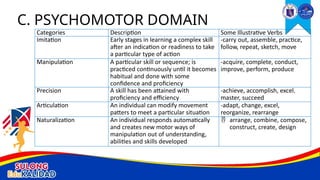

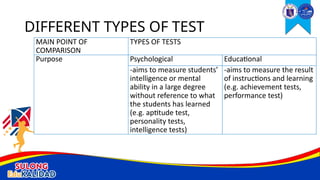

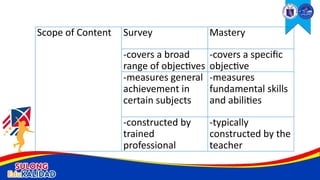

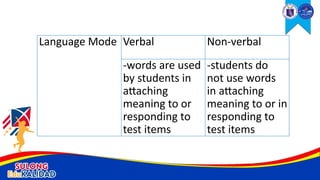

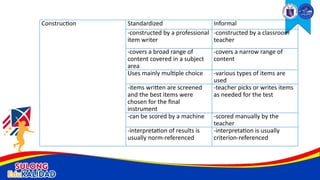

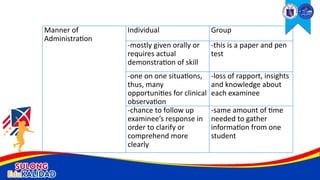

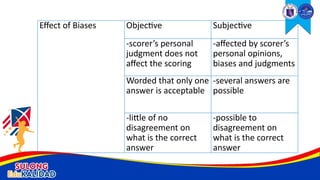

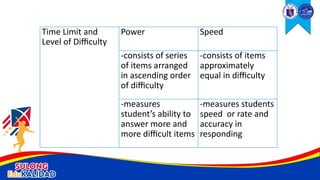

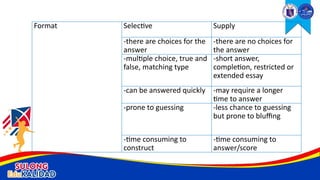

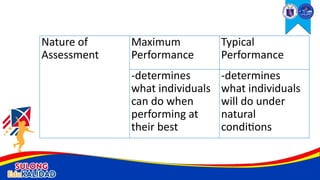

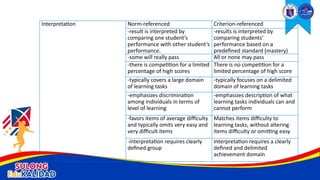

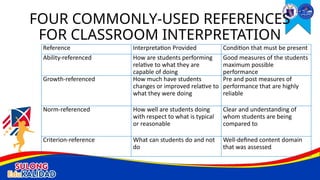

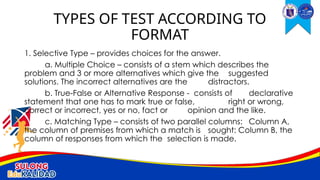

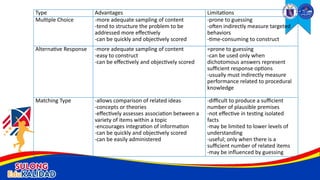

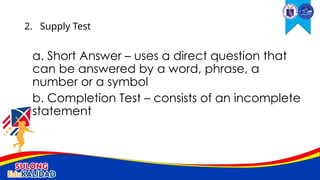

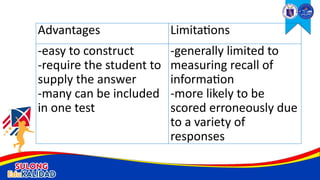

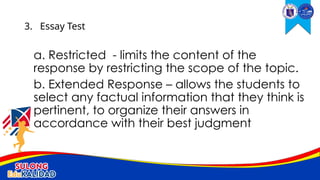

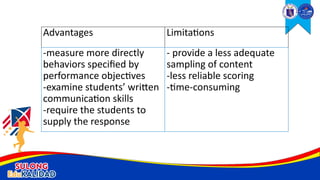

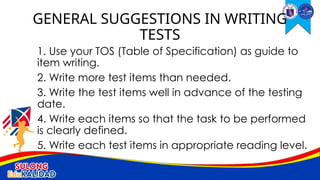

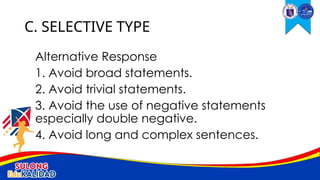

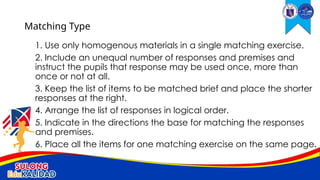

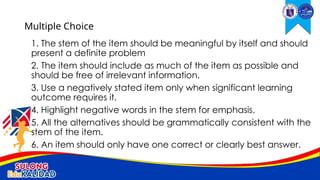

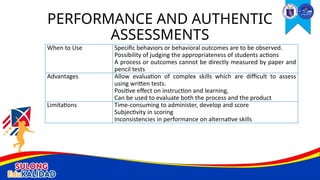

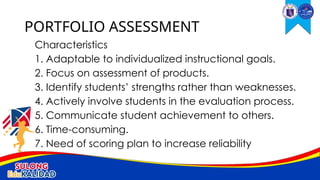

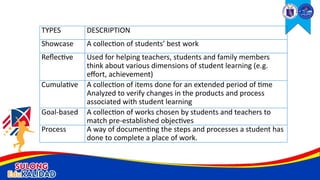

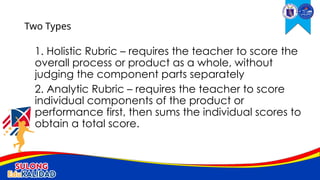

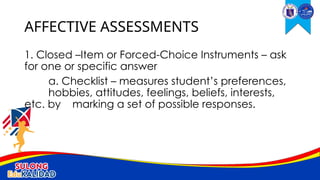

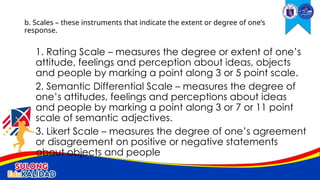

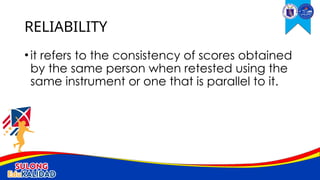

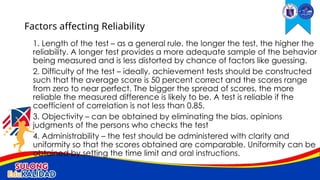

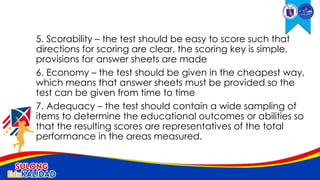

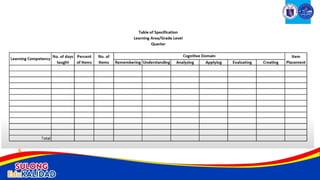

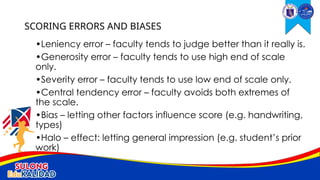

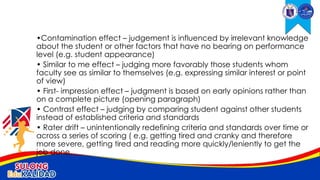

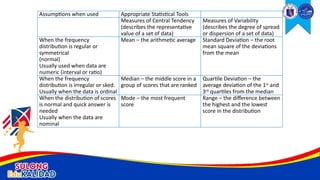

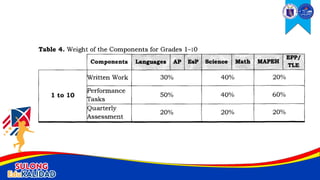

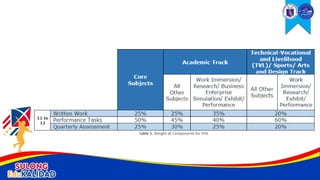

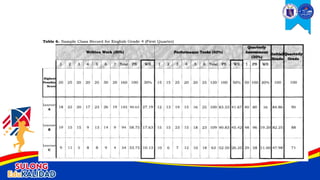

The document provides an extensive overview of assessment types and methods, including traditional, alternative, and authentic assessments, and of their purposes in education, such as placement, formative, and summative evaluation. It outlines principles and criteria for high-quality assessment, focusing on validity, reliability, fairness, and communication, and discusses various testing formats along with their advantages and limitations. The text emphasizes the importance of ongoing assessment in the learning process and the need for educators to communicate assessment results effectively to support student growth.