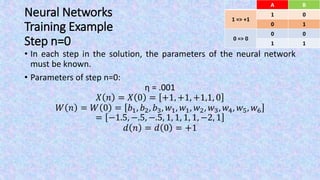

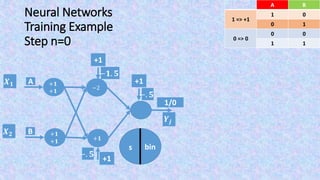

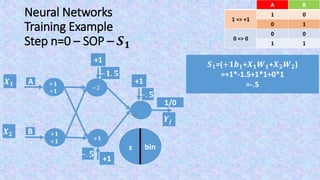

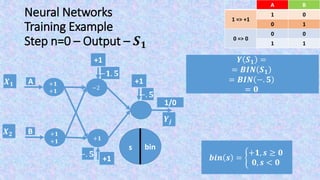

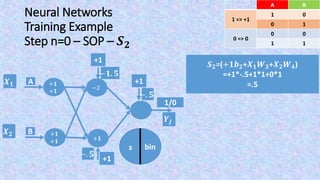

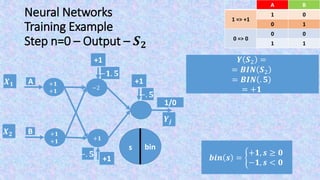

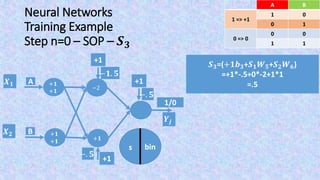

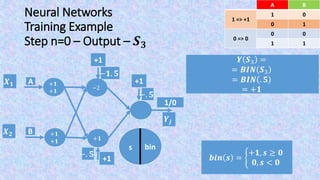

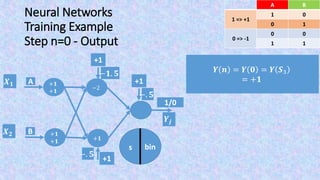

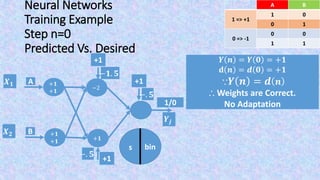

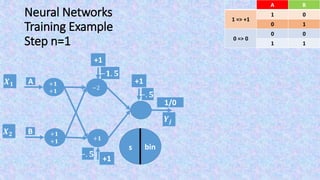

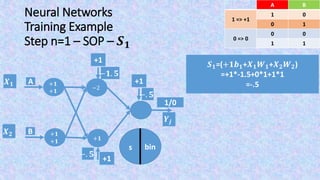

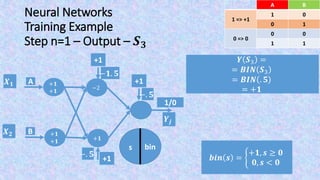

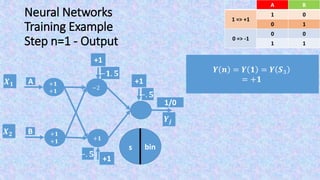

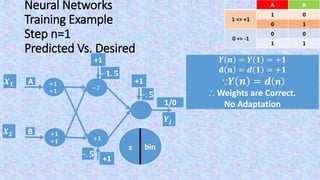

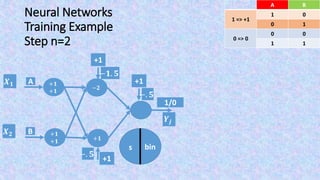

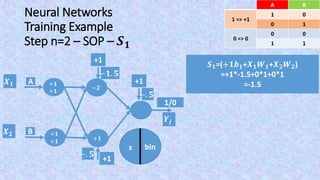

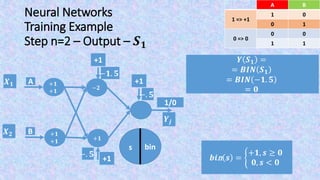

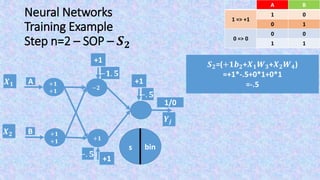

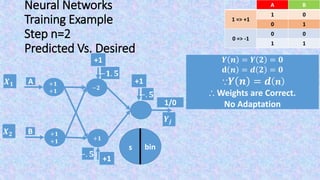

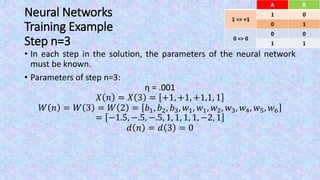

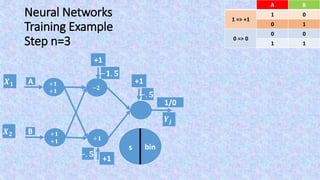

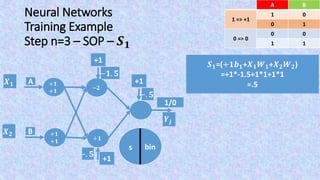

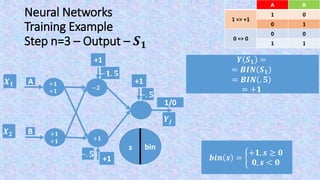

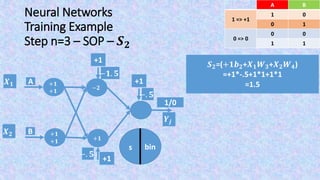

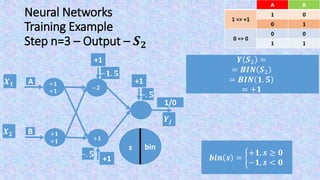

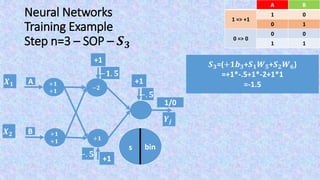

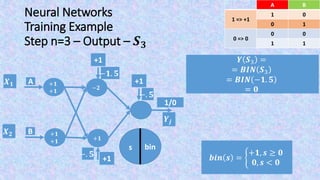

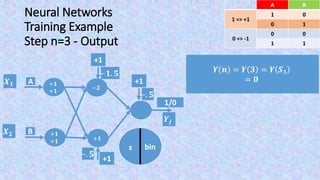

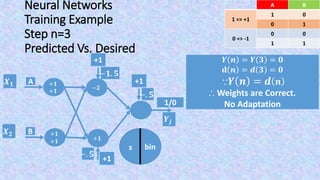

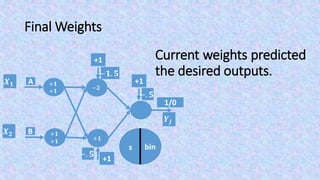

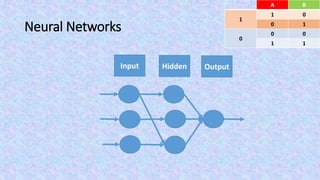

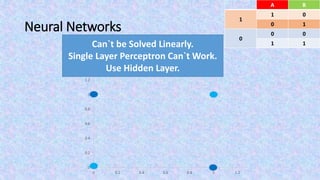

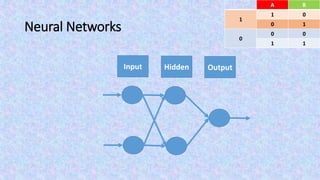

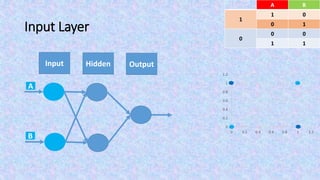

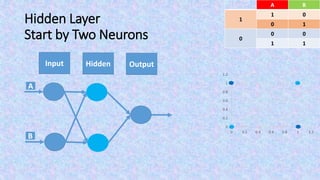

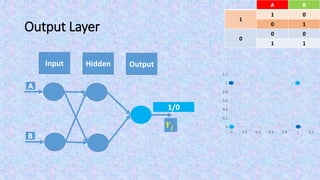

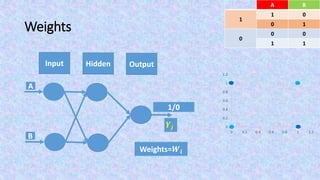

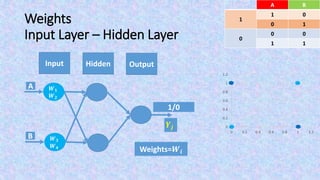

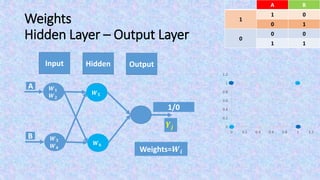

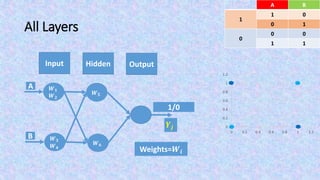

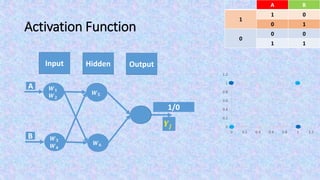

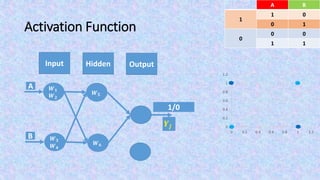

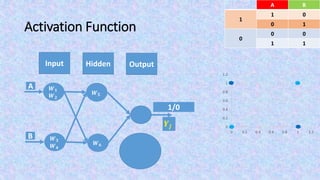

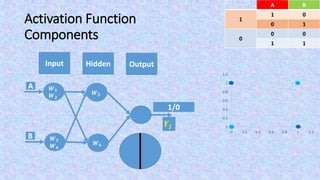

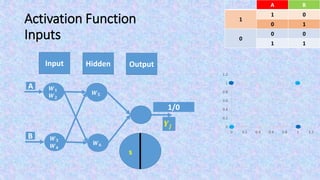

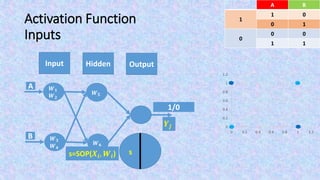

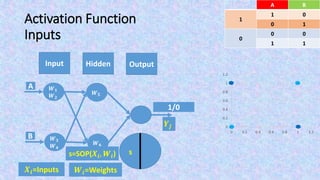

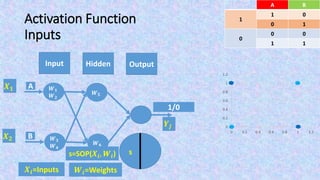

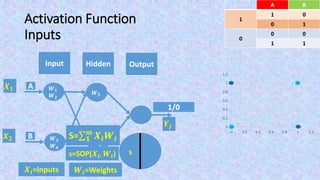

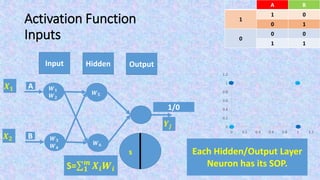

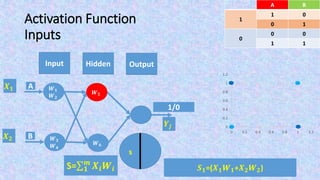

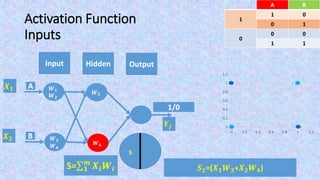

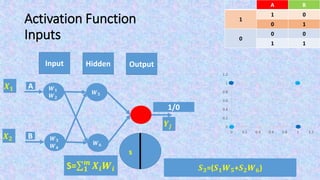

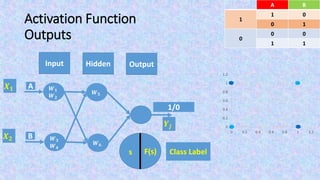

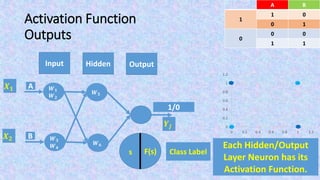

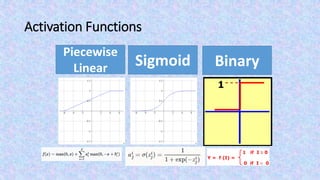

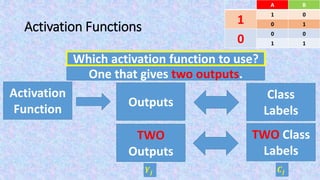

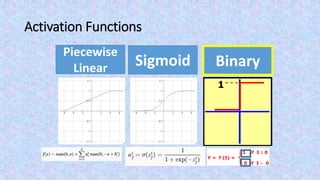

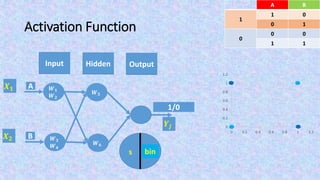

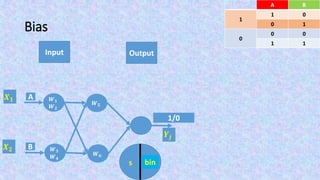

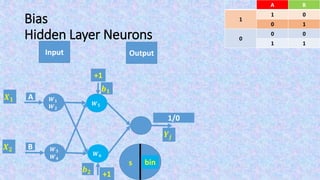

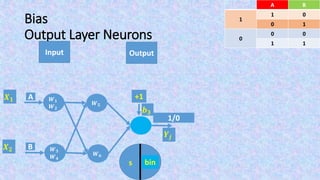

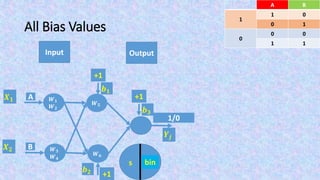

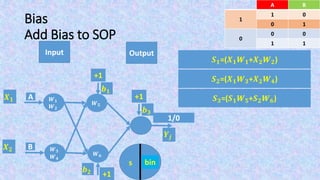

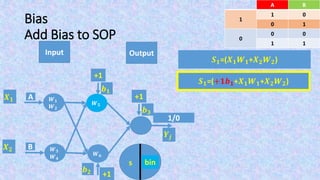

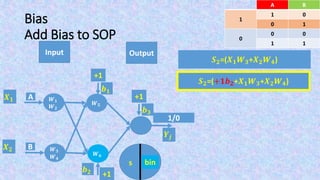

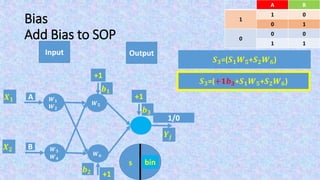

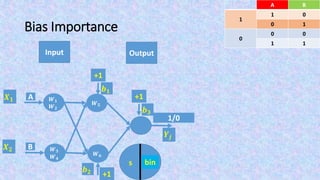

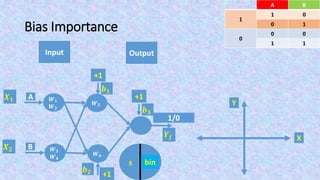

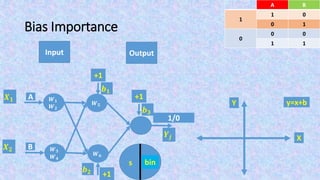

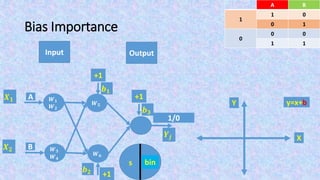

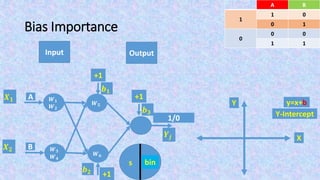

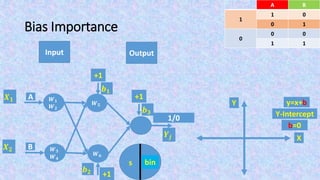

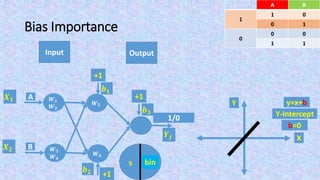

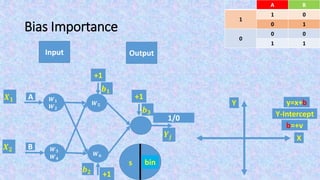

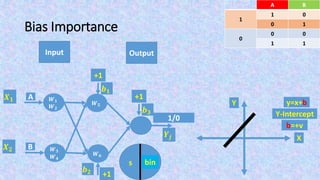

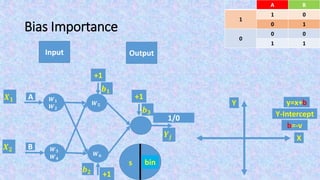

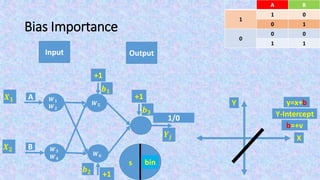

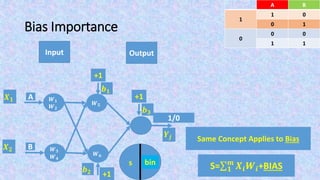

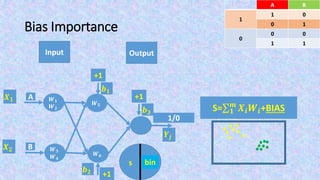

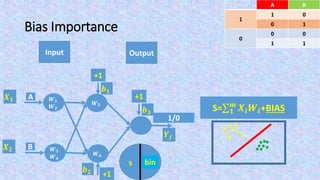

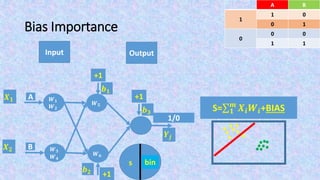

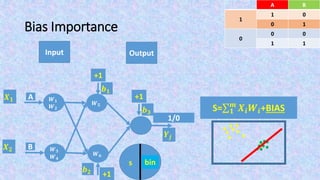

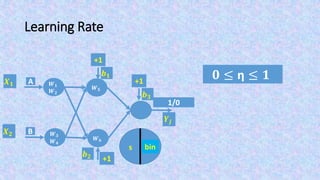

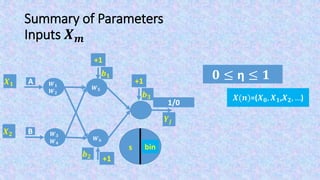

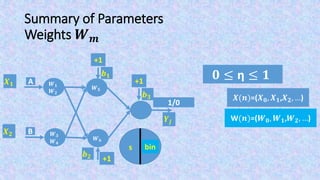

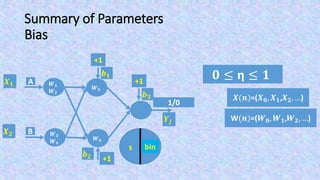

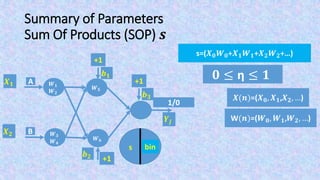

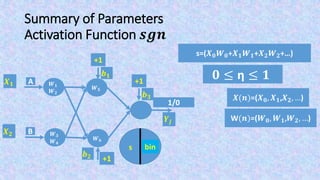

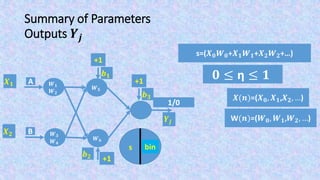

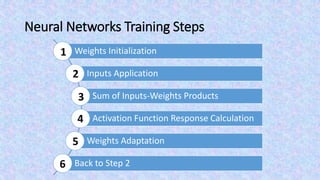

The document discusses artificial neural networks (ANNs) and their application to the XOR problem, which is not linearly separable and therefore cannot be solved by a single-layer perceptron. It explains the structure of a neural network, with input, hidden, and output layers, and how weights and activation functions are used to process and classify data. It also covers biases and their role in improving the performance of neural networks.
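As a minimal sketch of why a hidden layer helps, the snippet below wires up a 2-2-1 network that computes XOR. The weights, biases, the step activation with a threshold at 0, and the names `step` and `xor_net` are hand-picked assumptions for illustration; they are not taken from the slides, and many other weight choices would work equally well.

```python
def step(s):
    """Hard-limit activation: 1 if the weighted sum is non-negative, else 0 (assumed)."""
    return 1 if s >= 0 else 0


def xor_net(x1, x2):
    """Hypothetical 2-2-1 network with hand-picked weights and biases."""
    # Hidden neuron 1 behaves like OR: fires when at least one input is 1.
    h1 = step(1.0 * x1 + 1.0 * x2 - 0.5)
    # Hidden neuron 2 behaves like AND: fires only when both inputs are 1.
    h2 = step(1.0 * x1 + 1.0 * x2 - 1.5)
    # Output neuron fires for "OR but not AND", which is XOR.
    return step(1.0 * h1 - 1.0 * h2 - 0.5)


if __name__ == "__main__":
    for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
        print(x1, x2, xor_net(x1, x2))
```

Each hidden neuron draws one straight line in the input plane; the output neuron combines the two half-planes, which a single neuron cannot do on its own.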

![Regarding 5th Step: Weights Adaptation

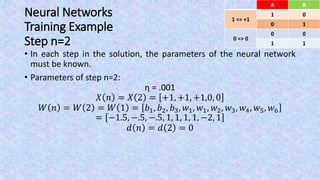

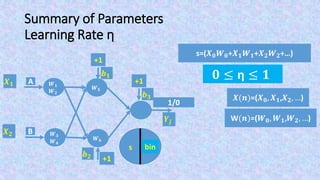

• If the predicted output Y is not the same as the desired output d,

then weights are to be adapted according to the following equation:

𝑾 𝒏 + 𝟏 = 𝑾 𝒏 + η 𝒅 𝒏 − 𝒀 𝒏 𝑿(𝒏)

Where

𝑾 𝒏 = [𝒃 𝒏 , 𝑾 𝟏(𝒏), 𝑾 𝟐(𝒏), 𝑾 𝟑(𝒏), … , 𝑾 𝒎(𝒏)]](https://image.slidesharecdn.com/artificialneuralnetworksanns-xor-step-by-step-170503023140/85/Artificial-Neural-Networks-ANNs-XOR-Step-By-Step-68-320.jpg)
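The weight adaptation step can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the deck's own code: the names `step` and `adapt_weights` are hypothetical, the activation is assumed to be a hard limiter with threshold 0, and the input vector X(n) is assumed to carry a leading +1 so that it lines up with the bias b(n) stored at the front of W(n).

```python
def step(s):
    """Hard-limit activation (assumed): 1 if s >= 0, else 0."""
    return 1 if s >= 0 else 0


def adapt_weights(w, x, d, eta):
    """Apply W(n+1) = W(n) + eta * (d(n) - Y(n)) * X(n) once.

    w   -- [b, w1, ..., wm], bias first
    x   -- [+1, x1, ..., xm], leading +1 pairs with the bias (assumption)
    d   -- desired output for this sample
    eta -- learning rate
    Returns the new weight list and the predicted output Y(n).
    """
    y = step(sum(wi * xi for wi, xi in zip(w, x)))
    if y == d:
        # Prediction already correct: the weights stay unchanged.
        return list(w), y
    return [wi + eta * (d - y) * xi for wi, xi in zip(w, x)], y


if __name__ == "__main__":
    w = [0.0, 0.0, 0.0]   # b, w1, w2
    x = [1.0, 1.0, 0.0]   # +1 for the bias, then x1, x2
    new_w, y = adapt_weights(w, x, d=0, eta=0.5)
    print(y, new_w)
```

When Y(n) equals d(n), the error term is zero, so the explicit `if` only makes the "adapt only on a mismatch" condition visible; the update formula alone would leave the weights unchanged as well.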