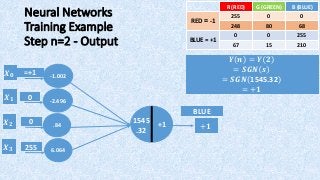

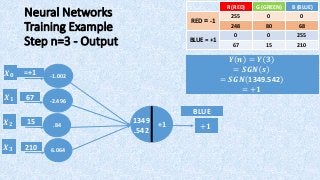

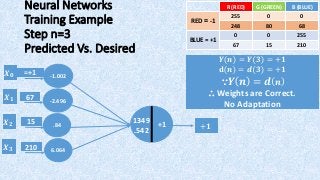

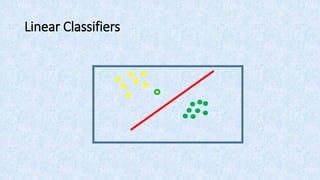

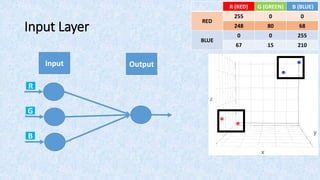

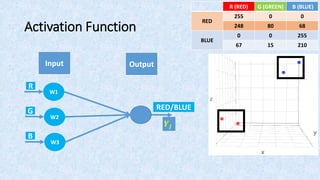

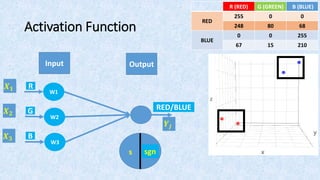

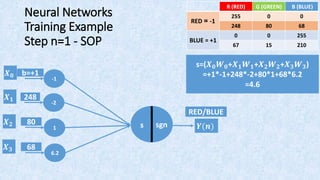

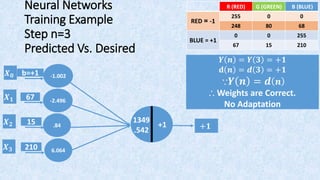

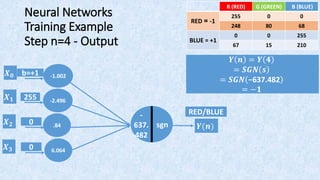

This document, from Menoufia University, is a step-by-step guide to training and testing artificial neural networks (ANNs) for classification. It covers linear and nonlinear classifiers, the structure of a neural network (input, hidden, and output layers), and the use of various activation functions. It also explains why weights and biases matter when training a neural network.
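The overview mentions activation functions and the role of weights and biases. The minimal sketch below shows how they fit together in a single neuron: the neuron adds a bias to the weighted sum of its inputs and passes the result through an activation function. The three functions shown (unit step, bipolar sign, logistic sigmoid) are common examples chosen as an assumption; they are not necessarily the set the slides use. The inputs, weights, and bias in the demo are hypothetical.

```python
import math
from typing import Callable, Sequence

# Common activation functions (an illustrative selection, assumed here;
# the slides may use a different set). Each maps a net input s to an output.


def step(s: float) -> int:
    """Unit step: 1 if s >= 0, else 0."""
    return 1 if s >= 0 else 0


def sign(s: float) -> int:
    """Bipolar sign: +1 if s >= 0, else -1 (s == 0 is mapped to +1 here)."""
    return 1 if s >= 0 else -1


def sigmoid(s: float) -> float:
    """Logistic sigmoid: a smooth output in the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-s))


def neuron(x: Sequence[float], w: Sequence[float], b: float,
           activation: Callable[[float], float]) -> float:
    """Weighted sum of the inputs plus the bias, passed through the activation."""
    s = b + sum(wi * xi for wi, xi in zip(w, x))
    return activation(s)


if __name__ == "__main__":
    x = [1.0, 2.0]   # hypothetical inputs
    w = [0.5, -1.0]  # hypothetical weights
    b = 0.2          # hypothetical bias
    # Net input: s = 0.2 + 0.5*1.0 + (-1.0)*2.0 = -1.3
    print(neuron(x, w, b, step))     # 0
    print(neuron(x, w, b, sign))     # -1
    print(neuron(x, w, b, sigmoid))  # roughly 0.214
```

Changing only the bias `b` shifts the net input, and therefore the point where the step or sign output flips. This is one reason the bias is trained along with the weights.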

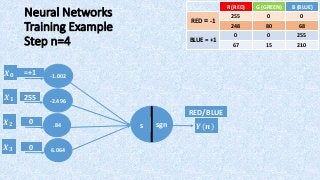

![Regarding 5th Step: Weights Adaptation](https://image.slidesharecdn.com/neuralnetwork-trainingexample-shared-170428161918/85/Introduction-to-Artificial-Neural-Networks-ANNs-Step-by-Step-Training-Testing-Example-68-320.jpg)

**Regarding 5th Step: Weights Adaptation**

- If the predicted output $Y$ is not the same as the desired output $d$, then the weights are adapted according to the following equation:

$$W(n+1) = W(n) + \eta\,\big(d(n) - Y(n)\big)\,X(n)$$

where

$$W(n) = \big[\,b(n),\ W_1(n),\ W_2(n),\ W_3(n),\ \ldots,\ W_m(n)\,\big]$$
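The weight-adaptation rule can be sketched in Python as follows. This minimal sketch relies on several assumptions that this excerpt does not state:

- The input vector is augmented as X(n) = [+1, X1(n), ..., Xm(n)], so its leading +1 pairs with the bias b(n) in W(n).
- The activation is a bipolar sign function, and the desired outputs are +1 or -1.
- η is the learning rate.

The helper names (`sgn`, `predict`, `adapt`, `train`) and the AND-style training data are hypothetical.

```python
from typing import List, Sequence


def sgn(s: float) -> int:
    # Bipolar sign activation (assumed): +1 if s >= 0, else -1.
    return 1 if s >= 0 else -1


def predict(w: Sequence[float], x: Sequence[float]) -> int:
    # w = [b, W1, ..., Wm] and x = [+1, X1, ..., Xm] (assumed augmented form),
    # so the leading +1 in x multiplies the bias b.
    return sgn(sum(wi * xi for wi, xi in zip(w, x)))


def adapt(w: Sequence[float], x: Sequence[float], d: int, eta: float) -> List[float]:
    # One application of W(n+1) = W(n) + eta * (d(n) - Y(n)) * X(n).
    y = predict(w, x)
    return [wi + eta * (d - y) * xi for wi, xi in zip(w, x)]


def train(samples: Sequence[Sequence[float]], labels: Sequence[int],
          w: Sequence[float], eta: float = 0.1, max_epochs: int = 100) -> List[float]:
    # Sweep over the data until every sample is classified correctly
    # (or max_epochs is reached); weights change only when Y != d.
    w = list(w)
    for _ in range(max_epochs):
        errors = 0
        for x, d in zip(samples, labels):
            if predict(w, x) != d:
                w = adapt(w, x, d, eta)
                errors += 1
        if errors == 0:
            break
    return w


if __name__ == "__main__":
    # Hypothetical AND-style data with bipolar labels; each x starts with +1.
    samples = [[1, 0, 0], [1, 0, 1], [1, 1, 0], [1, 1, 1]]
    labels = [-1, -1, -1, 1]
    w = train(samples, labels, [0.0, 0.0, 0.0], eta=0.1)
    print("learned W = [b, W1, W2]:", w)
    print("predictions:", [predict(w, x) for x in samples])
```

When the prediction is correct, d(n) − Y(n) is zero, so the update leaves W(n) unchanged. The rule only moves the weights (and the bias) after a misclassification. With bipolar outputs, each such move has magnitude 2η times the corresponding input.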