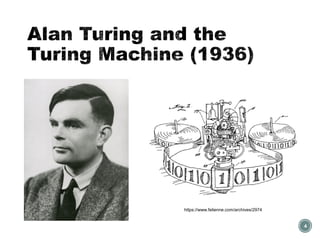

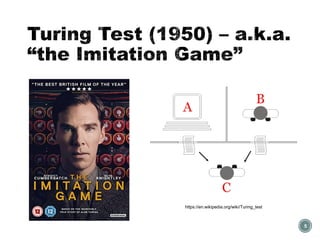

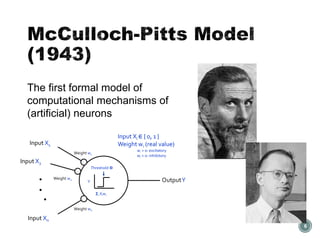

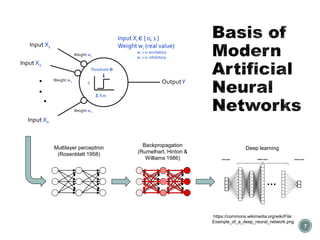

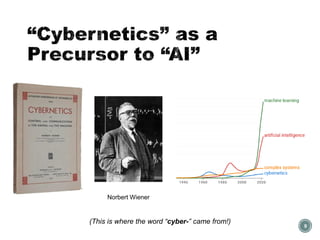

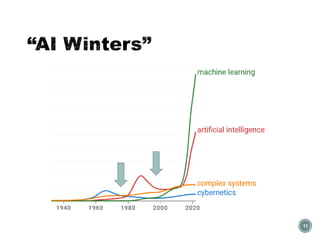

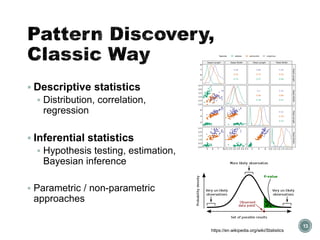

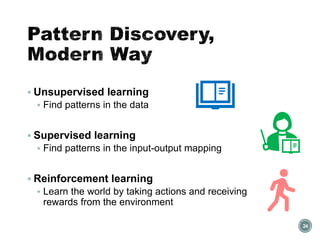

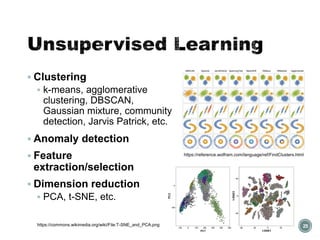

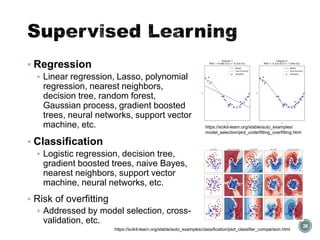

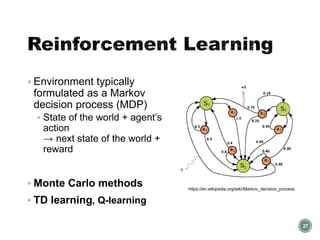

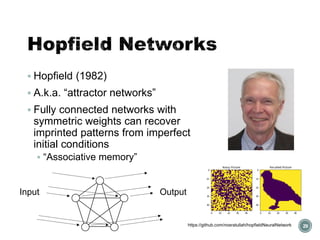

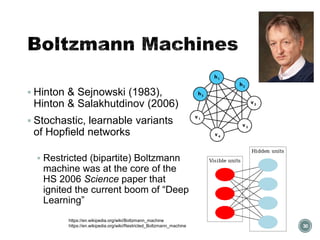

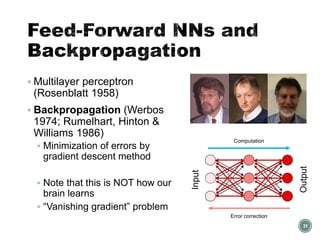

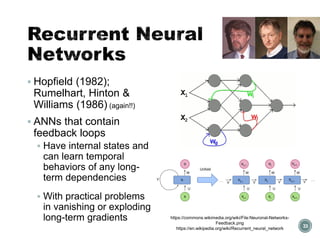

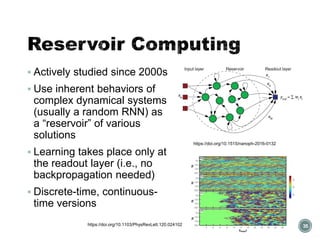

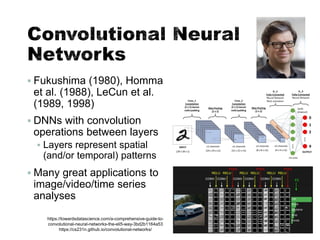

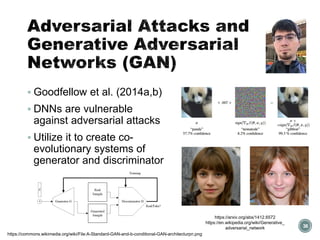

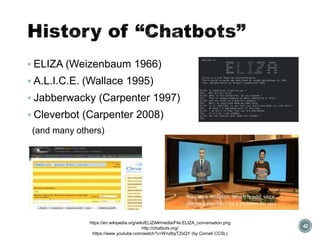

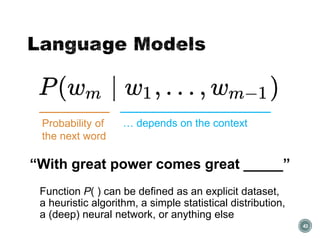

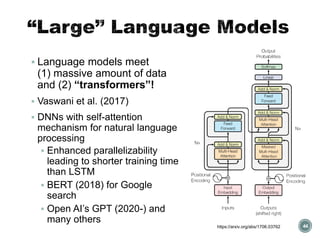

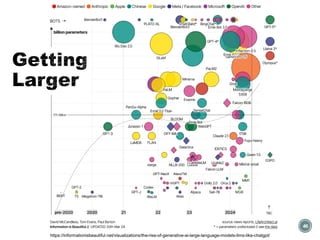

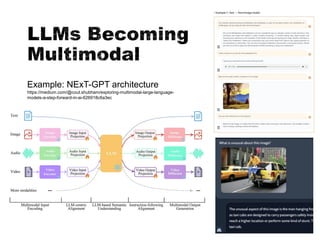

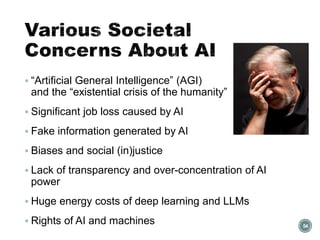

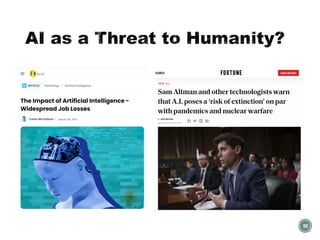

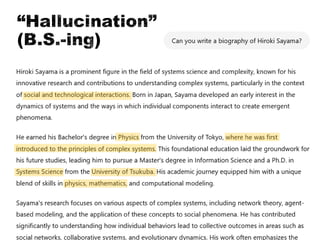

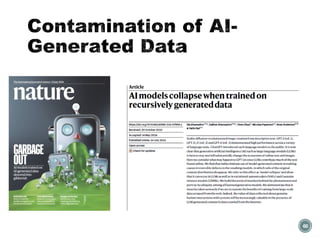

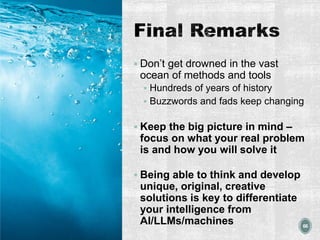

The document provides a comprehensive overview of artificial intelligence (AI). It covers the field's origins, key concepts such as machine learning, neural networks, and large language models, and the challenges and societal implications of these technologies. It traces historical developments from Turing's contributions to modern advances in deep learning, including architectures such as convolutional neural networks (CNNs) and generative adversarial networks (GANs). It also addresses the potential threats AI poses, including job displacement and bias, and emphasizes the importance of maintaining a broad perspective on AI's evolving landscape.