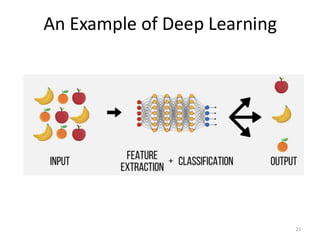

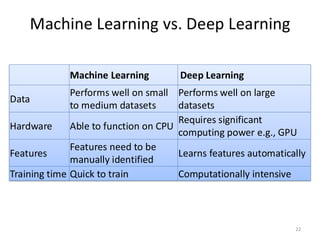

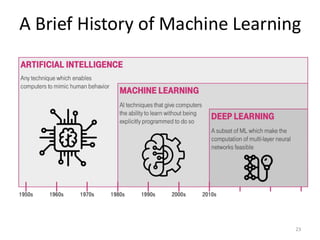

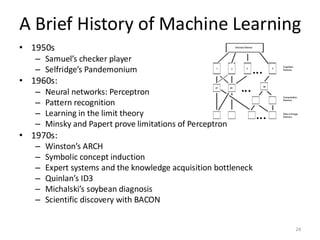

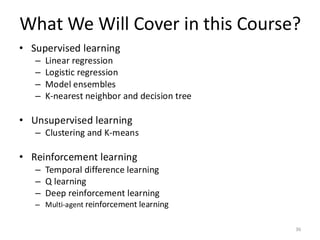

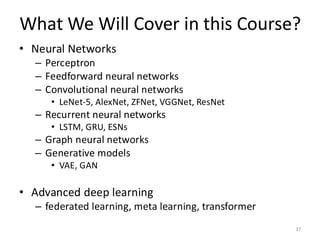

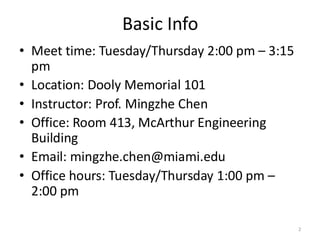

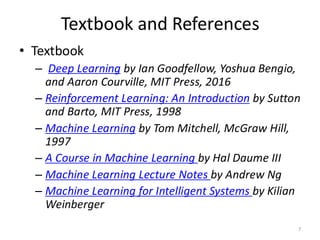

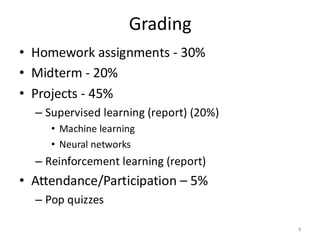

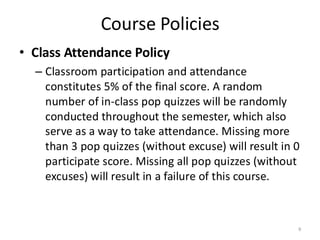

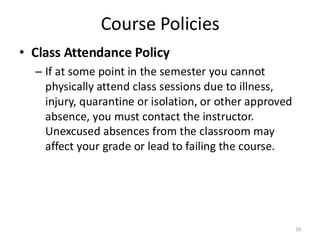

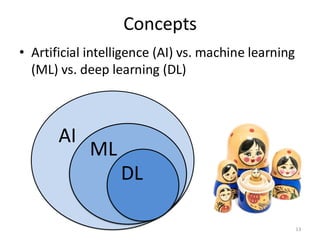

The document outlines the course information for ECE553/653 on neural networks, including class schedules, instructor details, grading policies, and course content focusing on artificial intelligence, machine learning, and deep learning. Key topics include supervised and unsupervised learning, neural network architectures, and the historical development of machine learning. The document also emphasizes academic integrity and attendance policies for the course.

![Examples of Artificial Intelligence. Programming: a problem (find a maximum number) plus rules (sort the numbers) yields code, e.g. [1,3,5,4,2] is sorted to [1,2,3,4,5]](https://image.slidesharecdn.com/lecture1-241220091525-0b5d49ad/85/Lecture-1-neural-network-covers-the-basic-16-320.jpg)
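
The slide's programming example (problem: find a maximum number; rule: sort the numbers; result: code) can be sketched in a few lines of Python. This is only an illustration of the idea, not code from the lecture; the function name `find_maximum` is a hypothetical choice, and the input list is the one shown on the slide.

```python
def find_maximum(numbers):
    """Return the largest value by applying the slide's rule: sort, then take the last item."""
    if not numbers:
        raise ValueError("numbers must not be empty")
    ordered = sorted(numbers)  # rule: sort the numbers in ascending order
    return ordered[-1]         # the maximum is the last element after sorting


data = [1, 3, 5, 4, 2]         # input list from the slide
print(sorted(data))            # [1, 2, 3, 4, 5]
print(find_maximum(data))      # 5
```

Here the programmer supplies the rule explicitly. Sorting is used only because the slide names it as the rule; Python's built-in `max(data)` would return the same result without sorting.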