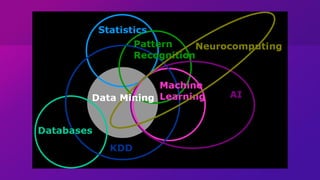

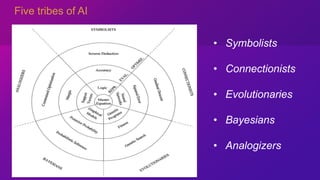

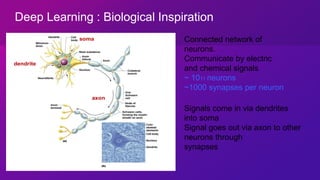

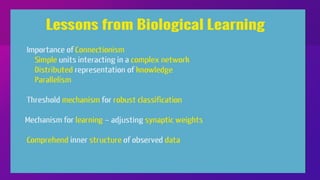

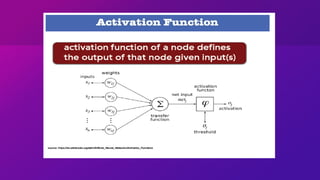

This document gives a brief biography of Şaban Dalaman, covering his educational and professional background, and summarizes key concepts in artificial intelligence and machine learning. It discusses Alan Turing's universal machine concept and the proposal for the 1956 Dartmouth workshop that helped define the field of AI, then defines AI, machine learning, deep learning, and data science. It also lists the different "tribes" and algorithms within machine learning.