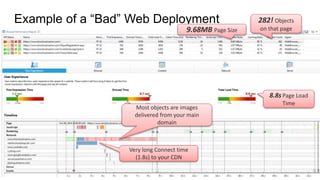

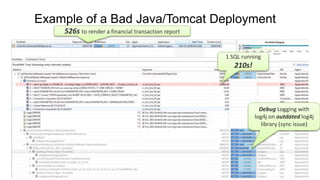

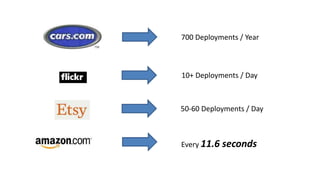

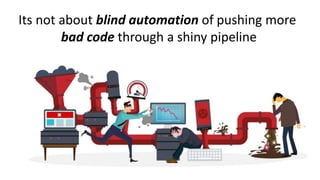

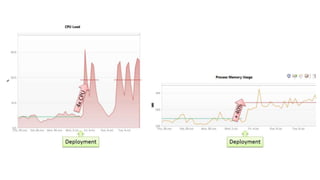

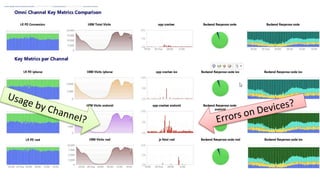

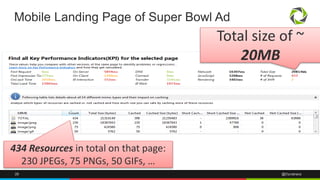

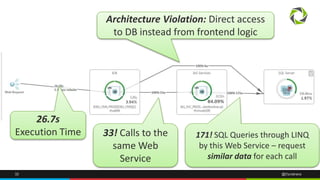

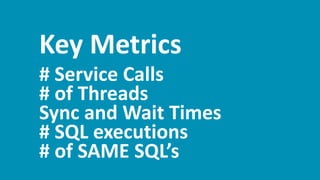

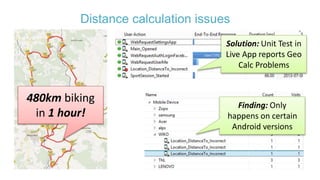

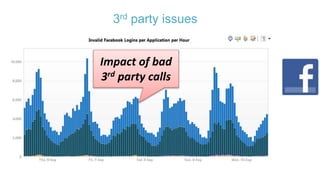

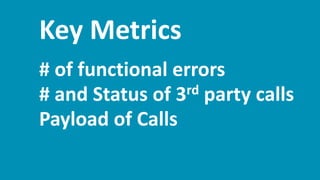

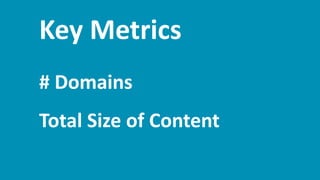

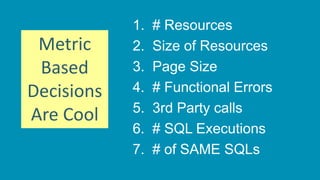

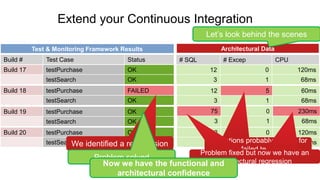

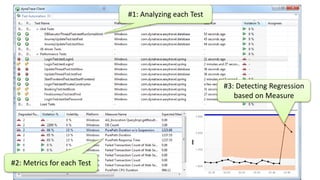

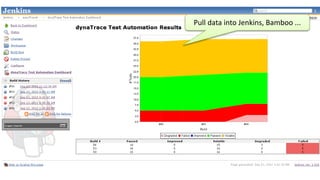

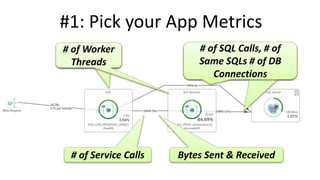

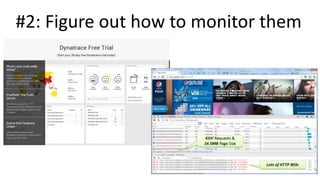

The document discusses best practices for ensuring application quality in software deployments, emphasizing the importance of metrics and of learning from past mistakes. It gives examples of poorly performing web and Java deployments and outlines strategies for improving quality, such as avoiding blind automation and integrating comprehensive metrics into the CI/CD pipeline. Key takeaways include focusing on resource management, monitoring third-party calls, and addressing architectural issues to improve application performance.
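To make "integrating metrics into the CI/CD pipeline" concrete, here is a minimal sketch of a metrics-based quality gate. It assumes each build's tests already produce a handful of per-build measurements. The names `BuildMetrics` and `quality_gate`, the specific metrics (SQL calls, third-party calls, payload size, response time), and the 10% tolerance are all hypothetical illustrations and are not taken from the slides. The gate compares a candidate build against a baseline and reports every metric that grew beyond the tolerance. A pipeline step could then fail the build when that list is non-empty, instead of blindly promoting whatever passed functional tests.

```python
from __future__ import annotations

from dataclasses import dataclass, fields


# Hypothetical per-build measurements; a real pipeline would collect these
# from its test runs or monitoring tool.
@dataclass(frozen=True)
class BuildMetrics:
    sql_calls: int
    third_party_calls: int
    payload_kb: float
    response_ms: float


def quality_gate(baseline: BuildMetrics, candidate: BuildMetrics,
                 tolerance: float = 0.10) -> list[str]:
    """Return one message per metric that grew more than `tolerance` over the baseline."""
    violations = []
    for f in fields(BuildMetrics):
        old = getattr(baseline, f.name)
        new = getattr(candidate, f.name)
        limit = old * (1 + tolerance)  # allowed ceiling for this metric
        if new > limit:
            violations.append(f"{f.name}: {old} -> {new} (limit {limit:.1f})")
    return violations


if __name__ == "__main__":
    baseline = BuildMetrics(sql_calls=12, third_party_calls=3, payload_kb=250.0, response_ms=180.0)
    # Candidate build quadruples its SQL calls, e.g. an N+1 query regression.
    candidate = BuildMetrics(sql_calls=48, third_party_calls=3, payload_kb=260.0, response_ms=190.0)
    for problem in quality_gate(baseline, candidate):
        # A real CI step would exit non-zero here to stop the deployment.
        print("QUALITY GATE VIOLATION:", problem)
```

In this example, response time and payload size stay within tolerance, yet the gate still flags the jump in database calls. Gating on resource-usage metrics, not only on pass/fail results, catches architectural regressions before they reach production.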