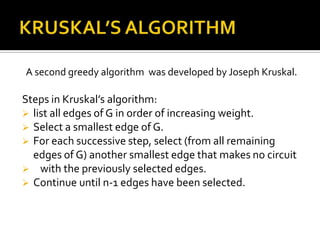

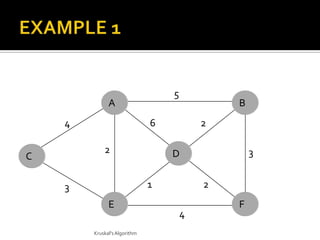

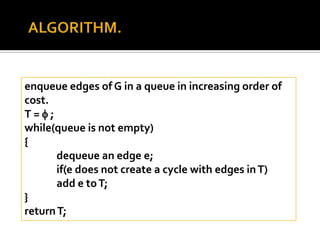

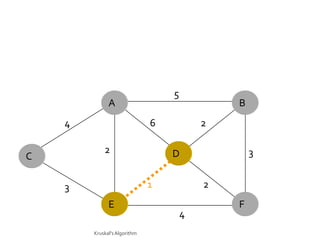

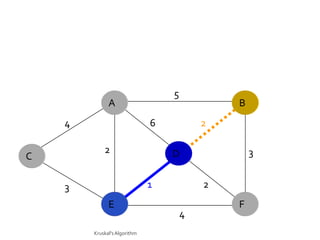

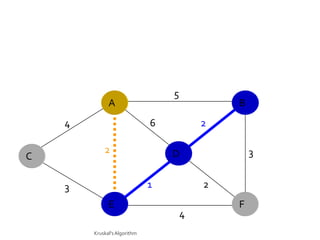

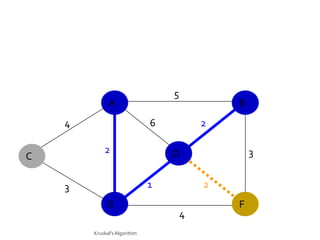

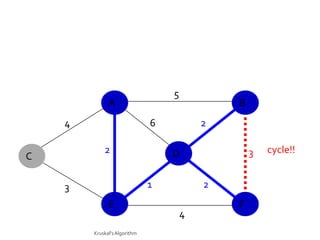

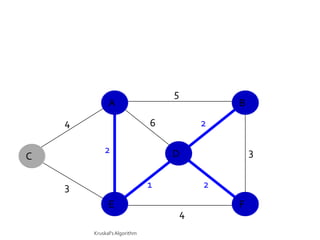

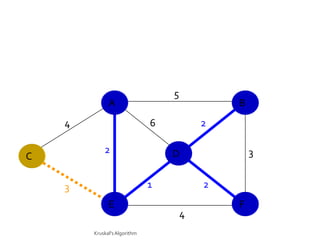

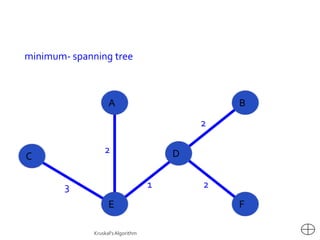

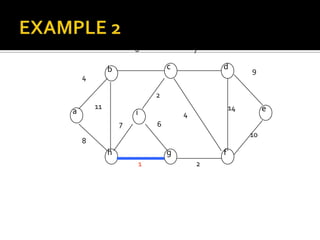

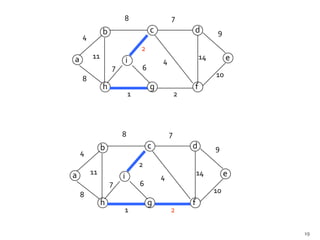

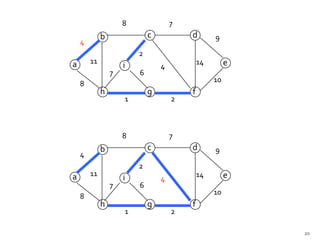

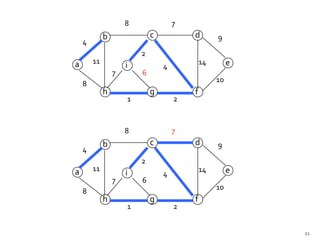

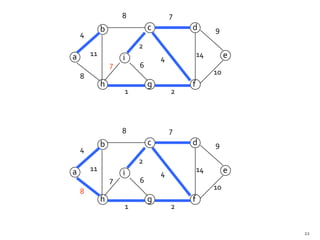

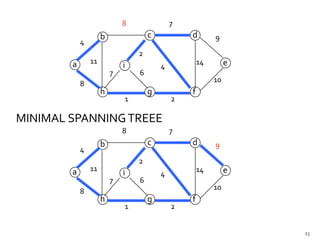

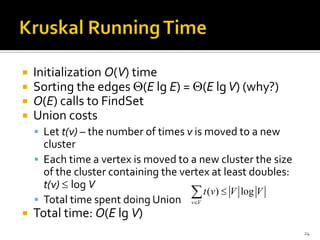

This document discusses the greedy approach to finding minimum spanning trees. A greedy algorithm makes the locally optimal choice at each step in the hope of reaching a globally optimal solution. Kruskal's algorithm is presented as an example: it sorts the edges by weight in nondecreasing order, then adds them one at a time, skipping any edge that would form a cycle with the edges already chosen. Greedy algorithms are typically faster than exhaustive search, but in general they are not guaranteed to find the optimal solution; for the minimum spanning tree problem, however, Kruskal's greedy strategy is provably optimal.
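The sorting-then-adding procedure described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not necessarily the implementation used in the slides: vertices are assumed to be numbered `0..n-1`, edges are assumed to be `(u, v, weight)` tuples, and cycle detection uses a union-find (disjoint-set) structure. The names `DisjointSet` and `kruskal` are hypothetical and chosen for illustration.

```python
from typing import List, Tuple

Edge = Tuple[int, int, float]  # assumed edge format: (u, v, weight)


class DisjointSet:
    """Union-find with path halving and union by rank, used to detect cycles."""

    def __init__(self, n: int) -> None:
        self.parent = list(range(n))
        self.rank = [0] * n

    def find(self, x: int) -> int:
        # Path halving: make each visited node point to its grandparent.
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]
            x = self.parent[x]
        return x

    def union(self, a: int, b: int) -> bool:
        """Merge the sets of a and b; return False if they were already joined."""
        ra, rb = self.find(a), self.find(b)
        if ra == rb:
            return False  # edge (a, b) would close a cycle
        if self.rank[ra] < self.rank[rb]:
            ra, rb = rb, ra
        self.parent[rb] = ra
        if self.rank[ra] == self.rank[rb]:
            self.rank[ra] += 1
        return True


def kruskal(n: int, edges: List[Edge]) -> Tuple[List[Edge], float]:
    """Return the chosen edges and their total weight for vertices 0..n-1."""
    dsu = DisjointSet(n)
    mst: List[Edge] = []
    total = 0.0
    # Greedy choice: always consider the lightest remaining edge first.
    for u, v, w in sorted(edges, key=lambda e: e[2]):
        if dsu.union(u, v):  # keep the edge only if it joins two separate trees
            mst.append((u, v, w))
            total += w
            if len(mst) == n - 1:
                break  # a spanning tree on n vertices has exactly n - 1 edges
    return mst, total


if __name__ == "__main__":
    example_edges = [(0, 1, 4), (0, 2, 3), (1, 2, 1), (1, 3, 2), (2, 3, 4), (3, 4, 2)]
    tree, weight = kruskal(5, example_edges)
    print(tree)    # [(1, 2, 1), (1, 3, 2), (3, 4, 2), (0, 2, 3)]
    print(weight)  # 8.0
```

Union-find is used here because it answers "would this edge form a cycle?" in near-constant amortized time, so sorting the edges dominates the running time. If the input graph is disconnected, this sketch returns a minimum spanning forest rather than a single tree.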