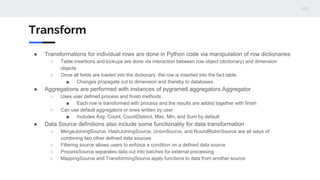

Pygrametl is a Python framework for developing extract-transform-load (ETL) processes. It runs on both CPython and Jython and provides functionality for database connections and data manipulation. Users define data sources and transformations in Python code, so that data is loaded into Python and processed there, and the framework provides interfaces for insertions and lookups through abstractions such as fact tables and dimensions. Additional resources and a demo are available online to help users understand and apply Pygrametl.
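
To make the idea of lookups and insertions against dimensions and fact tables more concrete, here is a minimal sketch of the pattern using only Python's standard `sqlite3` module. It does not use Pygrametl's own API; the table names (`product`, `sales`), the helper `ensure_dimension_row`, and the sample rows are all hypothetical and chosen purely for illustration.

```python
import sqlite3


def ensure_dimension_row(conn, table, key, lookup_atts, row):
    """Return the surrogate key for `row`, inserting the row first if it is missing.

    Hypothetical helper: looks the row up by `lookup_atts` and inserts it on a miss.
    """
    where = " AND ".join(f"{att} = ?" for att in lookup_atts)
    values = [row[att] for att in lookup_atts]
    found = conn.execute(
        f"SELECT {key} FROM {table} WHERE {where}", values
    ).fetchone()
    if found is not None:
        return found[0]
    columns = ", ".join(lookup_atts)
    marks = ", ".join("?" for _ in lookup_atts)
    cursor = conn.execute(
        f"INSERT INTO {table} ({columns}) VALUES ({marks})", values
    )
    return cursor.lastrowid


def load(conn, source_rows):
    """Transform each source row, resolve its dimension key, and insert a fact."""
    for row in source_rows:
        row["price"] = int(row["price"])  # transformation: text to integer
        product_id = ensure_dimension_row(
            conn, "product", "product_id", ["name"], row
        )
        conn.execute(
            "INSERT INTO sales (product_id, price) VALUES (?, ?)",
            (product_id, row["price"]),
        )
    conn.commit()


# Assumed toy schema: one dimension table and one fact table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE product (product_id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("CREATE TABLE sales (product_id INTEGER, price INTEGER)")

# Assumed data source: an in-memory list of dictionaries standing in for a file.
source_rows = [
    {"name": "book", "price": "10"},
    {"name": "pen", "price": "2"},
    {"name": "book", "price": "12"},
]
load(conn, source_rows)
```

In this sketch the repeated `book` row reuses the existing dimension key instead of creating a duplicate, which is the kind of lookup-then-insert bookkeeping an ETL framework is meant to take off the developer's hands.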