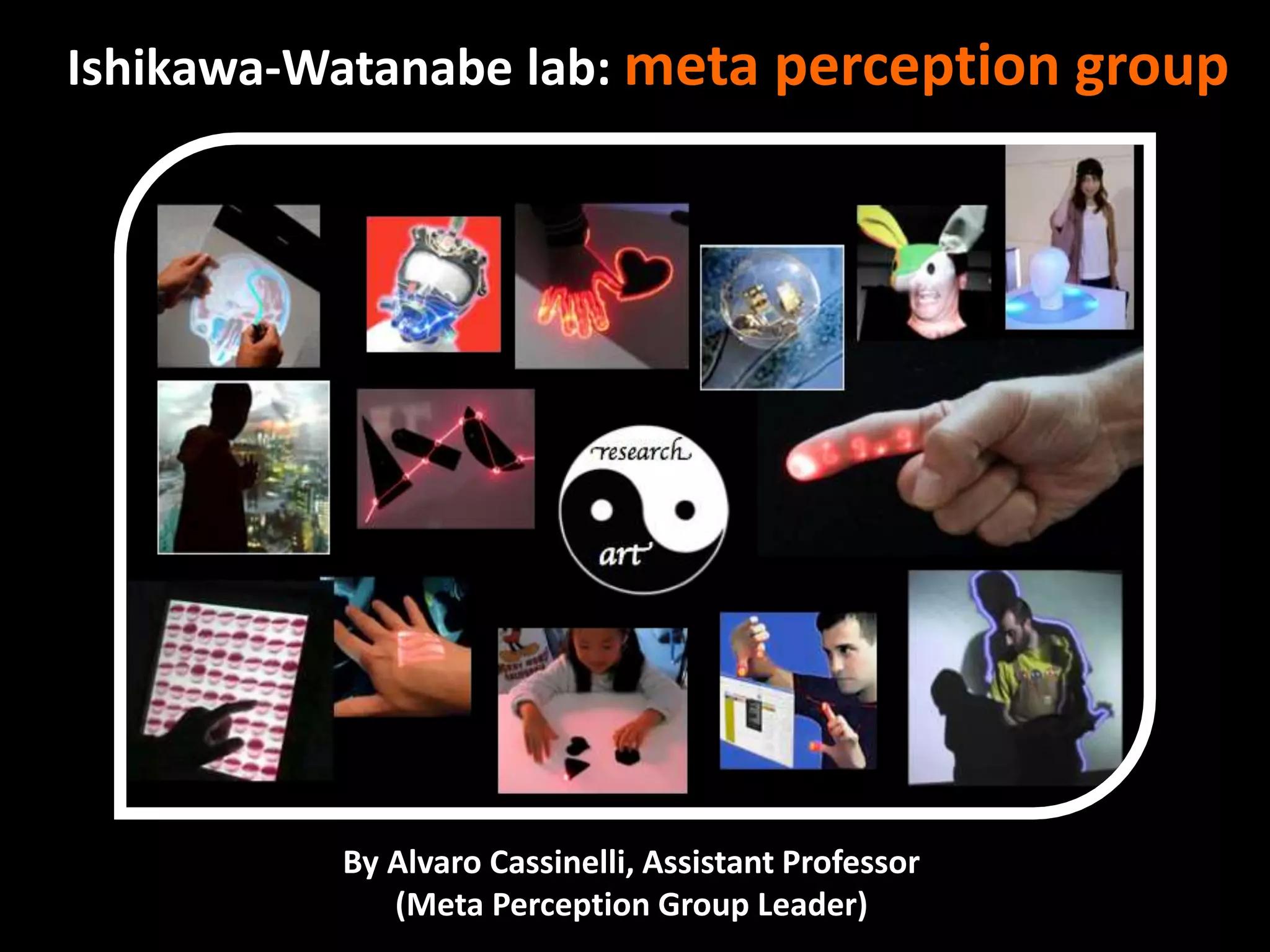

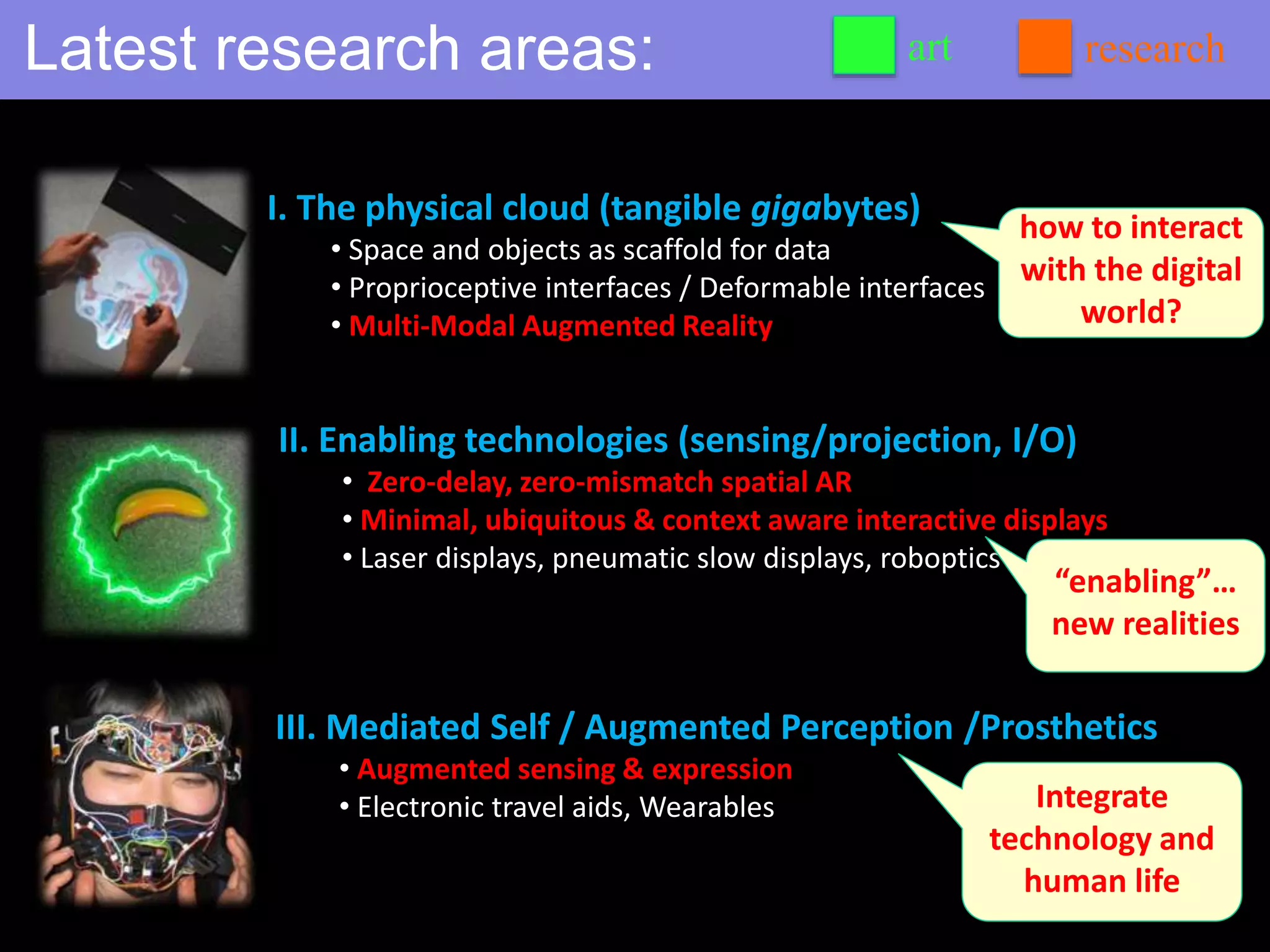

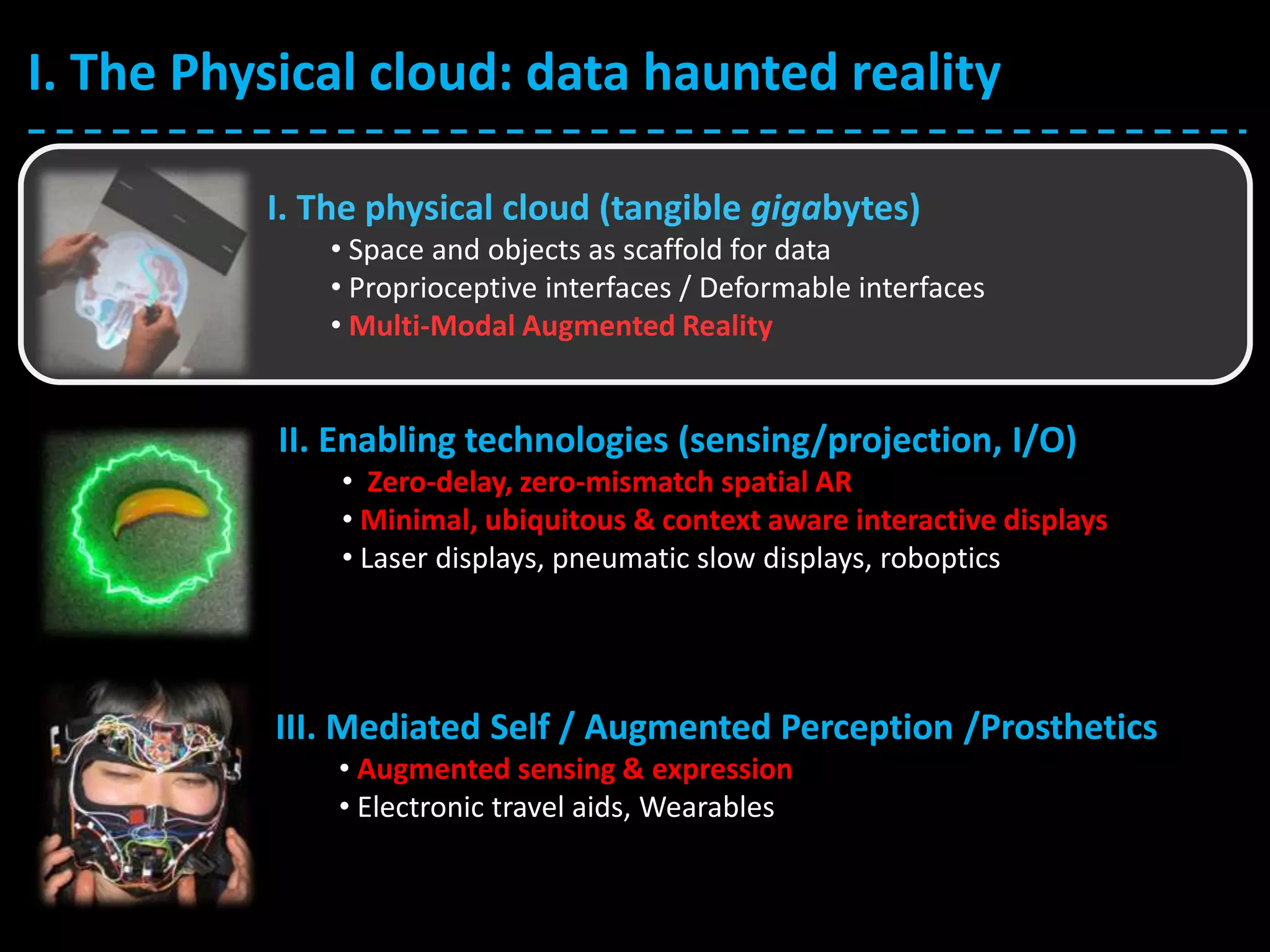

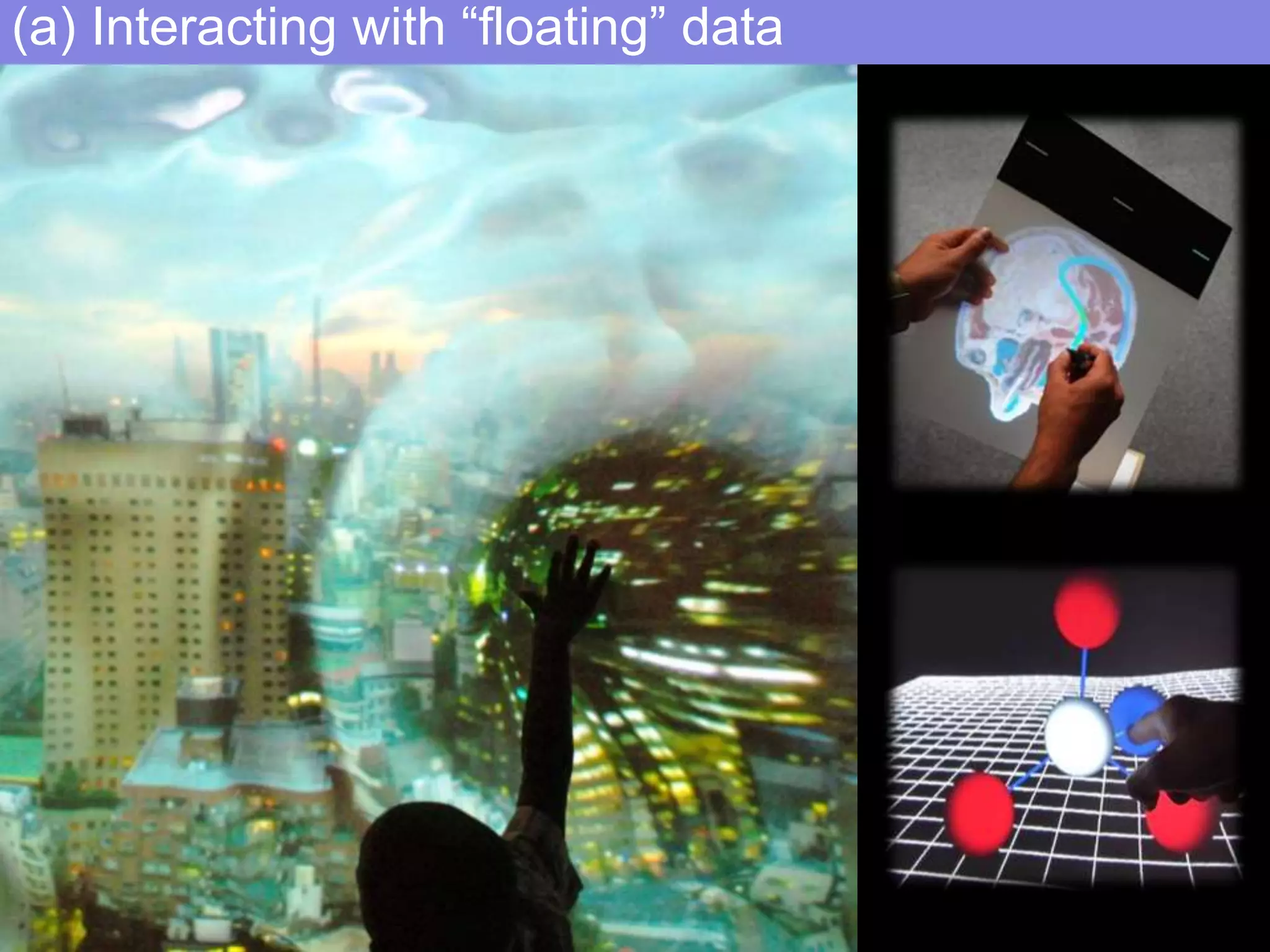

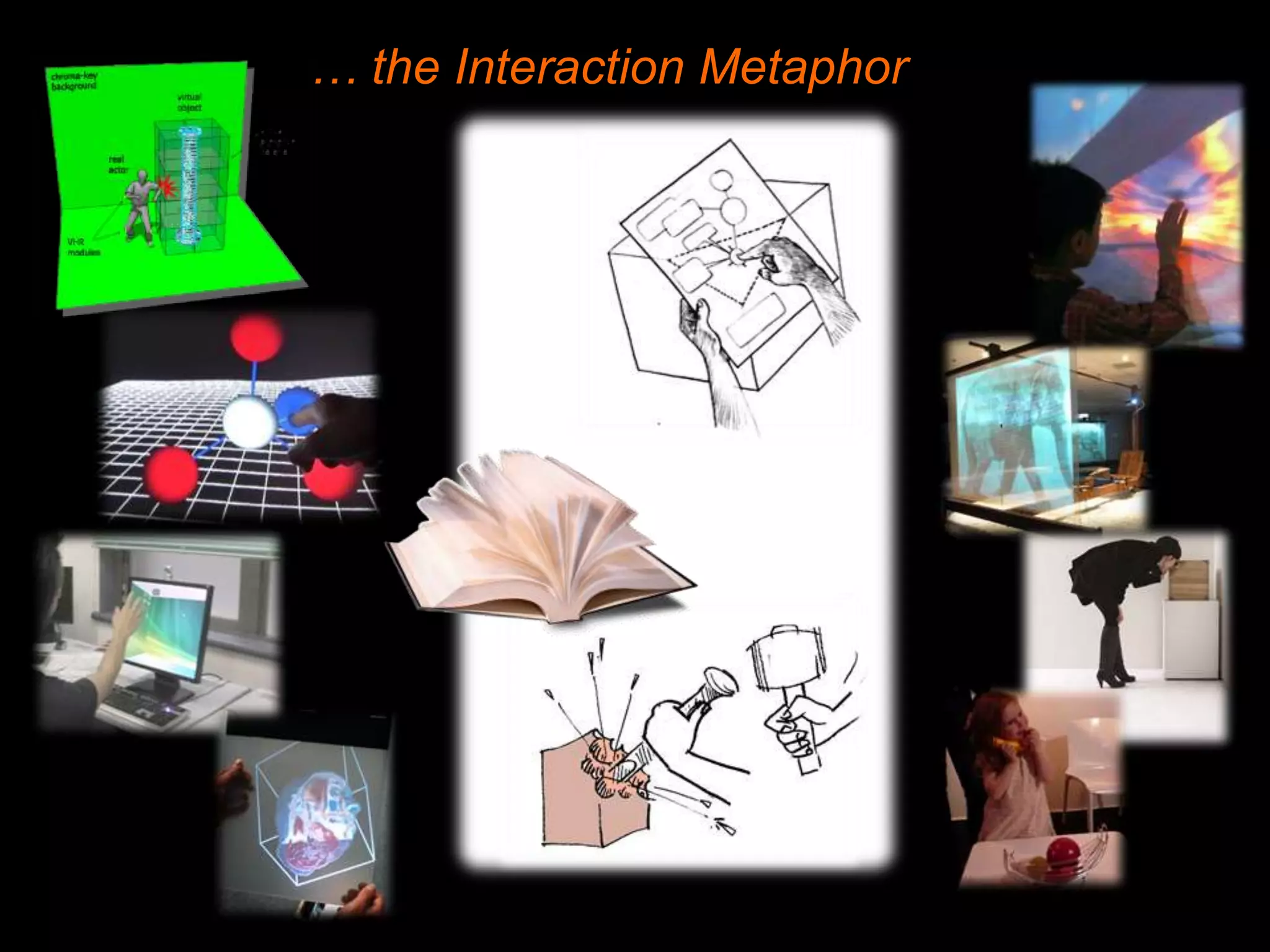

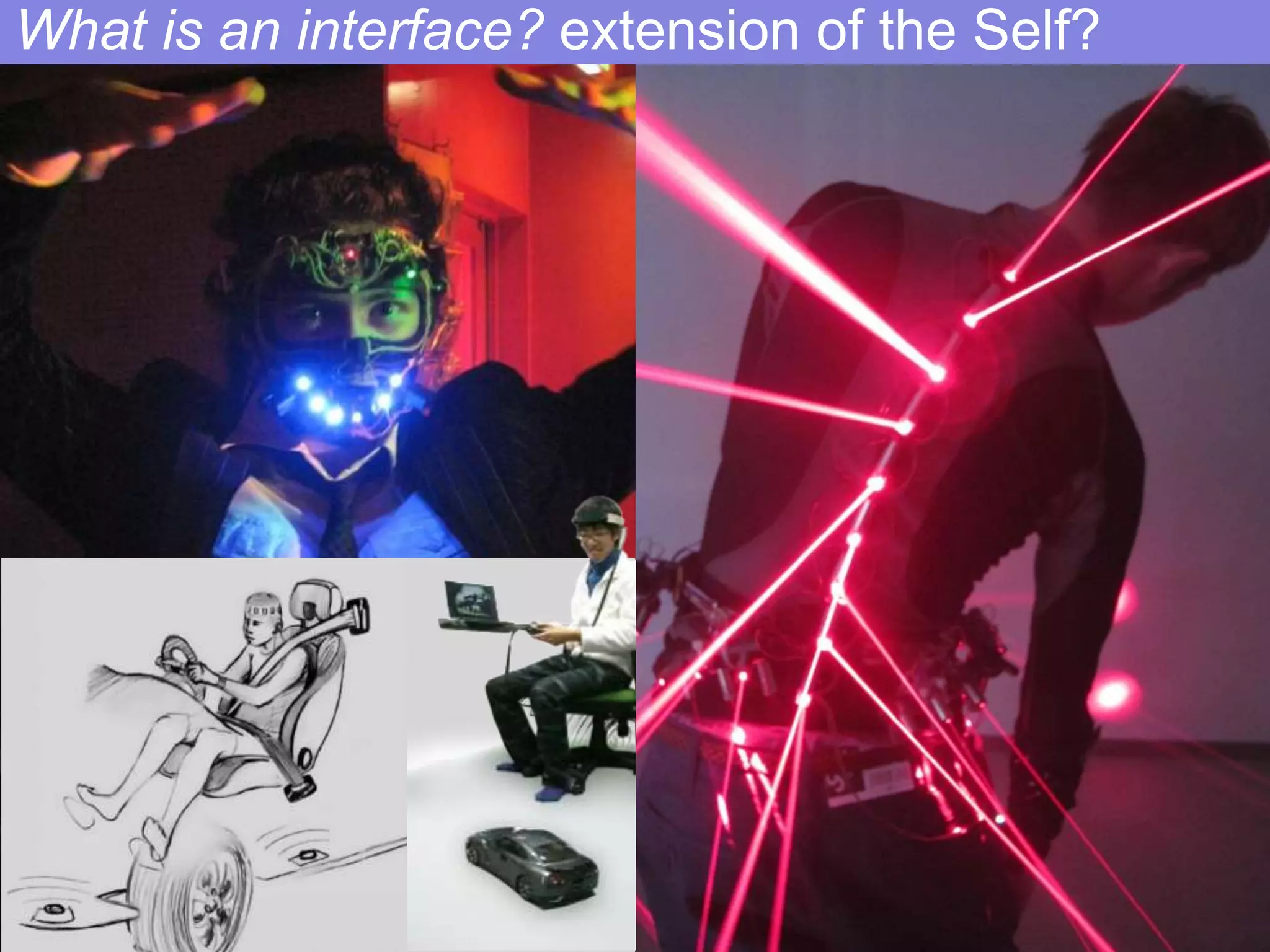

The document outlines the research directions of the Watanabe Laboratory's Meta Perception Group, led by Alvaro Cassinelli, focusing on integrating technology with human interaction through tangible data and augmented reality. It discusses enabling technologies such as zero-delay spatial AR and unique interfaces for augmented expression and perception. The goal is to create a seamless blend of the physical and digital worlds to enhance human experience and interaction.

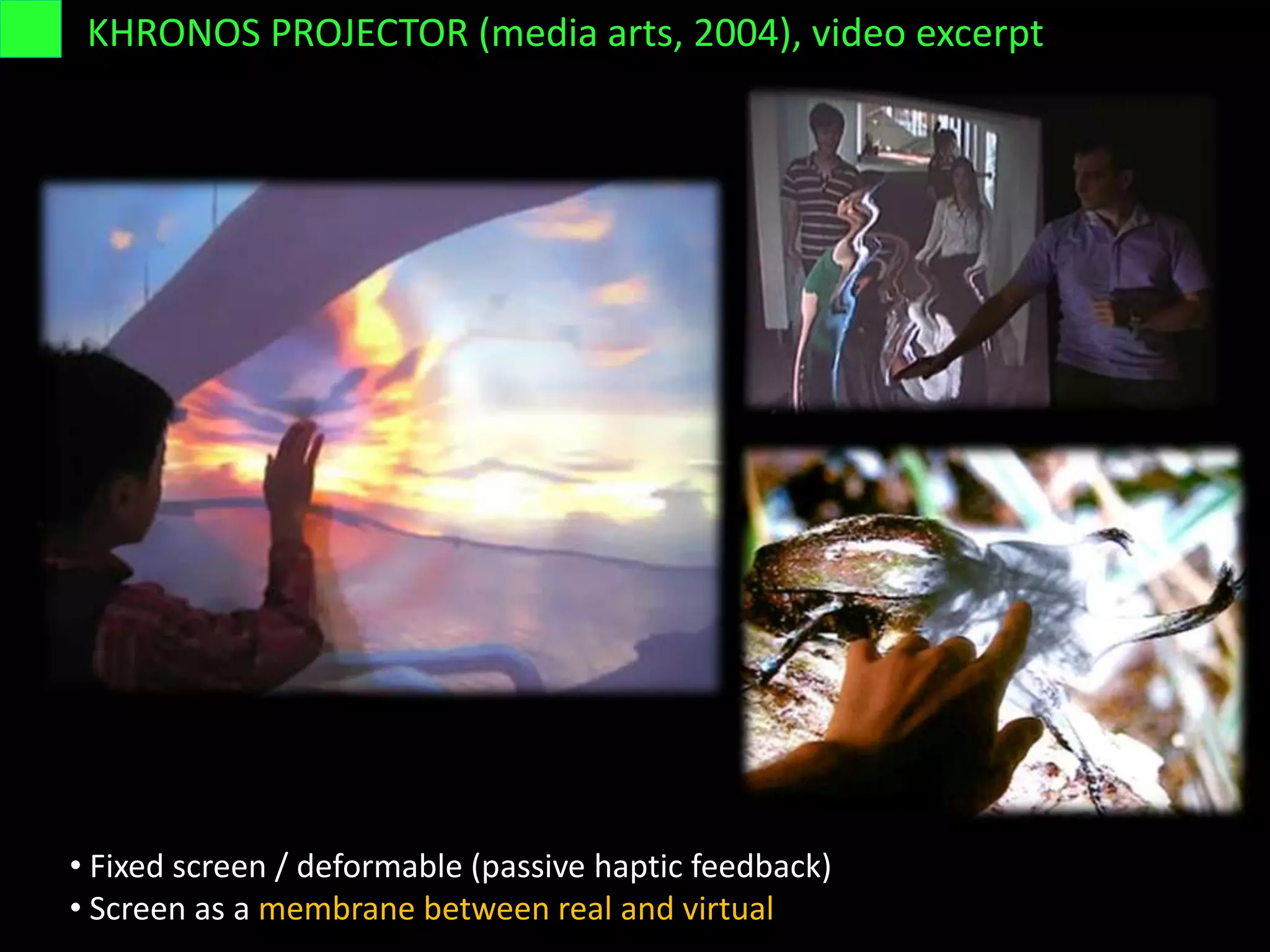

![Khronos Projector [2005]](https://image.slidesharecdn.com/seminar472014alvaronovideo-140724023551-phpapp02/75/Alvaro-Cassinelli-Meta-Perception-Group-leader-9-2048.jpg)

• Deformable screen / fixed in space

• Screen as a controller

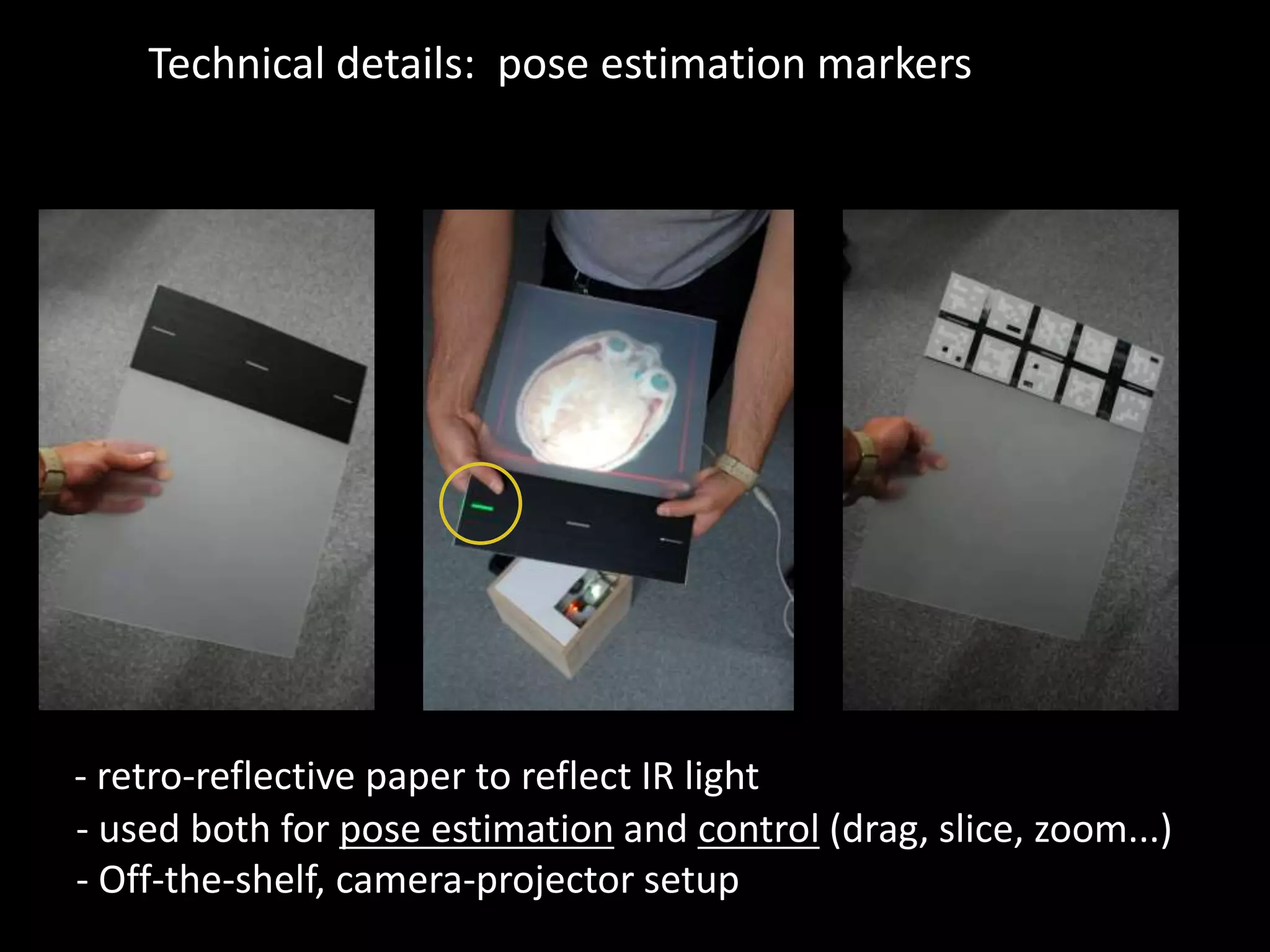

![Precise control possible [2008]](https://image.slidesharecdn.com/seminar472014alvaronovideo-140724023551-phpapp02/75/Alvaro-Cassinelli-Meta-Perception-Group-leader-11-2048.jpg)

…a physical attribute makes manipulation more precise, even if it provides only *passive force feedback*.

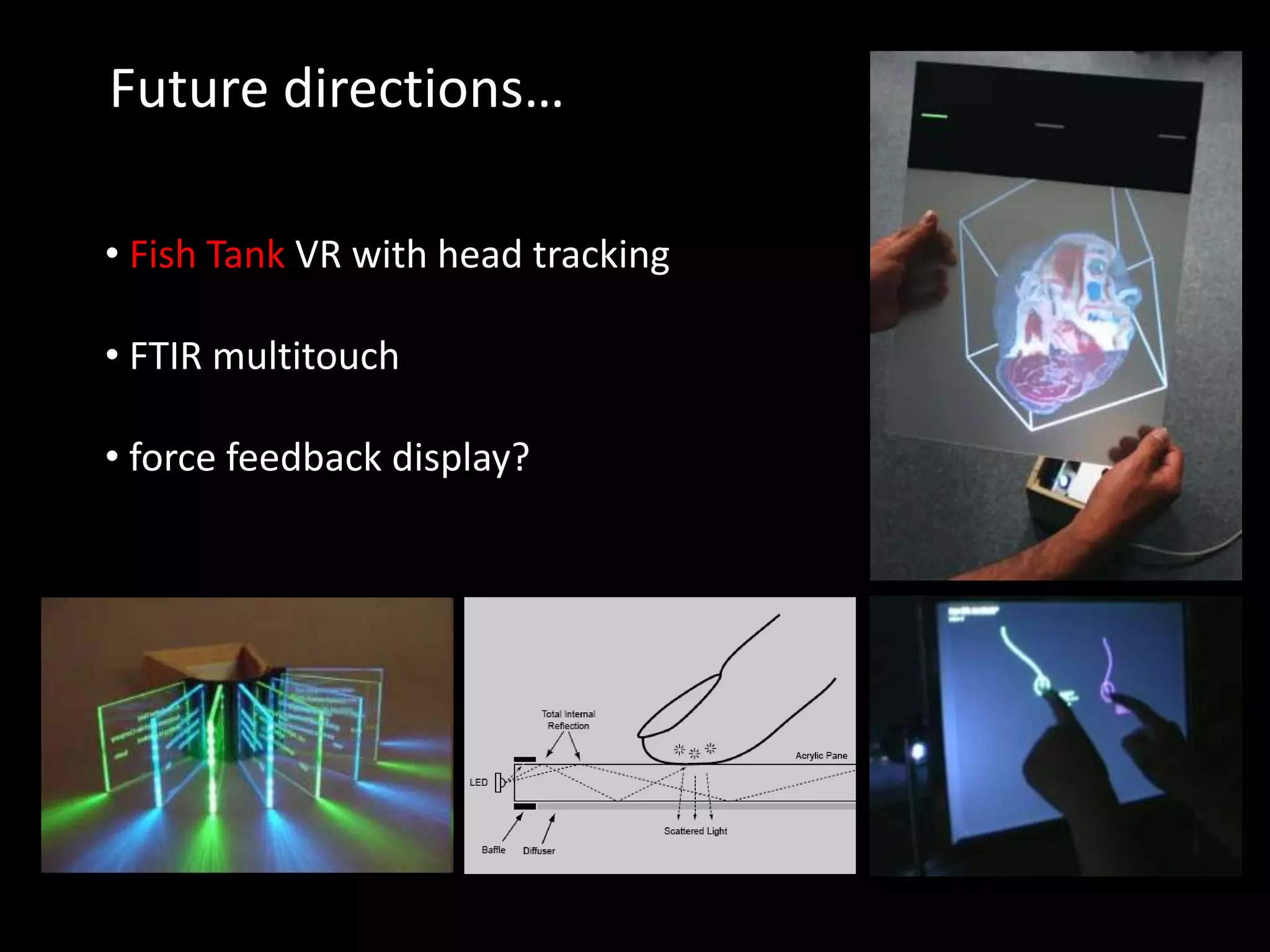

![Volumetric data visualization & interaction [2006]](https://image.slidesharecdn.com/seminar472014alvaronovideo-140724023551-phpapp02/75/Alvaro-Cassinelli-Meta-Perception-Group-leader-12-2048.jpg)

• Rigid screen / moving in space (proprioception)

• Screen as a controller

![Memory block application: the Portable Desk [2013]](https://image.slidesharecdn.com/seminar472014alvaronovideo-140724023551-phpapp02/75/Alvaro-Cassinelli-Meta-Perception-Group-leader-15-2048.jpg)

Block labels shown in the figure: “Classic Jazz”, “Rock”.

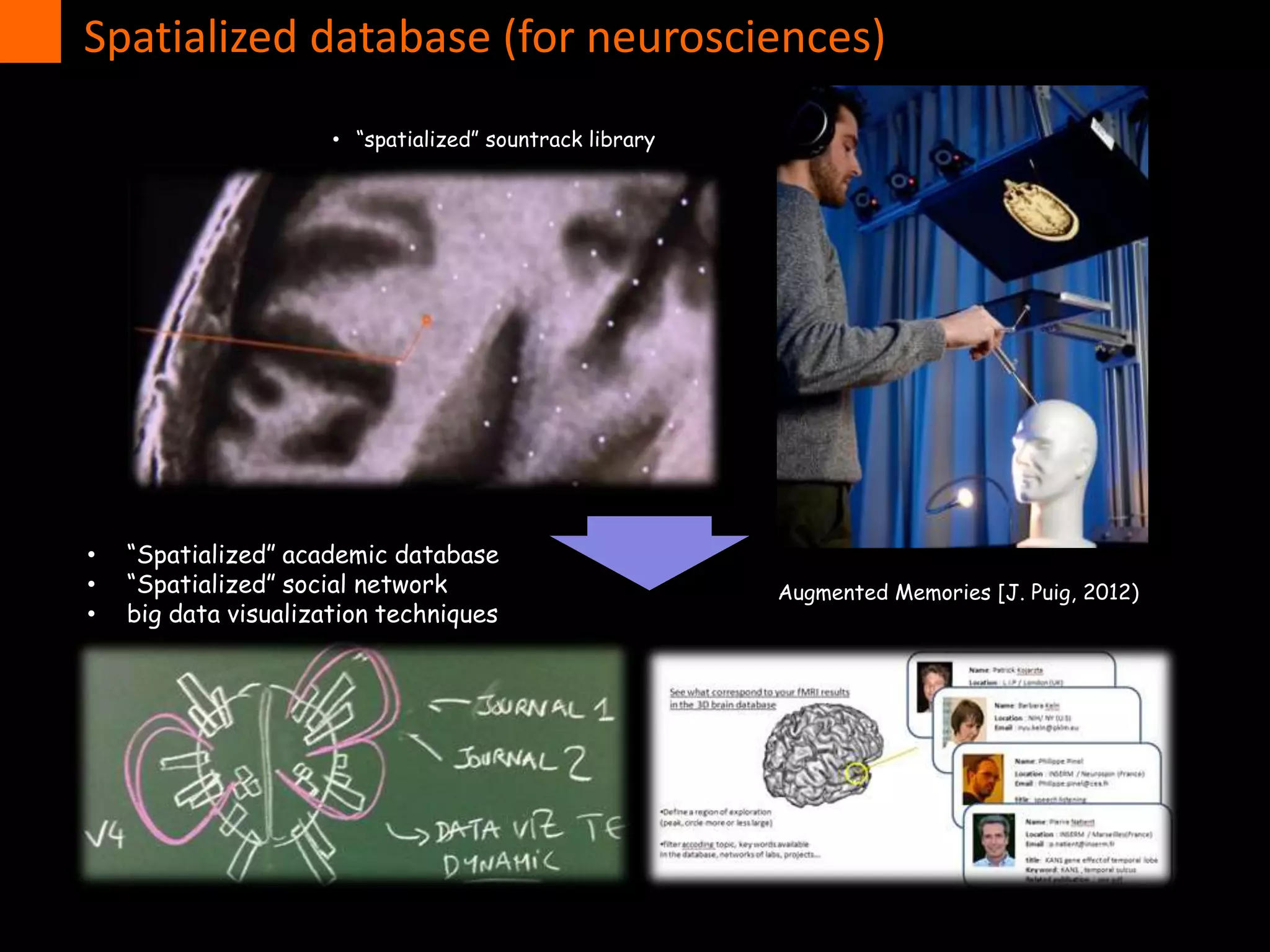

![Application to Neurosciences: twitterBrain [2013~]](https://image.slidesharecdn.com/seminar472014alvaronovideo-140724023551-phpapp02/75/Alvaro-Cassinelli-Meta-Perception-Group-leader-16-2048.jpg)

Multimodal virtual presence of data.

Extension: a portable “memory block” to store personal data (music, books, etc.).

Room or public space as a “virtual bookshelf”.

• Common virtual support to query academic publications

• Real-time communication between researchers

• Proprioception, spatial memory, procedural memory

A shared “physical” database.

![From deformable workspace to… Deformable User Interface [2014]](https://image.slidesharecdn.com/seminar472014alvaronovideo-140724023551-phpapp02/75/Alvaro-Cassinelli-Meta-Perception-Group-leader-18-2048.jpg)

• Skeuomorph

• Shape and meaning… (beyond the “soap bar smartphone”)

![Virtual Haptic Radar [2008]](https://image.slidesharecdn.com/seminar472014alvaronovideo-140724023551-phpapp02/75/Alvaro-Cassinelli-Meta-Perception-Group-leader-19-2048.jpg)

Haptic interaction with virtual 3D objects “embedded” in the real world… (“force field”)

*Importance of multimodal immersion (or partial immersion).*

![High-Speed Gesture UI for 3D Display (zSpace) [2013]](https://image.slidesharecdn.com/seminar472014alvaronovideo-140724023551-phpapp02/75/Alvaro-Cassinelli-Meta-Perception-Group-leader-20-2048.jpg)

*Importance of instant feedback to feel virtual objects as “real”.*

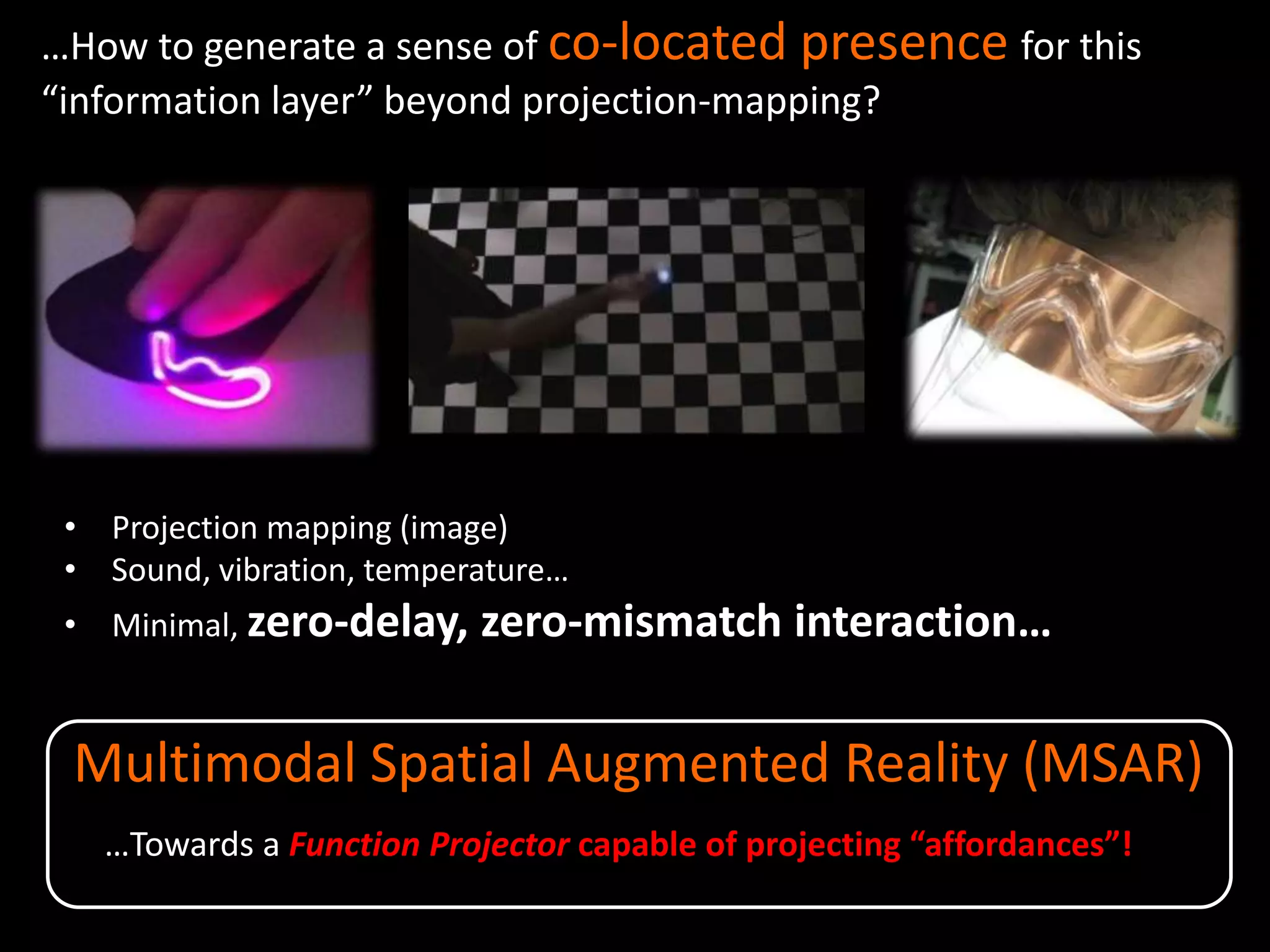

![Invoked Computing (Function Projector) [2011]](https://image.slidesharecdn.com/seminar472014alvaronovideo-140724023551-phpapp02/75/Alvaro-Cassinelli-Meta-Perception-Group-leader-23-2048.jpg)

> “Augmented Reality as the graphic front-end of Ubiquity. And Ubiquity as the killer-app of Sustainability.”
>
> Bruce Sterling (Wired blog on “Invoked Computing”)

![Visual and Tactile + high speed interaction [2012]](https://image.slidesharecdn.com/seminar472014alvaronovideo-140724023551-phpapp02/75/Alvaro-Cassinelli-Meta-Perception-Group-leader-25-2048.jpg)

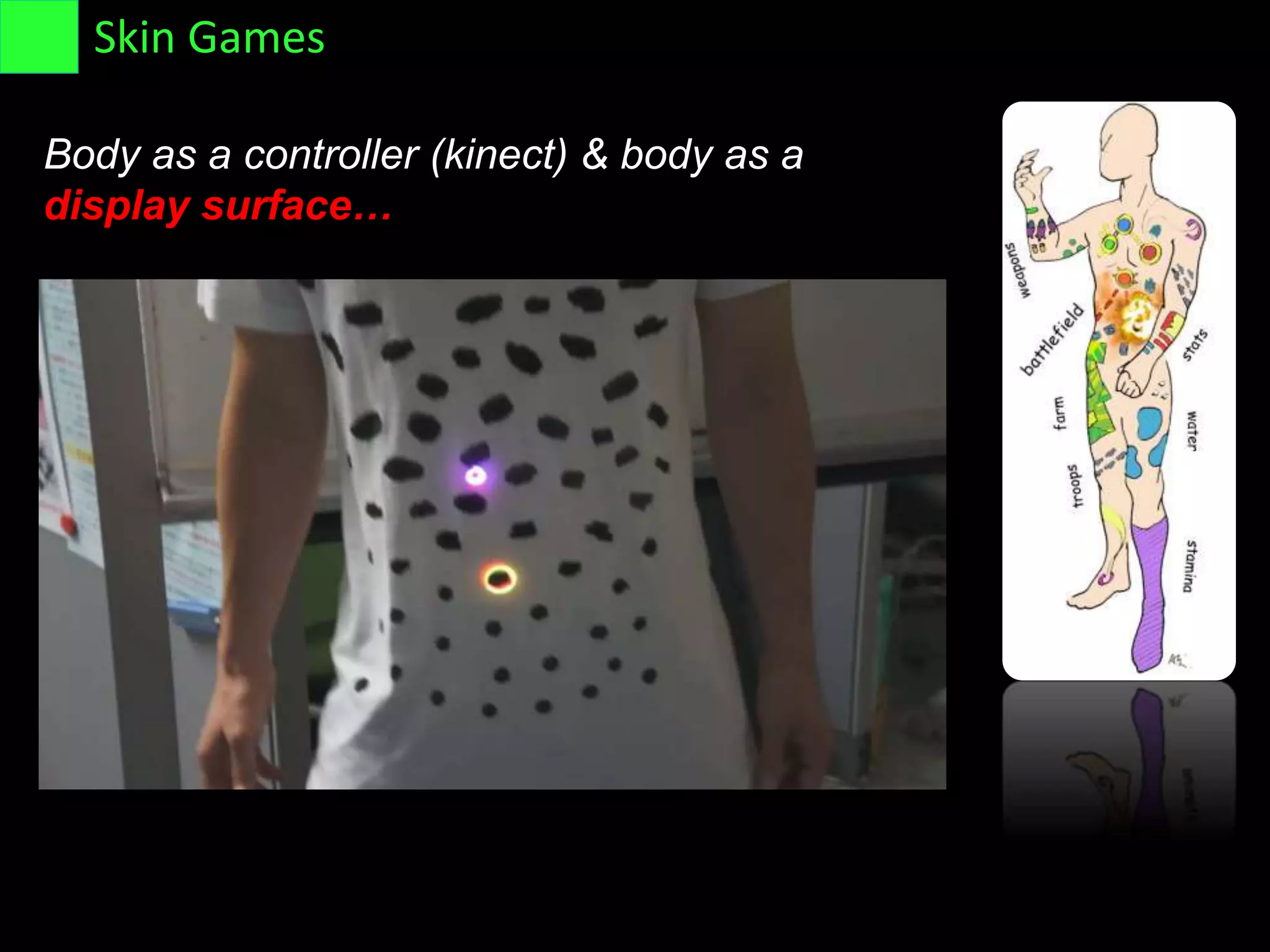

![Enabling Technology: Smart Laser Sensing [2003~]](https://image.slidesharecdn.com/seminar472014alvaronovideo-140724023551-phpapp02/75/Alvaro-Cassinelli-Meta-Perception-Group-leader-30-2048.jpg)

• No delay, no misalignment

• Projection on mobile, deformable surfaces

• Real-time sensing

Vision-based sensing (OmniTouch, Microsoft Research) vs. smart sensing (Skin Games).

What? How?

• Smart sensing

• Laser Sensing Display

![Markerless laser tracking (I/O interface) [2003]](https://image.slidesharecdn.com/seminar472014alvaronovideo-140724023551-phpapp02/75/Alvaro-Cassinelli-Meta-Perception-Group-leader-32-2048.jpg)

Camera-less active tracking principle.
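The slide names the camera-less active tracking principle without detailing it. As a rough illustration only, here is a hypothetical simulation, not the lab's implementation. It assumes the laser beam sweeps a small circle (a “saccade”) around its current estimate while a single photodetector reports whether each sample point lands on the bright target, and that the estimate is then shifted toward the lit part of the circle. The names `disk_target`, `track_step`, `scan_radius`, `n_samples` and `gain` are illustrative assumptions.

```python
import math


def disk_target(x, y, cx=0.0, cy=0.0, radius=1.0):
    """Hypothetical reflectance model: a bright disk (e.g. a fingertip) on a dark background."""
    return 1.0 if (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2 else 0.0


def track_step(px, py, target=disk_target, scan_radius=0.8, n_samples=32, gain=1.0):
    """One circular scan ("saccade") around the current estimate (px, py).

    Each sample is a point on a circle of radius scan_radius; the photodetector
    reading is simulated by target(). Lit samples pull the estimate towards them:
    the correction is the mean offset of the lit samples, scaled by gain.
    """
    sum_dx = sum_dy = 0.0
    lit = 0
    for k in range(n_samples):
        angle = 2.0 * math.pi * k / n_samples
        dx = scan_radius * math.cos(angle)
        dy = scan_radius * math.sin(angle)
        if target(px + dx, py + dy) > 0.5:
            sum_dx += dx
            sum_dy += dy
            lit += 1
    # No lit samples: target lost. All samples lit: scan circle sits inside the target.
    if lit == 0 or lit == n_samples:
        return px, py
    return px + gain * sum_dx / lit, py + gain * sum_dy / lit


if __name__ == "__main__":
    x, y = 0.5, 0.3  # initial estimate, offset from the target centre at (0, 0)
    for step in range(5):
        x, y = track_step(x, y)
        print(f"step {step}: ({x:.3f}, {y:.3f})")
```

In this toy setup the estimate settles near the target centre after a scan or two. A real tracker would repeat the scan continuously so that the estimate follows a moving finger, with no camera and no image processing involved.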

![scoreLight: a human-sized pick-up head [2009]](https://image.slidesharecdn.com/seminar472014alvaronovideo-140724023551-phpapp02/75/Alvaro-Cassinelli-Meta-Perception-Group-leader-34-2048.jpg)

(In collaboration with Daito Manabe.)

• Artificial synesthesia

• Real-time interaction

• New interfaces for musical expression

![Laser GUI: minimal interface [2013~]](https://image.slidesharecdn.com/seminar472014alvaronovideo-140724023551-phpapp02/75/Alvaro-Cassinelli-Meta-Perception-Group-leader-36-2048.jpg)

Camera-less interactive display (no calibration needed).

![Laserinne [2009]](https://image.slidesharecdn.com/seminar472014alvaronovideo-140724023551-phpapp02/75/Alvaro-Cassinelli-Meta-Perception-Group-leader-39-2048.jpg)

…“minimal” displays are by no means small displays.

![Saccade Display + laser sensing [2012~]](https://image.slidesharecdn.com/seminar472014alvaronovideo-140724023551-phpapp02/75/Alvaro-Cassinelli-Meta-Perception-Group-leader-40-2048.jpg)

Real-world special effects… a real-world “shader”?

Why laser?

• Stronger persistence-of-vision effect

• Very long distances (on a car, etc.)

An example of a “context-aware display” [+DIC].

![Haptic Radar for extended spatial awareness [2006]](https://image.slidesharecdn.com/seminar472014alvaronovideo-140724023551-phpapp02/75/Alvaro-Cassinelli-Meta-Perception-Group-leader-44-2048.jpg)

• Optical “antennae” for humans, not devices

• A new sensorial modality (this is not TVSS)

• Extension of the body, 360 degrees
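The slide lists what the Haptic Radar is for rather than how it works. A minimal sketch follows, assuming each worn module pairs a short-range optical distance sensor with a vibration motor, the modules are spread evenly around the head for 360-degree coverage, and vibration grows linearly as an obstacle gets closer. The module count, the 0.8 m `max_range_m` value, the linear mapping, and the function names are all hypothetical.

```python
def module_bearings(n_modules):
    """Evenly spaced bearings (degrees) for modules worn around the head (360-degree coverage)."""
    return [360.0 * k / n_modules for k in range(n_modules)]


def vibration_levels(distances_m, max_range_m=0.8):
    """Map each module's range reading to a vibration intensity in [0, 1].

    Closer obstacles give stronger vibration; readings of None (no echo) or at or
    beyond max_range_m give no vibration. The linear mapping and the 0.8 m range
    are assumptions made for illustration.
    """
    levels = []
    for d in distances_m:
        if d is None or d >= max_range_m:
            levels.append(0.0)
        else:
            levels.append(1.0 - max(d, 0.0) / max_range_m)
    return levels


if __name__ == "__main__":
    bearings = module_bearings(8)
    # Hypothetical range readings in metres, one per module (None = nothing in range).
    readings = [None, 0.6, 0.2, None, None, None, 0.75, None]
    for bearing, level in zip(bearings, vibration_levels(readings)):
        print(f"{bearing:5.1f} deg -> vibration {level:.2f}")
```

Keeping each module's mapping local (its own sensor drives its own motor) means the wearer reads obstacle direction directly from where the vibration is felt, which fits the “extension of the body” framing on the slide.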

![Laser Aura: externalizing emotions [2011]](https://image.slidesharecdn.com/seminar472014alvaronovideo-140724023551-phpapp02/75/Alvaro-Cassinelli-Meta-Perception-Group-leader-48-2048.jpg)

An example of a “minimal display” inspired by manga graphical conventions…