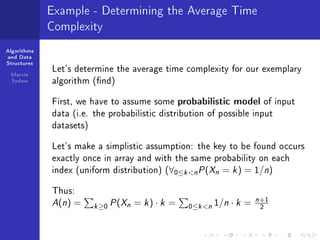

The document discusses algorithms and their complexity. It provides an example of a search algorithm and analyzes its time complexity. The dominating operations are comparisons, and the data size is the length of the array. The algorithm's worst-case time complexity is O(n), as it may require searching through the entire array of length n. The average time complexity depends on the probability distribution of the input data.
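To make the dependence on the input distribution concrete, here is a short worked formula. It is a sketch under stated assumptions, not something taken from the slides: $p_i$ (the probability that the key is first found at 0-based index $i$) and $q$ (the probability that the key is absent) are notation introduced here for illustration. Finding the key at index $i$ costs $i+1$ comparisons, and an absent key costs $n$:

$$
A(n) \;=\; \sum_{i=0}^{n-1} (i+1)\,p_i \;+\; n\,q, \qquad q = 1 - \sum_{i=0}^{n-1} p_i .
$$

If we additionally assume the key is always present and equally likely to be at every position ($p_i = 1/n$, $q = 0$), this reduces to

$$
A(n) \;=\; \frac{1}{n}\sum_{i=0}^{n-1}(i+1) \;=\; \frac{n+1}{2}.
$$

A different distribution (for example, a key that is usually absent) gives a different average, which is why the average case cannot be stated without such an assumption.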

![Algorithms and Data Structures, Marcin Sydow

Example - the Search Problem

Problem of searching a key in an array

find(arr, len, key)

Specification:

input: arr - array of integers, len - its length, key - integer to be found

output: integer 0 ≤ i < len being the index in arr under which the key is stored (is it a complete/clear specification?), or the value of -1 when there is no specified key in (the first len positions of) the array

code:

find(arr, len, key){
  i = 0
  while(i < len){
    if(arr[i] == key)
      return i
    i++
  }
  return -1
}

What does the amount of work of this algorithm depend on?](https://image.slidesharecdn.com/algorithemcomplexityindatasructure-130512045008-phpapp02/85/Algorithem-complexity-in-data-sructure-6-320.jpg)
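The pseudocode on this slide translates almost line for line into a runnable program. The following is a minimal Python sketch, not the author's implementation; the parameter name `length` (used instead of `len`, which is a Python built-in) and the type hints are choices made here. It also makes one possible answer to the slide's "is it a complete/clear specification?" question visible: when the key occurs more than once, this code returns the first matching index, which the specification itself does not state.

```python
from typing import Sequence


def find(arr: Sequence[int], length: int, key: int) -> int:
    """Return the first index i < length with arr[i] == key, or -1 if there is none."""
    i = 0
    while i < length:
        if arr[i] == key:
            return i  # first occurrence wins when the key is repeated
        i += 1
    return -1  # key not present in the first `length` positions


if __name__ == "__main__":
    data = [7, 3, 9, 3]
    print(find(data, len(data), 3))  # key occurs twice
    print(find(data, len(data), 5))  # key is absent
    print(find(data, 2, 9))          # key lies beyond the searched prefix
```

Passing `length` separately, as the slide does, means only a prefix of the array may be searched, so a key stored beyond that prefix is reported as missing.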

![Example - determining dominating operations

What can be the dominating operation set in the following algorithm?

find(arr, len, key){
  i = 0
  while(i < len){
    if(arr[i] == key)
      return i
    i++
  }
  return -1
}

assignment i = 0 ? no

comparison i < len ? yes

comparison arr[i] == key ? yes

both the above? yes

return statement return i ? no

index increment i++ ? yes](https://image.slidesharecdn.com/algorithemcomplexityindatasructure-130512045008-phpapp02/85/Algorithem-complexity-in-data-sructure-16-320.jpg)
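The yes/no answers on this slide can be checked by counting how often each candidate operation actually runs. The sketch below is an illustration only: `find_counting` and the counter labels are hypothetical names introduced here, and the counting statements are instrumentation added around the slide's algorithm.

```python
from collections import Counter
from typing import Sequence, Tuple


def find_counting(arr: Sequence[int], length: int, key: int) -> Tuple[int, Counter]:
    """Linear search that also tallies how often each kind of operation executes."""
    ops: Counter = Counter()
    i = 0
    ops["assignment i = 0"] += 1
    while True:
        ops["comparison i < len"] += 1
        if not i < length:
            break
        ops["comparison arr[i] == key"] += 1
        if arr[i] == key:
            ops["return i"] += 1
            return i, ops
        i += 1
        ops["increment i++"] += 1
    return -1, ops


if __name__ == "__main__":
    # Search for an absent key in arrays of growing length and compare the tallies.
    for n in (5, 50, 500):
        _, ops = find_counting(list(range(n)), n, -1)
        print(n, dict(ops))
```

The assignment and the `return i` statement execute at most once regardless of the array length, so they cannot serve as dominating operations. The two comparisons and the increment each execute a number of times that grows in step with the total work, which matches the "yes" answers above.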

![Example, cont. - determining the data size

What is the data size in the following algorithm?

find(arr, len, key){
  i = 0
  while(i < len){
    if(arr[i] == key)
      return i
    i++
  }
  return -1
}

Data size: length of array arr

Having determined the dominating operation and data size, we can determine the time complexity of the algorithm](https://image.slidesharecdn.com/algorithemcomplexityindatasructure-130512045008-phpapp02/85/Algorithem-complexity-in-data-sructure-19-320.jpg)
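One way to see why the array length, rather than the stored values, is the right notion of data size here is to count comparisons for inputs that differ in only one of the two. This is a small sketch under the assumption that the dominating operation is the comparison `arr[i] == key`; the helper name `count_comparisons` is made up for this illustration.

```python
from typing import Sequence


def count_comparisons(arr: Sequence[int], key: int) -> int:
    """Count the arr[i] == key comparisons a linear search performs on arr."""
    count = 0
    for value in arr:
        count += 1
        if value == key:
            break
    return count


if __name__ == "__main__":
    small_values = [1, 2, 3]
    large_values = [10**9, 2 * 10**9, 3 * 10**9]
    longer_array = [1, 2, 3, 4, 5, 6]
    # Same length, very different magnitudes: the work for an absent key is identical.
    print(count_comparisons(small_values, 0), count_comparisons(large_values, 0))
    # Doubling the length doubles the work for an absent key.
    print(count_comparisons(longer_array, 0))
```

Changing the magnitude of the elements leaves the count untouched, while changing the number of elements changes it directly.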

![Example - time complexity of algorithm

find(arr, len, key){
  i = 0
  while(i < len){
    if(arr[i] == key)
      return i
    i++
  }
  return -1
}

Assume:

dominating operation: comparison arr[i] == key

data size: the length of array (denote by n)

Thus, the number of dominating operations executed by this algorithm ranges:

from 1 (the key was found under the first index)

to n (the key is absent or under the last index)

There is no single function which could express the dependence of the number of executed dominating operations on the data size for this algorithm.](https://image.slidesharecdn.com/algorithemcomplexityindatasructure-130512045008-phpapp02/85/Algorithem-complexity-in-data-sructure-21-320.jpg)
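The range from 1 to n stated on this slide can be reproduced by trying every possible key position for a fixed array length. The following is a rough sketch, assuming an array of n distinct integers; `count_key_comparisons` and `comparison_range` are hypothetical helper names, not part of the slides.

```python
from typing import List, Tuple


def count_key_comparisons(arr: List[int], key: int) -> int:
    """Number of arr[i] == key comparisons made by linear search."""
    count = 0
    for value in arr:
        count += 1
        if value == key:
            break
    return count


def comparison_range(n: int) -> Tuple[int, int]:
    """Smallest and largest comparison counts over all inputs of size n (n >= 1)."""
    arr = list(range(n))  # n distinct values, so each key has exactly one position
    counts = [count_key_comparisons(arr, key) for key in arr]  # key at each index
    counts.append(count_key_comparisons(arr, -1))  # key absent
    return min(counts), max(counts)


if __name__ == "__main__":
    for n in (1, 4, 16):
        print(n, comparison_range(n))
```

Because inputs of the same size n yield different counts, the cost is usually summarized by separate functions of n, such as the worst case (n comparisons) and the best case (1 comparison), rather than by a single one.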