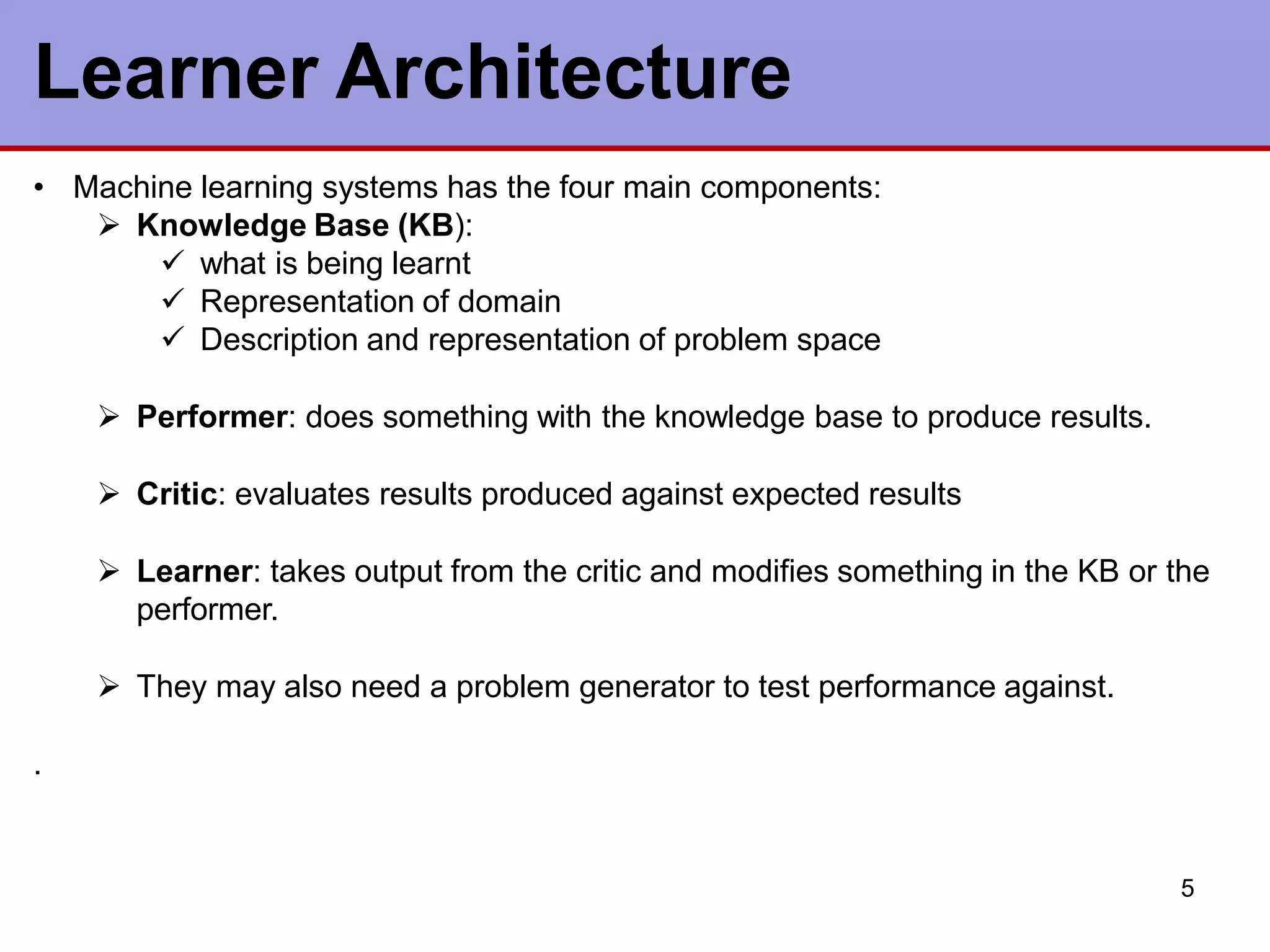

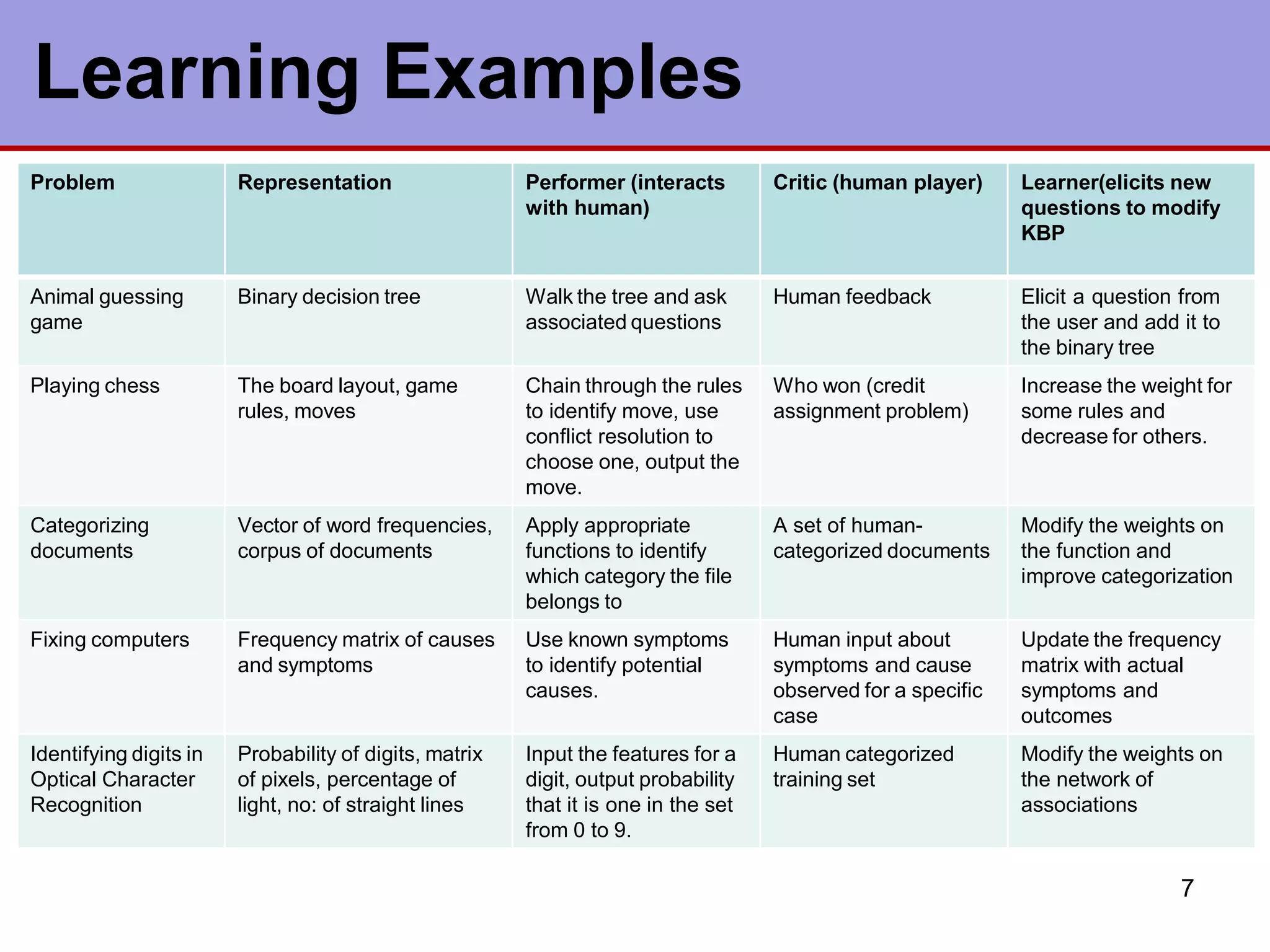

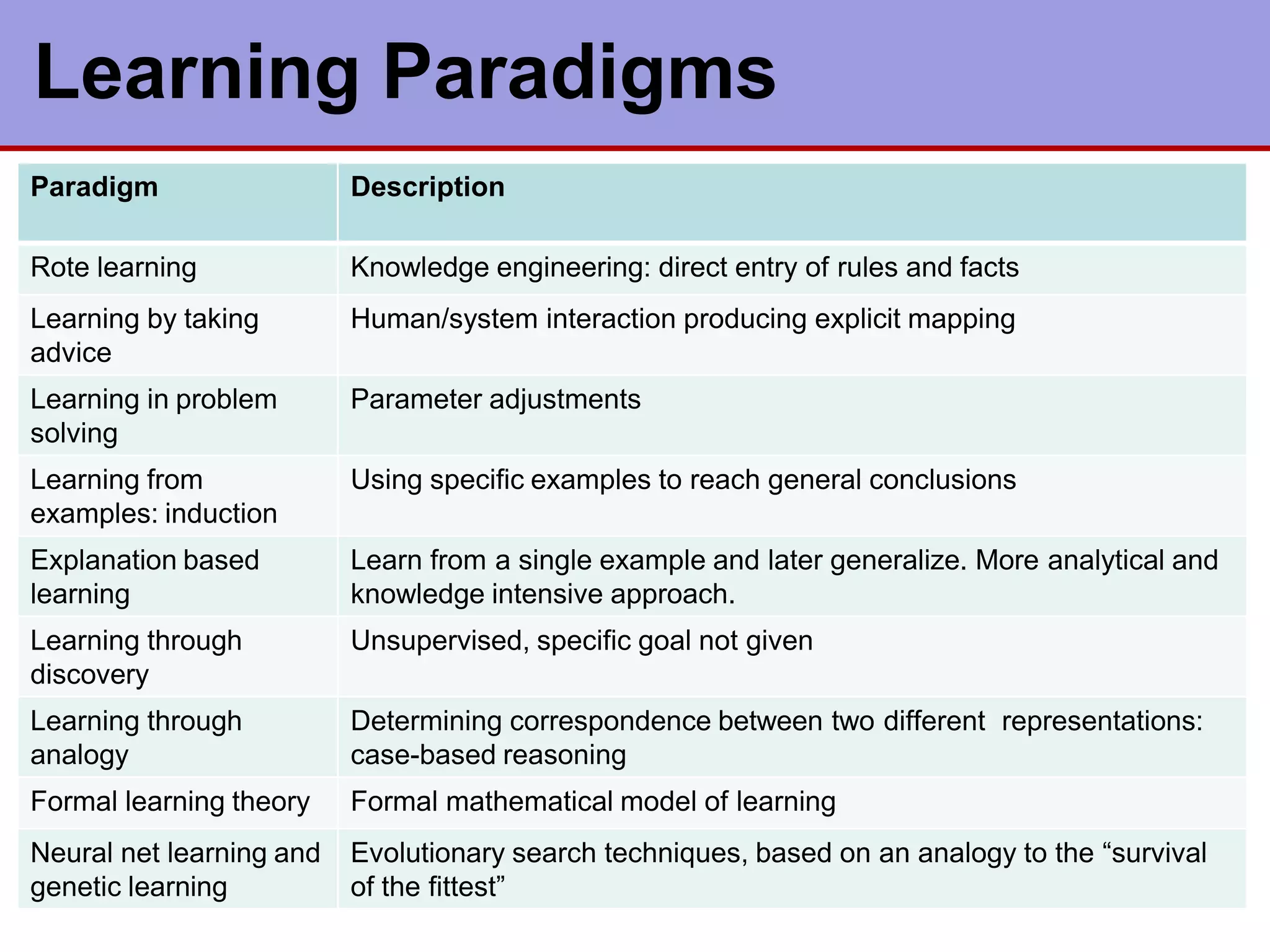

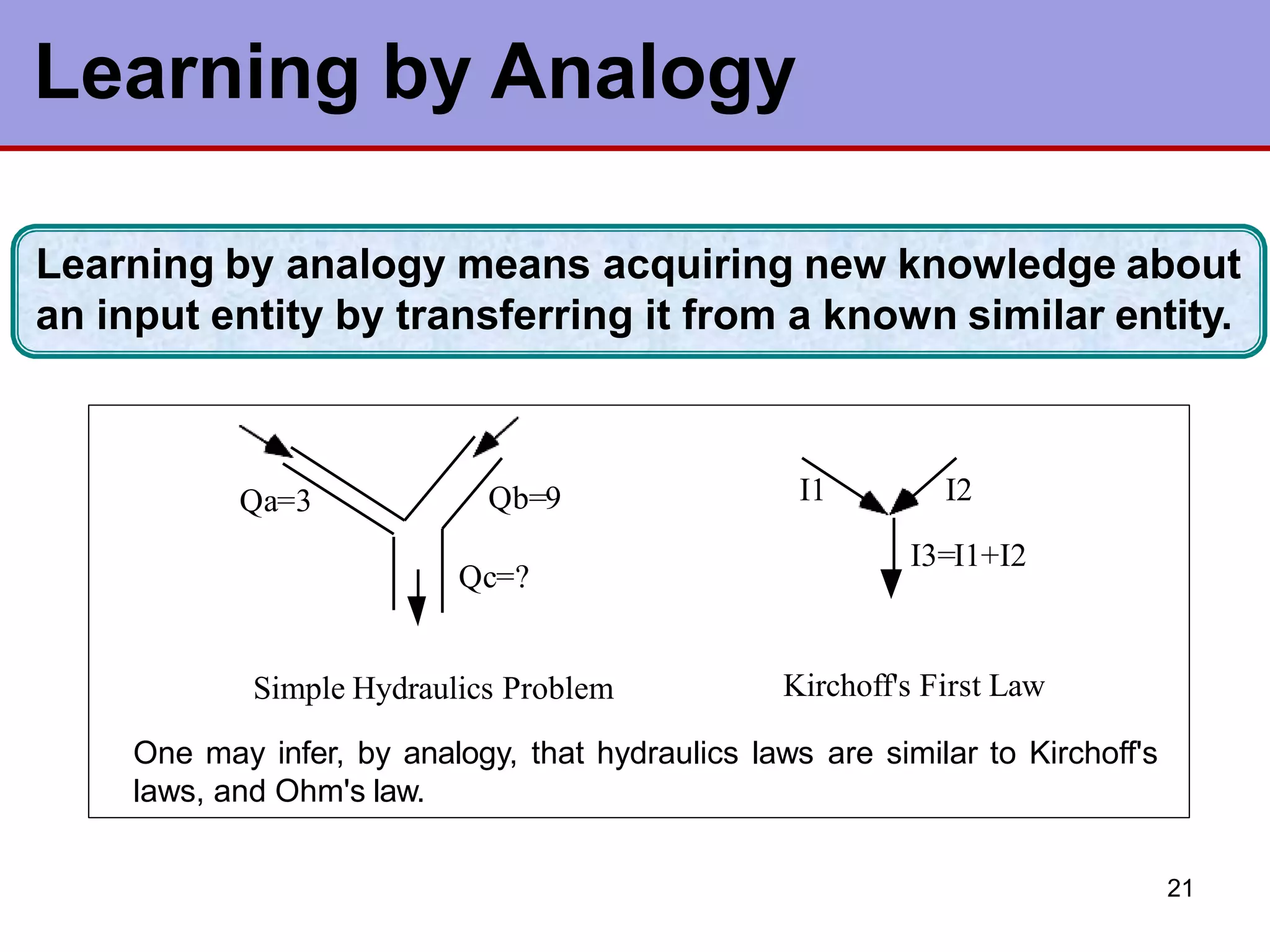

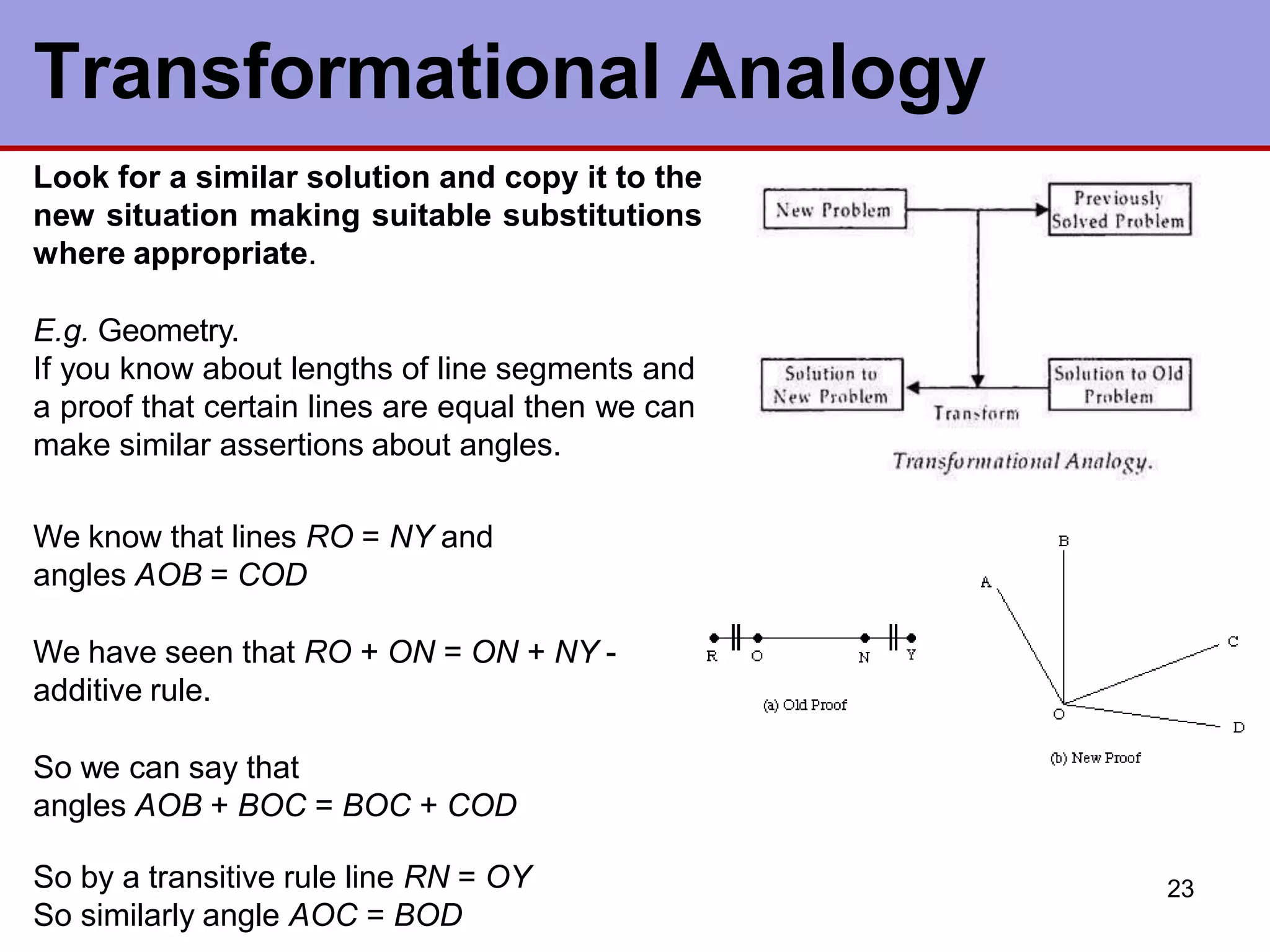

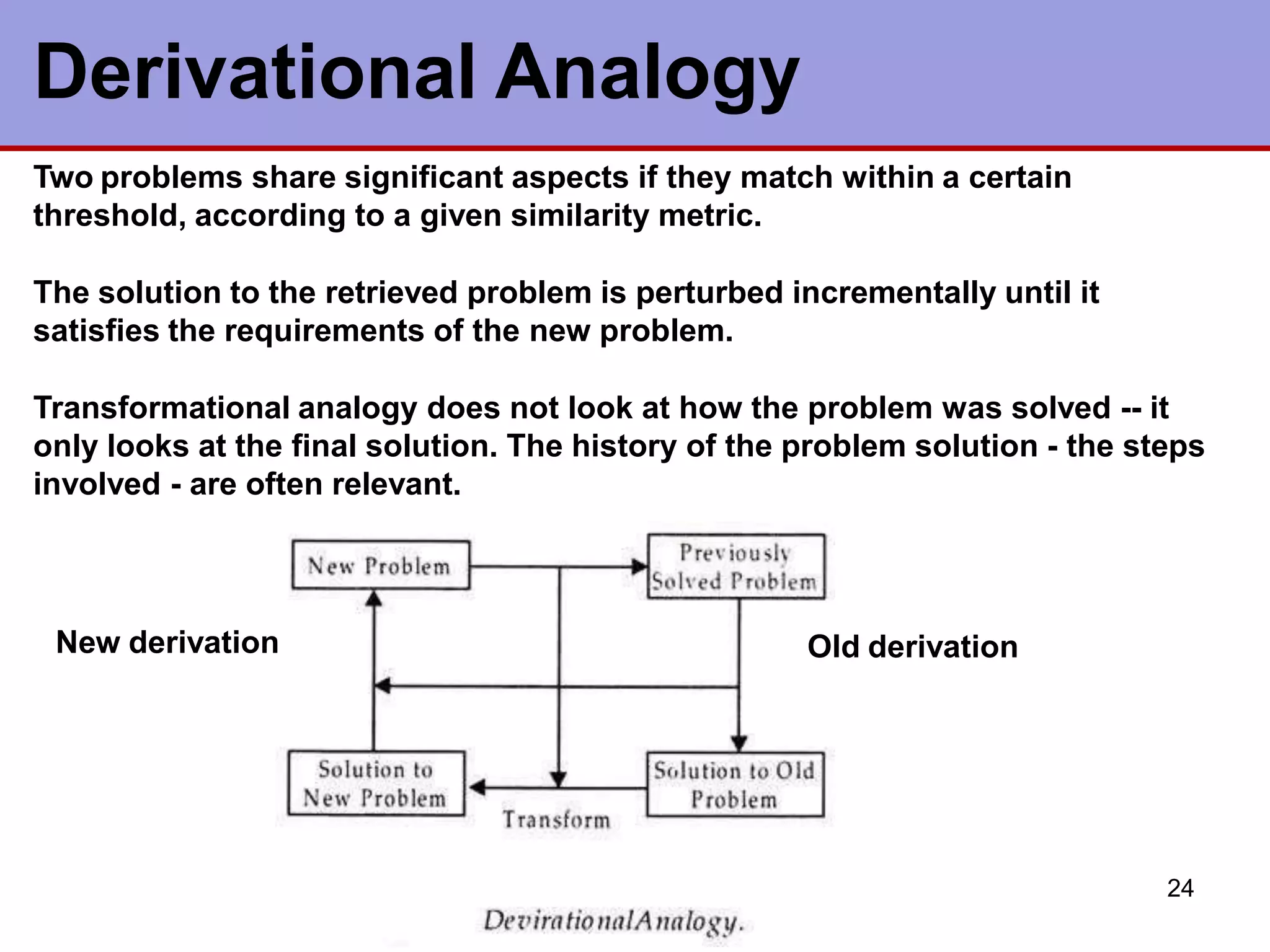

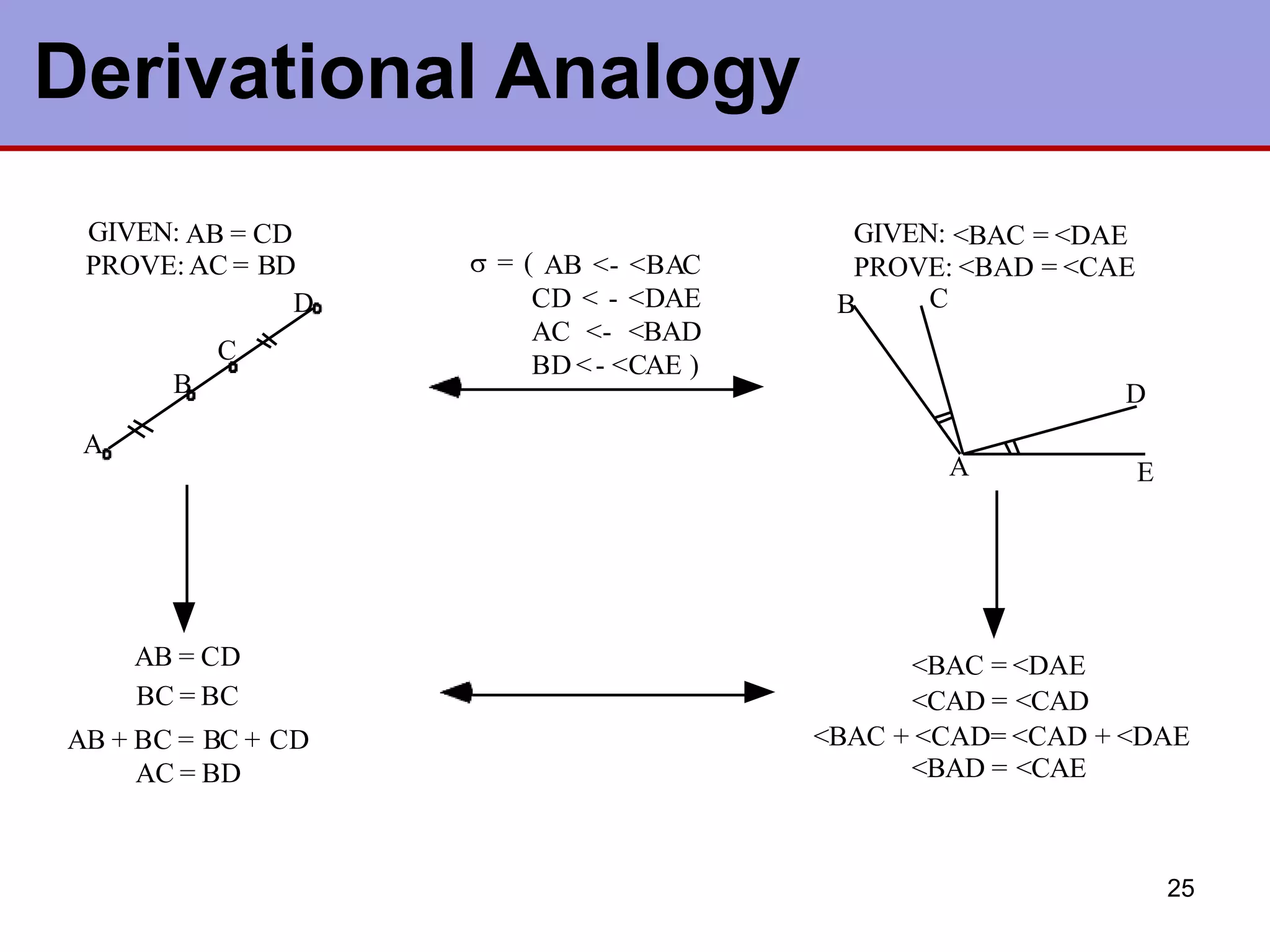

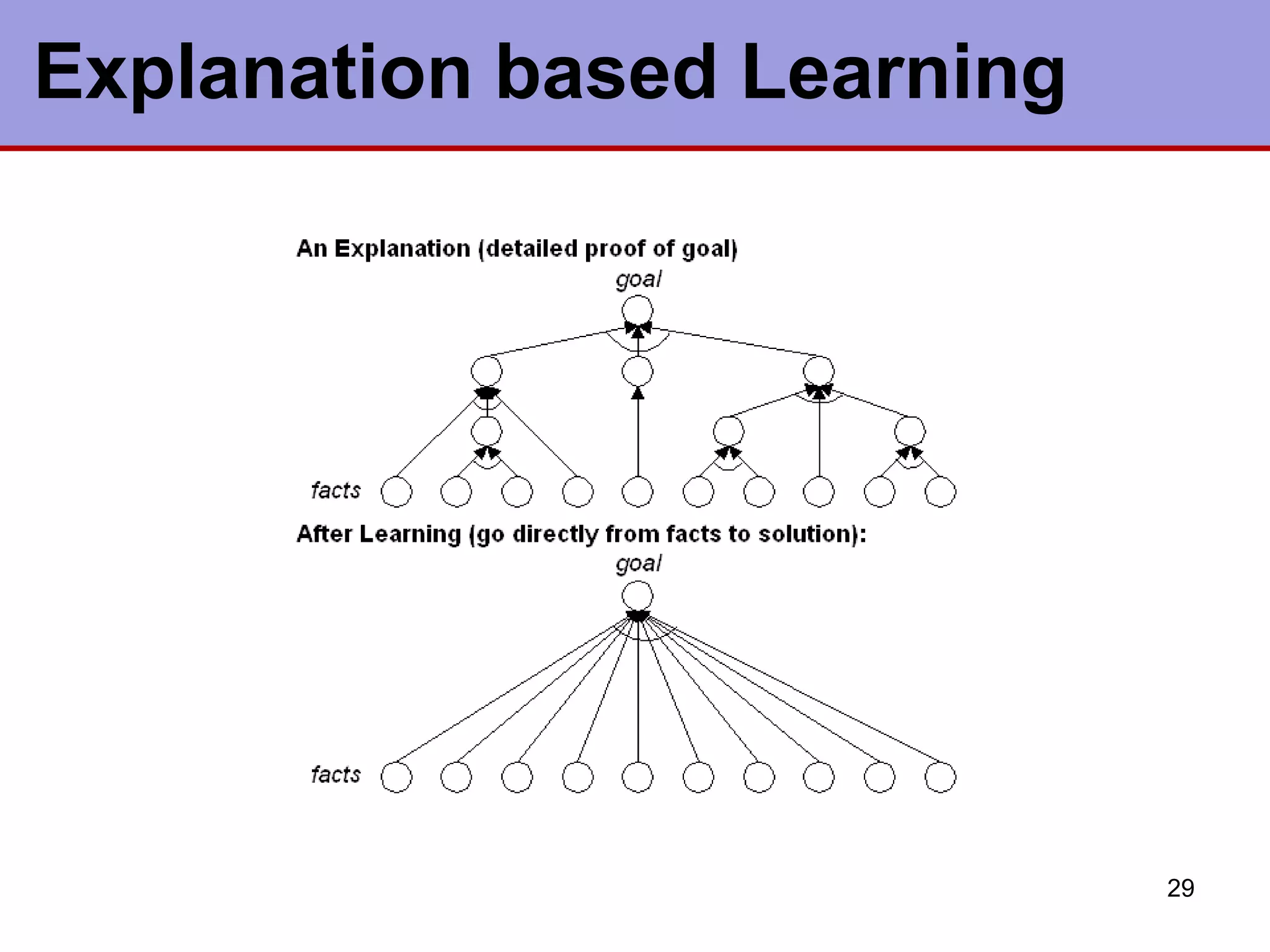

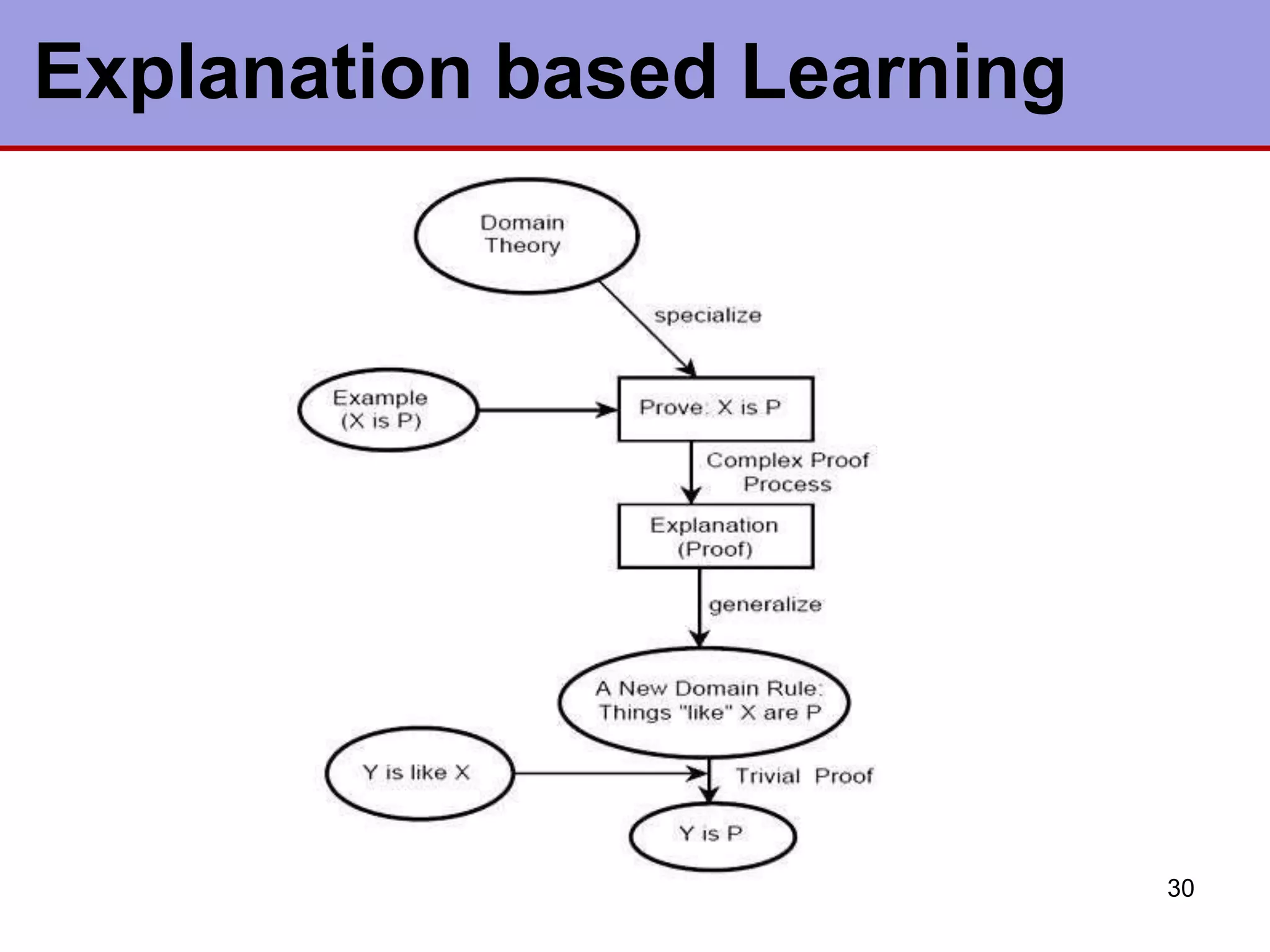

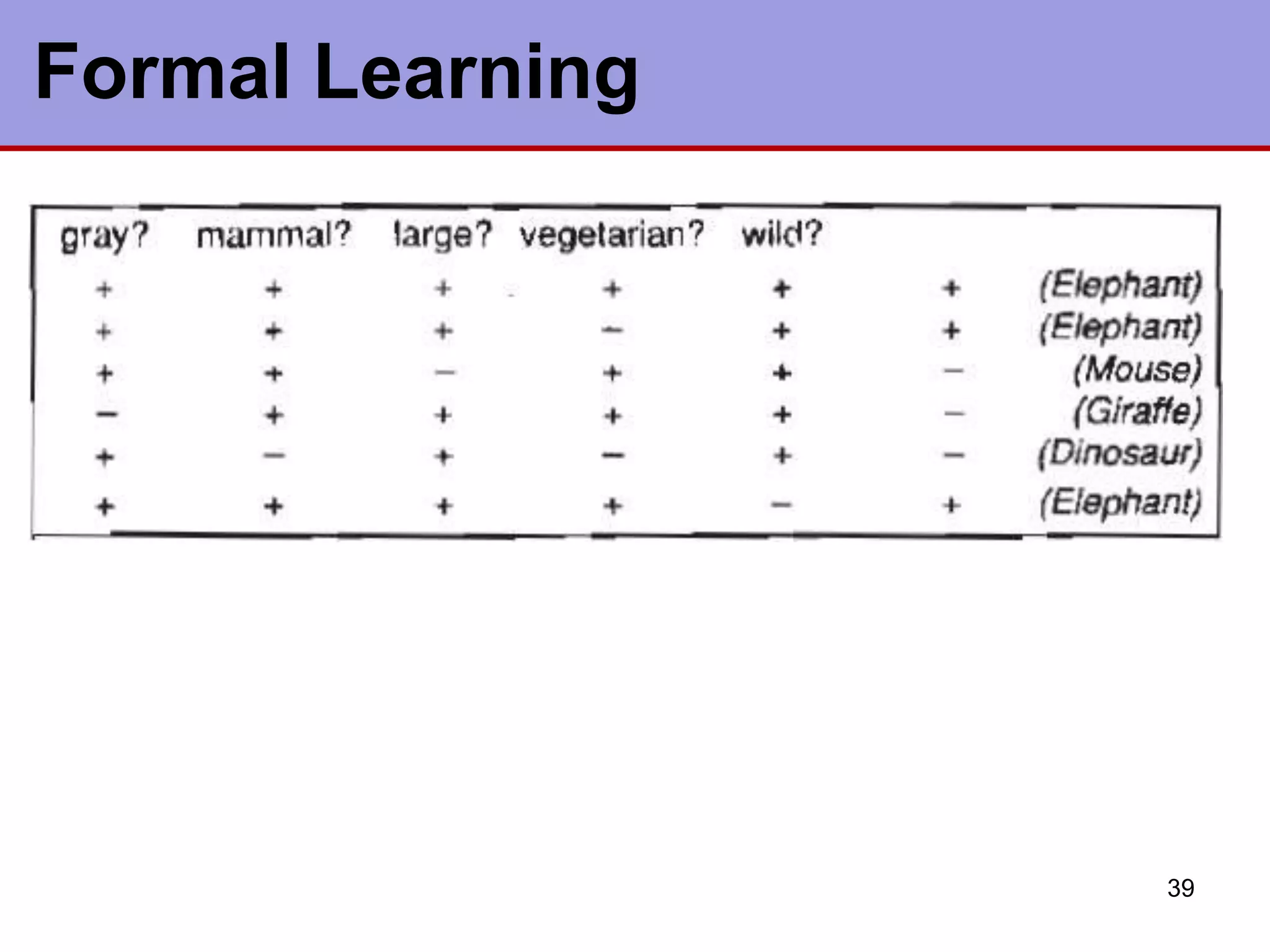

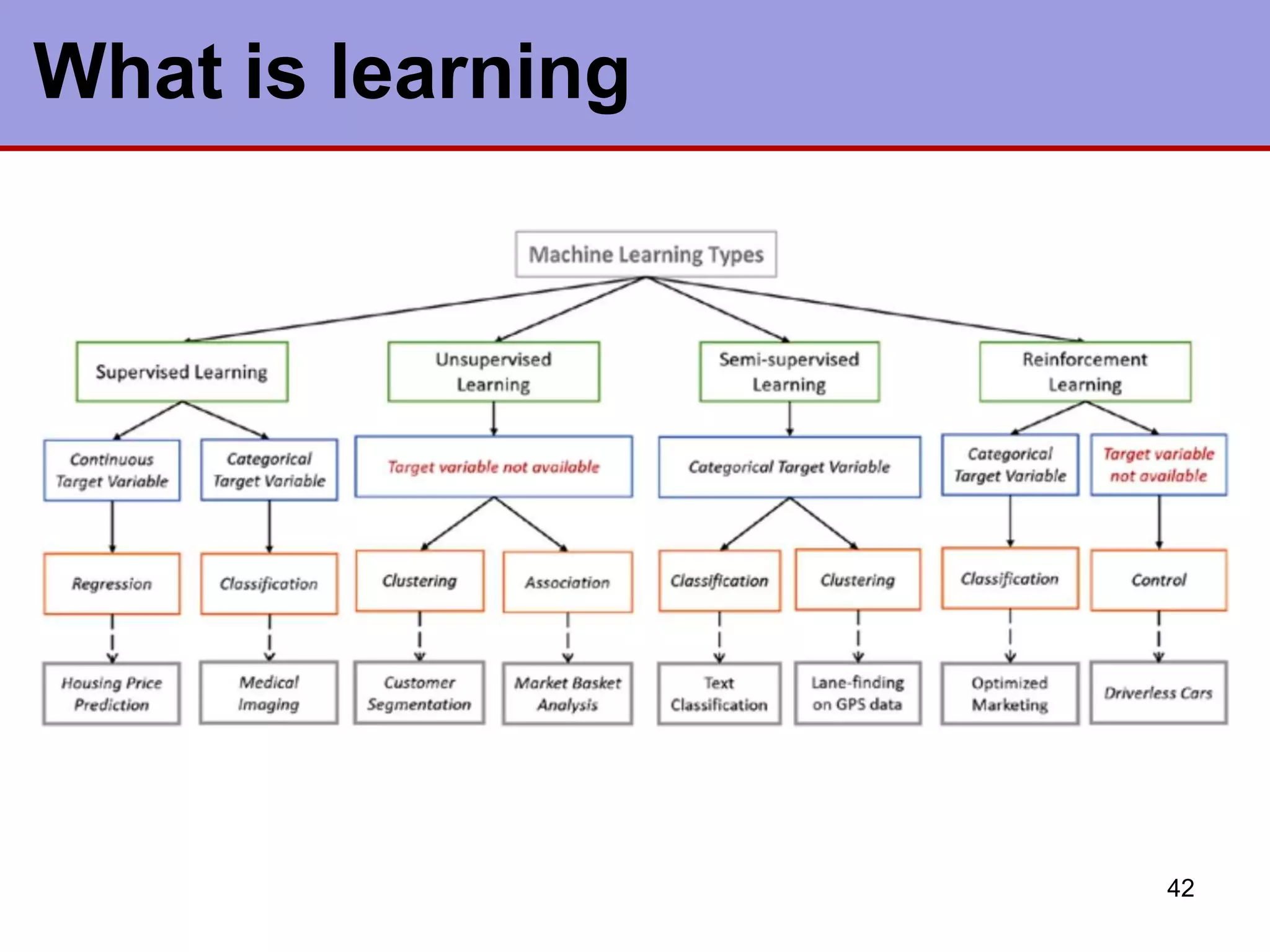

This document surveys the main types of machine learning. It begins by defining learning as changes that enable a system to perform tasks more efficiently over time by constructing or modifying representations of its experiences. It then covers rote learning, learning by taking advice, learning through problem solving (by parameter adjustment and by chunking), learning from examples (induction), explanation-based learning, learning by discovery, and learning by analogy. It also discusses formal learning theory, neural network learning, and genetic learning, and describes the key aspects of learning systems and the different learning paradigms.
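As a small illustration of the definition above, rote learning can be sketched as a system that stores the results of past computations so that repeated tasks become cheaper. The sketch below is a minimal one under stated assumptions: the names `RoteLearner` and `slow_square` are hypothetical and do not come from the document, and the "task" is a deliberately slow toy function.

```python
class RoteLearner:
    """Hypothetical rote learner: remembers answers it has already computed."""

    def __init__(self, solve):
        self.solve = solve   # the underlying (expensive) problem solver
        self.memory = {}     # stored experiences: problem -> answer
        self.calls = 0       # how many times the solver actually ran

    def __call__(self, problem):
        # Only fall back to the solver when the problem has not been seen before.
        if problem not in self.memory:
            self.calls += 1
            self.memory[problem] = self.solve(problem)
        return self.memory[problem]


def slow_square(n):
    """Toy stand-in for an expensive task: computes n * n by repeated addition."""
    return sum(n for _ in range(n))


learner = RoteLearner(slow_square)
first = learner(12)    # computed by the solver and stored
second = learner(12)   # answered from memory, solver not called again
```

After both calls, `learner.calls` is still 1: the second request is answered from the stored representation of the first, which is the sense in which the system performs the same task more efficiently over time.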