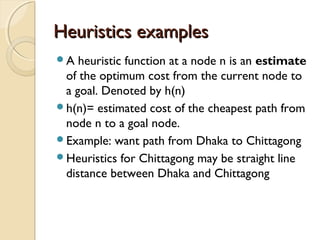

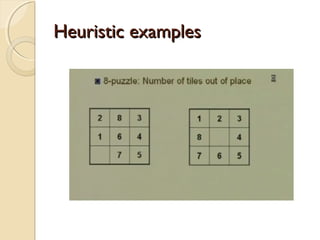

1) The document covers search algorithms: uninformed searches such as breadth-first search, and informed searches that use heuristics.
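As a reference point for the uninformed case, here is a minimal sketch of breadth-first search in Python. The graph `GRAPH` and the function name `breadth_first_search` are illustrative assumptions, not taken from the slides.

```python
from collections import deque


def breadth_first_search(graph, start, goal):
    """Return a path with the fewest edges from start to goal, or None."""
    frontier = deque([start])   # FIFO queue: shallowest nodes are expanded first
    parent = {start: None}      # doubles as the set of already-discovered nodes
    while frontier:
        node = frontier.popleft()
        if node == goal:
            # Walk the parent links back to the start to rebuild the path.
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        for neighbor in graph.get(node, []):
            if neighbor not in parent:
                parent[neighbor] = node
                frontier.append(neighbor)
    return None


# Hypothetical example graph (adjacency lists), used only for illustration.
GRAPH = {
    "A": ["B", "C"],
    "B": ["D"],
    "C": ["D", "E"],
    "D": ["F"],
    "E": ["F"],
    "F": [],
}

print(breadth_first_search(GRAPH, "A", "F"))
```

The search uses no information about where the goal lies; it simply expands nodes in order of depth, which is what distinguishes it from the heuristic searches.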

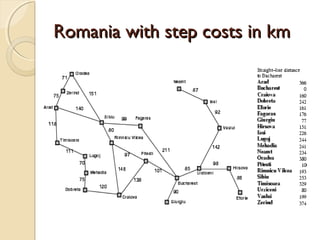

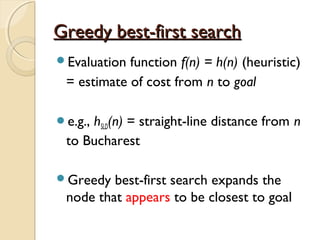

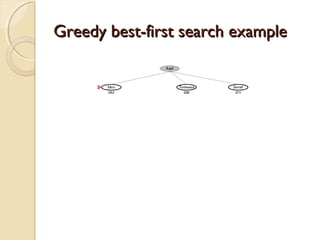

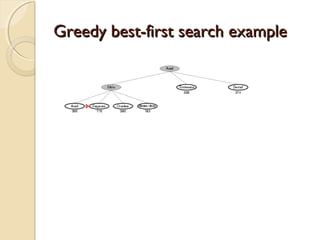

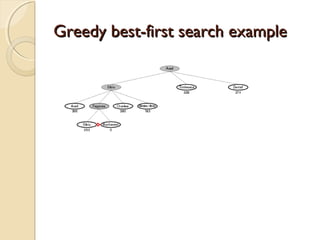

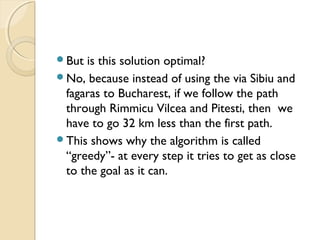

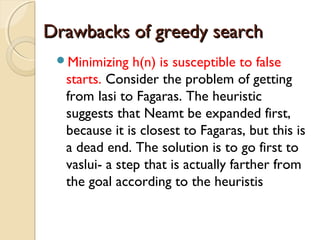

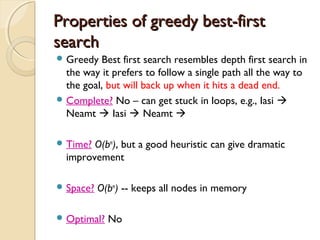

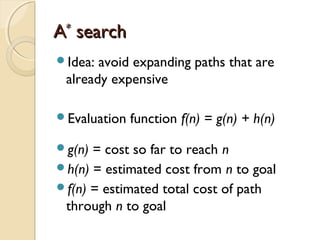

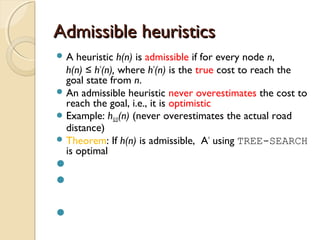

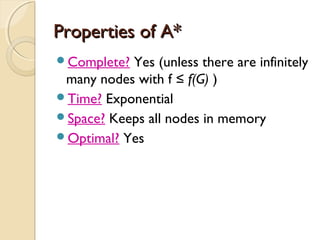

2) It describes greedy best-first search, which uses a heuristic function h(n) to expand the node that appears closest to the goal at each step, and A* search, which orders nodes by the sum of the path cost so far and the heuristic estimate, f(n) = g(n) + h(n).
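The difference between the two evaluation functions can be sketched with one routine that switches between f(n) = h(n) (greedy) and f(n) = g(n) + h(n) (A*). The graph, the heuristic table `H`, and the function name `best_first_search` below are hypothetical and chosen so that the two strategies disagree; the heuristic is assumed to be consistent, which is what makes the explored-set version of A* return an optimal path.

```python
import heapq


def best_first_search(graph, h, start, goal, use_path_cost):
    """Greedy best-first if use_path_cost is False (f = h), A* if True (f = g + h).

    Returns (path, cost), or (None, inf) when the goal is unreachable.
    """
    # Heap entries: (f, node, g, path from start to node)
    frontier = [(h[start], start, 0, [start])]
    explored = set()
    while frontier:
        _, node, g, path = heapq.heappop(frontier)
        if node == goal:
            return path, g
        if node in explored:
            continue
        explored.add(node)
        for neighbor, step_cost in graph[node]:
            if neighbor in explored:
                continue
            new_g = g + step_cost
            f = (new_g if use_path_cost else 0) + h[neighbor]
            heapq.heappush(frontier, (f, neighbor, new_g, path + [neighbor]))
    return None, float("inf")


# Hypothetical weighted graph: node -> list of (neighbor, step cost).
GRAPH = {
    "S": [("A", 1), ("B", 4)],
    "A": [("G", 10)],
    "B": [("G", 1)],
    "G": [],
}
# Hypothetical heuristic estimates of remaining cost to G (assumed consistent).
H = {"S": 1, "A": 0, "B": 1, "G": 0}

print(best_first_search(GRAPH, H, "S", "G", use_path_cost=False))
print(best_first_search(GRAPH, H, "S", "G", use_path_cost=True))
```

On this graph greedy search follows the lowest heuristic value through `A` and pays for the expensive final edge, while A* accounts for the cost already incurred and goes through `B`.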

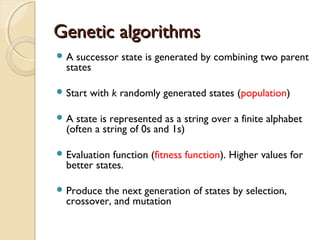

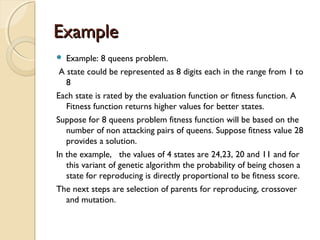

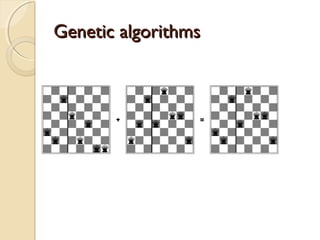

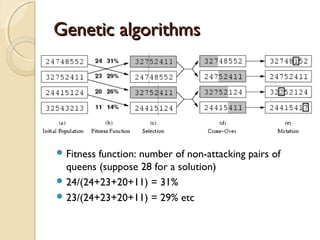

3) Genetic algorithms are introduced as a search technique that generates successors by combining two parent states through crossover and then applying mutation, rather than by expanding a single node.
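The crossover-and-mutation loop can be sketched as follows. This is a minimal illustration on a toy problem (maximize the number of 1 bits in a bit string); the fitness function, the parameters, the fitness-proportional parent selection, and the choice to carry the best individual forward unchanged are all assumptions made for the example, not details from the slides.

```python
import random


def fitness(state):
    """Toy objective: the number of 1 bits in the state."""
    return sum(state)


def crossover(a, b, rng):
    """Single-point crossover: prefix from parent a, suffix from parent b."""
    point = rng.randint(1, len(a) - 1)
    return a[:point] + b[point:]


def mutate(state, rng, rate=0.05):
    """Flip each bit independently with probability `rate`."""
    return [1 - bit if rng.random() < rate else bit for bit in state]


def genetic_search(length=20, pop_size=30, generations=60, seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        # Keep the current best state unchanged so fitness never decreases.
        children = [max(population, key=fitness)]
        # Fitter states are more likely to be chosen as parents.
        weights = [fitness(s) + 1 for s in population]
        while len(children) < pop_size:
            p1, p2 = rng.choices(population, weights=weights, k=2)
            children.append(mutate(crossover(p1, p2, rng), rng))
        population = children
    return max(population, key=fitness)


best = genetic_search()
print(best, fitness(best))
```

Each successor here comes from two parents, in contrast to the tree and graph searches, where a successor is produced from one node.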