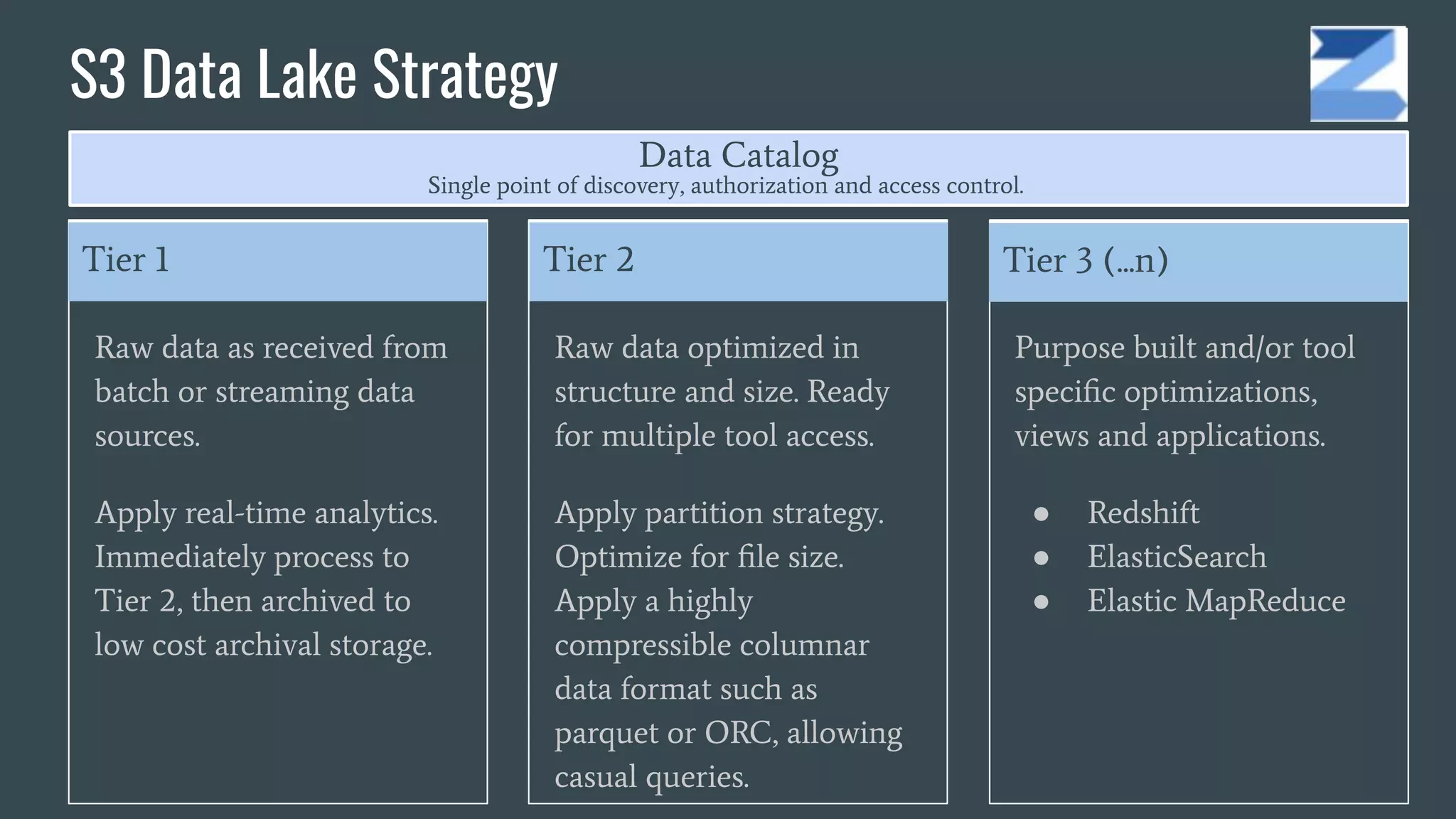

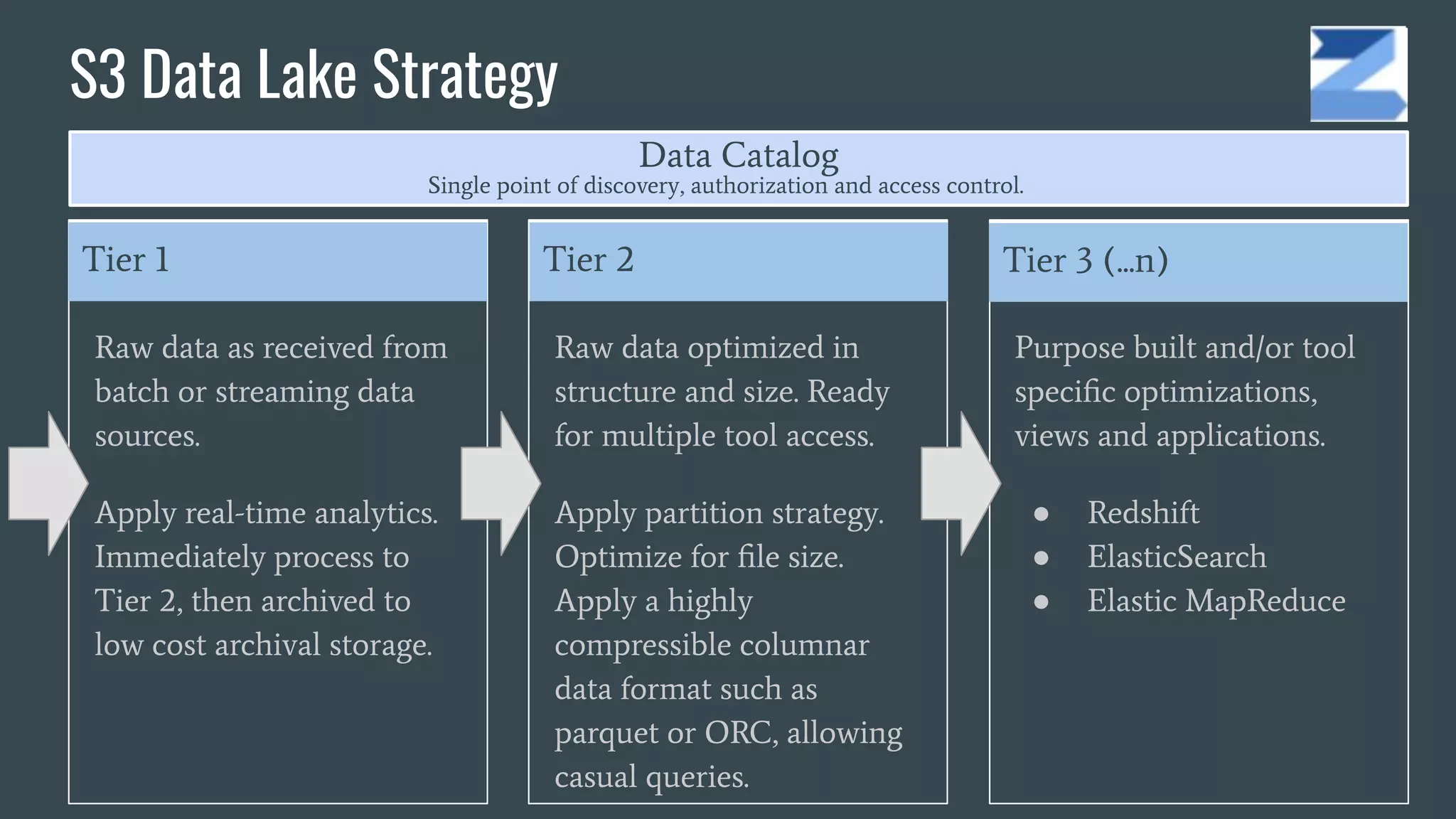

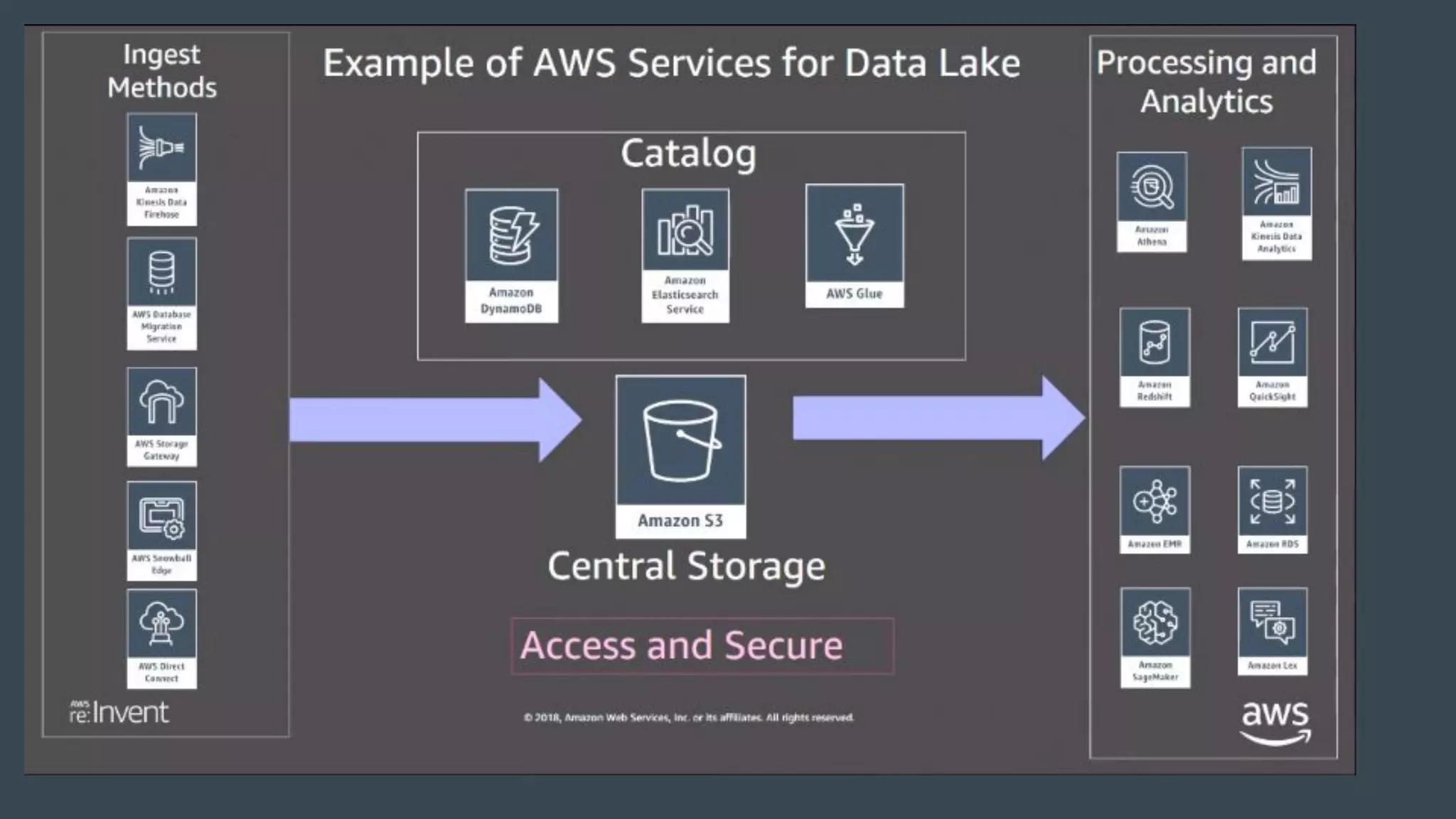

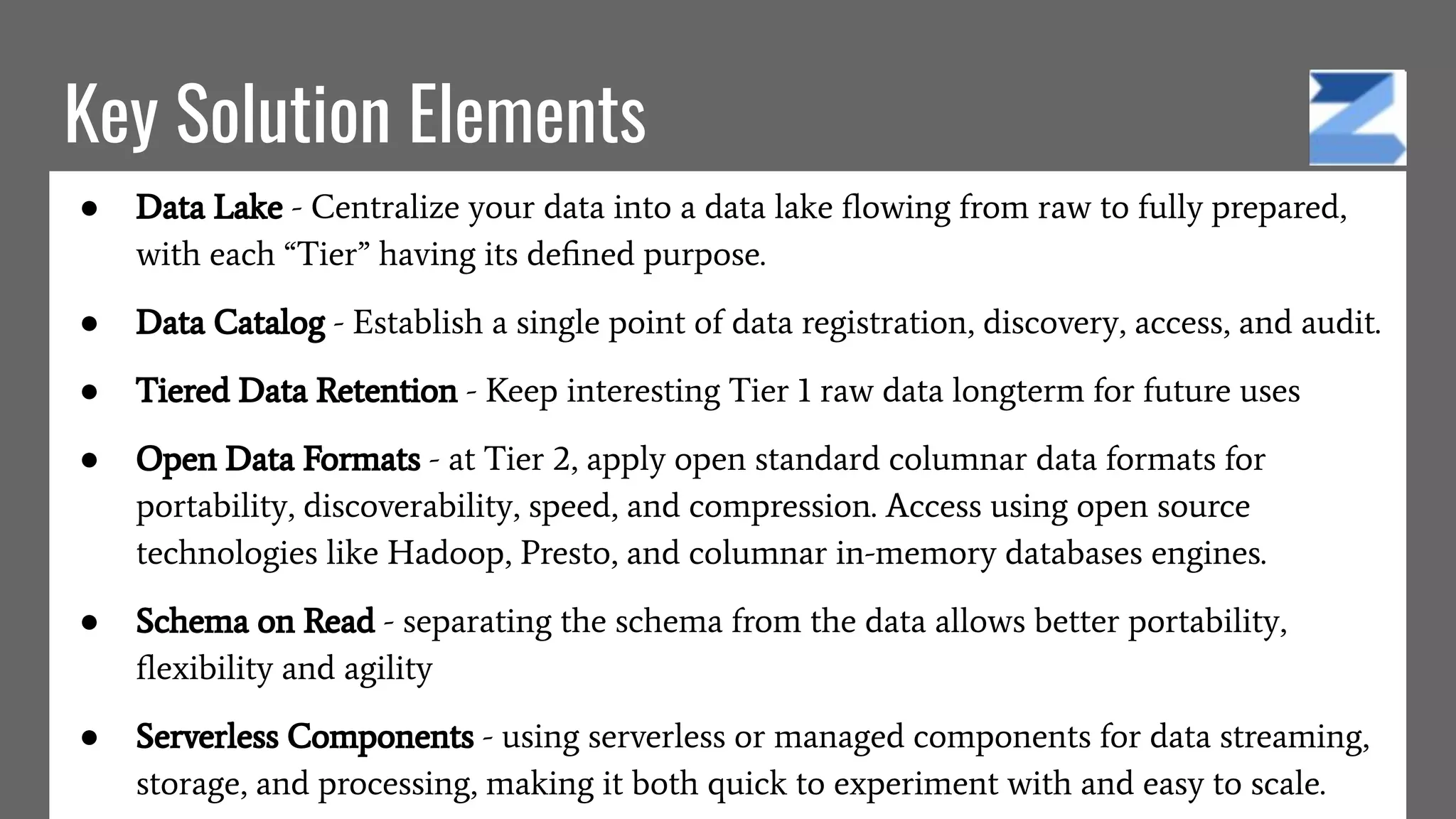

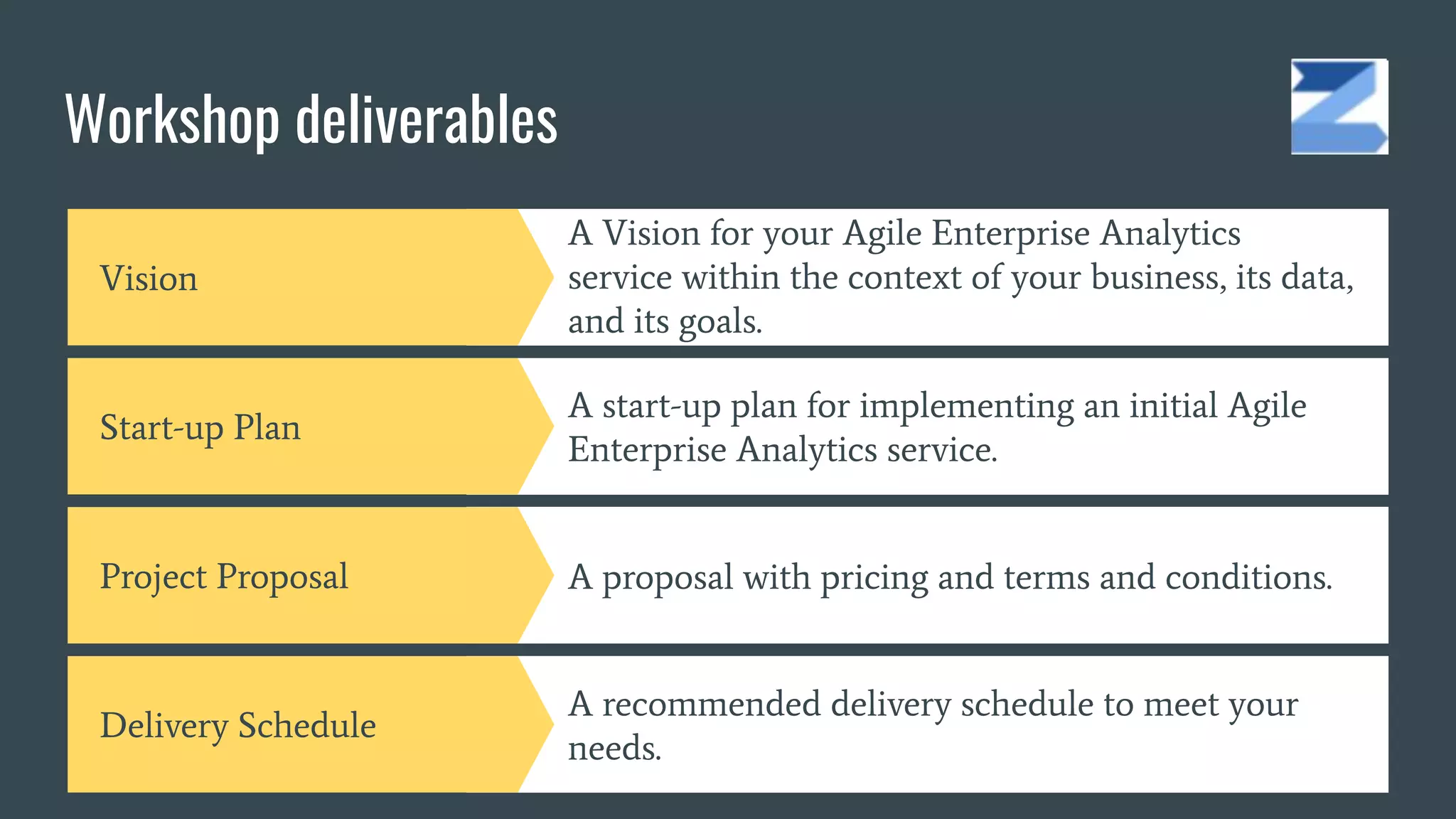

The document discusses the challenges and opportunities of managing enterprise data with agile analytics on AWS. It emphasizes the importance of establishing data lakes to support effective data governance, access, and analytics, while making use of open data formats and tools. It also provides a strategic framework for building a data-driven organization that supports evidence-based decision-making and encourages the use of machine learning and AI.