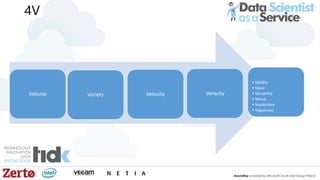

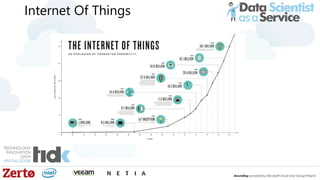

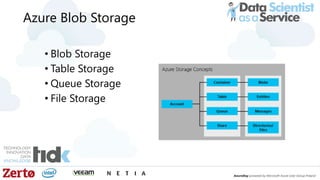

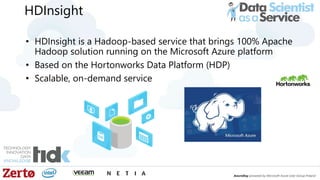

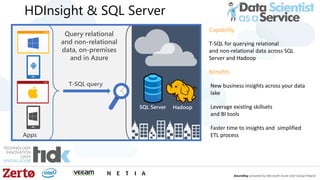

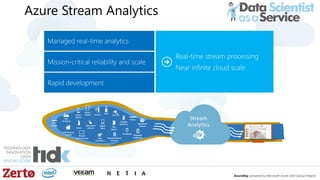

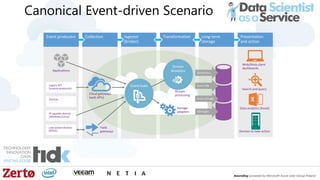

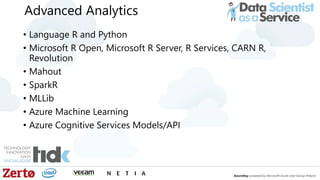

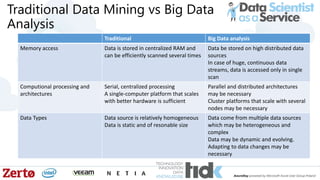

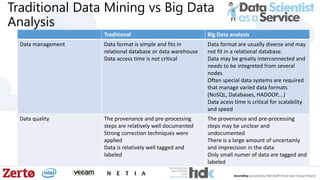

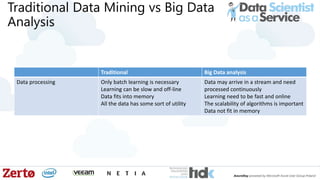

The document is a presentation by Łukasz Grala, a senior architect specializing in big data analytics, on the rapid growth of big data and the challenges that growth brings. It outlines technological advancements, including Azure services for data storage, processing, and machine learning, and traces the evolution of traditional analytics methods. The speaker emphasizes the need for efficient data management and analytics as modern technologies generate ever larger volumes of data.