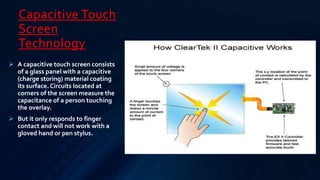

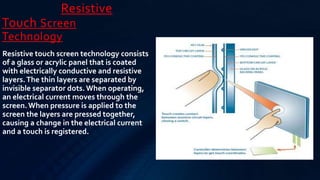

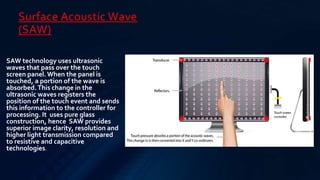

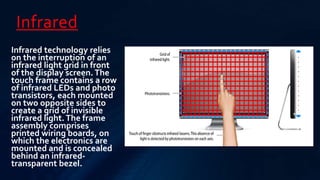

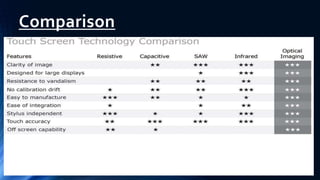

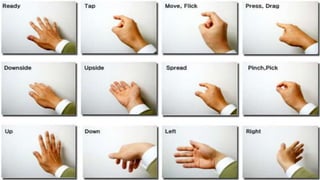

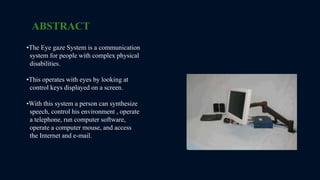

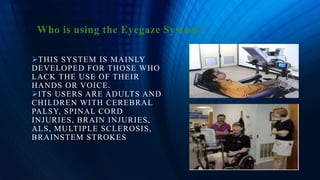

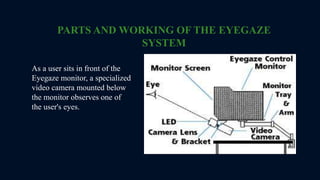

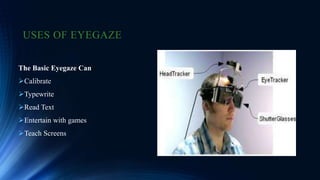

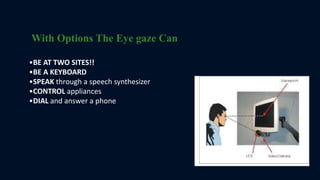

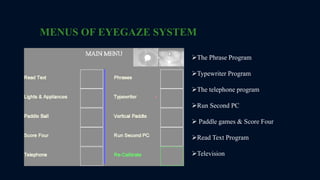

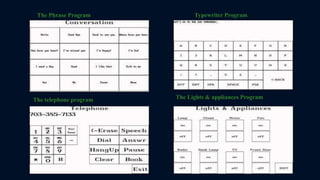

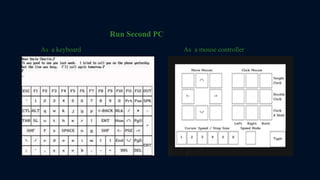

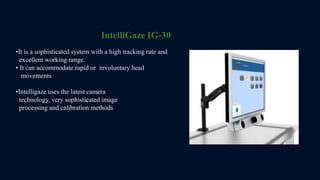

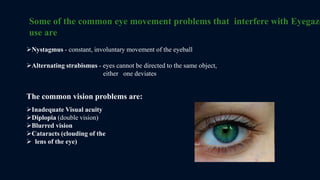

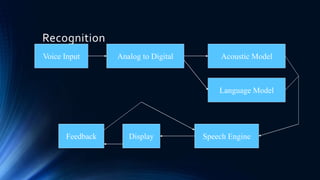

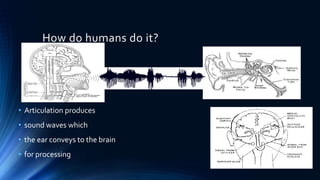

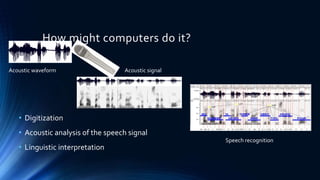

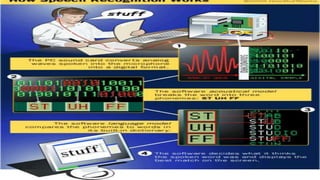

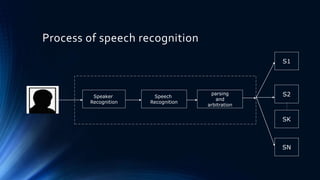

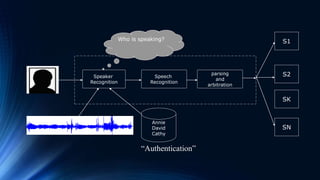

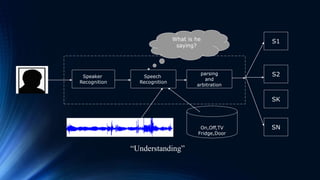

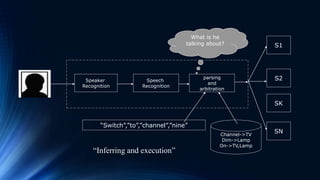

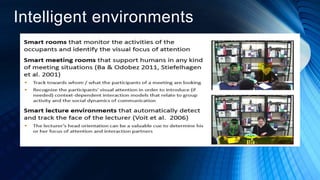

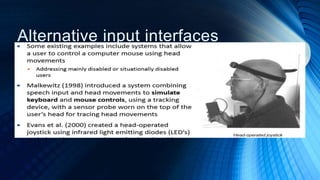

The document discusses several advanced interaction techniques: touch, gestures, eye tracking, head and pose tracking, speech, and brain-body interfaces. It describes multitouch technologies, including capacitive, resistive, surface acoustic wave, infrared, and optical touch screens. It also covers gesture recognition, eye-gaze systems, and speech recognition. For each of these human-computer interaction techniques, it outlines the components, working principles, applications, and future scope.