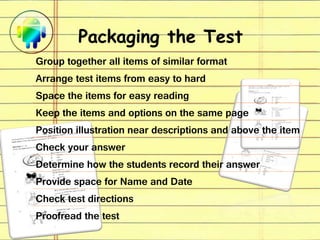

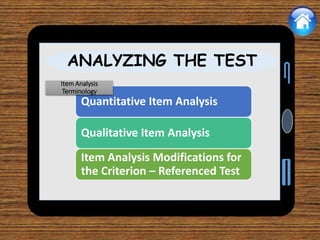

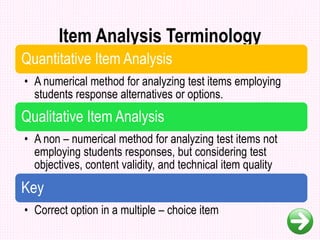

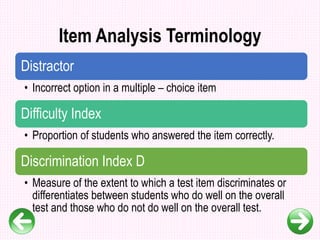

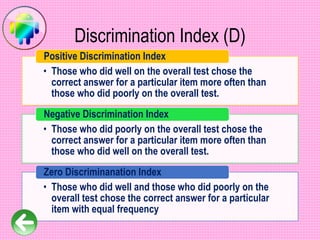

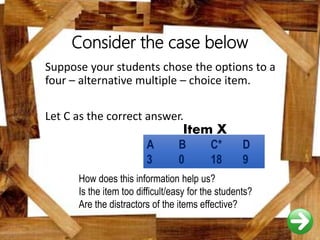

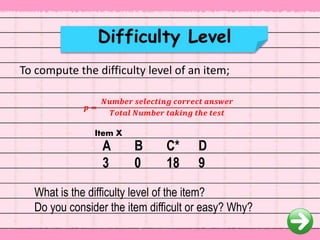

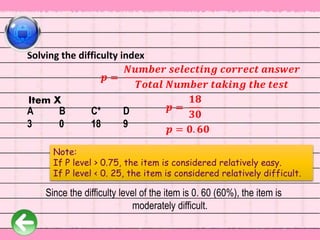

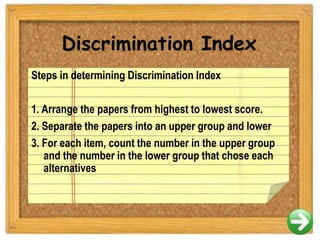

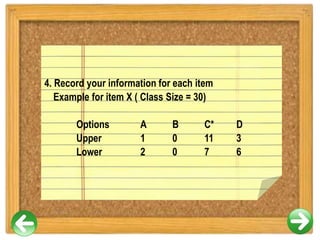

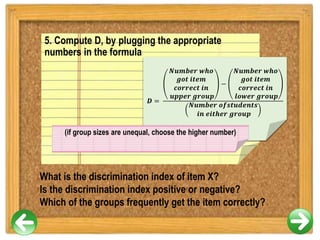

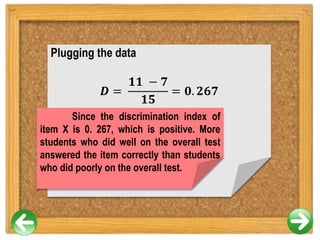

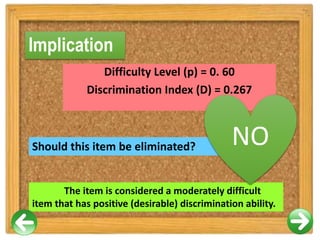

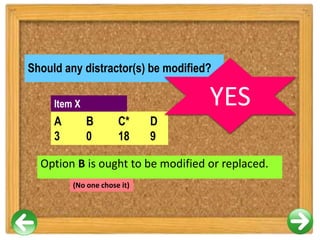

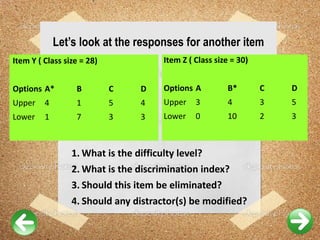

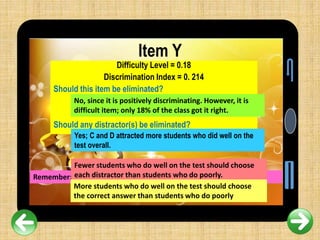

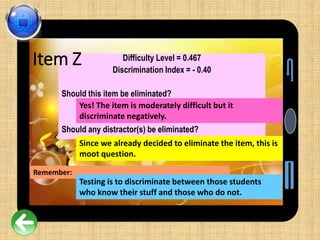

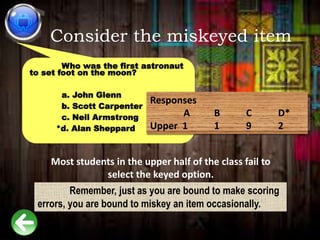

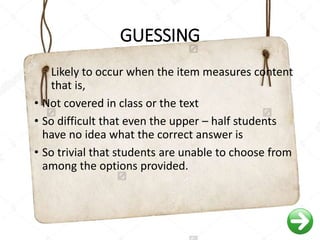

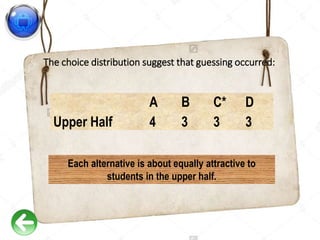

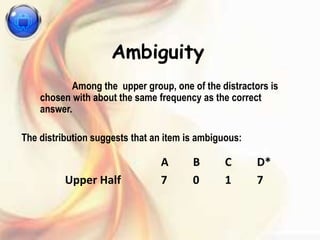

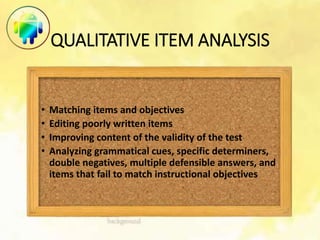

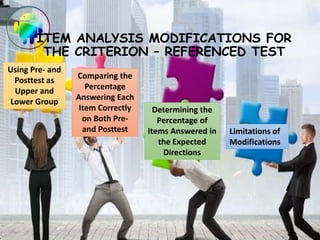

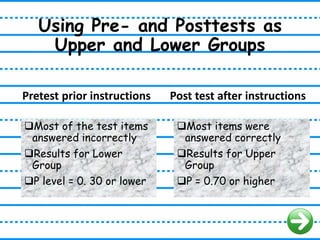

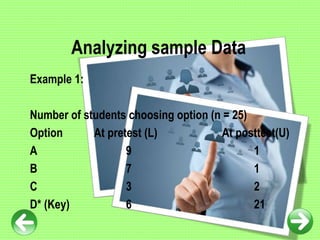

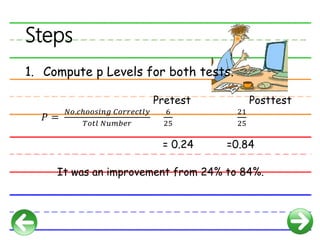

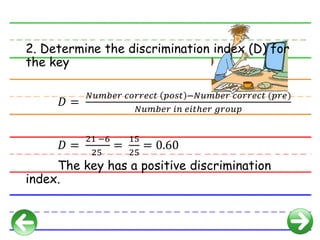

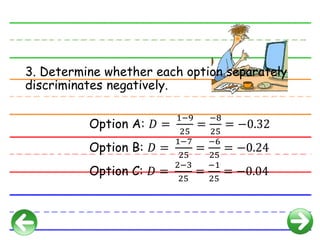

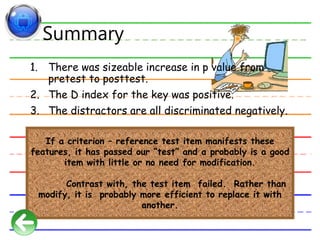

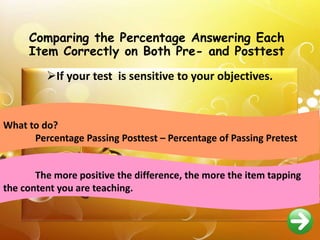

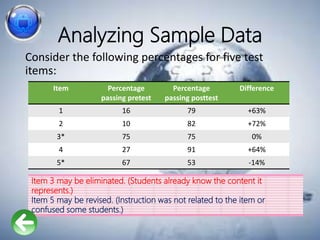

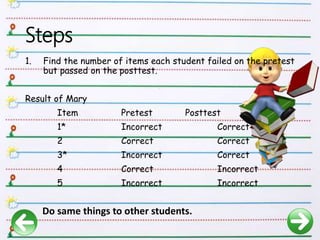

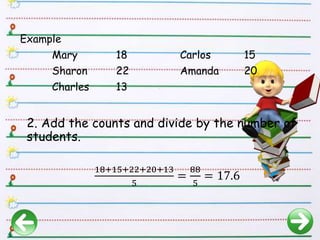

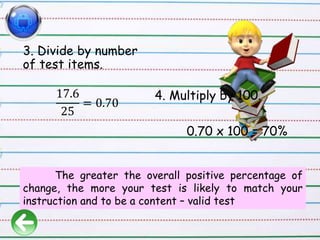

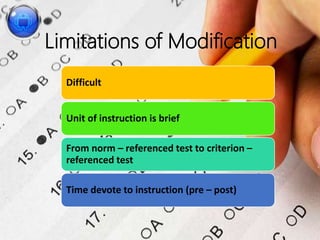

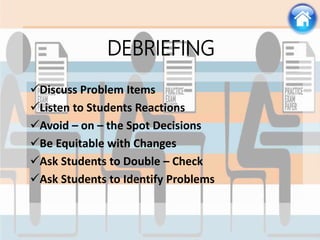

The document provides guidance on test development and administration: assembling the test, administering it, scoring it, and analyzing the results both quantitatively and qualitatively. Quantitative analysis evaluates each item by its difficulty level (the proportion of examinees who answer it correctly) and its discrimination index (how much better high-scoring examinees do on the item than low-scoring examinees). Qualitative analysis examines how well each item matches its objective and how sound it is technically. The document also describes modifications for criterion-referenced tests, such as treating post-test (after instruction) results as the upper group and pre-test results as the lower group when computing discrimination. Overall, the guidance aims to help test developers avoid common pitfalls and improve their tests and assessments.
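The item statistics summarized above can be sketched in a few lines of Python. This is a minimal illustration, not the document's own procedure. It assumes dichotomously scored items (1 = correct, 0 = incorrect), one row of item scores per examinee, and the common convention of taking the top and bottom 27% of examinees by total score as the upper and lower groups. The 27% cut and all function names are assumptions for illustration. The discrimination index is computed as D = p_upper - p_lower, which ranges from -1 to 1. For a criterion-referenced test, the same difference is taken between post-test and pre-test difficulty.

```python
from typing import Sequence


def item_difficulty(responses: Sequence[int]) -> float:
    """Difficulty level p: proportion of examinees answering the item correctly.

    Assumes each response is scored 1 (correct) or 0 (incorrect).
    """
    return sum(responses) / len(responses)


def discrimination_index(
    scores: Sequence[Sequence[int]], item: int, fraction: float = 0.27
) -> float:
    """D = p_upper - p_lower for one item (hypothetical helper).

    `scores` holds one row of 0/1 item scores per examinee. Examinees are
    ranked by total score, and the top and bottom `fraction` of them form the
    upper and lower groups (27% is an assumed convention, not from the source).
    """
    k = max(1, round(len(scores) * fraction))  # size of each comparison group
    ranked = sorted(scores, key=sum, reverse=True)  # highest total score first
    upper = [row[item] for row in ranked[:k]]
    lower = [row[item] for row in ranked[-k:]]
    return item_difficulty(upper) - item_difficulty(lower)


def pre_post_discrimination(pre: Sequence[int], post: Sequence[int]) -> float:
    """Criterion-referenced variant: post-test results act as the upper group
    and pre-test results as the lower group (hypothetical helper)."""
    return item_difficulty(post) - item_difficulty(pre)
```

An item with a positive D is answered correctly more often by stronger examinees (or, in the pre/post variant, more often after instruction than before). A D near zero or below zero flags the item for the qualitative review described above.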