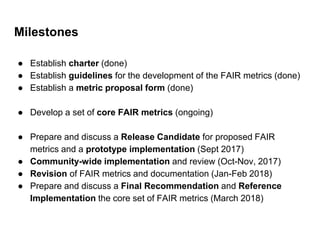

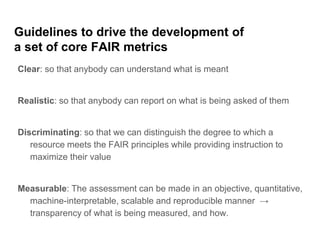

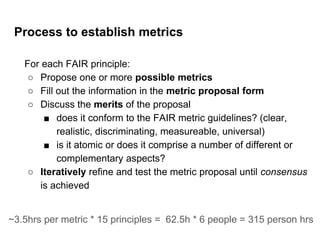

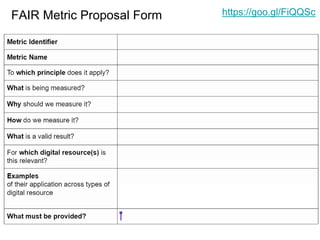

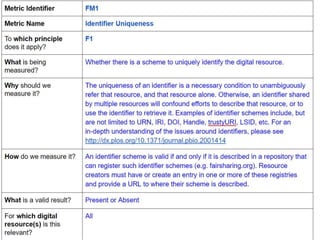

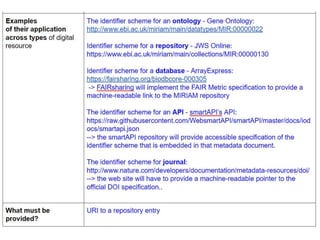

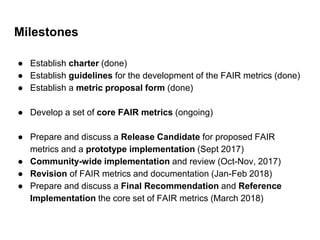

The document outlines a framework for developing core metrics that assess compliance with the FAIR (Findable, Accessible, Interoperable, Reusable) principles. It establishes a working group to develop and refine the metrics iteratively, under the guideline that each metric be clear, realistic, discriminating, and measurable. Milestones include an initial set of metrics by September 2017, a round of community feedback, and a final recommendation in March 2018. The goal is for the metrics to support automatic evaluation of the FAIRness of digital resources.