The document describes the Apriori algorithm for mining association rules from transactional data. The Apriori algorithm has two main steps: (1) it finds all frequent itemsets that occur above a minimum support threshold by iteratively joining candidate itemsets and pruning infrequent subsets; (2) it generates association rules from the frequent itemsets by considering all subsets of each frequent itemset and calculating the confidence of predicted items. The algorithm uses the property that any subset of a frequent itemset must also be frequent to efficiently find all frequent itemsets in multiple passes over the transaction data.

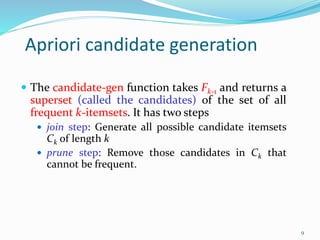

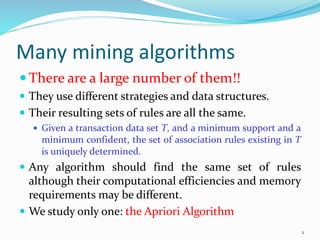

![The Apriori algorithm

The best known algorithm

Two steps:

Find all itemsets that have minimum support (frequent

itemsets, also called large itemsets).

Use frequent itemsets to generate rules.

E.g., a frequent itemset

{Chicken, Clothes, Milk} [sup = 3/7]

and one rule from the frequent itemset

Clothes Milk, Chicken [sup = 3/7, conf = 3/3]

3](https://image.slidesharecdn.com/7algorithm-190206054701/85/7-algorithm-3-320.jpg)

![Details: ordering of items

The items in I are sorted in lexicographic order (which

is a total order).

The order is used throughout the algorithm in each

itemset.

{w[1], w[2], …, w[k]} represents a k-itemset w

consisting of items w[1], w[2], …, w[k], where w[1] <

w[2] < … < w[k] according to the total order.

7](https://image.slidesharecdn.com/7algorithm-190206054701/85/7-algorithm-7-320.jpg)