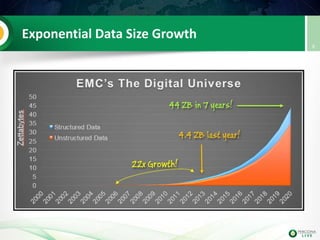

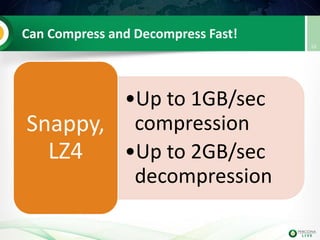

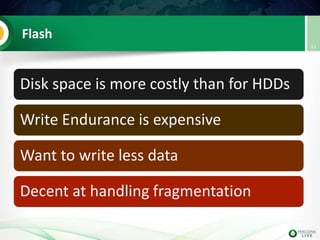

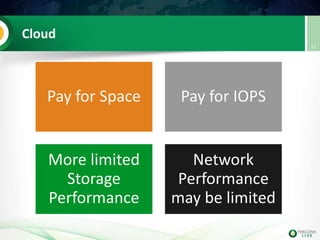

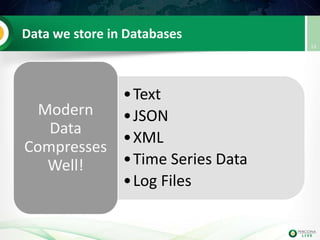

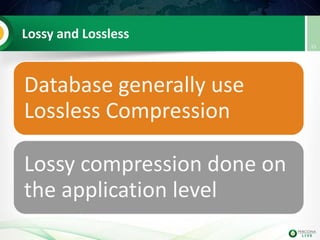

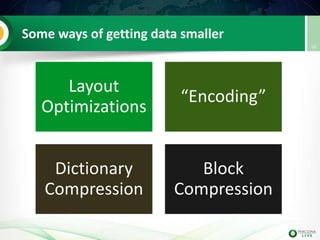

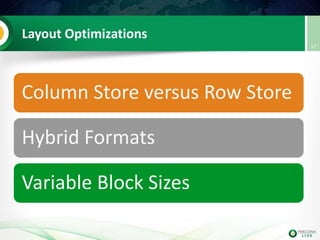

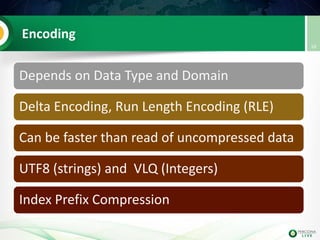

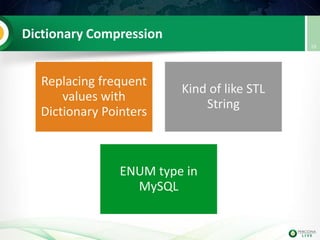

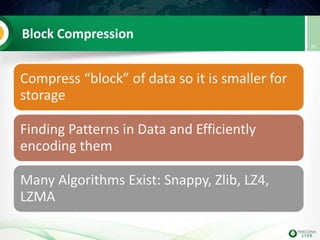

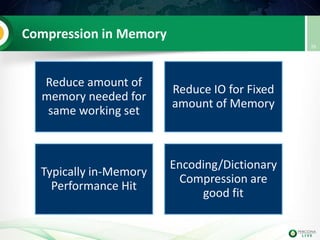

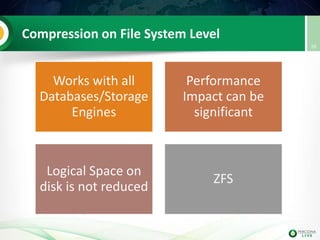

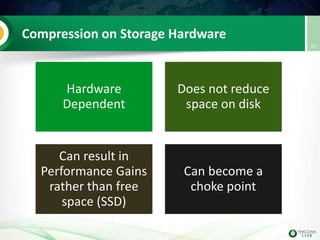

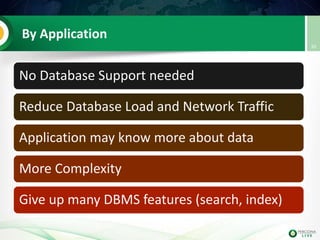

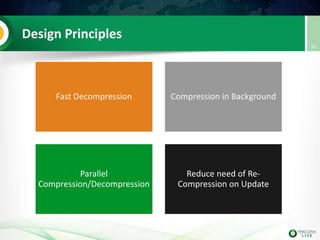

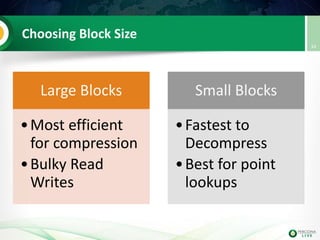

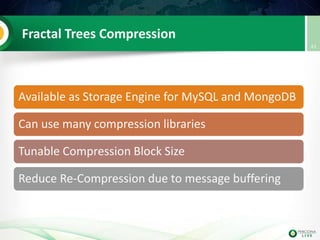

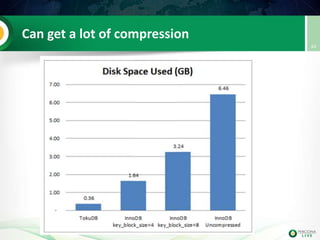

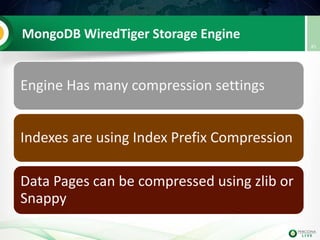

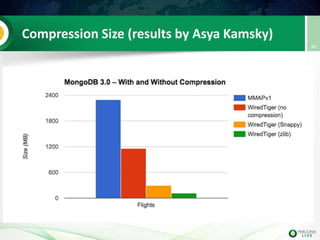

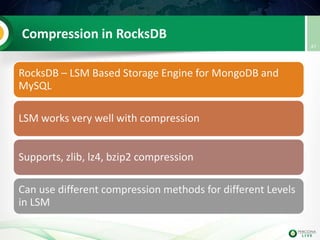

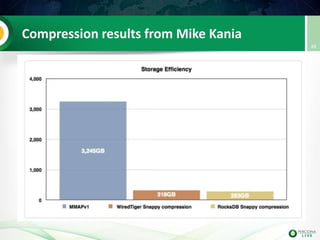

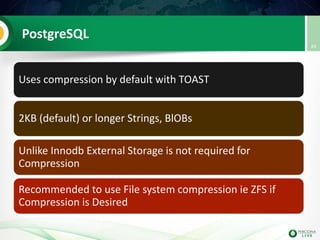

The document discusses data compression techniques in open-source databases and emphasizes their importance in managing the exponential growth of data. It analyzes a range of compression methods and algorithms, covering both lossless and lossy approaches, along with practical implementations in MySQL, MongoDB, and PostgreSQL. It concludes that compression is crucial for improving storage efficiency and performance in modern databases.
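
To make the lossless case concrete, here is a minimal sketch using Python's standard `zlib` module. It is not taken from the slides and does not reproduce how MySQL, MongoDB, or PostgreSQL compress data internally; the sample rows are hypothetical and only illustrate that repetitive, table-like data shrinks substantially and is restored byte for byte.

```python
import zlib

# Hypothetical sample data: repetitive, row-like records such as a table page might hold.
rows = b"".join(b"user_%04d,active,2024-01-01\n" % i for i in range(1000))

# Lossless compression: the original bytes must be fully recoverable.
compressed = zlib.compress(rows, level=6)
restored = zlib.decompress(compressed)

ratio = len(rows) / len(compressed)
print(f"original: {len(rows)} bytes")
print(f"compressed: {len(compressed)} bytes")
print(f"ratio: {ratio:.1f}x")
print("round trip intact:", restored == rows)
```

A lossy scheme, by contrast, would give up the exact round trip in exchange for a smaller result, which is why the lossless variety is the usual fit for transactional records.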