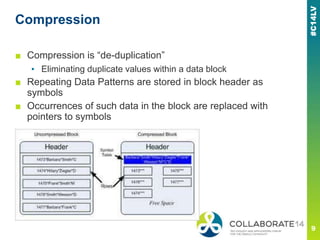

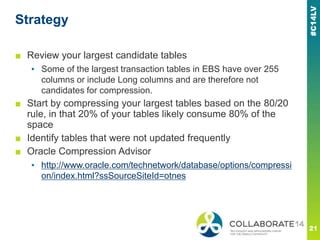

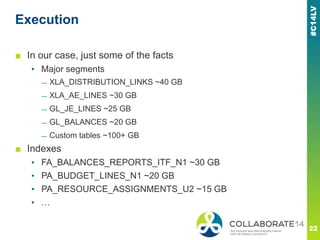

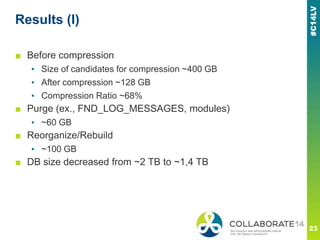

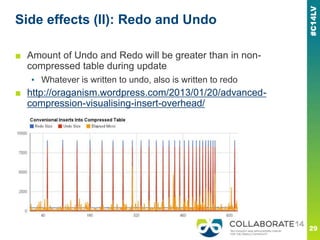

This presentation describes how to reduce the storage footprint of an Oracle E-Business Suite database with Oracle Advanced Compression. After introductions and an agenda, it covers three topics: compression in Oracle, implementing Advanced Compression with E-Business Suite, and recommendations and results. The implementation section walks through identifying tables to compress, preparing the environment by applying patches, rolling compression out in phases, and benchmarking performance. The presentation notes that compression can reduce storage requirements but may increase CPU usage and change some SQL execution plans, so it calls for careful testing and ongoing monitoring of the performance impact.
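As a rough illustration of the "identify tables, then implement in phases" idea, the sketch below ranks candidate tables by size and estimates the space each might free. It is not the presentation's method: the `TableStats` type, the `min_size_gb` cutoff, the table names, and the compression ratio are all hypothetical placeholders, and a real ratio would have to be measured on the actual data.

```python
from dataclasses import dataclass


@dataclass
class TableStats:
    name: str        # table name (placeholder values below, not real EBS tables)
    size_gb: float   # current segment size in GB


def rank_candidates(tables, min_size_gb=1.0):
    """Return tables at least min_size_gb in size, largest first.

    The size cutoff is an assumed heuristic for illustration only.
    """
    eligible = [t for t in tables if t.size_gb >= min_size_gb]
    return sorted(eligible, key=lambda t: t.size_gb, reverse=True)


def estimated_saving_gb(table, assumed_ratio):
    """Estimate GB freed if the table compresses by assumed_ratio (e.g. 2.0 = 2:1)."""
    if assumed_ratio <= 1.0:
        return 0.0
    return table.size_gb * (1.0 - 1.0 / assumed_ratio)


if __name__ == "__main__":
    # Hypothetical inventory; sizes are made up for the example.
    inventory = [
        TableStats("TABLE_A", 120.0),
        TableStats("TABLE_B", 0.5),
        TableStats("TABLE_C", 40.0),
    ]
    for t in rank_candidates(inventory):
        saving = estimated_saving_gb(t, assumed_ratio=2.0)
        print(f"{t.name}: {t.size_gb:.1f} GB, est. saving {saving:.1f} GB")
```

Working through the ranked list from the top in small batches keeps each phase easy to benchmark, so any CPU or plan regression can be traced to the tables compressed in that phase.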