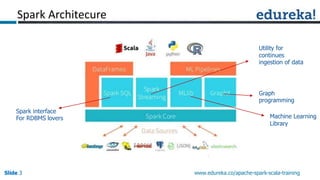

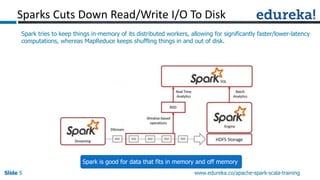

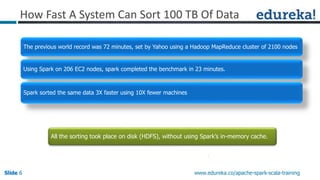

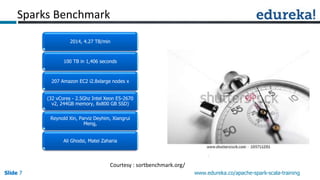

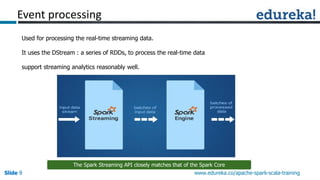

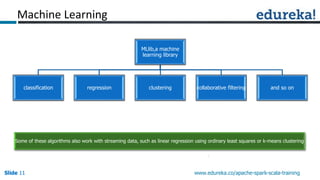

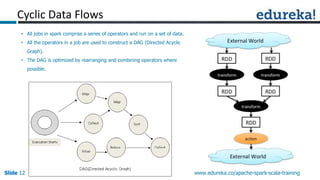

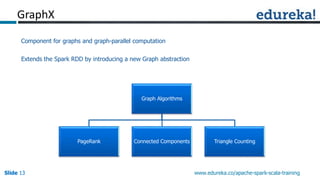

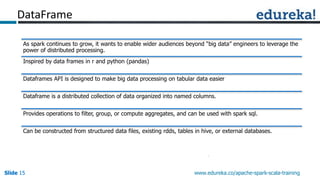

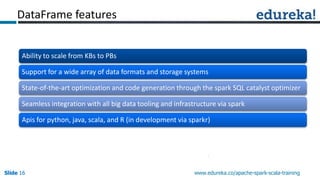

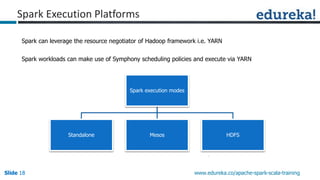

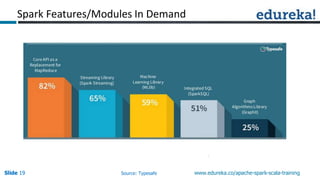

Spark can process data faster than Hadoop MapReduce by keeping data in memory as much as possible, which avoids disk I/O between processing steps. It supports stream processing, machine learning, graph processing, and SQL queries on structured data through its DataFrame API. Spark also integrates with Hadoop: it can run on YARN and read data from HDFS. The slides cover low-latency processing, streaming, machine learning, graph processing, DataFrames, and Hadoop integration.
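The in-memory idea can be illustrated with a small toy sketch in plain Python. It does not use Spark or Hadoop, and every name in it (`read_numbers`, `iterate_from_disk`, `iterate_in_memory`, `demo`) is hypothetical. It only counts how often a multi-pass job goes back to disk when it re-reads its input on every pass, compared with reading once and reusing the cached data.

```python
import os
import tempfile


def write_sample(path, n):
    """Write the integers 0..n-1, one per line, to a text file."""
    with open(path, "w") as f:
        for i in range(n):
            f.write(f"{i}\n")


def read_numbers(path, counter):
    """Read the file from disk and record that a disk read happened."""
    counter["disk_reads"] += 1
    with open(path) as f:
        return [int(line) for line in f]


def iterate_from_disk(path, passes, counter):
    """Disk-bound style (assumption: each pass reloads its input)."""
    totals = []
    for p in range(passes):
        data = read_numbers(path, counter)
        totals.append(sum(x * (p + 1) for x in data))
    return totals


def iterate_in_memory(path, passes, counter):
    """In-memory style: read once, keep the data cached, reuse it."""
    cached = read_numbers(path, counter)
    return [sum(x * (p + 1) for x in cached) for p in range(passes)]


def demo(n=1000, passes=5):
    """Run both styles on the same file and return results and read counts."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "numbers.txt")
        write_sample(path, n)
        disk_counter = {"disk_reads": 0}
        mem_counter = {"disk_reads": 0}
        disk_totals = iterate_from_disk(path, passes, disk_counter)
        mem_totals = iterate_in_memory(path, passes, mem_counter)
    return (disk_totals, mem_totals,
            disk_counter["disk_reads"], mem_counter["disk_reads"])


if __name__ == "__main__":
    disk_totals, mem_totals, disk_reads, mem_reads = demo()
    print("same results:", disk_totals == mem_totals)
    print("disk reads (reload each pass):", disk_reads)
    print("disk reads (cached in memory):", mem_reads)
```

Both styles compute the same totals; the only difference is the number of trips to disk, which grows with the number of passes in the first style and stays at one in the second. Iterative workloads such as machine learning algorithms make many passes over the same data, which is where caching tends to matter most.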